Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

Llama-3.1-8B-bonito-v1 - GGUF
- Model creator: https://huggingface.co/BatsResearch/
- Original model: https://huggingface.co/BatsResearch/Llama-3.1-8B-bonito-v1/

| Name | Quant method | Size |
| ---- | ---- | ---- |
| [Llama-3.1-8B-bonito-v1.Q2_K.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q2_K.gguf) | Q2_K | 2.96GB |
| [Llama-3.1-8B-bonito-v1.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.IQ3_XS.gguf) | IQ3_XS | 3.28GB |
| [Llama-3.1-8B-bonito-v1.IQ3_S.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.IQ3_S.gguf) | IQ3_S | 3.43GB |
| [Llama-3.1-8B-bonito-v1.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q3_K_S.gguf) | Q3_K_S | 3.41GB |
| [Llama-3.1-8B-bonito-v1.IQ3_M.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.IQ3_M.gguf) | IQ3_M | 3.52GB |
| [Llama-3.1-8B-bonito-v1.Q3_K.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q3_K.gguf) | Q3_K | 3.74GB |
| [Llama-3.1-8B-bonito-v1.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q3_K_M.gguf) | Q3_K_M | 3.74GB |
| [Llama-3.1-8B-bonito-v1.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q3_K_L.gguf) | Q3_K_L | 4.03GB |
| [Llama-3.1-8B-bonito-v1.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.IQ4_XS.gguf) | IQ4_XS | 4.18GB |
| [Llama-3.1-8B-bonito-v1.Q4_0.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q4_0.gguf) | Q4_0 | 4.34GB |
| [Llama-3.1-8B-bonito-v1.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.IQ4_NL.gguf) | IQ4_NL | 4.38GB |
| [Llama-3.1-8B-bonito-v1.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q4_K_S.gguf) | Q4_K_S | 4.37GB |
| [Llama-3.1-8B-bonito-v1.Q4_K.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q4_K.gguf) | Q4_K | 4.58GB |
| [Llama-3.1-8B-bonito-v1.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q4_K_M.gguf) | Q4_K_M | 4.58GB |
| [Llama-3.1-8B-bonito-v1.Q4_1.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q4_1.gguf) | Q4_1 | 4.78GB |
| [Llama-3.1-8B-bonito-v1.Q5_0.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q5_0.gguf) | Q5_0 | 5.21GB |
| [Llama-3.1-8B-bonito-v1.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q5_K_S.gguf) | Q5_K_S | 5.21GB |
| [Llama-3.1-8B-bonito-v1.Q5_K.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q5_K.gguf) | Q5_K | 5.34GB |
| [Llama-3.1-8B-bonito-v1.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q5_K_M.gguf) | Q5_K_M | 5.34GB |
| [Llama-3.1-8B-bonito-v1.Q5_1.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q5_1.gguf) | Q5_1 | 5.65GB |
| [Llama-3.1-8B-bonito-v1.Q6_K.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q6_K.gguf) | Q6_K | 6.14GB |
| [Llama-3.1-8B-bonito-v1.Q8_0.gguf](https://huggingface.co/RichardErkhov/BatsResearch_-_Llama-3.1-8B-bonito-v1-gguf/blob/main/Llama-3.1-8B-bonito-v1.Q8_0.gguf) | Q8_0 | 7.95GB |

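When choosing among the files listed above, a rough first filter is file size against available memory. The sketch below is only an illustration: `pick_quant` and the 1 GB `headroom_gb` default are hypothetical choices, not part of any library, and real memory use also depends on context length and the runtime. The sizes are copied from the table.

```python
from typing import Optional

# File sizes in GB, copied from the quantization table above.
QUANT_SIZES_GB = {
    "Q2_K": 2.96, "IQ3_XS": 3.28, "IQ3_S": 3.43, "Q3_K_S": 3.41,
    "IQ3_M": 3.52, "Q3_K": 3.74, "Q3_K_M": 3.74, "Q3_K_L": 4.03,
    "IQ4_XS": 4.18, "Q4_0": 4.34, "IQ4_NL": 4.38, "Q4_K_S": 4.37,
    "Q4_K": 4.58, "Q4_K_M": 4.58, "Q4_1": 4.78, "Q5_0": 5.21,
    "Q5_K_S": 5.21, "Q5_K": 5.34, "Q5_K_M": 5.34, "Q5_1": 5.65,
    "Q6_K": 6.14, "Q8_0": 7.95,
}


def pick_quant(budget_gb: float, headroom_gb: float = 1.0) -> Optional[str]:
    """Return the largest quant whose file fits in budget_gb minus headroom_gb.

    headroom_gb is an assumed allowance for the KV cache and runtime overhead.
    Returns None when no file fits.
    """
    limit = budget_gb - headroom_gb
    fitting = [(size, name) for name, size in QUANT_SIZES_GB.items() if size <= limit]
    if not fitting:
        return None
    # Largest file wins; ties on size are broken by name.
    return max(fitting)[1]


print(pick_quant(8.0))  # largest file that is at most 7.0 GB
```

The chosen name maps to a file following the pattern `Llama-3.1-8B-bonito-v1.<quant>.gguf` used in the table.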

Original model description:

|

| 44 |

+

---

|

| 45 |

+

license: llama3.1

|

| 46 |

+

datasets:

|

| 47 |

+

- BatsResearch/ctga-v1

|

| 48 |

+

language:

|

| 49 |

+

- en

|

| 50 |

+

pipeline_tag: text-generation

|

| 51 |

+

tags:

|

| 52 |

+

- task generation

|

| 53 |

+

- synthetic datasets

|

| 54 |

+

---

|

| 55 |

+

# Model Card for Llama-3.1-8B-bonito-v1

|

| 56 |

+

|

| 57 |

+

<!-- Provide a quick summary of what the model is/does. -->

|

| 58 |

+

|

| 59 |

+

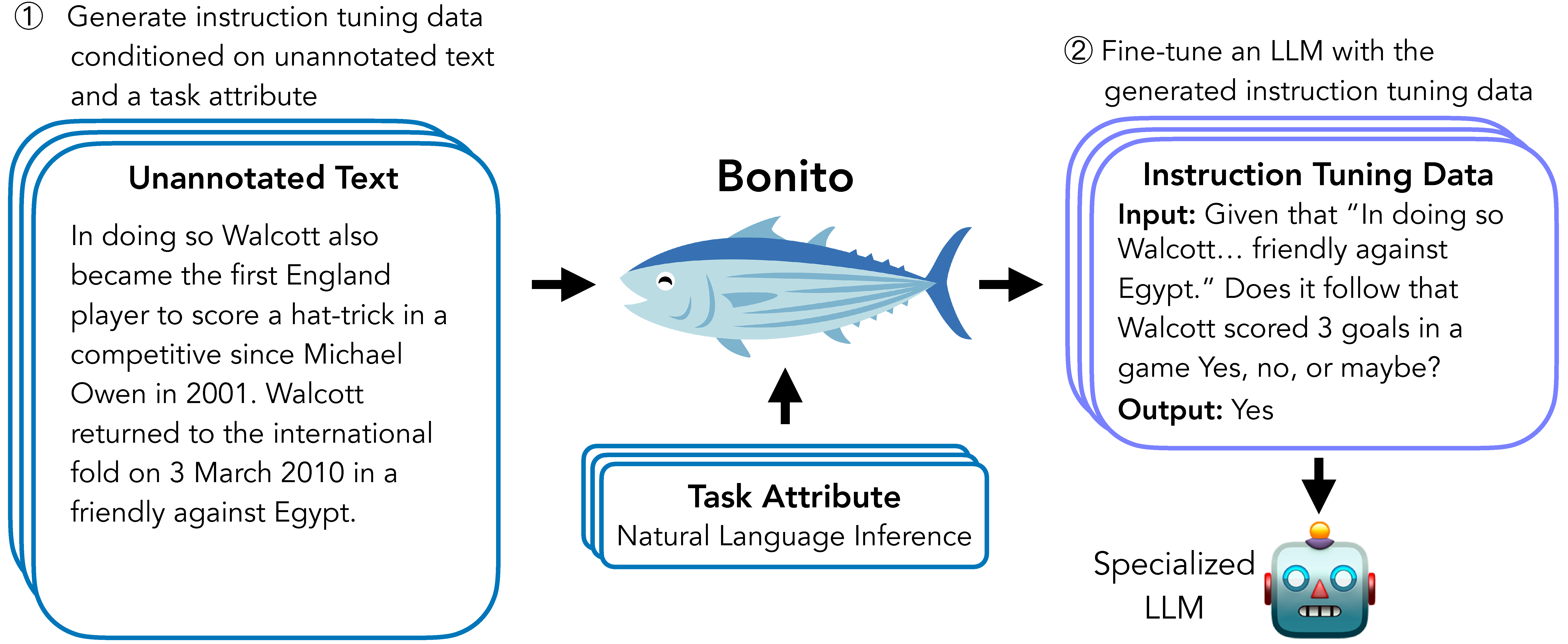

Bonito is an open-source model for conditional task generation: the task of converting unannotated text into task-specific training datasets for instruction tuning.

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

Bonito can be used to create synthetic instruction tuning datasets to adapt large language models to users' specialized, private data.
In our [paper](https://arxiv.org/abs/2402.18334), we show that Bonito can be used to adapt both pretrained and instruction-tuned models to tasks without any annotations.

- **Developed by:** Nihal V. Nayak, Yiyang Nan, Avi Trost, and Stephen H. Bach
- **Model type:** LlamaForCausalLM
- **Language(s) (NLP):** English
- **License:** [Llama 3.1 Community License](https://github.com/meta-llama/llama-models/blob/main/models/llama3_1/LICENSE)
- **Finetuned from model:** `meta-llama/Meta-Llama-3.1-8B`

### Model Sources

<!-- Provide the basic links for the model. -->

- **Repository:** [https://github.com/BatsResearch/bonito](https://github.com/BatsResearch/bonito)
- **Paper:** [Learning to Generate Instruction Tuning Datasets for Zero-Shot Task Adaptation](https://arxiv.org/abs/2402.18334)

### Model Performance

Downstream performance of Mistral-7B-v0.1 after training on instructions generated by Llama-3.1-8B-bonito-v1.

| Model | PubMedQA | PrivacyQA | NYT | Amazon | Reddit | ContractNLI | Vitamin C | Average |
|------------------------------------------|----------|-----------|------|--------|--------|-------------|-----------|---------|
| Mistral-7B-v0.1 | 25.6 | 44.1 | 24.2 | 17.5 | 12.0 | 31.2 | 38.9 | 27.6 |
| Mistral-7B-v0.1 + Llama-3.1-8B-bonito-v1 | 44.5 | 53.7 | 80.7 | 72.9 | 70.1 | 69.7 | 73.3 | 66.4 |

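The Average column and the per-dataset gains can be re-derived from the rows above. This is a small arithmetic sketch using only the numbers in the table; the variable names are illustrative.

```python
DATASETS = ["PubMedQA", "PrivacyQA", "NYT", "Amazon", "Reddit", "ContractNLI", "Vitamin C"]
BASELINE = [25.6, 44.1, 24.2, 17.5, 12.0, 31.2, 38.9]      # Mistral-7B-v0.1
WITH_BONITO = [44.5, 53.7, 80.7, 72.9, 70.1, 69.7, 73.3]   # + Llama-3.1-8B-bonito-v1


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


# Absolute gain per dataset, rounded to one decimal like the table.
gains = {
    name: round(after - before, 1)
    for name, before, after in zip(DATASETS, BASELINE, WITH_BONITO)
}

print("baseline average:", round(mean(BASELINE), 1))
print("adapted average: ", round(mean(WITH_BONITO), 1))
for name, gain in gains.items():
    print(f"{name:12s} +{gain}")
```

The two averages match the table's Average column (27.6 and 66.4). The gains are uneven across datasets, with the smallest on PrivacyQA and the largest on Reddit.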

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->
To easily generate synthetic instruction tuning datasets, we recommend using the [bonito](https://github.com/BatsResearch/bonito) package, which is built on the `transformers` and `vllm` libraries.

```python
from bonito import Bonito
from vllm import SamplingParams
from datasets import load_dataset

# Initialize the Bonito model
bonito = Bonito("BatsResearch/Llama-3.1-8B-bonito-v1")

# Load a dataset with unannotated text
unannotated_text = load_dataset(
    "BatsResearch/bonito-experiment",
    "unannotated_contract_nli"
)["train"].select(range(10))

# Generate a synthetic instruction tuning dataset
sampling_params = SamplingParams(max_tokens=256, top_p=0.95, temperature=0.5, n=1)
synthetic_dataset = bonito.generate_tasks(
    unannotated_text,
    context_col="input",
    task_type="nli",
    sampling_params=sampling_params
)
```

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

Our model is trained to generate the following task types: summarization, sentiment analysis, multiple-choice question answering, extractive question answering, topic classification, natural language inference, question generation, text generation, question answering without choices, paraphrase identification, sentence completion, yes-no question answering, word sense disambiguation, paraphrase generation, textual entailment, and coreference resolution.
The model might not produce accurate synthetic tasks beyond these task types.

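A simple guard can catch requests for task types outside the list above before any generation is run. The sketch below is hypothetical: `SUPPORTED_TASK_TYPES` and `is_supported` are illustrative names, not part of the bonito package. It checks the full task names from this card; the short codes accepted by `generate_tasks` (such as `"nli"`) are defined by the package and are not reproduced here.

```python
# Full task-type names, copied from the list above.
SUPPORTED_TASK_TYPES = frozenset({
    "summarization",
    "sentiment analysis",
    "multiple-choice question answering",
    "extractive question answering",
    "topic classification",
    "natural language inference",
    "question generation",
    "text generation",
    "question answering without choices",
    "paraphrase identification",
    "sentence completion",
    "yes-no question answering",
    "word sense disambiguation",
    "paraphrase generation",
    "textual entailment",
    "coreference resolution",
})


def is_supported(task_name: str) -> bool:
    """Return True if task_name is one of the task types the model was trained on."""
    return task_name.strip().lower() in SUPPORTED_TASK_TYPES


print(is_supported("Natural Language Inference"))
print(is_supported("machine translation"))
```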

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

**Limitations**

Our work relies on the availability of large amounts of unannotated text.
If only a small quantity of unannotated text is present, the target language model, after adaptation, may experience a drop in performance.
While we demonstrate improvements on pretrained and instruction-tuned models, our observations are limited to the three task types (yes-no question answering, extractive question answering, and natural language inference) considered in our paper.

**Risks**

Bonito poses risks similar to those of any large language model.
For example, our model could be used to generate factually incorrect datasets in specialized domains.
Our model can exhibit the biases and stereotypes of the base model, Llama-3.1-8B, even after extensive supervised fine-tuning.
Finally, our model does not include safety training and can potentially generate harmful content.

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

We recommend that users thoroughly inspect the generated tasks and benchmark performance on critical datasets before deploying models trained with the synthetic tasks in the real world.

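A first pass at inspecting generated tasks can be automated before manual review. The sketch below assumes each generated example is a dict with string fields `input` and `output`; that record layout, the `filter_tasks` helper, and its `min_chars` threshold are assumptions made for illustration, so check what your version of the bonito package actually returns. It drops empty and exact-duplicate examples and is not a substitute for reading the data.

```python
def filter_tasks(examples, min_chars=1):
    """Drop examples with an empty input or output, and exact duplicates.

    `examples` is an iterable of dicts with "input" and "output" strings
    (an assumed format). Returns a new list; the argument is not modified.
    """
    seen = set()
    kept = []
    for example in examples:
        text_in = example.get("input", "").strip()
        text_out = example.get("output", "").strip()
        if len(text_in) < min_chars or len(text_out) < min_chars:
            continue  # nothing useful to train on
        key = (text_in, text_out)
        if key in seen:
            continue  # exact duplicate of an earlier example
        seen.add(key)
        kept.append({"input": text_in, "output": text_out})
    return kept


raw = [
    {"input": "Does the clause allow sharing? ", "output": "no"},
    {"input": "Does the clause allow sharing?", "output": "no"},   # duplicate after stripping
    {"input": "Summarize the clause.", "output": "   "},            # empty output
]
print(len(filter_tasks(raw)))  # 1
```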

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->
To train Bonito, we created a new dataset, conditional task generation with attributes (CTGA), by remixing existing instruction tuning datasets.
See [ctga-v1](https://huggingface.co/datasets/BatsResearch/ctga-v1) for more details.

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Training Hyperparameters

- **Training regime:** <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->
We train the model using [Q-LoRA](https://github.com/artidoro/qlora) by optimizing the cross entropy loss over the output tokens.
The model is trained for 100,000 steps.
The training takes about 1 day on eight A100 GPUs to complete.

We use the following hyperparameters:
- Q-LoRA rank (r): 64
- Q-LoRA scaling factor (alpha): 4
- Q-LoRA dropout: 0
- Optimizer: Paged AdamW
- Learning rate scheduler: linear
- Max. learning rate: 1e-04
- Min. learning rate: 0
- Weight decay: 0
- Dropout: 0
- Max. gradient norm: 0.3
- Effective batch size: 16
- Max. input length: 2,048
- Max. output length: 2,048
- Num. steps: 100,000

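A few quantities follow directly from the hyperparameters above. The sketch below works them out in plain Python. It assumes the linear scheduler decays from the maximum to the minimum learning rate with no warmup (the card does not say whether warmup was used) and uses the standard LoRA scaling of alpha divided by rank; the function and constant names are illustrative.

```python
MAX_LR = 1e-4
MIN_LR = 0.0
NUM_STEPS = 100_000
EFFECTIVE_BATCH_SIZE = 16
LORA_RANK = 64
LORA_ALPHA = 4


def linear_lr(step: int) -> float:
    """Learning rate at `step` for a linear decay from MAX_LR to MIN_LR.

    Assumes no warmup phase; steps outside [0, NUM_STEPS] are clamped.
    """
    fraction_done = min(max(step, 0), NUM_STEPS) / NUM_STEPS
    return MAX_LR + (MIN_LR - MAX_LR) * fraction_done


# Sequences processed over the whole run (steps x effective batch size).
examples_seen = NUM_STEPS * EFFECTIVE_BATCH_SIZE

# Standard LoRA multiplies the low-rank update by alpha / r.
lora_scale = LORA_ALPHA / LORA_RANK

print(f"lr at step 0:       {linear_lr(0):.2e}")
print(f"lr at step 50,000:  {linear_lr(50_000):.2e}")
print(f"lr at step 100,000: {linear_lr(NUM_STEPS):.2e}")
print(f"examples seen:      {examples_seen:,}")
print(f"LoRA scale:         {lora_scale}")
```

With alpha = 4 and r = 64, the adapter update is scaled by 0.0625, and the run processes 1.6 million sequences in total.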

## Citation

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->
```
@inproceedings{bonito:aclfindings24,
  title = {Learning to Generate Instruction Tuning Datasets for Zero-Shot Task Adaptation},
  author = {Nayak, Nihal V. and Nan, Yiyang and Trost, Avi and Bach, Stephen H.},
  booktitle = {Findings of the Association for Computational Linguistics: ACL 2024},
  year = {2024}
}
```