Add files using upload-large-folder tool

- extras/check_drum_channel_slakh.py +24 -0

- extras/dataset_mutable_var_sanity_check.py +81 -0

- extras/demo_intra_augmentation.py +52 -0

- extras/examples/singing_note_events.npy +3 -0

- extras/examples/singing_notes.npy +3 -0

- extras/fig/label_smooth_interval_of_interest.png +0 -0

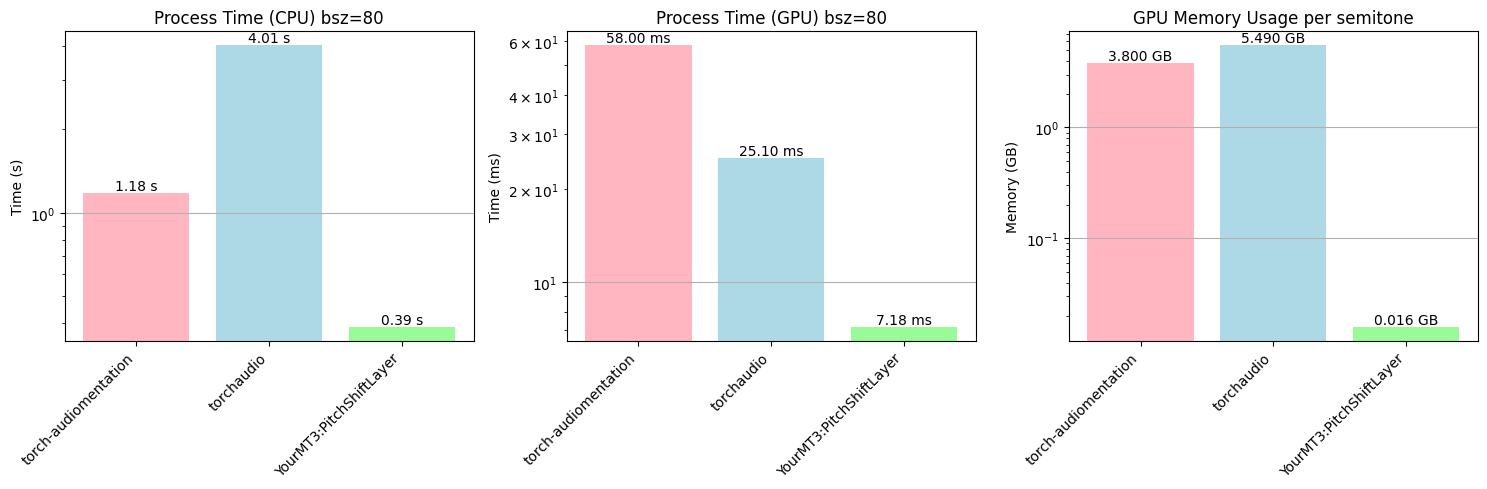

- extras/fig/pitchshift_benchnmark.png +0 -0

- extras/fig/pitchshift_stretch_and_resampler_process_time.png +0 -0

- extras/label_smoothing.py +67 -0

- extras/npy_speed_benchmark.py +187 -0

- extras/perceivertf_inspect.py +640 -0

- extras/perceivertf_multi_inspect.py +778 -0

- extras/pitch_shift_benchmark.py +167 -0

- extras/run_spleeter_mir1k.sh +17 -0

- extras/run_spleeter_mirst500.sh +13 -0

- model/__pycache__/conformer_mod.cpython-310.pyc +0 -0

extras/check_drum_channel_slakh.py

ADDED

@@ -0,0 +1,24 @@

```python
from utils.mirdata_dev.datasets import slakh16k
from mido import MidiFile  # iterating a MidiFile yields its messages in playback order


def check_drum_channel_slakh(data_home: str):
    ds = slakh16k.Dataset(data_home, version='default')
    for track_id in ds.track_ids:
        is_drum = ds.track(track_id).is_drum
        midi = MidiFile(ds.track(track_id).midi_path)
        cnt = 0
        for msg in midi:
            # Check only note messages (note_on / note_off); channel 9 is the GM drum channel
            if 'note' in msg.type:
                if is_drum and (msg.channel != 9):
                    print('found drum track with channel != 9 in track_id: ',
                          track_id)
                if not is_drum and (msg.channel == 9):
                    print(
                        'found non-drum track with channel == 9 in track_id: ',
                        track_id)
                if is_drum and (msg.channel == 9):
                    cnt += 1
        if cnt > 0:
            print(f'found {cnt} notes in drum track with ch 9 in track_id: ',
                  track_id)
    return
```
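General MIDI puts percussion on channel 10, which is index 9 in zero-based libraries such as mido; the script above reports Slakh tracks whose `is_drum` flag disagrees with that convention. A self-contained sketch of the same rule, using a hypothetical `Msg` tuple instead of real MIDI messages so it runs without the dataset:

```python
from collections import namedtuple

# Hypothetical stand-in for a MIDI message: only the fields the check reads.
Msg = namedtuple('Msg', ['type', 'channel'])

GM_DRUM_CHANNEL = 9  # zero-based index of General MIDI channel 10


def drum_channel_mismatches(is_drum, messages):
    """Count note messages whose channel disagrees with the track's is_drum flag."""
    mismatches = 0
    for msg in messages:
        if 'note' not in msg.type:  # skip control changes, program changes, etc.
            continue
        on_drum_channel = msg.channel == GM_DRUM_CHANNEL
        if on_drum_channel != is_drum:
            mismatches += 1
    return mismatches


msgs = [Msg('note_on', 9), Msg('note_off', 9), Msg('control_change', 0), Msg('note_on', 0)]
print(drum_channel_mismatches(True, msgs))   # 1: the note_on on channel 0
print(drum_channel_mismatches(False, msgs))  # 2: both notes on channel 9
```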

extras/dataset_mutable_var_sanity_check.py

ADDED

@@ -0,0 +1,81 @@

```python
from copy import deepcopy

# This scratch script assumes `ds` (a training dataset exposing `__getitem__` and
# `cache`) and `pitch_shift_note_events` are already defined, e.g. in an
# interactive session; the file does not import them itself.

# 1) pitch_shift_note_events is expected to shift the events of `b` in place
#    (its return value is discarded), while the deep copy `a` keeps the originals.
for n in range(1000):
    sampled_data = ds.__getitem__(n)

    a = deepcopy(sampled_data['note_event_segments'])
    b = deepcopy(sampled_data['note_event_segments'])

    for (note_events, tie_note_events, start_time) in list(zip(*b.values())):
        note_events = pitch_shift_note_events(note_events, 2)
        tie_note_events = pitch_shift_note_events(tie_note_events, 2)

    # compare
    for i, (note_events, tie_note_events, start_time) in enumerate(list(zip(*b.values()))):
        for j, ne in enumerate(note_events):
            if ne.is_drum is False:
                if ne.pitch != a['note_events'][i][j].pitch + 2:
                    print(i, j)
                assert ne.pitch == a['note_events'][i][j].pitch + 2

        for k, tne in enumerate(tie_note_events):
            assert tne.pitch == a['tie_note_events'][i][k].pitch + 2

    print('test {} passed'.format(n))


def assert_note_events_almost_equal(actual_note_events,
                                    predicted_note_events,
                                    ignore_time=False,
                                    ignore_activity=True,
                                    delta=5.1e-3):
    """
    Asserts that the given lists of Note instances are equal up to a small
    floating-point tolerance, similar to `assertAlmostEqual` of `unittest`.
    The default tolerance is about 5e-3 (`delta=5.1e-3`), i.e. 5 ms at 100
    ticks per second.

    If `ignore_time` is True, then the time field is ignored (useful for
    comparing tie note events; default is False).

    If `ignore_activity` is True, then the activity field is ignored (default
    is True).
    """
    assert len(actual_note_events) == len(predicted_note_events)
    for j, (actual_note_event,
            predicted_note_event) in enumerate(zip(actual_note_events, predicted_note_events)):
        if ignore_time is False:
            assert abs(actual_note_event.time - predicted_note_event.time) <= delta
        assert actual_note_event.is_drum == predicted_note_event.is_drum
        if actual_note_event.is_drum is False and predicted_note_event.is_drum is False:
            assert actual_note_event.program == predicted_note_event.program
        assert actual_note_event.pitch == predicted_note_event.pitch
        assert actual_note_event.velocity == predicted_note_event.velocity
        if ignore_activity is False:
            assert actual_note_event.activity == predicted_note_event.activity


# 2) Sampling must not mutate the cache: every entry present in both the previous
#    snapshot and the current cache has to be unchanged.
cache_old = deepcopy(dict(ds.cache))
for n in range(500):
    sampled_data = ds.__getitem__(n)
    cache_new = ds.cache
    cnt = 0
    for k, v in cache_new.items():
        if k in cache_old:
            cnt += 1
            assert (cache_new[k]['programs'] == cache_old[k]['programs']).all()
            assert (cache_new[k]['is_drum'] == cache_old[k]['is_drum']).all()
            assert (cache_new[k]['has_stems'] == cache_old[k]['has_stems'])
            assert (cache_new[k]['has_unannotated'] == cache_old[k]['has_unannotated'])
            assert (cache_new[k]['audio_array'] == cache_old[k]['audio_array']).all()

            for nes_new, nes_old in zip(cache_new[k]['note_event_segments']['note_events'],
                                        cache_old[k]['note_event_segments']['note_events']):
                assert_note_events_almost_equal(nes_new, nes_old)

            for tnes_new, tnes_old in zip(cache_new[k]['note_event_segments']['tie_note_events'],
                                          cache_old[k]['note_event_segments']['tie_note_events']):
                assert_note_events_almost_equal(tnes_new, tnes_old, ignore_time=True)

            for s_new, s_old in zip(cache_new[k]['note_event_segments']['start_times'],
                                    cache_old[k]['note_event_segments']['start_times']):
                assert s_new == s_old
    cache_old = deepcopy(dict(ds.cache))
    print(n, cnt)
```
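The first loop only passes if `pitch_shift_note_events` mutates its input in place. Its return values are discarded, yet the comparison expects the events inside `b` to be shifted while the deep copy `a` is not. The following minimal sketch contrasts in-place and copying transforms. It uses a hypothetical `Note` dataclass rather than the project's note-event class, and both helper functions are illustrative:

```python
from copy import deepcopy
from dataclasses import dataclass, replace


@dataclass
class Note:  # hypothetical minimal note event; the project's class has more fields
    pitch: int
    is_drum: bool = False


def shift_in_place(notes, semitones):
    """Mutates each non-drum note, so every alias of these objects sees the change."""
    for n in notes:
        if not n.is_drum:
            n.pitch += semitones
    return notes


def shift_copy(notes, semitones):
    """Returns new Note objects for non-drum notes; the input list is left untouched."""
    return [n if n.is_drum else replace(n, pitch=n.pitch + semitones) for n in notes]


original = [Note(60), Note(36, is_drum=True)]
snapshot = deepcopy(original)

shift_copy(original, 2)
assert [n.pitch for n in original] == [60, 36]  # unchanged: the result was discarded

shift_in_place(original, 2)
assert [n.pitch for n in original] == [62, 36]  # mutated even though the result was discarded
assert [n.pitch for n in snapshot] == [60, 36]  # the deep copy is unaffected
```

Taking a `deepcopy` before augmentation, as the script does with `a`, is what makes this kind of aliasing visible.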

extras/demo_intra_augmentation.py

ADDED

@@ -0,0 +1,52 @@

```python
# Copyright 2024 The YourMT3 Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Please see the details in the LICENSE file.
import numpy as np
import torch
import json
import soundfile as sf
from utils.datasets_train import get_cache_data_loader


def get_filelist(track_id: int) -> dict:
    filelist = '../../data/yourmt3_indexes/slakh_train_file_list.json'
    with open(filelist, 'r') as f:
        fl = json.load(f)
    # Keep only the requested track, re-indexed as entry 0
    new_filelist = dict()
    for key, value in fl.items():
        if int(key) == track_id:
            new_filelist[0] = value
    return new_filelist


def get_ds(track_id: int, random_amp_range: list = [1., 1.], stem_aug_prob: float = 0.8):
    filelist = get_filelist(track_id)
    dl = get_cache_data_loader(filelist,
                               'train',
                               1,
                               1,
                               random_amp_range=random_amp_range,
                               stem_aug_prob=stem_aug_prob,
                               shuffle=False)
    ds = dl.dataset
    return ds


def gen_audio(track_id: int, n_segments: int = 30, random_amp_range: list = [1., 1.], stem_aug_prob: float = 0.8):
    ds = get_ds(track_id, random_amp_range, stem_aug_prob)
    audio = []
    for i in range(n_segments):
        # Draw index 0 repeatedly; the commented-out line steps through indices instead
        audio.append(ds.__getitem__(0)[0])
        # audio.append(ds.__getitem__(i)[0])

    # Concatenate along the time axis and keep the first item/channel
    audio = torch.concat(audio, dim=2).numpy()[0, 0, :]
    sf.write('audio.wav', audio, 16000, subtype='PCM_16')


gen_audio(1, 20)
```
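`gen_audio` concatenates the sampled segments along the time axis and writes them as 16-bit PCM at 16 kHz with `soundfile`. The sketch below does the same final step with the standard-library `wave` module instead. It assumes the segments are NumPy float arrays of shape `(1, 1, T)` with values in [-1, 1]; that shape is inferred from the `[0, 0, :]` indexing. `write_pcm16` is a hypothetical helper, and its int16 scaling may differ slightly from what libsndfile does:

```python
import wave

import numpy as np


def write_pcm16(path, segments, sample_rate=16000):
    """Concatenate (1, 1, T) float segments in time and save them as mono 16-bit PCM."""
    audio = np.concatenate(segments, axis=2)[0, 0, :]
    # Clip to [-1, 1] and scale to the int16 range; '<i2' is little-endian, as WAV requires.
    pcm = (np.clip(audio, -1.0, 1.0) * 32767).astype('<i2')
    with wave.open(path, 'wb') as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)  # 2 bytes per sample = 16-bit
        wf.setframerate(sample_rate)
        wf.writeframes(pcm.tobytes())
    return pcm


if __name__ == '__main__':
    rng = np.random.default_rng(0)
    segments = [rng.uniform(-0.5, 0.5, size=(1, 1, 1600)).astype(np.float32) for _ in range(3)]
    write_pcm16('demo.wav', segments)  # 3 x 0.1 s of noise at 16 kHz
```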

extras/examples/singing_note_events.npy

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:8eabaecc837fb052fba68483b965a59d40220fdf2a91a57b5155849c72306ba0
size 37504
```

extras/examples/singing_notes.npy

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:aa8ebde37531514ea145063af32543f8e520a6c34525c98e863e152b66119be8
size 15085
```
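Both `.npy` entries above are Git LFS pointer files, not the arrays themselves. Each pointer records the spec version, a SHA-256 object id, and the payload size in bytes. Calling `np.load` on a pointer raises an error until the LFS content has been fetched, so a quick pointer check can save some confusion. Here is a minimal sketch; `parse_lfs_pointer` is a hypothetical helper:

```python
LFS_SPEC_LINE = 'version https://git-lfs.github.com/spec/v1'


def parse_lfs_pointer(text):
    """Return the pointer's fields as a dict, or None if `text` is not an LFS pointer."""
    if not text.startswith(LFS_SPEC_LINE):
        return None
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(' ')  # each line is "<key> <value>"
        fields[key] = value
    fields['size'] = int(fields['size'])  # payload size in bytes
    return fields


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:aa8ebde37531514ea145063af32543f8e520a6c34525c98e863e152b66119be8
size 15085
"""
info = parse_lfs_pointer(pointer)
print(info['oid'], info['size'])
```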

extras/fig/label_smooth_interval_of_interest.png

ADDED


extras/fig/pitchshift_benchnmark.png

ADDED


extras/fig/pitchshift_stretch_and_resampler_process_time.png

ADDED


extras/label_smoothing.py

ADDED

@@ -0,0 +1,67 @@

```python
import torch
import numpy as np
import matplotlib.pyplot as plt

# Reference: torch's built-in Gaussian window. It gets its own figure so that the
# legend of the comparison figure below matches its five curves one-to-one.
a = torch.signal.windows.gaussian(11, sym=True, std=3)
plt.figure()
plt.plot(a)


def gaussian_smoothing(y_hot, A=1.0, mu=5, sigma=0.865):
    """
    y_hot: one-hot encoded array
    A: amplitude applied after normalization (cf. y_gs = A * exp(...) in the x-label)
    """
    #sigma = np.sqrt(np.abs(np.log(0.05) / ((4 - mu)**2))) / 2

    # Generate index array
    i = np.arange(len(y_hot))

    # Gaussian function
    y_smooth = np.exp(-(i - mu)**2 / (2 * sigma**2))

    # Normalize the resulting array, then scale it by A
    y_smooth /= y_smooth.sum()
    y_smooth *= A
    return y_smooth, sigma


# y_ls = (1 - α) * y_hot + α / K, where K is the number of classes, alpha is the smoothing parameter

plt.figure()
y_hot = torch.zeros(11)
y_hot[5] = 1
plt.plot(y_hot, 'b.-')

alpha = 0.3
K = len(y_hot)  # number of classes (11 time bins), so y_ls sums to 1
y_ls = (1 - alpha) * y_hot + alpha / K
plt.plot(y_ls, 'r.-')

y_gs, std = gaussian_smoothing(y_hot, A=0.5)
plt.plot(y_gs, 'g.-')

y_gst_a, std = gaussian_smoothing(y_hot, A=0.5, mu=5.5)
plt.plot(y_gst_a, 'y.-')

y_gst_b, std = gaussian_smoothing(y_hot, A=0.5, mu=5.8)
plt.plot(y_gst_b, 'c.-')

plt.legend([
    'y_hot', 'label smoothing' + '\n' + '(alpha=0.3)',
    'gaussian smoothing' + '\n' + 'for interval of interest' + '\n' + 'mu=5',
    'gaussian smoothing' + '\n' + 'mu=5.5', 'gaussian smoothing' + '\n' + 'mu=5.8'
])

plt.grid()
plt.xticks(np.arange(11), np.arange(0, 110, 10))
plt.xlabel('''Time (ms)
original (quantized) one hot label:
[0,0,0,0,0,1,0,0,0,0,0]
\n
label smoothing is defined as:
y_ls = (1 - α) * y_hot + α / K,
where K is the number of classes, α is the smoothing parameter
\n
gaussian smoothing for the interval (± 10ms) of interest:
y_gs = A * exp(-(i - mu)**2 / (2 * sigma**2))
with sigma = 0.865 and mu = 5
\n
gaussian smoothing with unquantized target timing:
mu = 5.5 for 55ms target timing
''')
plt.show()
```
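The plot contrasts uniform label smoothing with Gaussian soft targets on a 10 ms grid. The following small NumPy sketch builds both targets as probability distributions. The helper names are illustrative, and K is taken as the number of bins so that the smoothed label sums to 1. A fractional `mu` such as 5.5 places the peak between two bins, which is how an unquantized onset time can be encoded:

```python
import numpy as np


def label_smooth(y_hot, alpha):
    """Uniform label smoothing: (1 - alpha) * y + alpha / K, with K = number of classes."""
    k = y_hot.shape[-1]
    return (1.0 - alpha) * y_hot + alpha / k


def gaussian_target(num_bins, mu, sigma):
    """Gaussian-shaped soft target centred at a (possibly fractional) bin index mu."""
    i = np.arange(num_bins)
    g = np.exp(-(i - mu) ** 2 / (2 * sigma ** 2))
    return g / g.sum()  # normalize so the target is a probability distribution


y_hot = np.zeros(11)
y_hot[5] = 1.0
y_ls = label_smooth(y_hot, alpha=0.3)
y_gs = gaussian_target(11, mu=5.5, sigma=0.865)  # 55 ms target on a 10 ms grid
print(np.round(y_ls, 3))
print(np.round(y_gs, 3))
```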

extras/npy_speed_benchmark.py

ADDED

@@ -0,0 +1,187 @@

```python
import os
import csv
import json
import shutil

import numpy as np

from tasks.utils.event_codec import Event, EventRange
from tasks.utils import event_codec

ec = event_codec.Codec(
    max_shift_steps=1000,  # this means 0,1,...,1000
    steps_per_second=100,
    event_ranges=[
        EventRange('pitch', min_value=0, max_value=127),
        EventRange('velocity', min_value=0, max_value=1),
        EventRange('tie', min_value=0, max_value=0),
        EventRange('program', min_value=0, max_value=127),
        EventRange('drum', min_value=0, max_value=127),
    ],
)

events = [
    Event(type='shift', value=0),  # actually not needed
    Event(type='shift', value=1),  # 10 ms shift
    Event(type='shift', value=1000),  # 10 s shift
    Event(type='pitch', value=0),  # lowest pitch 8.18 Hz
    Event(type='pitch', value=60),  # C4 or 261.63 Hz
    Event(type='pitch', value=127),  # highest pitch G9 or 12543.85 Hz
    Event(type='velocity', value=0),  # lowest velocity
    Event(type='velocity', value=1),  # highest velocity
    Event(type='tie', value=0),  # tie
    Event(type='program', value=0),  # program
    Event(type='program', value=127),  # program
    Event(type='drum', value=0),  # drum
    Event(type='drum', value=127),  # drum
]

events = events * 100
tokens = [ec.encode_event(e) for e in events]
tokens = np.array(tokens, dtype=np.int16)

# Save events to a CSV file
with open('events.csv', 'w', newline='') as file:
    writer = csv.writer(file)
    for event in events:
        writer.writerow([event.type, event.value])

# Load events from a CSV file
with open('events.csv', 'r') as file:
    reader = csv.reader(file)
    events2 = [Event(row[0], int(row[1])) for row in reader]

# Save events to a JSON file
with open('events.json', 'w') as file:
    json.dump([event.__dict__ for event in events], file)

# Load events from a JSON file
with open('events.json', 'r') as file:
    events = [Event(**event_dict) for event_dict in json.load(file)]

"""----------------------------"""
# Write the tokens to a npy file
np.save('tokens.npy', tokens)

def t_npy():
    t = np.load('tokens.npy', allow_pickle=True)  # allow_pickle doesn't affect speed

os.makedirs('temp', exist_ok=True)
for i in range(2400):
    np.save(f'temp/tokens{i}.npy', tokens)

def t_npy2400():
    for i in range(2400):
        t = np.load(f'temp/tokens{i}.npy')

def t_npy2400_take200():
    for i in range(200):
        t = np.load(f'temp/tokens{i}.npy')

# Write the 2400 tokens to a single npy file (a dict is pickled into a 0-d object array)
data = dict()
for i in range(2400):
    data[f'arr{i}'] = tokens.copy()
np.save('tokens_2400x.npy', data)

def t_npy2400single():
    t = np.load('tokens_2400x.npy', allow_pickle=True).item()

def t_mmap2400single():
    t = np.load('tokens_2400x.npy', mmap_mode='r')

# Write the tokens to a npz file
np.savez('tokens.npz', arr0=tokens)

def t_npz():
    npz_file = np.load('tokens.npz')
    tt = npz_file['arr0']

data = dict()
for i in range(2400):
    data[f'arr{i}'] = tokens
np.savez('tokens.npz', **data)

def t_npz2400():
    npz_file = np.load('tokens.npz')
    for i in range(2400):
        tt = npz_file[f'arr{i}']

def t_npz2400_take200():
    npz_file = np.load('tokens.npz')
    # npz_file.files
    for i in range(200):
        tt = npz_file[f'arr{i}']


# Write the tokens to a txt file
with open('tokens.txt', 'w') as file:
    file.write(' '.join(map(str, tokens)))

def t_txt():
    # Read the tokens from the file
    with open('tokens.txt', 'r') as file:
        t = list(map(int, file.read().split()))
    t = np.array(t)


# Write the tokens to a CSV file
with open('tokens.csv', 'w', newline='') as file:
    writer = csv.writer(file)
    writer.writerow(tokens)

def t_csv():
    # Read the tokens from the CSV file
    with open('tokens.csv', 'r') as file:
        reader = csv.reader(file)
        t = list(map(int, next(reader)))
    t = np.array(t)


# Write the tokens to a JSON file (ndarrays are not JSON-serializable, so use lists)
with open('tokens.json', 'w') as file:
    json.dump(tokens.tolist(), file)

def t_json():
    # Read the tokens from the JSON file
    with open('tokens.json', 'r') as file:
        t = json.load(file)
    t = np.array(t)

with open('tokens_2400x.json', 'w') as file:
    json.dump({k: v.tolist() for k, v in data.items()}, file)

def t_json2400single():
    # Read the tokens from the JSON file
    with open('tokens_2400x.json', 'r') as file:
        t = json.load(file)

def t_mmap():
    t = np.load('tokens.npy', mmap_mode='r')

# Write the tokens to bytes file

# Write the tokens as text with np.savetxt
np.savetxt('tokens.ntxt', tokens)
def t_ntxt():
    t = np.loadtxt('tokens.ntxt').astype(np.int32)

# %timeit is an IPython magic: run the lines below in IPython or Jupyter.
%timeit t_npz()  # 139 us
%timeit t_mmap()  # 3.12 ms
%timeit t_npy()  # 87.8 us
%timeit t_txt()  # 109 152 us
%timeit t_csv()  # 145 190 us
%timeit t_json()  # 72.8 119 us
%timeit t_ntxt()  # 878 us

%timeit t_npy2400()  # 212 ms; 2400 files in a folder
%timeit t_npz2400()  # 296 ms; uncompressed 1000 arrays in a single file

%timeit t_npy2400_take200()  # 17.4 ms; 25 Mb
%timeit t_npz2400_take200()  # 28.8 ms; 3.72 ms for 10 arrays; 25 Mb
%timeit t_npy2400single()  # 4 ms; frozen dictionary containing 2400 arrays; 6.4 Mb; int16
%timeit t_mmap2400single()  # dictionary is not supported (object arrays cannot be memory-mapped)
%timeit t_json2400single()  # 175 ms; 17 Mb
# 2400 files from 100ms hop for 4 minutes

# Remove the per-file copies only after the benchmarks that read them have run
shutil.rmtree('temp', ignore_errors=True)
```
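Because `%timeit` is an IPython magic, the benchmark above only runs inside IPython or Jupyter. The following plain-Python sketch runs the same kind of comparison with the standard `timeit` module and covers three of the formats. The function name and the synthetic token array are illustrative. Timings depend on hardware and file-system caching, so no numbers are claimed here:

```python
import json
import os
import tempfile
import timeit

import numpy as np


def benchmark_loaders(tokens, number=100):
    """Return mean seconds per load for .npy, .npz and JSON copies of `tokens`."""
    with tempfile.TemporaryDirectory() as tmp:
        npy_path = os.path.join(tmp, 'tokens.npy')
        npz_path = os.path.join(tmp, 'tokens.npz')
        json_path = os.path.join(tmp, 'tokens.json')
        np.save(npy_path, tokens)
        np.savez(npz_path, arr0=tokens)
        with open(json_path, 'w') as f:
            json.dump(tokens.tolist(), f)  # ndarrays are not JSON-serializable

        def load_npy():
            return np.load(npy_path)

        def load_npz():
            with np.load(npz_path) as npz_file:  # NpzFile closes its zip handle on exit
                return npz_file['arr0']

        def load_json():
            with open(json_path) as f:
                return np.array(json.load(f), dtype=np.int16)

        loaders = {'npy': load_npy, 'npz': load_npz, 'json': load_json}
        # Check that every format round-trips before timing it.
        for load in loaders.values():
            assert np.array_equal(load(), tokens)
        return {name: timeit.timeit(load, number=number) / number
                for name, load in loaders.items()}


if __name__ == '__main__':
    tokens = np.arange(1300, dtype=np.int16)  # same length as the 13 events x 100 above
    for name, seconds in benchmark_loaders(tokens).items():
        print(f'{name}: {seconds * 1e6:.1f} us')
```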

extras/perceivertf_inspect.py

ADDED

@@ -0,0 +1,640 @@

| 1 |

+

import numpy as np

|

| 2 |

+

import torch

|

| 3 |

+

import torch.nn.functional as F

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

def l2_normalize(matrix):

|

| 7 |

+

"""

|

| 8 |

+

L2 Normalize the matrix along its rows.

|

| 9 |

+

|

| 10 |

+

Parameters:

|

| 11 |

+

matrix (numpy.ndarray): The input matrix.

|

| 12 |

+

|

| 13 |

+

Returns:

|

| 14 |

+

numpy.ndarray: The L2 normalized matrix.

|

| 15 |

+

"""

|

| 16 |

+

l2_norms = np.linalg.norm(matrix, axis=1, keepdims=True)

|

| 17 |

+

normalized_matrix = matrix / l2_norms

|

| 18 |

+

return normalized_matrix

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

def z_normalize(matrix):

|

| 22 |

+

"""

|

| 23 |

+

Z-normalize the matrix along its rows (mean=0 and std=1).

|

| 24 |

+

Z-normalization is also known as "standardization", and derives from z-score.

|

| 25 |

+

Z = (X - mean) / std

|

| 26 |

+

Z-nomarlized, each row has mean=0 and std=1.

|

| 27 |

+

|

| 28 |

+

Parameters:

|

| 29 |

+

matrix (numpy.ndarray): The input matrix.

|

| 30 |

+

|

| 31 |

+

Returns:

|

| 32 |

+

numpy.ndarray: The Z normalized matrix.

|

| 33 |

+

"""

|

| 34 |

+

mean = np.mean(matrix, axis=1, keepdims=True)

|

| 35 |

+

std = np.std(matrix, axis=1, keepdims=True)

|

| 36 |

+

normalized_matrix = (matrix - mean) / std

|

| 37 |

+

return normalized_matrix

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

def l2_normalize_tensors(tensor_tuple):

|

| 41 |

+

"""

|

| 42 |

+

Applies L2 normalization on the last two dimensions for each tensor in a tuple.

|

| 43 |

+

|

| 44 |

+

Parameters:

|

| 45 |

+

tensor_tuple (tuple of torch.Tensor): A tuple containing N tensors, each of shape (1, k, 30, 30).

|

| 46 |

+

|

| 47 |

+

Returns:

|

| 48 |

+

tuple of torch.Tensor: A tuple containing N L2-normalized tensors.

|

| 49 |

+

"""

|

| 50 |

+

normalized_tensors = []

|

| 51 |

+

for tensor in tensor_tuple:

|

| 52 |

+

# Ensure the tensor is a floating-point type

|

| 53 |

+

tensor = tensor.float()

|

| 54 |

+

|

| 55 |

+

# Calculate L2 norm on the last two dimensions, keeping the dimensions using keepdim=True

|

| 56 |

+

l2_norm = torch.linalg.norm(tensor, dim=(-2, -1), keepdim=True)

|

| 57 |

+

|

| 58 |

+

# Apply L2 normalization

|

| 59 |

+

normalized_tensor = tensor / (

|

| 60 |

+

l2_norm + 1e-7) # Small value to avoid division by zero

|

| 61 |

+

|

| 62 |

+

normalized_tensors.append(normalized_tensor)

|

| 63 |

+

|

| 64 |

+

return tuple(normalized_tensors)



def z_normalize_tensors(tensor_tuple):
    """
    Applies Z-normalization over the last two dimensions of each tensor in a tuple.

    Parameters:
        tensor_tuple (tuple of torch.Tensor): A tuple containing N tensors, each of shape (1, k, 30, 30).

    Returns:
        tuple of torch.Tensor: A tuple containing N Z-normalized tensors.
    """
    normalized_tensors = []
    for tensor in tensor_tuple:
        # Ensure the tensor is a floating-point type
        tensor = tensor.float()

        # Calculate mean and std over the last two dimensions
        # (torch's std applies Bessel's correction, i.e. divides by N - 1, by default)
        mean = tensor.mean(dim=(-2, -1), keepdim=True)
        std = tensor.std(dim=(-2, -1), keepdim=True)

        # Apply Z-normalization; the small constant avoids division by zero
        normalized_tensor = (tensor - mean) / (std + 1e-7)

        normalized_tensors.append(normalized_tensor)

    return tuple(normalized_tensors)
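One subtlety is worth checking in a sketch: `torch.Tensor.std` applies Bessel's correction (divides by N - 1) by default, so each normalized map has unit *sample* standard deviation rather than unit population standard deviation. The NumPy mirror below (hypothetical name `z_normalize_maps`) reproduces this with `ddof=1`.

```python
import numpy as np


def z_normalize_maps(attn: np.ndarray, eps: float = 1e-7) -> np.ndarray:
    """NumPy mirror: Z-normalize each (H, W) map over the last two axes."""
    attn = attn.astype(np.float64)
    mean = attn.mean(axis=(-2, -1), keepdims=True)
    # ddof=1 mimics torch.Tensor.std's default Bessel correction (divide by N - 1)
    std = attn.std(axis=(-2, -1), ddof=1, keepdims=True)
    return (attn - mean) / (std + eps)


rng = np.random.default_rng(0)
att = rng.random((1, 4, 30, 30))
z = z_normalize_maps(att)
print(z.mean(axis=(-2, -1)))         # ~0 for every map
print(z.std(axis=(-2, -1), ddof=1))  # ~1 with the unbiased estimator
print(z.std(axis=(-2, -1), ddof=0))  # slightly below 1: sqrt(899 / 900)
```

For a 30 x 30 map (N = 900), the population standard deviation of the output is about sqrt(899/900), roughly 0.9994; the difference only matters for very small maps.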



def apply_temperature_to_attention_tensors(tensor_tuple, temperature=1.0):
    """
    Applies temperature scaling to the attention weights in each tensor in a tuple.

    Parameters:
        tensor_tuple (tuple of torch.Tensor): A tuple containing N tensors,
            each of shape (1, k, 30, 30).
        temperature (float): Temperature parameter to control the sharpness
            of the attention weights. Default is 1.0.

    Returns:
        tuple of torch.Tensor: A tuple containing N tensors with scaled attention weights.
    """
    scaled_attention_tensors = []

    for tensor in tensor_tuple:
        # Ensure the tensor is a floating-point type
        tensor = tensor.float()

        # Flatten the last two dimensions so softmax runs over each whole map
        flattened_tensor = tensor.reshape(tensor.shape[0], tensor.shape[1], -1)

        # Apply temperature scaling and softmax along the last dimension
        # (temperature < 1 sharpens the distribution, > 1 flattens it)
        scaled_attention = flattened_tensor / temperature
        scaled_attention = F.softmax(scaled_attention, dim=-1)

        # Reshape to original shape
        scaled_attention = scaled_attention.view_as(tensor)

        scaled_attention_tensors.append(scaled_attention)

    return tuple(scaled_attention_tensors)
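Temperature scaling followed by a softmax over the flattened map can be illustrated in plain NumPy. `temperature_softmax_maps` is a hypothetical mirror of the function above that uses a max-subtracted softmax for numerical stability. It shows that each map sums to 1 and that a lower temperature concentrates the probability mass.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtracting the max improves numerical stability without changing the result
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def temperature_softmax_maps(attn: np.ndarray, temperature: float = 1.0) -> np.ndarray:
    """NumPy mirror: softmax over each flattened (H, W) map after dividing by T."""
    b, k = attn.shape[:2]
    flattened = attn.reshape(b, k, -1) / temperature
    return softmax(flattened, axis=-1).reshape(attn.shape)


rng = np.random.default_rng(0)
att = rng.normal(size=(1, 2, 30, 30))
sharp = temperature_softmax_maps(att, temperature=0.1)
smooth = temperature_softmax_maps(att, temperature=10.0)
print(sharp.sum(axis=(-2, -1)))  # each map sums to 1
print(sharp.max(), smooth.max())  # lower temperature gives a more peaked map
```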



def shorten_att(tensor_tuple, length=30):
    """Crop each attention tensor to its top-left (length x length) block."""
    shortened_tensors = []
    for tensor in tensor_tuple:
        shortened_tensors.append(tensor[:, :, :length, :length])
    return tuple(shortened_tensors)
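`shorten_att` relies on basic slicing, which clamps out-of-range stop indices instead of raising an error. The NumPy sketch below (hypothetical name `crop_maps`) shows that a map smaller than `length` is returned unchanged; PyTorch basic slicing is expected to behave the same way.

```python
import numpy as np


def crop_maps(tensors, length: int = 30):
    """Keep the top-left (length x length) block of each (B, K, T, T) map."""
    return tuple(t[:, :, :length, :length] for t in tensors)


a = np.zeros((1, 4, 256, 256))
b = np.zeros((1, 4, 20, 20))
cropped = crop_maps((a, b), length=30)
print([c.shape for c in cropped])  # the 20 x 20 map is left as-is
```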



def keep_top_k(matrix, k=6):
    """
    Keep only the top k values in each row and set the rest to 0.

    Parameters:
        matrix (numpy.ndarray): The input matrix.
        k (int): The number of top values to keep in each row.

    Returns:
        numpy.ndarray: The transformed matrix.
    """
    # argpartition moves the indices of the k largest values (in arbitrary order) to the end
    topk_indices_per_row = np.argpartition(matrix, -k, axis=1)[:, -k:]
    result_matrix = np.zeros_like(matrix)

    for i in range(matrix.shape[0]):
        result_matrix[i, topk_indices_per_row[i]] = matrix[i, topk_indices_per_row[i]]
    return result_matrix
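The per-row loop in `keep_top_k` can also be written without a Python loop. This is an alternative sketch (hypothetical name `keep_top_k_vectorized`), not the original code: it scatters the selected values with `np.put_along_axis`. Like the original, it assumes `k` does not exceed the number of columns, and ties are broken arbitrarily by `np.argpartition`.

```python
import numpy as np


def keep_top_k_vectorized(matrix: np.ndarray, k: int = 6) -> np.ndarray:
    """Zero everything except the k largest entries in each row."""
    # Indices of the k largest values per row (unordered), shape (rows, k)
    idx = np.argpartition(matrix, -k, axis=1)[:, -k:]
    result = np.zeros_like(matrix)
    # Gather the selected values and scatter them back row-wise
    np.put_along_axis(result, idx, np.take_along_axis(matrix, idx, axis=1), axis=1)
    return result


m = np.array([[0.1, 0.9, 0.3, 0.7],
              [5.0, 1.0, 4.0, 2.0]])
out = keep_top_k_vectorized(m, k=2)
print(out)
```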



def test_case_forward_enc_perceiver_tf_dec_t5():
    import torch
    from model.ymt3 import YourMT3
    from config.config import audio_cfg, model_cfg, shared_cfg
    model_cfg["encoder_type"] = "perceiver-tf"
    model_cfg["encoder"]["perceiver-tf"]["attention_to_channel"] = True
    model_cfg["encoder"]["perceiver-tf"]["num_latents"] = 24
    model_cfg["decoder_type"] = "t5"
    model_cfg["pre_decoder_type"] = "default"

    audio_cfg["codec"] = "spec"
    audio_cfg["hop_length"] = 300
    model = YourMT3(audio_cfg=audio_cfg, model_cfg=model_cfg)
    model.eval()

    # x = torch.randn(2, 1, 32767)
    # labels = torch.randint(0, 400, (2, 1024), requires_grad=False)

    # # forward
    # output = model.forward(x, labels)

    # # inference
    # result = model.inference(x, None)


    # display latents
    # Load trained weights, skipping checkpoint entries whose keys contain 'pitchshift'
    checkpoint = torch.load(
        "../logs/ymt3/ptf_all_cross_rebal5_spec300_xk2_amp0811_edr_005_attend_c_full_plus_b52/checkpoints/model.ckpt",
        map_location="cpu")
    state_dict = checkpoint['state_dict']
    new_state_dict = {
        k: v
        for k, v in state_dict.items() if 'pitchshift' not in k
    }
    model.load_state_dict(new_state_dict, strict=False)

    latents = model.encoder.latent_array.latents.detach().numpy()
    import matplotlib.pyplot as plt
    import numpy as np
    from sklearn.metrics.pairwise import cosine_similarity
    # Pairwise cosine similarity between the learned latent vectors (rows of `latents`)
    cos = cosine_similarity(latents)


    from utils.data_modules import AMTDataModule
    from einops import rearrange
    # Load the Slakh test split and take item 7 of the first test dataset
    dm = AMTDataModule(data_preset_multi={"presets": ["slakh"]})
    dm.setup("test")
    dl = dm.test_dataloader()
    ds = list(dl.values())[0].dataset
    audio, notes, tokens, _ = ds.__getitem__(7)
    # Select index 16 along the first axis; indexing with a list keeps that axis
    x = audio[[16], ::]
    label = tokens[[16], :]
    # spectrogram
    x_spec = model.spectrogram(x)
    plt.imshow(x_spec[0].detach().numpy().T, aspect='auto', origin='lower')
    plt.title("spectrogram")
    plt.xlabel('time step')
    plt.ylabel('frequency bin')
    plt.show()

    x_conv = model.pre_encoder(x_spec)
    # Create a larger figure (adjust width and height as needed)
    plt.figure(figsize=(15, 10))
    plt.subplot(2, 4, 1)
    plt.imshow(x_spec[0].detach().numpy().T, aspect='auto', origin='lower')
    plt.title("spectrogram")
    plt.xlabel('time step')
    plt.ylabel('frequency bin')
    # Panels 2-8: selected channels of the pre-encoder (conv) output
    for pos, ch in enumerate([0, 42, 80, 11, 20, 77, 90], start=2):
        plt.subplot(2, 4, pos)
        plt.imshow(x_conv[0][:, :, ch].detach().numpy().T,
                   aspect='auto',
                   origin='lower')
        plt.title("conv(spec), ch=0" if ch == 0 else f"ch={ch}")
        plt.xlabel('time step')
        plt.ylabel('F')
    plt.tight_layout()
    plt.show()


    # encoding
    output = model.encoder(inputs_embeds=x_conv,
                           output_hidden_states=True,
                           output_attentions=True)
    enc_hs_all = output["hidden_states"]
    att = output["attentions"]
    catt = output["cross_attentions"]
    enc_hs_last = enc_hs_all[2]


    # enc_hs: time-varying encoder hidden state
    # Columns: hidden states B0, B1, B2; rows: hidden dims d=21 and d=127
    for b in range(3):
        for row, d in enumerate([21, 127]):
            plt.subplot(2, 3, row * 3 + b + 1)
            plt.imshow(enc_hs_all[b][0][:, :, d].detach().numpy().T)
            plt.colorbar(orientation='horizontal')
            title = f'B{b}, d{d}'
            plt.title('ENC_HS ' + title if (b, d) == (0, 21) else title)
            plt.ylabel('latent k')
            plt.xlabel('t')
    plt.tight_layout()
    plt.show()


    enc_hs_proj = model.pre_decoder(enc_hs_last)
    plt.imshow(enc_hs_proj[0].detach().numpy())
    plt.title(
        'ENC_HS_PROJ: linear projection of encoder output, which is used for enc-dec cross attention'
    )
    plt.colorbar(orientation='horizontal')
    plt.ylabel('latent k')
    plt.xlabel('d')
    plt.show()


    plt.subplot(221)
    plt.imshow(enc_hs_all[2][0][0, :, :].detach().numpy(), aspect='auto')
    plt.title('enc_hs, t=0')
    plt.ylabel('latent k')
    plt.xlabel('d')
    plt.subplot(222)
    plt.imshow(enc_hs_all[2][0][10, :, :].detach().numpy(), aspect='auto')
    plt.title('enc_hs, t=10')
    plt.ylabel('latent k')
    plt.xlabel('d')
    plt.subplot(223)