Unnamed: 0 (int64, 0 to 832k) | id (float64, 2.49B to 32.1B) | type (string, 1 class) | created_at (string, length 19) | repo (string, length 5 to 112) | repo_url (string, length 34 to 141) | action (string, 3 classes) | title (string, length 1 to 957) | labels (string, length 4 to 795) | body (string, length 1 to 259k) | index (string, 12 classes) | text_combine (string, length 96 to 259k) | label (string, 2 classes) | text (string, length 96 to 252k) | binary_label (int64, 0 to 1) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

76,852 | 3,497,312,665 | IssuesEvent | 2016-01-06 00:15:27 | IQSS/dataverse | https://api.github.com/repos/IQSS/dataverse | opened | Dataverse: Edit General Info, cannot disable selected blocks by Use metadata fields from parent. | Component: UX & Upgrade Priority: Medium Status: Dev Type: Bug |

Related #1473

If in a sub dv you choose specific metadata blocks, then later decide to use parent blocks again, simply checking use parent and saving doesn't work, though it acts like it does: clears check boxes and saves but revisiting settings shows old values still there.

The way to deselect is to first deselect each selected block, save, then select use parent, save. Not obvious. | 1.0 | Dataverse: Edit General Info, cannot disable selected blocks by Use metadata fields from parent. -

Related #1473

If in a sub dv you choose specific metadata blocks, then later decide to use parent blocks again, simply checking use parent and saving doesn't work, though it acts like it does: clears check boxes and saves but revisiting settings shows old values still there.

The way to deselect is to first deselect each selected block, save, then select use parent, save. Not obvious. | priority | dataverse edit general info cannot disable selected blocks by use metadata fields from parent related if in a sub dv you choose specific metadata blocks then later decide to use parent blocks again simply checking use parent and saving doesn t work though it acts like it does clears check boxes and saves but revisiting settings shows old values still there the way to deselect is to first deselect each selected block save then select use parent save not obvious | 1 |

396,624 | 11,711,678,014 | IssuesEvent | 2020-03-09 06:06:57 | AY1920S2-CS2103T-W12-4/main | https://api.github.com/repos/AY1920S2-CS2103T-W12-4/main | opened | As a user who likes experimenting, I can get a random recipe that I have added | priority.Medium status.Ongoing type.Story | .. so that I can challenge myself to cook what has been given

Command: `random`

| 1.0 | As a user who likes experimenting, I can get a random recipe that I have added - .. so that I can challenge myself to cook what has been given

Command: `random`

| priority | as a user who likes experimenting i can get a random recipe that i have added so that i can challenge myself to cook what has been given command random | 1 |

554,126 | 16,389,597,249 | IssuesEvent | 2021-05-17 14:36:12 | ruuvi/com.ruuvi.station | https://api.github.com/repos/ruuvi/com.ruuvi.station | closed | Starting without wifi access causes crash - 1.5.8 - defect | bug medium priority | If mobile is out of range of wifi or otherwise not connected to wifi app will crash.

May be related to issue #350 | 1.0 | Starting without wifi access causes crash - 1.5.8 - defect - If mobile is out of range of wifi or otherwise not connected to wifi app will crash.

May be related to issue #350 | priority | starting without wifi access causes crash defect if mobile is out of range of wifi or otherwise not connected to wifi app will crash may be related to issue | 1 |

497,476 | 14,371,366,516 | IssuesEvent | 2020-12-01 12:28:14 | replicate/replicate | https://api.github.com/repos/replicate/replicate | closed | Add development support for Linux | priority/medium type/bug | The development environment outlined in `CONTRIBUTING.md` currently does not support Linux systems. Fixing this would enable more developers to contribute to the project. | 1.0 | Add development support for Linux - The development environment outlined in `CONTRIBUTING.md` currently does not support Linux systems. Fixing this would enable more developers to contribute to the project. | priority | add development support for linux the development environment outlined in contributing md currently does not support linux systems fixing this would enable more developers to contribute to the project | 1 |

30,056 | 2,722,147,107 | IssuesEvent | 2015-04-14 00:24:15 | CruxFramework/crux-smart-faces | https://api.github.com/repos/CruxFramework/crux-smart-faces | closed | DialogBox without close button | bug imported Milestone-M14-C4 Module-CruxWidgets Priority-Medium TargetVersion-5.3.0 | _From [[email protected]](https://code.google.com/u/[email protected]/) on March 17, 2015 11:22:45_

DialogBox used in the showcase project does not have close button on the small view type.

_Original issue: http://code.google.com/p/crux-framework/issues/detail?id=639_ | 1.0 | DialogBox without close button - _From [[email protected]](https://code.google.com/u/[email protected]/) on March 17, 2015 11:22:45_

DialogBox used in the showcase project does not have close button on the small view type.

_Original issue: http://code.google.com/p/crux-framework/issues/detail?id=639_ | priority | dialogbox without close button from on march dialogbox used in the showcase project does not have close button on the small view type original issue | 1 |

598,710 | 18,250,675,389 | IssuesEvent | 2021-10-02 06:22:23 | FantasticoFox/VerifyPage | https://api.github.com/repos/FantasticoFox/VerifyPage | closed | Only show numbers of revision of the page file in question instead of backend rev_id's | medium priority feature UX |

The backend revision IDs are only important for debugging purposes (so it is best to have a debugging option to enable them). What is useful for the user is to understand how many revisions the local file has. | 1.0 | Only show numbers of revision of the page file in question instead of backend rev_id's - 

The backend revision IDs are only important for debugging purposes (so it is best to have a debugging option to enable them). What is useful for the user is to understand how many revisions the local file has. | priority | only show numbers of revision of the page file in question instead of backend rev id s the backend revision ids are only important for debugging purposes so it is best to have a debugging option to enable them what is useful for the user is to understand how many revisions the local file has | 1 |

671,022 | 22,738,969,675 | IssuesEvent | 2022-07-07 00:26:50 | PolyhedralDev/TerraOverworldConfig | https://api.github.com/repos/PolyhedralDev/TerraOverworldConfig | opened | Global heightmap refactor | enhancement priority=medium major | Rework all terrain to use a global height map, rather than determining general height via biome distribution. This will make height variation look significantly better as terrain won't need to be interpolated so much. Biome specific detailing can be done by different EQs that utilize the heightmap in different ways.

Here are some examples of an early implementation

| 1.0 | Global heightmap refactor - Rework all terrain to use a global height map, rather than determining general height via biome distribution. This will make height variation look significantly better as terrain won't need to be interpolated so much. Biome specific detailing can be done by different EQs that utilize the heightmap in different ways.

Here are some examples of an early implementation

| priority | global heightmap refactor rework all terrain to use a global height map rather than determining general height via biome distribution this will make height variation look significantly better as terrain won t need to be interpolated so much biome specific detailing can be done by different eqs that utilize the heightmap in different ways here are some examples of an early implementation | 1 |

30,370 | 2,723,600,757 | IssuesEvent | 2015-04-14 13:36:54 | CruxFramework/crux-widgets | https://api.github.com/repos/CruxFramework/crux-widgets | closed | ClassPathResolver section in UserManual is out of date | bug imported Milestone-3.0.0 Priority-Medium Wiki | _From [[email protected]](https://code.google.com/u/108972312674998482139/) on May 21, 2010 16:20:51_

What steps will reproduce the problem? 1.Go to Wiki/ UserManual 2.Check instructions for creating a WeblogicClassPathResolver

3.Check method public URL findWebBaseDir()

The document says to override method public URL findWebBaseDir(). However

the class ClassPathResolverImpl doesn't have this method. It has a similar

method:

public URL[] findWebBaseDirs().

Seems like this section of the UserManual is out of date. Could you guys

update it?

Cheers

B

_Original issue: http://code.google.com/p/crux-framework/issues/detail?id=115_ | 1.0 | ClassPathResolver section in UserManual is out of date - _From [[email protected]](https://code.google.com/u/108972312674998482139/) on May 21, 2010 16:20:51_

What steps will reproduce the problem? 1.Go to Wiki/ UserManual 2.Check instructions for creating a WeblogicClassPathResolver

3.Check method public URL findWebBaseDir()

The document says to override method public URL findWebBaseDir(). However

the class ClassPathResolverImpl doesn't have this method. It has a similar

method:

public URL[] findWebBaseDirs().

Seems like this section of the UserManual is out of date. Could you guys

update it?

Cheers

B

_Original issue: http://code.google.com/p/crux-framework/issues/detail?id=115_ | priority | classpathresolver section in usermanual is out of date from on may what steps will reproduce the problem go to wiki usermanual check instructions for creating a weblogicclasspathresolver check method public url findwebbasedir the document says to override method public url findwebbasedir however the class classpathresolverimpl doesn t have this method it has a similar method public url findwebbasedirs seems like this section of the usermanual is out of date could you guys update it cheers b original issue | 1 |

524,357 | 15,212,062,272 | IssuesEvent | 2021-02-17 09:55:19 | staxrip/staxrip | https://api.github.com/repos/staxrip/staxrip | closed | NVENC and QSVENC Subtitles file option must be removed | added/fixed/done bug priority medium | **Describe the bug**

The option Other > Subtitle File must be removed from:

- NVEnc: h264, h265

- QSVEnc: h264, h265

because this option muxes (**not hardcodes!**) a subtitle file into the output of NVEnc and QSVEnc. Since in StaxRip the output of the encoder is *.h264 or *.h265, this option causes a crash.

| 1.0 | NVENC and QSVENC Subtitles file option must be removed - **Describe the bug**

The option Other > Subtitle File must be removed from:

- NVEnc: h264, h265

- QSVEnc: h264, h265

because this option muxes (**not hardcodes!**) a subtitle file into the output of NVEnc and QSVEnc. Since in StaxRip the output of the encoder is *.h264 or *.h265, this option causes a crash.

| priority | nvenc and qsvenc subtitles file option must be removed describe the bug the option other subtitle file must be removed from nvenc qsvenc because this option muxes not hardcodes a subtitle file into the output of nvenc and qsvenc since in staxrip the output of the encoder is or this option causes a crash | 1 |

57,630 | 3,083,237,308 | IssuesEvent | 2015-08-24 07:30:21 | magro/memcached-session-manager | https://api.github.com/repos/magro/memcached-session-manager | closed | Support context configured with cookies="false" | bug imported Milestone-1.6.5 Priority-Medium | _From [[email protected]](https://code.google.com/u/102339389615967637599/) on April 02, 2013 09:22:14_

<b>What steps will reproduce the problem?</b>

1. tomcat forbid cookies

/conf/context.xml:

<Context cookies="false">

<!-- Default set of monitored resources -->

<WatchedResource>WEB-INF/web.xml</WatchedResource>

<Manager className="de.javakaffee.web.msm.MemcachedBackupSessionManager"

memcachedNodes="n1:192.168.1.55:11211,n2:192.168.1.56:11211"

sticky="false"

sessionBackupAsync="false"

requestUriIgnorePattern=".*\.(ico|png|gif|jpg|css|js)$"

transcoderFactoryClass="de.javakaffee.web.msm.JavaSerializationTranscoderFactory"

/>

</Context>

2.start tomcat server,do Login request,

i put customer info in session,login success

but i do other action,like query customer info with "http://ip:port:/something;jsessionid=46A87DE63835612CAF557AF34013E18D-n2";

fail,note i'm not login.

why memcached do not save Session when tomcat forbid cookies?

3.logs:

2013-4-2 11:56:08 de.javakaffee.web.msm.SessionIdFormat createSessionId

良好: Creating new session id with orig id 'ping' and memcached id 'n1'.

2013-4-2 11:56:08 de.javakaffee.web.msm.NodeAvailabilityCache updateIsNodeAvailable

良好: CacheLoader returned node availability 'true' for node 'n1'.

2013-4-2 11:56:08 de.javakaffee.web.msm.SessionIdFormat createSessionId

良好: Creating new session id with orig id 'E6636323F89B006F4E86DEA12FC02653' and memcached id 'n1'.

2013-4-2 11:56:08 de.javakaffee.web.msm.MemcachedSessionService createSession

良好: Created new session with id E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.MemcachedSessionService backupSession

良好: No session found in session map for E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.LockingStrategy onBackupWithoutLoadedSession

警告: Found no validity info for session id E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.RequestTrackingHostValve logDebugResponseCookie

良好: Request finished, with Set-Cookie header: JSESSIONID=E6636323F89B006F4E86DEA12FC02653-n1; Path=/; HttpOnly

2013-4-2 11:56:09 de.javakaffee.web.msm.RequestTrackingHostValve invoke

良好: <<<<<< Request finished: POST /ark/client/customer/login ==================

2013-4-2 11:56:09 de.javakaffee.web.msm.MemcachedSessionService backupSession

良好: No session found in session map for E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.LockingStrategy onBackupWithoutLoadedSession

警告: Found no validity info for session id E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.RequestTrackingHostValve logDebugResponseCookie

良好: Request finished, with Set-Cookie header: JSESSIONID=E6636323F89B006F4E86DEA12FC02653-n1; Path=/; HttpOnly

2013-4-2 11:56:09 de.javakaffee.web.msm.RequestTrackingHostValve invoke

良好: <<<<<< Request finished: POST /ark/client/customer/login ==================

2013-4-2 11:56:09 de.javakaffee.web.msm.MemcachedSessionService backupSession

良好: No session found in session map for E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.LockingStrategy onBackupWithoutLoadedSession

警告: Found no validity info for session id E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.RequestTrackingHostValve logDebugResponseCookie

良好: Request finished, with Set-Cookie header: JSESSIONID=E6636323F89B006F4E86DEA12FC02653-n1; Path=/; HttpOnly

2013-4-2 11:56:09 de.javakaffee.web.msm.RequestTrackingHostValve invoke

_Original issue: http://code.google.com/p/memcached-session-manager/issues/detail?id=159_ | 1.0 | Support context configured with cookies="false" - _From [[email protected]](https://code.google.com/u/102339389615967637599/) on April 02, 2013 09:22:14_

<b>What steps will reproduce the problem?</b>

1. tomcat forbid cookies

/conf/context.xml:

<Context cookies="false">

<!-- Default set of monitored resources -->

<WatchedResource>WEB-INF/web.xml</WatchedResource>

<Manager className="de.javakaffee.web.msm.MemcachedBackupSessionManager"

memcachedNodes="n1:192.168.1.55:11211,n2:192.168.1.56:11211"

sticky="false"

sessionBackupAsync="false"

requestUriIgnorePattern=".*\.(ico|png|gif|jpg|css|js)$"

transcoderFactoryClass="de.javakaffee.web.msm.JavaSerializationTranscoderFactory"

/>

</Context>

2.start tomcat server,do Login request,

i put customer info in session,login success

but i do other action,like query customer info with "http://ip:port:/something;jsessionid=46A87DE63835612CAF557AF34013E18D-n2";

fail,note i'm not login.

why memcached do not save Session when tomcat forbid cookies?

3.logs:

2013-4-2 11:56:08 de.javakaffee.web.msm.SessionIdFormat createSessionId

良好: Creating new session id with orig id 'ping' and memcached id 'n1'.

2013-4-2 11:56:08 de.javakaffee.web.msm.NodeAvailabilityCache updateIsNodeAvailable

良好: CacheLoader returned node availability 'true' for node 'n1'.

2013-4-2 11:56:08 de.javakaffee.web.msm.SessionIdFormat createSessionId

良好: Creating new session id with orig id 'E6636323F89B006F4E86DEA12FC02653' and memcached id 'n1'.

2013-4-2 11:56:08 de.javakaffee.web.msm.MemcachedSessionService createSession

良好: Created new session with id E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.MemcachedSessionService backupSession

良好: No session found in session map for E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.LockingStrategy onBackupWithoutLoadedSession

警告: Found no validity info for session id E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.RequestTrackingHostValve logDebugResponseCookie

良好: Request finished, with Set-Cookie header: JSESSIONID=E6636323F89B006F4E86DEA12FC02653-n1; Path=/; HttpOnly

2013-4-2 11:56:09 de.javakaffee.web.msm.RequestTrackingHostValve invoke

良好: <<<<<< Request finished: POST /ark/client/customer/login ==================

2013-4-2 11:56:09 de.javakaffee.web.msm.MemcachedSessionService backupSession

良好: No session found in session map for E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.LockingStrategy onBackupWithoutLoadedSession

警告: Found no validity info for session id E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.RequestTrackingHostValve logDebugResponseCookie

良好: Request finished, with Set-Cookie header: JSESSIONID=E6636323F89B006F4E86DEA12FC02653-n1; Path=/; HttpOnly

2013-4-2 11:56:09 de.javakaffee.web.msm.RequestTrackingHostValve invoke

良好: <<<<<< Request finished: POST /ark/client/customer/login ==================

2013-4-2 11:56:09 de.javakaffee.web.msm.MemcachedSessionService backupSession

良好: No session found in session map for E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.LockingStrategy onBackupWithoutLoadedSession

警告: Found no validity info for session id E6636323F89B006F4E86DEA12FC02653-n1

2013-4-2 11:56:09 de.javakaffee.web.msm.RequestTrackingHostValve logDebugResponseCookie

良好: Request finished, with Set-Cookie header: JSESSIONID=E6636323F89B006F4E86DEA12FC02653-n1; Path=/; HttpOnly

2013-4-2 11:56:09 de.javakaffee.web.msm.RequestTrackingHostValve invoke

_Original issue: http://code.google.com/p/memcached-session-manager/issues/detail?id=159_ | priority | support context configured with cookies false from on april what steps will reproduce the problem tomcat forbid cookies conf context xml lt context cookies false gt lt default set of monitored resources gt lt watchedresource gt web inf web xml lt watchedresource gt lt manager classname de javakaffee web msm memcachedbackupsessionmanager memcachednodes sticky false sessionbackupasync false requesturiignorepattern ico png gif jpg css js transcoderfactoryclass de javakaffee web msm javaserializationtranscoderfactory gt lt context gt start tomcat server do login request i put customer info in session login success but i do other action like query customer info with fail note i m not login why memcached do not save session when tomcat forbid cookies logs de javakaffee web msm sessionidformat createsessionid 良好 creating new session id with orig id ping and memcached id de javakaffee web msm nodeavailabilitycache updateisnodeavailable 良好 cacheloader returned node availability true for node de javakaffee web msm sessionidformat createsessionid 良好 creating new session id with orig id and memcached id de javakaffee web msm memcachedsessionservice createsession 良好 created new session with id de javakaffee web msm memcachedsessionservice backupsession 良好 no session found in session map for de javakaffee web msm lockingstrategy onbackupwithoutloadedsession 警告 found no validity info for session id de javakaffee web msm requesttrackinghostvalve logdebugresponsecookie 良好 request finished with set cookie header jsessionid path httponly de javakaffee web msm requesttrackinghostvalve invoke 良好 lt lt lt lt lt lt request finished post ark client customer login de javakaffee web msm memcachedsessionservice backupsession 良好 no session found in session map for de javakaffee web msm lockingstrategy onbackupwithoutloadedsession 警告 found no validity info for session id de javakaffee web msm 
requesttrackinghostvalve logdebugresponsecookie 良好 request finished with set cookie header jsessionid path httponly de javakaffee web msm requesttrackinghostvalve invoke 良好 lt lt lt lt lt lt request finished post ark client customer login de javakaffee web msm memcachedsessionservice backupsession 良好 no session found in session map for de javakaffee web msm lockingstrategy onbackupwithoutloadedsession 警告 found no validity info for session id de javakaffee web msm requesttrackinghostvalve logdebugresponsecookie 良好 request finished with set cookie header jsessionid path httponly de javakaffee web msm requesttrackinghostvalve invoke original issue | 1 |
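
The record above involves two things that are easy to check by hand: the `;jsessionid=` path parameter used when cookies are disabled, and the `<orig id>-<memcached id>` session id shape visible in the log lines. A minimal Python sketch of both follows. The function names are hypothetical, the host in the usage note is a placeholder, and this is not how memcached-session-manager itself parses ids.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit


def extract_jsessionid(url: str) -> Optional[str]:
    """Return the value of a ;jsessionid=... path parameter, or None."""
    path = urlsplit(url).path
    for segment in path.split("/"):
        # Path parameters follow the segment name, separated by ';'.
        for param in segment.split(";")[1:]:
            key, _, value = param.partition("=")
            if key.lower() == "jsessionid" and value:
                return value
    return None


def split_session_id(session_id: str) -> Tuple[str, Optional[str]]:
    """Split '<orig id>-<memcached id>' into its two parts.

    The shape is taken from the log lines in the report; an id without
    a '-' suffix is returned unchanged with no node id.
    """
    orig_id, sep, node_id = session_id.rpartition("-")
    if not sep:
        return session_id, None
    return orig_id, node_id
```

For example, `extract_jsessionid("http://localhost:8080/something;jsessionid=46A87DE63835612CAF557AF34013E18D-n2")` yields the id from the follow-up request, and `split_session_id` on that value separates the node suffix `n2`, which differs from the `n1` suffix of the session created at login in the log.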

532,073 | 15,529,417,577 | IssuesEvent | 2021-03-13 15:07:42 | AY2021S2-CS2103-T14-2/tp | https://api.github.com/repos/AY2021S2-CS2103-T14-2/tp | opened | Improved Search Feature - search by ratings | priority.Medium | As a user, I can search for food diary entries by ratings, so that I can filter out the good places to eat at. | 1.0 | Improved Search Feature - search by ratings - As a user, I can search for food diary entries by ratings, so that I can filter out the good places to eat at. | priority | improved search feature search by ratings as a user i can search for food diary entries by ratings so that i can filter out the good places to eat at | 1 |

607,505 | 18,784,025,124 | IssuesEvent | 2021-11-08 10:11:47 | DiscordDungeons/Bugs | https://api.github.com/repos/DiscordDungeons/Bugs | closed | Reaping Ring Doesn't Work | Bug Bot Priority: Medium | **Describe the bug**

If a ring has the "reaping" attribute it doesn't do what it's supposed to do with boosts.

**To Reproduce**

Steps to reproduce the behavior:

1. Use a ring with reaping and see that you do not get 1.5x the amount in checking plants item - felix's plant ring

**Expected behavior**

For the boost to actually work

**Version**

(all versions since you need to add for all attributes to work xd, the issue happened with mineboost ring, salvaging ring xd)

4.15.4

**Additional context**

Best to actually go through all attributes that the ring can have and pre-add them all to work so any future ring that has a "new" boost that no one has will work | 1.0 | Reaping Ring Doesn't Work - **Describe the bug**

If a ring has the "reaping" attribute it doesn't do what it's supposed to do with boosts.

**To Reproduce**

Steps to reproduce the behavior:

1. Use a ring with reaping and see that you do not get 1.5x the amount in checking plants item - felix's plant ring

**Expected behavior**

For the boost to actually work

**Version**

(all versions since you need to add for all attributes to work xd, the issue happened with mineboost ring, salvaging ring xd)

4.15.4

**Additional context**

Best to actually go through all attributes that the ring can have and pre-add them all to work so any future ring that has a "new" boost that no one has will work | priority | reaping ring doesn t work describe the bug if a ring has the reaping attribute it doesn t do what it s supposed to do with boosts to reproduce steps to reproduce the behavior use a ring with reaping and see that you do not get the amount in checking plants item felix s plant ring expected behavior for the boost to actually work version all versions since you need to add for all attributes to work xd the issue happened with mineboost ring salvaging ring xd additional context best to actually go through all attributes that the ring can have and pre add them all to work so any future ring that has a new boost that no one has will work | 1 |
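
The suggestion in the record above (register every ring attribute up front so that new boosts work without extra code) could look roughly like the Python sketch below. Only the "reaping" attribute and its 1.5x factor come from the report; the table name, the function name, and the choice to round down are assumptions, and the bot's real code is not shown in the source.

```python
from typing import Iterable

# Hypothetical lookup table. Only "reaping" and its 1.5x factor are taken
# from the report; other attributes would be added here with their factors.
ATTRIBUTE_BOOSTS = {"reaping": 1.5}


def boosted_amount(base_amount: int, ring_attributes: Iterable[str]) -> int:
    """Apply every known ring attribute boost to a base amount."""
    multiplier = 1.0
    for attribute in ring_attributes:
        # Unknown attributes fall back to 1.0, so they never break the result.
        multiplier *= ATTRIBUTE_BOOSTS.get(attribute, 1.0)
    # Assumption: fractional results are rounded down.
    return int(base_amount * multiplier)
```

With this shape, supporting a new attribute means adding one table entry rather than new branching logic.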

665,067 | 22,298,284,111 | IssuesEvent | 2022-06-13 05:53:22 | OpenFunction/OpenFunction | https://api.github.com/repos/OpenFunction/OpenFunction | closed | Let functions be triggered by GitHub events | Feature priority/medium | **Proposal**

- Motivation

GitHub is a mainstream code repository, and many developers choose to push their project on GitHub. To meet the needs of the CI\CD of the users, GitHub provides [Webhooks and events](https://docs.github.com/en/developers/webhooks-and-events).

OpenFunction's event framework is dedicated to improving OpenFunction's event responsiveness, so I thought it would be useful to introduce a solution for triggering OpenFunction functions from GitHub events.

- Goals

OpenFunction functions can be triggered by GitHub events.

- Example

In this case, it was necessary to introduce [Argo Events](https://argoproj.github.io/argo-events/), which supports rich event sources, and push Github events to Nats Streaming via Argo Events' [GitHub EventSource](https://argoproj.github.io/argo-events/eventsources/setup/github/).

After that, the OpenFunction Events Trigger, which is subscribed to Nats Streaming, can fetch GitHub events from it and wake up the OpenFunction functions to handle GitHub events.

- Action Items

- Setup Argo Events

- Create a GitHub EventSource in Argo Events

- Create an Ingress to expose the EventSource service

- Configure the GitHub webhook to use the Ingress address as the callback address

- Docking OpenFunction Events EventBus with Nats Streaming enables OpenFunction Events Trigger to read events from Nats Streaming | 1.0 | Let functions be triggered by GitHub events - **Proposal**

- Motivation

GitHub is a mainstream code repository, and many developers choose to push their project on GitHub. To meet the needs of the CI\CD of the users, GitHub provides [Webhooks and events](https://docs.github.com/en/developers/webhooks-and-events).

OpenFunction's event framework is dedicated to improving OpenFunction's event responsiveness, so I thought it would be useful to introduce a solution for triggering OpenFunction functions from GitHub events.

- Goals

OpenFunction functions can be triggered by GitHub events.

- Example

In this case, it was necessary to introduce [Argo Events](https://argoproj.github.io/argo-events/), which supports rich event sources, and push Github events to Nats Streaming via Argo Events' [GitHub EventSource](https://argoproj.github.io/argo-events/eventsources/setup/github/).

After that, the OpenFunction Events Trigger, which is subscribed to Nats Streaming, can fetch GitHub events from it and wake up the OpenFunction functions to handle GitHub events.

- Action Items

- Setup Argo Events

- Create a GitHub EventSource in Argo Events

- Create an Ingress to expose the EventSource service

- Configure the GitHub webhook to use the Ingress address as the callback address

- Docking OpenFunction Events EventBus with Nats Streaming enables OpenFunction Events Trigger to read events from Nats Streaming | priority | let functions be triggered by github events proposal motivation github is a mainstream code repository and many developers choose to push their project on github to meet the needs of the ci cd of the users github provides openfunction s event framework is dedicated to improving openfunction s event responsiveness so i thought it would be useful to introduce a solution for triggering openfunction functions from github events goals openfunction functions can be triggered by github events example in this case it was necessary to introduce which supports rich event sources and push github events to nats streaming via argo events after that the openfunction events trigger which is subscribed to nats streaming can fetch github events from it and wake up the openfunction functions to handle github events action items setup argo events create a github eventsource in argo events create an ingress to expose the eventsource service configure the github webhook to use the ingress address as the callback address docking openfunction events eventbus with nats streaming enables openfunction events trigger to read events from nats streaming | 1 |
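
As an illustration of the last step in the record above, where a function is woken up to handle a GitHub event, here is a minimal Python sketch of a handler that branches on the event type. The function name and return strings are hypothetical, and it assumes the event type (the value GitHub sends in the `X-GitHub-Event` header) and the raw JSON body have already been delivered to the function. It does not use the OpenFunction or Argo Events APIs.

```python
import json


def handle_github_event(event_type: str, body: str) -> str:
    """Summarize a GitHub webhook delivery given its event type and JSON body."""
    payload = json.loads(body)
    # Most webhook payloads carry a "repository" object with "full_name".
    repo = payload.get("repository", {}).get("full_name", "unknown repository")
    if event_type == "push":
        return f"push to {payload.get('ref', 'unknown ref')} in {repo}"
    if event_type == "ping":
        return f"ping received for {repo}"
    # Anything else is acknowledged but not processed in this sketch.
    return f"ignored event '{event_type}' for {repo}"
```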

722,884 | 24,877,365,720 | IssuesEvent | 2022-10-27 20:24:25 | AY2223S1-CS2113-W12-1/tp | https://api.github.com/repos/AY2223S1-CS2113-W12-1/tp | closed | Finish User Guide (before 28th Oct) | type.Task priority.Medium | We need UG before Friday 28th for PED

Format is similar to UG ip (command, description, note, Example, Expected Outcome) | 1.0 | Finish User Guide (before 28th Oct) - We need UG before Friday 28th for PED

Format is similar to UG ip (command, description, note, Example, Expected Outcome) | priority | finish user guide before oct we need ug before friday for ped format is similar to ug ip command description note example expected outcome | 1 |

71,388 | 3,356,379,343 | IssuesEvent | 2015-11-18 20:14:48 | TechReborn/TechReborn | https://api.github.com/repos/TechReborn/TechReborn | closed | Missing texture with standard machine casing. | bug Medium priority | Techreborn: 0.5.6.1004

reborncore:1.0.0.9

forge:10.13.4.1558

ic2/3:2.2.2.791

# Enable Connected textures

B:"Enable Connected textures"=false

| 1.0 | Missing texture with standard machine casing. - Techreborn: 0.5.6.1004

reborncore:1.0.0.9

forge:10.13.4.1558

ic2/3:2.2.2.791

# Enable Connected textures

B:"Enable Connected textures"=false

| priority | missing texture with standard machine casing techreborn reborncore forge enable connected textures b enable connected textures false | 1 |

481,017 | 13,879,487,940 | IssuesEvent | 2020-10-17 14:40:29 | Unibo-PPS-1920/pps-19-motoScala | https://api.github.com/repos/Unibo-PPS-1920/pps-19-motoScala | closed | As a client I want to start a game with enemies that move with advanced ai based on the difficulty i select | backlog item priority:medium | - [x] #110

- [x] different ai behaviours

| 1.0 | As a client I want to start a game with enemies that move with advanced ai based on the difficulty i select - - [x] #110

- [x] different ai behaviours

| priority | as a client i want to start a game with enemies that move with advanced ai based on the difficulty i select different ai behaviours | 1 |

610,858 | 18,926,776,525 | IssuesEvent | 2021-11-17 10:22:01 | davidvavra/duna-valka-assassinu | https://api.github.com/repos/davidvavra/duna-valka-assassinu | opened | Extension of the units module - armies | priority-medium | If we distinguished unit types in more detail, it would let us improve the infrastructure in several respects.

Better presentation of army parameters

Armies have the following parameters visible to players:

- name,

- strength,

- size,

- morale,

- general,

- special ability.

We can keep all of these values in a single field as part of the comment for players. For readability for players and ease of editing for us, it would be nice to have a new type, "aktivni/armada", which would additionally have the parameters above (except for the name, which we do not need to duplicate). | 1.0 | Extension of the units module - armies - If we distinguished unit types in more detail, it would let us improve the infrastructure in several respects.

Better presentation of army parameters

Armies have the following parameters visible to players:

- name,

- strength,

- size,

- morale,

- general,

- special ability.

We can keep all of these values in a single field as part of the comment for players. For readability for players and ease of editing for us, it would be nice to have a new type, "aktivni/armada", which would additionally have the parameters above (except for the name, which we do not need to duplicate). | priority | extension of the units module armies if we distinguished unit types in more detail it would let us improve the infrastructure in several respects better presentation of army parameters armies have the following parameters visible to players name strength size morale general special ability we can keep all of these values in a single field as part of the comment for players for readability for players and ease of editing for us it would be nice to have a new type aktivni armada which would additionally have the parameters above except for the name which we do not need to duplicate | 1 |
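
The proposed "aktivni/armada" unit type from the record above, with its player-visible parameters (strength, size, morale, general, special ability, plus the name the unit already has), could be modelled roughly as in this Python sketch. The class names, field types, and defaults are assumptions; the project's real data model is not shown in the source.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Unit:
    """Hypothetical base unit: every unit already has a name and a type."""
    name: str
    unit_type: str


@dataclass
class Army(Unit):
    """Hypothetical 'aktivni/armada' unit with the extra army parameters."""
    unit_type: str = "aktivni/armada"
    strength: int = 0          # assumed to be an integer
    size: int = 0              # assumed to be an integer
    morale: int = 0            # assumed to be an integer
    general: Optional[str] = None
    special_ability: Optional[str] = None
```

The name stays on the base unit only, which matches the note in the issue that it does not need to be duplicated.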

345,860 | 10,374,137,445 | IssuesEvent | 2019-09-09 08:57:17 | 52North/sos4R | https://api.github.com/repos/52North/sos4R | closed | Test other xml parsing functions and add configuration parameters | priority medium | Add some unit tests for parsing responses and then try out different available xml parsing functions.

``` r

library("XML")

?xml

?xmlParse

?xmlTreeParse # try out internal nodes!

?xmlParseDoc

```

Also try out the options setting. The vector should be part of the SOS instance.

``` r

mySOS@xmlParseDocOptions

```

For available options see http://web.mit.edu/~r/current/arch/i386_linux26/lib/R/library/XML/html/xmlParseDoc.html and http://xmlsoft.org/xmllint.html

| 1.0 | Test other xml parsing functions and add configuration parameters - Add some unit tests for parsing responses and then try out different available xml parsing functions.

``` r

library("XML")

?xml

?xmlParse

?xmlTreeParse # try out internal nodes!

?xmlParseDoc

```

Also try out the options setting. The vector should be part of the SOS instance.

``` r

mySOS@xmlParseDocOptions

```

For available options see http://web.mit.edu/~r/current/arch/i386_linux26/lib/R/library/XML/html/xmlParseDoc.html and http://xmlsoft.org/xmllint.html

| priority | test other xml parsing functions and add configuration parameters add some unit tests for parsing responses and then try out different available xml parsing functions r library xml xml xmlparse xmltreeparse try out internal nodes xmlparsedoc also try out the options setting the vector should be part of the sos instance r mysos xmlparsedocoptions for available options see and | 1 |

85,254 | 3,688,510,955 | IssuesEvent | 2016-02-25 13:13:26 | leoncastillejos/sonar | https://api.github.com/repos/leoncastillejos/sonar | closed | send email on condition fixed | priority:medium type:enhancement | When an alert event is raised, it should automatically notify when the alert condition is fixed. | 1.0 | send email on condition fixed - When an alert event is raised, it should automatically notify when the alert condition is fixed. | priority | send email on condition fixed when an alert event is raised it should automatically notify when the alert condition is fixed | 1 |

249,131 | 7,953,878,638 | IssuesEvent | 2018-07-12 04:29:12 | StrangeLoopGames/EcoIssues | https://api.github.com/repos/StrangeLoopGames/EcoIssues | closed | Storage UI Stockpile Duplication bug | Medium Priority Respond ASAP | I have 2 stockpiles that are bugged a glass and a brick stockpile which show up as doubles in the storage ui.

when I try to consolidate the items into the stockpiles it duplicates the materials.

I'm currently trying to find a fix to remove the stockpiles that duplicate items any ideas would be appreciated | 1.0 | Storage UI Stockpile Duplication bug - I have 2 stockpiles that are bugged a glass and a brick stockpile which show up as doubles in the storage ui.

when I try to consolidate the items into the stockpiles it duplicates the materials.

I'm currently trying to find a fix to remove the stockpiles that duplicate items any ideas would be appreciated | priority | storage ui stockpile duplication bug i have stockpiles that are bugged a glass and a brick stockpile which show up as doubles in the storage ui when i try to consolidate the items into the stockpiles it duplicates the materials i m currently trying to find a fix to remove the stockpiles that duplicate items any ideas would be appreciated | 1 |

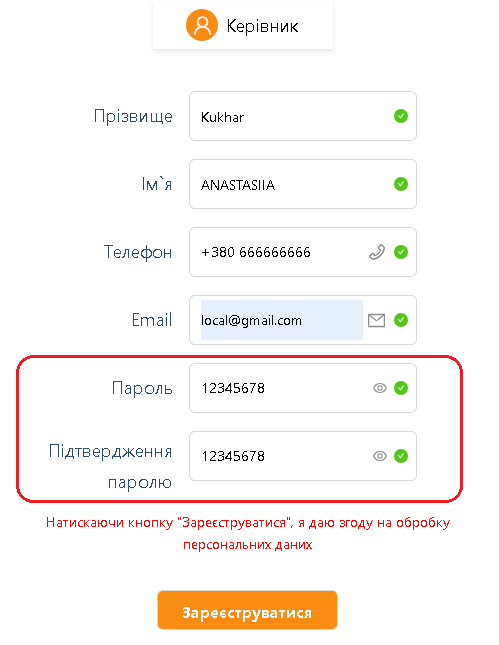

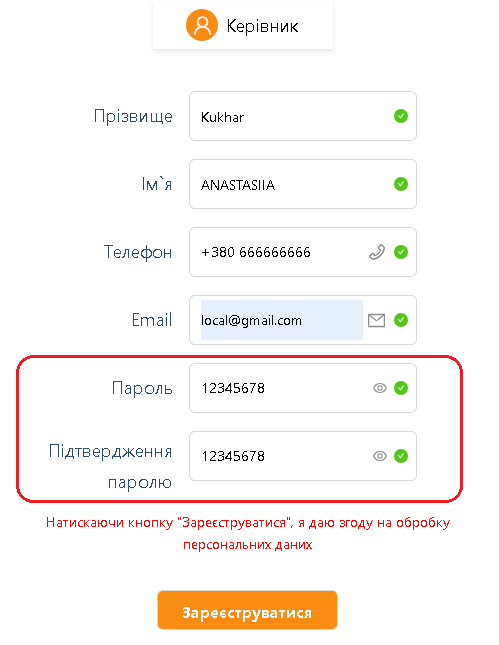

605,733 | 18,739,783,808 | IssuesEvent | 2021-11-04 12:17:33 | ita-social-projects/TeachUA | https://api.github.com/repos/ita-social-projects/TeachUA | opened | [Registration] User can register with invalid password. | bug Frontend Priority: Medium Desktop | **Environment**: Windows 10, Google Chrome Version 92.0.4515.159, (64 бит)

**Reproducible**: Always

**Build found**: Last commit

**Steps to reproduce**

1. Go to https://speak-ukrainian.org.ua/dev/

2. Click on the profile drop-down list

3. Click on the 'Зареєструватися'

4. Enter the correct data in each field (except the 'Password' field)

5. In the 'Password' field enter data: '12345678'

**Actual result**

'Password' with data '12345678' are considered correct.

**Expected result**

‘Password’ **must contain** the following LATIN characters: upper/lower-case, numbers and reserved characters (~, `, !, @, #, $, %, ^, &, (, ), _, =, +, {, }, [, ], /, |, :, ;,", <, >, ?) // RUS, UKR letters are not valid. Cannot be shorter than 8 characters and longer than 20 characters

Error message appears: “Пароль не може бути коротшим, ніж 8 та довшим, ніж 20 символів.

Пароль повинен містити великі/маленькі літери латинського алфавіту, цифри та спеціальні символи”

"User story #97

| 1.0 | [Registration] User can register with invalid password. - **Environment**: Windows 10, Google Chrome Version 92.0.4515.159, (64 бит)

**Reproducible**: Always

**Build found**: Last commit

**Steps to reproduce**

1. Go to https://speak-ukrainian.org.ua/dev/

2. Click on the profile drop-down list

3. Click on the 'Зареєструватися'

4. Enter the correct data in each field (except the 'Password' field)

5. In the 'Password' field enter data: '12345678'

**Actual result**

'Password' with data '12345678' are considered correct.

**Expected result**

‘Password’ **must contain** the following LATIN characters: upper/lower-case, numbers and reserved characters (~, `, !, @, #, $, %, ^, &, (, ), _, =, +, {, }, [, ], /, |, :, ;,", <, >, ?) // RUS, UKR letters are not valid. Cannot be shorter than 8 characters and longer than 20 characters

Error message appears: “Пароль не може бути коротшим, ніж 8 та довшим, ніж 20 символів.

Пароль повинен містити великі/маленькі літери латинського алфавіту, цифри та спеціальні символи”

"User story #97

| priority | user can register with invalid password environment windows google chrome version бит reproducible always build found last commit steps to reproduce go to click on the profile drop down list click on the зареєструватися enter the correct data in each field except the password field in the password field enter data actual result password with data are considered correct expected result ‘password’ must contain the following latin characters upper lower case numbers and reserved characters rus ukr letters are not valid cannot be shorter than characters and longer than characters error message appears “пароль не може бути коротшим ніж та довшим ніж символів пароль повинен містити великі маленькі літери латинського алфавіту цифри та спеціальні символи” user story | 1 |

141,323 | 5,434,894,673 | IssuesEvent | 2017-03-05 12:10:44 | open-serious/open-serious | https://api.github.com/repos/open-serious/open-serious | closed | Projects no longer compile on Win32 after Linux port | os.win32 priority.medium | Due to certain changes made in the engine/game codebase when porting the code to Linux, the following projects no longer compile on Windows properly:

* `Shaders`

* `MakeFONT`

* `DecodeReport`

* `EngineGUI`

* `Modeler`

* `RCon`

* `SeriousSkaStudio`

* `DedicatedServer`

* `GameGUIMP`

* `WorldEditor`

All compile errors should be fixed. | 1.0 | Projects no longer compile on Win32 after Linux port - Due to certain changes made in the engine/game codebase when porting the code to Linux, the following projects no longer compile on Windows properly:

* `Shaders`

* `MakeFONT`

* `DecodeReport`

* `EngineGUI`

* `Modeler`

* `RCon`

* `SeriousSkaStudio`

* `DedicatedServer`

* `GameGUIMP`

* `WorldEditor`

All compile errors should be fixed. | priority | projects no longer compile on after linux port due to certain changes made in the engine game codebase when porting the code to linux the following projects no longer compile on windows properly shaders makefont decodereport enginegui modeler rcon seriousskastudio dedicatedserver gameguimp worldeditor all compile errors should be fixed | 1 |

462,006 | 13,239,675,538 | IssuesEvent | 2020-08-19 04:11:20 | reizuseharu/Diana | https://api.github.com/repos/reizuseharu/Diana | opened | Add Service | [priority] medium [type] enhancement | ### Description

Add Service class

### Details

Service class needed to separate business logic from client

### Acceptance Criteria

- Service capable of accessing SRC endpoint

### Tasks

- [ ] Add Service class

- [ ] Add Unit Tests | 1.0 | Add Service - ### Description

Add Service class

### Details

Service class needed to separate business logic from client

### Acceptance Criteria

- Service capable of accessing SRC endpoint

### Tasks

- [ ] Add Service class

- [ ] Add Unit Tests | priority | add service description add service class details service class needed to separate business logic from client acceptance criteria service capable of accessing src endpoint tasks add service class add unit tests | 1 |

55,036 | 3,071,846,365 | IssuesEvent | 2015-08-19 14:17:59 | RobotiumTech/robotium | https://api.github.com/repos/RobotiumTech/robotium | closed | solo.searchButton(String text, boolean onlyVisible) is not working properly. | bug imported Priority-Medium wontfix | _From [[email protected]](https://code.google.com/u/116639303515044083042/) on April 07, 2011 22:33:16_

What steps will reproduce the problem? 1.Launch the contacts.

2.From menu-> manage contacts-> select an account which has no contacts

3.call the solo.searchButton(String text, boolean onlyVisible) api. What is the expected output? What do you see instead? Actual:

======

Returns true even though the button is not present.

Expected:

========

Should return false when button is not available. What version of the product are you using? On what operating system? Android2.3 and robotium 2.2 Please provide any additional information below.

_Original issue: http://code.google.com/p/robotium/issues/detail?id=99_ | 1.0 | solo.searchButton(String text, boolean onlyVisible) is not working properly. - _From [[email protected]](https://code.google.com/u/116639303515044083042/) on April 07, 2011 22:33:16_

What steps will reproduce the problem? 1.Launch the contacts.

2.From menu-> manage contacts-> select an account which has no contacts

3.call the solo.searchButton(String text, boolean onlyVisible) api. What is the expected output? What do you see instead? Actual:

======

Returns true even though the button is not present.

Expected:

========

Should return false when button is not available. What version of the product are you using? On what operating system? Android2.3 and robotium 2.2 Please provide any additional information below.

_Original issue: http://code.google.com/p/robotium/issues/detail?id=99_ | priority | solo searchbutton string text boolean onlyvisible is not working properly from on april what steps will reproduce the problem launch the contacts from menu manage contacts select an account which has no contacts call the solo searchbutton string text boolean onlyvisible api what is the expected output what do you see instead actual returns true even though the button is not present expected should return false when button is not available what version of the product are you using on what operating system and robotium please provide any additional information below original issue | 1 |

730,158 | 25,162,428,822 | IssuesEvent | 2022-11-10 17:49:47 | CDCgov/prime-reportstream | https://api.github.com/repos/CDCgov/prime-reportstream | closed | HHS Protect Data Issue - Blanks for MSH-3.1 and MSH-3.2 | onboarding-ops support Medium Priority Needs refinement | ## Problem statement

NIH user Krishna, found some data quality issues in HHS Protect from ReportStream Data.

For all test results coming from Intrivo, the MSH-3 segments are being left blank:

MSH-3.1 (Sending system namespace)

MSH-3.2 (Sending system OID)

The MARS specifications requires that these be populated.

## What you need to know

I reviewed 1 Intrivo HL7 file dated 10/6/2022 and it looks like there's data in those fields.

MSH

^~\&

Intrivo^2.16.840.1.113434.6.2.2.1.1.2^ISO

Intrivo^2.16.840.1.113434.6.2.10411^CLIA

CDC PRIME^2.16.840.1.114222.4.1.237821^ISO

CDC PRIME^2.16.840.1.114222.4.1.237821^ISO

20221006165232+0000

ORU^R01^ORU_R01

`b8c4a9ff-53fe-4746-8602-03092cc3dbbf`

P

2.5.1

NE

NE

USA

UNICODE UTF-8

PHLabReport-NoAck^ELR251R1_Rcvr_Prof^2.16.840.1.113883.9.11^ISO

## Acceptance criteria

- Confirm whether the MSH-3.1 and MSH-3.2 fields are blank on ReportStream side.

-Figure out a way to send that over to HHS Protect with no blanks

## To do

- [ ] ...

| 1.0 | HHS Protect Data Issue - Blanks for MSH-3.1 and MSH-3.2 - ## Problem statement

NIH user Krishna, found some data quality issues in HHS Protect from ReportStream Data.

For all test results coming from Intrivo, the MSH-3 segments are being left blank:

MSH-3.1 (Sending system namespace)

MSH-3.2 (Sending system OID)

The MARS specifications requires that these be populated.

## What you need to know

I reviewed 1 Intrivo HL7 file dated 10/6/2022 and it looks like there's data in those fields.

MSH

^~\&

Intrivo^2.16.840.1.113434.6.2.2.1.1.2^ISO

Intrivo^2.16.840.1.113434.6.2.10411^CLIA

CDC PRIME^2.16.840.1.114222.4.1.237821^ISO

CDC PRIME^2.16.840.1.114222.4.1.237821^ISO

20221006165232+0000

ORU^R01^ORU_R01

`b8c4a9ff-53fe-4746-8602-03092cc3dbbf`

P

2.5.1

NE

NE

USA

UNICODE UTF-8

PHLabReport-NoAck^ELR251R1_Rcvr_Prof^2.16.840.1.113883.9.11^ISO

## Acceptance criteria

- Confirm whether the MSH-3.1 and MSH-3.2 fields are blank on ReportStream side.

-Figure out a way to send that over to HHS Protect with no blanks

## To do

- [ ] ...

| priority | hhs protect data issue blanks for msh and msh problem statement nih user krishna found some data quality issues in hhs protect from reportstream data for all test results coming from intrivo the msh segments are being left blank msh sending system namespace msh sending system oid the mars specifications requires that these be populated what you need to know i reviewed intrivo file dated and it looks like there s data in those fields msh intrivo iso intrivo clia cdc prime iso cdc prime iso oru oru p ne ne usa unicode utf phlabreport noack rcvr prof iso acceptance criteria confirm whether the msh and msh fields are blank on reportstream side figure out a way to send that over to hhs protect with no blanks to do | 1 |

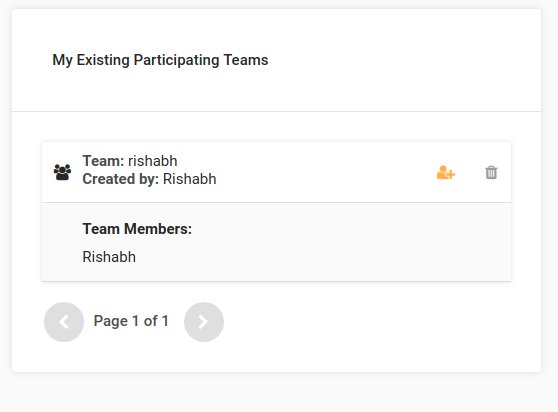

220,414 | 7,359,811,979 | IssuesEvent | 2018-03-10 11:40:14 | Cloud-CV/EvalAI | https://api.github.com/repos/Cloud-CV/EvalAI | opened | UI Improvements in Leaderboard Entry | GSOC enhancement frontend medium-difficulty new-feature priority-high | - [ ] Show the meta data fields (**Team Members** and **Method Description**) in each leaderboard entry.

Hint: Please refer the screenshot.

- [ ] The user should be able to edit the **Method Description** field if the entry is created by the same user.

| 1.0 | UI Improvements in Leaderboard Entry - - [ ] Show the meta data fields (**Team Members** and **Method Description**) in each leaderboard entry.

Hint: Please refer the screenshot.

- [ ] The user should be able to edit the **Method Description** field if the entry is created by the same user.

| priority | ui improvements in leaderboard entry show the meta data fields team members and method description in each leaderboard entry hint please refer the screenshot the user should be able to edit the method description field if the entry is created by the same user | 1 |

351,643 | 10,521,697,610 | IssuesEvent | 2019-09-30 06:53:07 | AY1920S1-CS2103T-T09-2/main | https://api.github.com/repos/AY1920S1-CS2103T-T09-2/main | opened | As a student who is impatient i want to have simple commands | priority.Medium type.Story | so that i can input new entries quickly | 1.0 | As a student who is impatient i want to have simple commands - so that i can input new entries quickly | priority | as a student who is impatient i want to have simple commands so that i can input new entries quickly | 1 |

543,562 | 15,883,599,534 | IssuesEvent | 2021-04-09 17:37:15 | AY2021S2-CS2103T-W10-2/tp | https://api.github.com/repos/AY2021S2-CS2103T-W10-2/tp | closed | [PE-D] Unsure of what my current "list" filter is | priority.Medium severity.Low type.Chore | By executing this command,

It is slightly difficult to find out what the current list filter is in place or was executed. Hence if I want to go up a level or recall what other filters I want to apply to list, I would have to do "list" and restart the process.

There was clear documentation of this feature however, it may be a good idea to indicate what filters have been applied for "list".

PS: Perhaps consider moving the note on "list", that other commands like "find" will only apply to that particular "list", into a more prominent section.

e.g:

<!--session: 1617429915572-7ea4bbe5-9f37-4e7b-97d5-95926028d84a-->

-------------

Labels: `severity.Medium` `type.FeatureFlaw`

original: justgnohUG/ped#3 | 1.0 | [PE-D] Unsure of what my current "list" filter is - By executing this command,

It is slightly difficult to find out what the current list filter is in place or was executed. Hence if I want to go up a level or recall what other filters I want to apply to list, I would have to do "list" and restart the process.

There was clear documentation of this feature however, it may be a good idea to indicate what filters have been applied for "list".

PS: Perhaps consider moving the note on "list", that other commands like "find" will only apply to that particular "list", into a more prominent section.

e.g:

<!--session: 1617429915572-7ea4bbe5-9f37-4e7b-97d5-95926028d84a-->

-------------

Labels: `severity.Medium` `type.FeatureFlaw`

original: justgnohUG/ped#3 | priority | unsure of what my current list filter is by executing this command it is slightly difficult to find out what the current list filter is in place or was executed hence if i want to go up a level or recall what other filters i want to apply to list i would have to do list and restart the process there was clear documentation of this feature however it may be a good idea to indicate what filters have been applied for list ps perhaps consider moving the note on list that other commands like find will only apply to that particular list into a more prominent section e g labels severity medium type featureflaw original justgnohug ped | 1 |

4,500 | 2,552,678,989 | IssuesEvent | 2015-02-02 18:43:00 | Sistema-Integrado-Gestao-Academica/SiGA | https://api.github.com/repos/Sistema-Integrado-Gestao-Academica/SiGA | opened | Course Curriculum | enhancement [Medium Priority] | As an **academic secretary** [of a given Course], I want to allocate subjects (issue #31) to the **Curriculum** of a given **Graduate Course** (issue #5) so that I can correctly organize my course's offering list from the courses existing in the system.

------------------------

| 1.0 | Course Curriculum - As an **academic secretary** [of a given Course], I want to allocate subjects (issue #31) to the **Curriculum** of a given **Graduate Course** (issue #5) so that I can correctly organize my course's offering list from the courses existing in the system.

------------------------

| priority | course curriculum as an academic secretary i want to allocate subjects issue to the curriculum of a given graduate course issue so that i can correctly organize my course s offering list from the courses existing in the system | 1 |

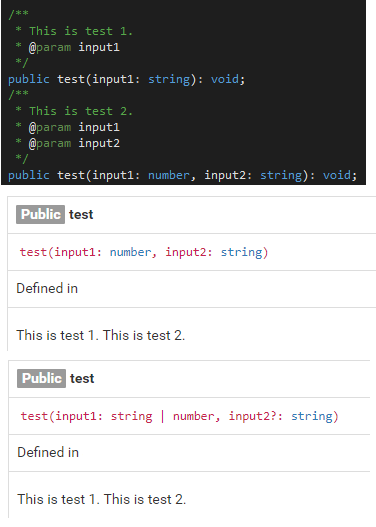

355,050 | 10,576,036,948 | IssuesEvent | 2019-10-07 16:58:11 | compodoc/compodoc | https://api.github.com/repos/compodoc/compodoc | closed | [BUG] Same Description used for all Overload Functions | Priority: Medium Time: ~1 hour Type: Bug wontfix | <!--

> Please follow the issue template below for bug reports and queries.

> For issue, start the label of the title with [BUG]

> For feature requests, start the label of the title with [FEATURE] and explain your use case and ideas clearly below, you can remove sections which are not relevant.

-->

##### **Overview of the issue**

When adding a description to each overload function Compodoc adds the description together for all overloads and uses it for all of the functions.

##### **Operating System, Node.js, npm, compodoc version(s)**

Compodoc 1.0.9

##### **Angular configuration, a `package.json` file in the root folder**

##### **Compodoc installed globally or locally ?**

Locally

##### **Motivation for or Use Case**

Each override function should have it's own description.

##### **Reproduce the error**

<!-- an unambiguous set of steps to reproduce the error. -->

##### **Related issues**

##### **Suggest a Fix**

| 1.0 | [BUG] Same Description used for all Overload Functions - <!--

> Please follow the issue template below for bug reports and queries.

> For issue, start the label of the title with [BUG]

> For feature requests, start the label of the title with [FEATURE] and explain your use case and ideas clearly below, you can remove sections which are not relevant.

-->

##### **Overview of the issue**

When adding a description to each overload function Compodoc adds the description together for all overloads and uses it for all of the functions.

##### **Operating System, Node.js, npm, compodoc version(s)**

Compodoc 1.0.9

##### **Angular configuration, a `package.json` file in the root folder**

##### **Compodoc installed globally or locally ?**

Locally

##### **Motivation for or Use Case**

Each override function should have it's own description.

##### **Reproduce the error**

<!-- an unambiguous set of steps to reproduce the error. -->

##### **Related issues**

##### **Suggest a Fix**

| priority | same description used for all overload functions please follow the issue template below for bug reports and queries for issue start the label of the title with for feature requests start the label of the title with and explain your use case and ideas clearly below you can remove sections which are not relevant overview of the issue when adding a description to each overload function compodoc adds the description together for all overloads and uses it for all of the functions operating system node js npm compodoc version s compodoc angular configuration a package json file in the root folder compodoc installed globally or locally locally motivation for or use case each override function should have it s own description reproduce the error related issues suggest a fix | 1 |

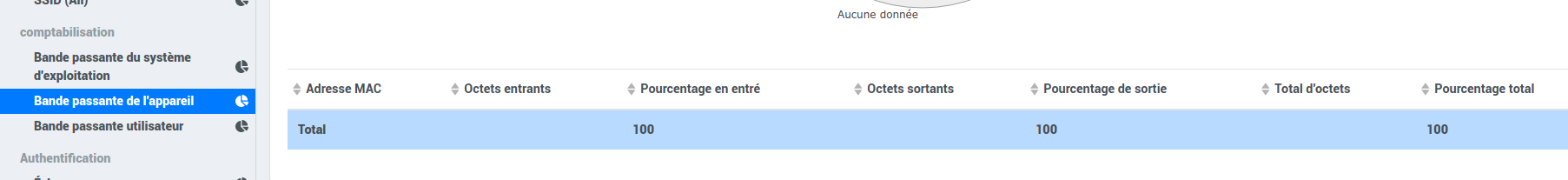

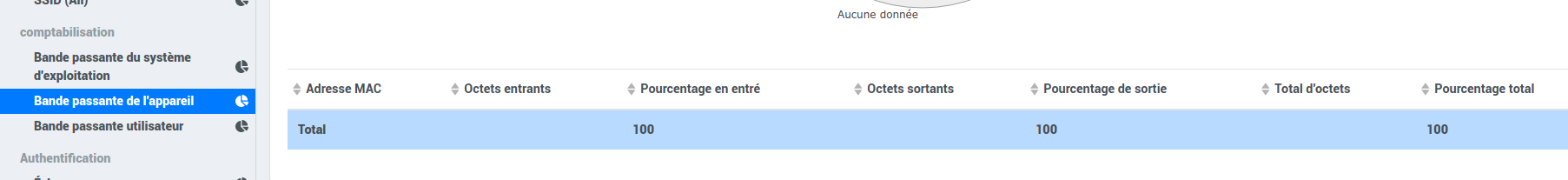

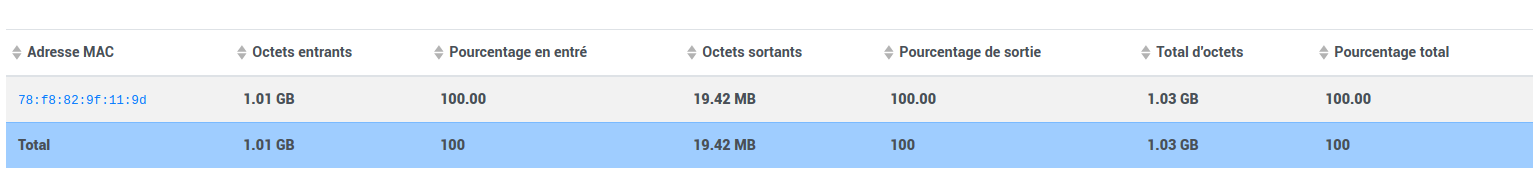

411,193 | 12,015,528,672 | IssuesEvent | 2020-04-10 14:11:25 | inverse-inc/packetfence | https://api.github.com/repos/inverse-inc/packetfence | opened | Bandwith Accounting per hour for device is empty | Priority: Medium Type: Bug | **Describe the bug**

Bandwith Accounting per hour for device is empty

**To Reproduce**

Inline l2 setup, downloaded 1gb file, the hour repport is empty:

1. Go to https://mgmt_ip:1443/admin/alt#/reports/standard/chart/nodebandwidth/hour

2. Go to https://mgmt_ip:1443/admin/alt#/reports/standard/chart/nodebandwidth/day

**Expected behavior**

We should be able to see what happen in the last hour.

**Additional context**

```

MariaDB [pf]> select * from bandwidth_accounting_history;

+-----------------+---------------------+------------+-----------+-------------+-------------------+-----------+

| node_id | time_bucket | in_bytes | out_bytes | total_bytes | mac | tenant_id |

+-----------------+---------------------+------------+-----------+-------------+-------------------+-----------+

| 414483715395997 | 2020-04-10 09:00:00 | 1083687638 | 20356317 | 1104043955 | 78:f8:82:9f:11:9d | 1 |

| 414483715395997 | 2020-04-10 10:00:00 | 135 | 5439 | 5574 | 78:f8:82:9f:11:9d | 1 |

+-----------------+---------------------+------------+-----------+-------------+-------------------+-----------+

``` | 1.0 | Bandwith Accounting per hour for device is empty - **Describe the bug**

Bandwith Accounting per hour for device is empty

**To Reproduce**

Inline l2 setup, downloaded 1gb file, the hour repport is empty:

1. Go to https://mgmt_ip:1443/admin/alt#/reports/standard/chart/nodebandwidth/hour

2. Go to https://mgmt_ip:1443/admin/alt#/reports/standard/chart/nodebandwidth/day

**Expected behavior**

We should be able to see what happen in the last hour.

**Additional context**

```

MariaDB [pf]> select * from bandwidth_accounting_history;

+-----------------+---------------------+------------+-----------+-------------+-------------------+-----------+

| node_id | time_bucket | in_bytes | out_bytes | total_bytes | mac | tenant_id |

+-----------------+---------------------+------------+-----------+-------------+-------------------+-----------+

| 414483715395997 | 2020-04-10 09:00:00 | 1083687638 | 20356317 | 1104043955 | 78:f8:82:9f:11:9d | 1 |

| 414483715395997 | 2020-04-10 10:00:00 | 135 | 5439 | 5574 | 78:f8:82:9f:11:9d | 1 |

+-----------------+---------------------+------------+-----------+-------------+-------------------+-----------+

``` | priority | bandwith accounting per hour for device is empty describe the bug bandwith accounting per hour for device is empty to reproduce inline setup downloaded file the hour repport is empty go to go to expected behavior we should be able to see what happen in the last hour additional context mariadb select from bandwidth accounting history node id time bucket in bytes out bytes total bytes mac tenant id | 1 |

330,307 | 10,038,306,541 | IssuesEvent | 2019-07-18 14:54:13 | Citykleta/web-app | https://api.github.com/repos/Citykleta/web-app | closed | Route exploration | component:Itinerary enhancement priority:medium | At the moment, a user can quickly find a route going from A to B going through various intermediate points.

It would be however important to improve this part of the application.

- [ ] change the style of the displayed route to convey the sens of the route as the moment it just displays the path but no direction is shown

- [ ] propose alternatives

- [ ] give a way to the user to preview the instructions | 1.0 | Route exploration - At the moment, a user can quickly find a route going from A to B going through various intermediate points.

It would be however important to improve this part of the application.

- [ ] change the style of the displayed route to convey the sens of the route as the moment it just displays the path but no direction is shown

- [ ] propose alternatives

- [ ] give a way to the user to preview the instructions | priority | route exploration at the moment a user can quickly find a route going from a to b going through various intermediate points it would be however important to improve this part of the application change the style of the displayed route to convey the sens of the route as the moment it just displays the path but no direction is shown propose alternatives give a way to the user to preview the instructions | 1 |

152,724 | 5,867,980,866 | IssuesEvent | 2017-05-14 07:54:21 | tootsuite/mastodon | https://api.github.com/repos/tootsuite/mastodon | closed | Unable to add a media (Windows Phone 10) | bug priority - medium ui | Hello,

I searched and I thinked this issue was not opened yet.

When I try to add a media to a toot from Edge on Windows Phone 10 (Lumia 550), I've got a refresh, but media is not added and text is erased. This happens everytime I try.

I reproduce with mastodon.gougere.fr and mastodon.xyz.

xakan | 1.0 | Unable to add a media (Windows Phone 10) - Hello,

I searched and I thinked this issue was not opened yet.

When I try to add a media to a toot from Edge on Windows Phone 10 (Lumia 550), I've got a refresh, but media is not added and text is erased. This happens everytime I try.

I reproduce with mastodon.gougere.fr and mastodon.xyz.

xakan | priority | unable to add a media windows phone hello i searched and i thinked this issue was not opened yet when i try to add a media to a toot from edge on windows phone lumia i ve got a refresh but media is not added and text is erased this happens everytime i try i reproduce with mastodon gougere fr and mastodon xyz xakan | 1 |

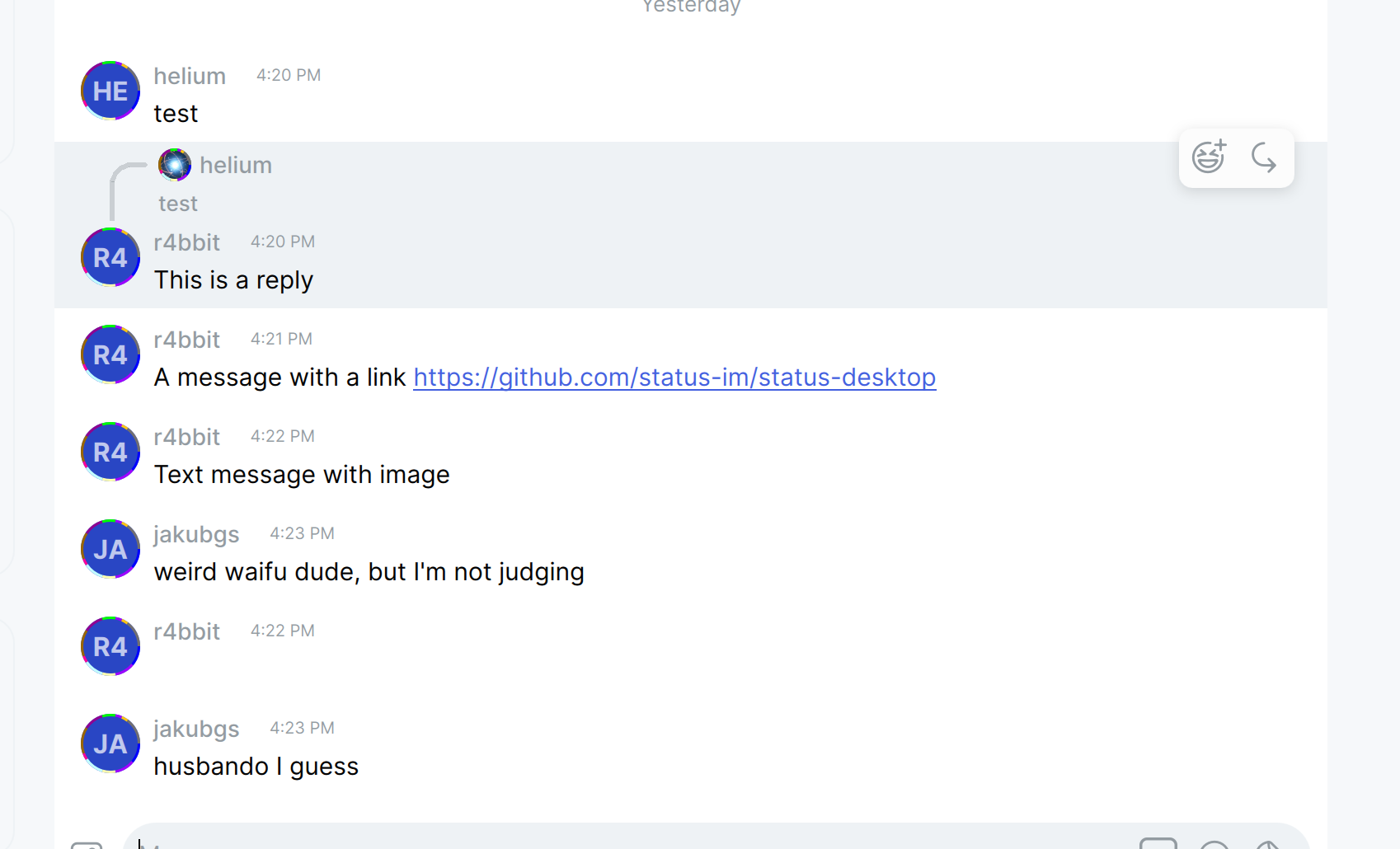

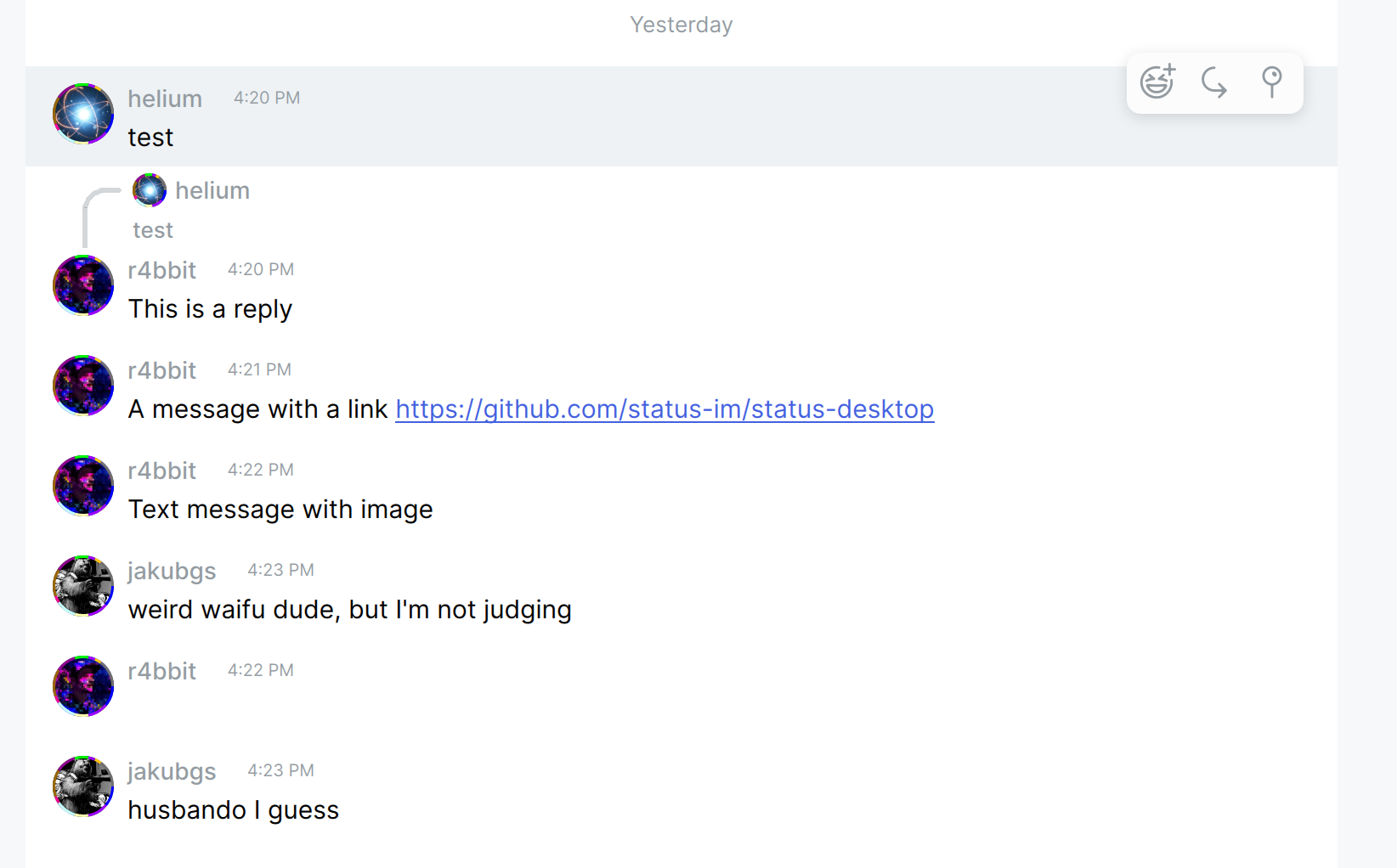

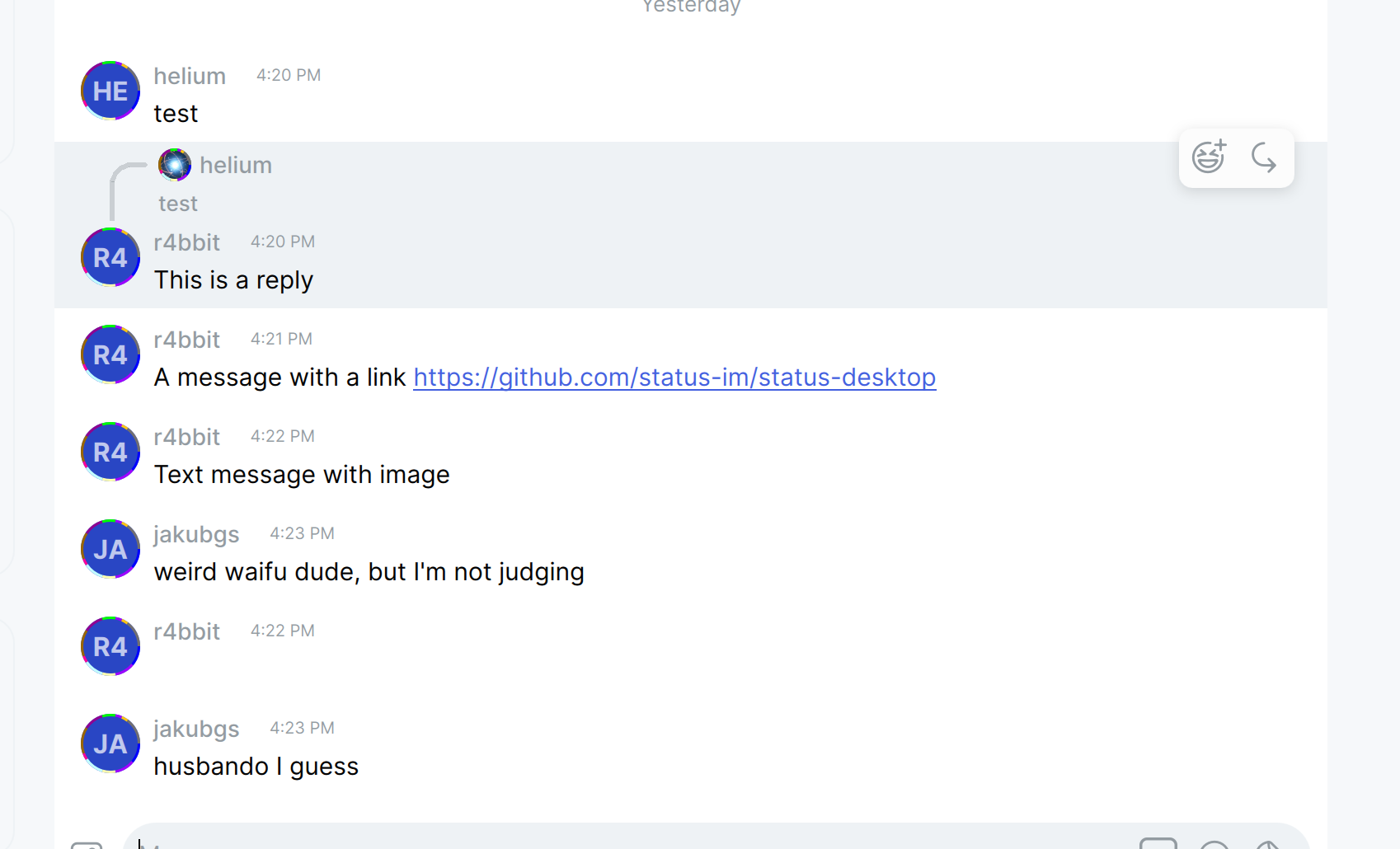

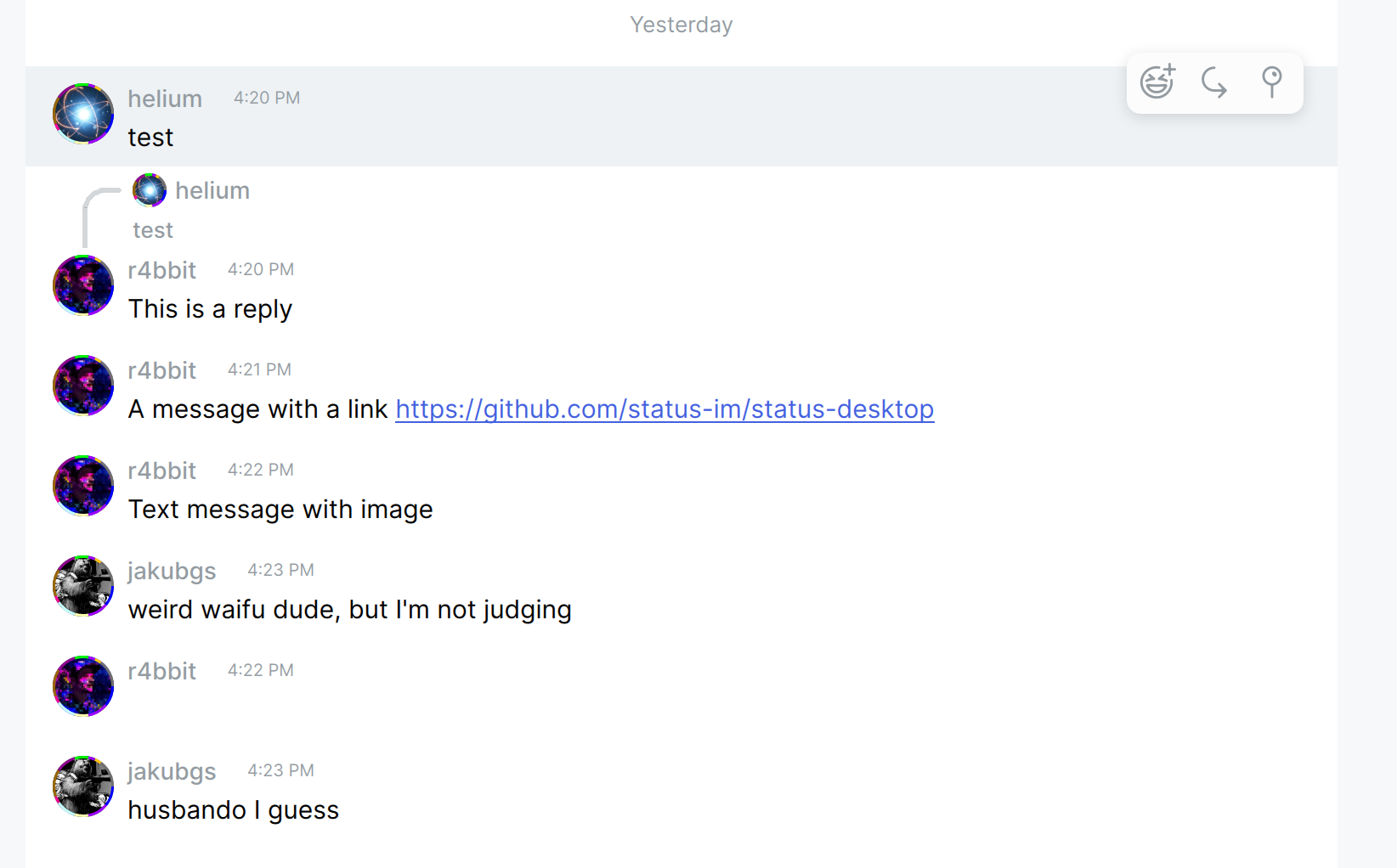

676,015 | 23,113,372,247 | IssuesEvent | 2022-07-27 14:42:26 | status-im/status-desktop | https://api.github.com/repos/status-im/status-desktop | opened | `UserImage` component doesn't take privacy settings into account inside message replies | bug Chat priority 2: medium E:Bugfixes E:Settings | Here's a an example message history:

There's a reply, notice how the image of the message to be replied is shown while it's not shown in the original profile image.

The reason it's not shown in the original message is because by default, we don't show profile image.

When turning this setting on for "everyone" it looks like this:

This means, inside message replies `UserImage` doesn't seem to honor the settings.

| 1.0 | `UserImage` component doesn't take privacy settings into account inside message replies - Here's a an example message history:

There's a reply, notice how the image of the message to be replied is shown while it's not shown in the original profile image.

The reason it's not shown in the original message is because by default, we don't show profile image.

When turning this setting on for "everyone" it looks like this:

This means, inside message replies `UserImage` doesn't seem to honor the settings.

| priority | userimage component doesn t take privacy settings into account inside message replies here s a an example message history there s a reply notice how the image of the message to be replied is shown while it s not shown in the original profile image the reason it s not shown in the original message is because by default we don t show profile image when turning this setting on for everyone it looks like this this means inside message replies userimage doesn t seem to honor the settings | 1 |

226,701 | 7,522,370,920 | IssuesEvent | 2018-04-12 20:12:16 | CS2103JAN2018-F12-B4/main | https://api.github.com/repos/CS2103JAN2018-F12-B4/main | closed | Locate a customer directly. | priority.medium type.enhancement | Possible enhancement: be able to locate a customer directly instead of having to pull a pre-defined list first. | 1.0 | Locate a customer directly. - Possible enhancement: be able to locate a customer directly instead of having to pull a pre-defined list first. | priority | locate a customer directly possible enhancement be able to locate a customer directly instead of having to pull a pre defined list first | 1 |

58,127 | 3,087,817,953 | IssuesEvent | 2015-08-25 13:55:19 | pavel-pimenov/flylinkdc-r5xx | https://api.github.com/repos/pavel-pimenov/flylinkdc-r5xx | closed | Incorrect display of cumulative received/sent statistics for the /stats command | bug imported Priority-Medium | _From [[email protected]](https://code.google.com/u/101495626515388303633/) on October 28, 2013 00:37:39_

1. Across many beta versions (r502), I have seen zero sent/received statistics:

-=[ Total download: 0 Б. Total upload: 0 Б ]=-

2. However, if the /r command is issued in the chat, the correct statistics are printed.

3. After that /r command, the /stats command also starts to output correct data.

**Attachment:** [Fly_r15808_statistics.png](http://code.google.com/p/flylinkdc/issues/detail?id=1363)

_Original issue: http://code.google.com/p/flylinkdc/issues/detail?id=1363_ | 1.0 | Incorrect display of cumulative received/sent statistics for the /stats command - _From [[email protected]](https://code.google.com/u/101495626515388303633/) on October 28, 2013 00:37:39_

1. Across many beta versions (r502), I have seen zero sent/received statistics:

-=[ Total download: 0 Б. Total upload: 0 Б ]=-

2. However, if the /r command is issued in the chat, the correct statistics are printed.

3. After that /r command, the /stats command also starts to output correct data.

**Attachment:** [Fly_r15808_statistics.png](http://code.google.com/p/flylinkdc/issues/detail?id=1363)

_Original issue: http://code.google.com/p/flylinkdc/issues/detail?id=1363_ | priority | incorrect display of cumulative received sent statistics for the stats command from on october across many beta versions i have seen zero sent received statistics however if the r command is issued in the chat the correct statistics are printed after that r command the stats command also starts to output correct data attachment original issue | 1 |

767,375 | 26,921,456,534 | IssuesEvent | 2023-02-07 10:44:45 | AUBGTheHUB/spa-website-2022 | https://api.github.com/repos/AUBGTheHUB/spa-website-2022 | closed | OnClick function for redirecting to Landing page | frontend medium priority SPA | Create an onClick function in React for redirecting users from 'subpages' (e.g. jobs page, or HackAUBG page) to the Landing page (main page) every time the user clicks the HUB logo/name in the Navbar.

| 1.0 | OnClick function for redirecting to Landing page - Create an onClick function in React for redirecting users from 'subpages' (e.g. jobs page, or HackAUBG page) to the Landing page (main page) every time the user clicks the HUB logo/name in the Navbar.

| priority | onclick function for redirecting to landing page create an onclick function in react for redirecting users from subpages e g jobs page or hackaubg page to the landing page main page every time the user clicks the hub logo name in the navbar | 1 |

795,784 | 28,086,121,953 | IssuesEvent | 2023-03-30 09:53:12 | robotframework/robotframework | https://api.github.com/repos/robotframework/robotframework | opened | Support type aliases in formats `'list[int]'` and `'int | float'` in argument conversion | enhancement priority: medium effort: medium | Our argument conversion is typically based on actual types like `int`, `list[int]` and `int | float`, but we also support type aliases as strings like `'int'` or `'integer'`. The motivation for type aliases is to support types returned, for example, by dynamic libraries wrapping code using other languages. Such libraries can simply return type names as strings instead of mapping them to actual Python types.

There are two limitations with type aliases, though:

- It isn't possible to represent types with nested types like `'list[int]'`. Aliases always map to a single concrete type, not to nested types.

- Unions cannot be represented using "Python syntax" like `'int | float'`. It is possible to use a tuple like `('int', 'float')`, though, so this is mainly an inconvenience.

Implementing this enhancement requires two things:

- Support for parsing strings like `'list[int]'` and `'int | float'`. Results could be newish [TypeInfo](https://github.com/robotframework/robotframework/blob/6e6f3a595d800ff43e792c4a7c582e7bf6abc131/src/robot/running/arguments/argumentspec.py#L183) objects that were added to make Libdoc handle nested types properly (#4538). Probably we could add a new `TypeInfo.from_string` class method.

- Enhance type conversion to work with `TypeInfo`. Currently these objects are only used by Libdoc.

In addition to helping with libraries wrapping non-Python code, this enhancement would allow us to create argument converters based on Libdoc spec files. That would probably be useful for external tools such as editor plugins. | 1.0 | Support type aliases in formats `'list[int]'` and `'int | float'` in argument conversion - Our argument conversion is typically based on actual types like `int`, `list[int]` and `int | float`, but we also support type aliases as strings like `'int'` or `'integer'`. The motivation for type aliases is to support types returned, for example, by dynamic libraries wrapping code using other languages. Such libraries can simply return type names as strings instead of mapping them to actual Python types.

There are two limitations with type aliases, though:

- It isn't possible to represent types with nested types like `'list[int]'`. Aliases always map to a single concrete type, not to nested types.

- Unions cannot be represented using "Python syntax" like `'int | float'`. It is possible to use a tuple like `('int', 'float')`, though, so this is mainly an inconvenience.

Implementing this enhancement requires two things:

- Support for parsing strings like `'list[int]'` and `'int | float'`. Results could be newish [TypeInfo](https://github.com/robotframework/robotframework/blob/6e6f3a595d800ff43e792c4a7c582e7bf6abc131/src/robot/running/arguments/argumentspec.py#L183) objects that were added to make Libdoc handle nested types properly (#4538). Probably we could add a new `TypeInfo.from_string` class method.

- Enhance type conversion to work with `TypeInfo`. Currently these objects are only used by Libdoc.

In addition to helping with libraries wrapping non-Python code, this enhancement would allow us to create argument converters based on Libdoc spec files. That would probably be useful for external tools such as editor plugins. | priority | support type aliases in formats list and int float in argument conversion our argument conversion is typically based on actual types like int list and int float but we also support type aliases as strings like int or integer the motivation for type aliases is to support types returned for example by dynamic libraries wrapping code using other languages such libraries can simply return type names as strings instead of mapping them to actual python types there are two limitations with type aliases though it isn t possible to represent types with nested types like list aliases always map to a single concrete type not to nested types unions cannot be represented using python syntax like int float it is possible to use a tuple like int float though so this is mainly an inconvenience implementing this enhancement requires two things support for parsing strings like list and int float results could be newish objects that were added to make libdoc handle nested types properly probably we could add a new typeinfo from string class method enhance type conversion to work with typeinfo currently these objects are only used by libdoc in addition to helping with libraries wrapping non python code this enhancement would allow us to create argument converters based on libdoc spec files that would probably be useful for external tools such as editor plugins | 1 |
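
The parsing step proposed in the record above (turning strings such as `'list[int]'` and `'int | float'` into nested type objects) might look roughly like the sketch below. This is only an illustration under stated assumptions: the `TypeInfo` dataclass here is a hypothetical stand-in, not Robot Framework's actual `TypeInfo` class, and `from_string` along with the `_parse_union` and `_parse_single` helpers are invented names that only mirror the idea in the issue text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TypeInfo:
    """Hypothetical stand-in; NOT Robot Framework's real TypeInfo class."""
    name: str
    nested: tuple = ()

    @classmethod
    def from_string(cls, text: str) -> "TypeInfo":
        # Naive assumption: whitespace carries no meaning in a type string.
        info, rest = _parse_union(text.replace(" ", ""))
        if rest:
            raise ValueError(f"Unexpected trailing text: {rest!r}")
        return info


def _parse_union(text):
    """Parse 'A|B|...' and return (TypeInfo, unparsed remainder)."""
    info, text = _parse_single(text)
    members = [info]
    while text.startswith("|"):
        info, text = _parse_single(text[1:])
        members.append(info)
    if len(members) == 1:
        return members[0], text
    # A union is represented as a TypeInfo named 'Union' with nested members.
    return TypeInfo("Union", tuple(members)), text


def _parse_single(text):
    """Parse 'name' or 'name[param,...]' and return (TypeInfo, remainder)."""
    end = 0
    while end < len(text) and (text[end].isalnum() or text[end] in "_."):
        end += 1
    if end == 0:
        raise ValueError(f"Expected a type name at: {text!r}")
    name, text = text[:end], text[end:]
    if not text.startswith("["):
        return TypeInfo(name), text
    params = []
    text = text[1:]  # skip the opening '['
    while True:
        info, text = _parse_union(text)
        params.append(info)
        if text.startswith(","):
            text = text[1:]
        elif text.startswith("]"):
            return TypeInfo(name, tuple(params)), text[1:]
        else:
            raise ValueError(f"Expected ',' or ']' at: {text!r}")
```

With this sketch, `TypeInfo.from_string('dict[str, int | float]')` would yield a `dict` node whose nested entries are `str` and a `Union` of `int` and `float`. Mapping the parsed names (including aliases such as `'integer'`) to actual converters would be a separate step that this example does not attempt.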

488,899 | 14,099,094,136 | IssuesEvent | 2020-11-06 00:29:23 | drashland/website | https://api.github.com/repos/drashland/website | closed | Write required documentation for deno-drash issue #427 (after_resource middleware hook) | Priority: Medium Remark: Deploy To Production Type: Chore | ## Summary

The following issue requires documentation before it can be closed:

https://github.com/drashland/deno-drash/issues/427

The following pull request is associated with the above issue:

https://github.com/drashland/deno-drash/issues/428 | 1.0 | Write required documentation for deno-drash issue #427 (after_resource middleware hook) - ## Summary

The following issue requires documentation before it can be closed:

https://github.com/drashland/deno-drash/issues/427

The following pull request is associated with the above issue:

https://github.com/drashland/deno-drash/issues/428 | priority | write required documentation for deno drash issue after resource middleware hook summary the following issue requires documentation before it can be closed the following pull request is associated with the above issue | 1 |

135,388 | 5,247,424,657 | IssuesEvent | 2017-02-01 12:59:41 | moodlepeers/moodle-mod_groupformation | https://api.github.com/repos/moodlepeers/moodle-mod_groupformation | opened | layout issues with clean theme or beuth03 theme | bug FE (frontend) Priority medium | 1. Inside the Group Formation page, the Move block and Actions in every block will disappear, the icons will not appear appropriately!

2. On the beuth03 Theme (the official theme for Beuth Hochschule) the navigation header will not appear appropriately inside the page of the groupformation, the header will navigate to the right.

3. Width of the input type="text": At the beginning of creating a new group formation, the group formation name text (under General) is very narrow, the letters will not appear in the appropriate size! | 1.0 | layout issues with clean theme or beuth03 theme - 1. Inside the Group Formation page, the Move block and Actions in every block will disappear, the icons will not appear appropriately!

2. On the beuth03 Theme (the official theme for Beuth Hochschule) the navigation header will not appear appropriately inside the page of the groupformation, the header will navigate to the right.

3. Width of the input type="text": At the beginning of creating a new group formation, the group formation name text (under General) is very narrow, the letters will not appear in the appropriate size! | priority | layout issues with clean theme or theme inside the group formation page the move block and actions in every block will disappear the icons will not appear appropriately on the theme the official theme for beuth hochschule the navigation header will not appear appropriately inside the page of the groupformation the header will navigate to the right width of the input type text at the beginning of creating a new group formation the group formation name text under general is very narrow the letters will not appear in the appropriate size | 1 |

785,672 | 27,622,209,292 | IssuesEvent | 2023-03-10 01:45:30 | yugabyte/yugabyte-db | https://api.github.com/repos/yugabyte/yugabyte-db | closed | [YSQL] yb_enable_expression_pushdown for GIN index scan can yield incorrect results | kind/bug area/ysql priority/medium | Jira Link: [DB-5677](https://yugabyte.atlassian.net/browse/DB-5677)

### Description

yb_enable_expression_pushdown does not seem to work correctly. Refer to the slack thread - https://yugabyte.slack.com/archives/CAR5BCH29/p1677518458483219

The test case to reproduce the error is below:

```

drop table demo;

CREATE TABLE demo(

demo_id varchar(255) not null,

guid varchar(255) not null unique,

status varchar(255),

json_content jsonb not null,

primary key (demo_id)

);

CREATE INDEX ref_idx ON demo USING ybgin (json_content jsonb_path_ops) ;

insert into demo select x::text, x::text, x::text, ('{"externalReferences": [{"val":"'||x||'"}]}')::jsonb

from generate_series (1, 10) x;

-- wrong answer

set yb_enable_expression_pushdown=on;

select * from demo where json_content @> '{"externalReferences": [{"val":"9"}]}' and demo_id <> '9';

demo_id | guid | status | json_content

---------+------+--------+----------------------------------------

9 | 9 | 9 | {"externalReferences": [{"val": "9"}]}

-- correct answer

set yb_enable_expression_pushdown=off;

select * from demo where json_content @> '{"externalReferences": [{"val":"9"}]}' and demo_id <> '9';

yugabyte=# demo_id | guid | status | json_content

---------+------+--------+--------------

(0 rows)

```

[DB-5677]: https://yugabyte.atlassian.net/browse/DB-5677?atlOrigin=eyJpIjoiNWRkNTljNzYxNjVmNDY3MDlhMDU5Y2ZhYzA5YTRkZjUiLCJwIjoiZ2l0aHViLWNvbS1KU1cifQ | 1.0 | [YSQL] yb_enable_expression_pushdown for GIN index scan can yield incorrect results - Jira Link: [DB-5677](https://yugabyte.atlassian.net/browse/DB-5677)

### Description

yb_enable_expression_pushdown does not seem to work correctly. Refer to the slack thread - https://yugabyte.slack.com/archives/CAR5BCH29/p1677518458483219

The test case to reproduce the error is below:

```

drop table demo;

CREATE TABLE demo(

demo_id varchar(255) not null,

guid varchar(255) not null unique,

status varchar(255),

json_content jsonb not null,

primary key (demo_id)

);

CREATE INDEX ref_idx ON demo USING ybgin (json_content jsonb_path_ops) ;

insert into demo select x::text, x::text, x::text, ('{"externalReferences": [{"val":"'||x||'"}]}')::jsonb

from generate_series (1, 10) x;

-- wrong answer

set yb_enable_expression_pushdown=on;