text

stringlengths 29

320k

| id

stringlengths 22

166

| metadata

dict | __index_level_0__

int64 0

195

|

|---|---|---|---|

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Text generation strategies

Text generation is essential to many NLP tasks, such as open-ended text generation, summarization, translation, and

more. It also plays a role in a variety of mixed-modality applications that have text as an output like speech-to-text

and vision-to-text. Some of the models that can generate text include

GPT2, XLNet, OpenAI GPT, CTRL, TransformerXL, XLM, Bart, T5, GIT, Whisper.

Check out a few examples that use [`~transformers.generation_utils.GenerationMixin.generate`] method to produce

text outputs for different tasks:

* [Text summarization](./tasks/summarization#inference)

* [Image captioning](./model_doc/git#transformers.GitForCausalLM.forward.example)

* [Audio transcription](./model_doc/whisper#transformers.WhisperForConditionalGeneration.forward.example)

Note that the inputs to the generate method depend on the model's modality. They are returned by the model's preprocessor

class, such as AutoTokenizer or AutoProcessor. If a model's preprocessor creates more than one kind of input, pass all

the inputs to generate(). You can learn more about the individual model's preprocessor in the corresponding model's documentation.

The process of selecting output tokens to generate text is known as decoding, and you can customize the decoding strategy

that the `generate()` method will use. Modifying a decoding strategy does not change the values of any trainable parameters.

However, it can have a noticeable impact on the quality of the generated output. It can help reduce repetition in the text

and make it more coherent.

This guide describes:

* default generation configuration

* common decoding strategies and their main parameters

* saving and sharing custom generation configurations with your fine-tuned model on 🤗 Hub

## Default text generation configuration

A decoding strategy for a model is defined in its generation configuration. When using pre-trained models for inference

within a [`pipeline`], the models call the `PreTrainedModel.generate()` method that applies a default generation

configuration under the hood. The default configuration is also used when no custom configuration has been saved with

the model.

When you load a model explicitly, you can inspect the generation configuration that comes with it through

`model.generation_config`:

```python

>>> from transformers import AutoModelForCausalLM

>>> model = AutoModelForCausalLM.from_pretrained("distilbert/distilgpt2")

>>> model.generation_config

GenerationConfig {

"bos_token_id": 50256,

"eos_token_id": 50256,

}

```

Printing out the `model.generation_config` reveals only the values that are different from the default generation

configuration, and does not list any of the default values.

The default generation configuration limits the size of the output combined with the input prompt to a maximum of 20

tokens to avoid running into resource limitations. The default decoding strategy is greedy search, which is the simplest decoding strategy that picks a token with the highest probability as the next token. For many tasks

and small output sizes this works well. However, when used to generate longer outputs, greedy search can start

producing highly repetitive results.

## Customize text generation

You can override any `generation_config` by passing the parameters and their values directly to the [`generate`] method:

```python

>>> my_model.generate(**inputs, num_beams=4, do_sample=True) # doctest: +SKIP

```

Even if the default decoding strategy mostly works for your task, you can still tweak a few things. Some of the

commonly adjusted parameters include:

- `max_new_tokens`: the maximum number of tokens to generate. In other words, the size of the output sequence, not

including the tokens in the prompt. As an alternative to using the output's length as a stopping criteria, you can choose

to stop generation whenever the full generation exceeds some amount of time. To learn more, check [`StoppingCriteria`].

- `num_beams`: by specifying a number of beams higher than 1, you are effectively switching from greedy search to

beam search. This strategy evaluates several hypotheses at each time step and eventually chooses the hypothesis that

has the overall highest probability for the entire sequence. This has the advantage of identifying high-probability

sequences that start with a lower probability initial tokens and would've been ignored by the greedy search.

- `do_sample`: if set to `True`, this parameter enables decoding strategies such as multinomial sampling, beam-search

multinomial sampling, Top-K sampling and Top-p sampling. All these strategies select the next token from the probability

distribution over the entire vocabulary with various strategy-specific adjustments.

- `num_return_sequences`: the number of sequence candidates to return for each input. This option is only available for

the decoding strategies that support multiple sequence candidates, e.g. variations of beam search and sampling. Decoding

strategies like greedy search and contrastive search return a single output sequence.

## Save a custom decoding strategy with your model

If you would like to share your fine-tuned model with a specific generation configuration, you can:

* Create a [`GenerationConfig`] class instance

* Specify the decoding strategy parameters

* Save your generation configuration with [`GenerationConfig.save_pretrained`], making sure to leave its `config_file_name` argument empty

* Set `push_to_hub` to `True` to upload your config to the model's repo

```python

>>> from transformers import AutoModelForCausalLM, GenerationConfig

>>> model = AutoModelForCausalLM.from_pretrained("my_account/my_model") # doctest: +SKIP

>>> generation_config = GenerationConfig(

... max_new_tokens=50, do_sample=True, top_k=50, eos_token_id=model.config.eos_token_id

... )

>>> generation_config.save_pretrained("my_account/my_model", push_to_hub=True) # doctest: +SKIP

```

You can also store several generation configurations in a single directory, making use of the `config_file_name`

argument in [`GenerationConfig.save_pretrained`]. You can later instantiate them with [`GenerationConfig.from_pretrained`]. This is useful if you want to

store several generation configurations for a single model (e.g. one for creative text generation with sampling, and

one for summarization with beam search). You must have the right Hub permissions to add configuration files to a model.

```python

>>> from transformers import AutoModelForSeq2SeqLM, AutoTokenizer, GenerationConfig

>>> tokenizer = AutoTokenizer.from_pretrained("google-t5/t5-small")

>>> model = AutoModelForSeq2SeqLM.from_pretrained("google-t5/t5-small")

>>> translation_generation_config = GenerationConfig(

... num_beams=4,

... early_stopping=True,

... decoder_start_token_id=0,

... eos_token_id=model.config.eos_token_id,

... pad_token=model.config.pad_token_id,

... )

>>> # Tip: add `push_to_hub=True` to push to the Hub

>>> translation_generation_config.save_pretrained("/tmp", "translation_generation_config.json")

>>> # You could then use the named generation config file to parameterize generation

>>> generation_config = GenerationConfig.from_pretrained("/tmp", "translation_generation_config.json")

>>> inputs = tokenizer("translate English to French: Configuration files are easy to use!", return_tensors="pt")

>>> outputs = model.generate(**inputs, generation_config=generation_config)

>>> print(tokenizer.batch_decode(outputs, skip_special_tokens=True))

['Les fichiers de configuration sont faciles à utiliser!']

```

## Streaming

The `generate()` supports streaming, through its `streamer` input. The `streamer` input is compatible with any instance

from a class that has the following methods: `put()` and `end()`. Internally, `put()` is used to push new tokens and

`end()` is used to flag the end of text generation.

<Tip warning={true}>

The API for the streamer classes is still under development and may change in the future.

</Tip>

In practice, you can craft your own streaming class for all sorts of purposes! We also have basic streaming classes

ready for you to use. For example, you can use the [`TextStreamer`] class to stream the output of `generate()` into

your screen, one word at a time:

```python

>>> from transformers import AutoModelForCausalLM, AutoTokenizer, TextStreamer

>>> tok = AutoTokenizer.from_pretrained("openai-community/gpt2")

>>> model = AutoModelForCausalLM.from_pretrained("openai-community/gpt2")

>>> inputs = tok(["An increasing sequence: one,"], return_tensors="pt")

>>> streamer = TextStreamer(tok)

>>> # Despite returning the usual output, the streamer will also print the generated text to stdout.

>>> _ = model.generate(**inputs, streamer=streamer, max_new_tokens=20)

An increasing sequence: one, two, three, four, five, six, seven, eight, nine, ten, eleven,

```

## Decoding strategies

Certain combinations of the `generate()` parameters, and ultimately `generation_config`, can be used to enable specific

decoding strategies. If you are new to this concept, we recommend reading [this blog post that illustrates how common decoding strategies work](https://huggingface.co/blog/how-to-generate).

Here, we'll show some of the parameters that control the decoding strategies and illustrate how you can use them.

### Greedy Search

[`generate`] uses greedy search decoding by default so you don't have to pass any parameters to enable it. This means the parameters `num_beams` is set to 1 and `do_sample=False`.

```python

>>> from transformers import AutoModelForCausalLM, AutoTokenizer

>>> prompt = "I look forward to"

>>> checkpoint = "distilbert/distilgpt2"

>>> tokenizer = AutoTokenizer.from_pretrained(checkpoint)

>>> inputs = tokenizer(prompt, return_tensors="pt")

>>> model = AutoModelForCausalLM.from_pretrained(checkpoint)

>>> outputs = model.generate(**inputs)

>>> tokenizer.batch_decode(outputs, skip_special_tokens=True)

['I look forward to seeing you all again!\n\n\n\n\n\n\n\n\n\n\n']

```

### Contrastive search

The contrastive search decoding strategy was proposed in the 2022 paper [A Contrastive Framework for Neural Text Generation](https://arxiv.org/abs/2202.06417).

It demonstrates superior results for generating non-repetitive yet coherent long outputs. To learn how contrastive search

works, check out [this blog post](https://huggingface.co/blog/introducing-csearch).

The two main parameters that enable and control the behavior of contrastive search are `penalty_alpha` and `top_k`:

```python

>>> from transformers import AutoTokenizer, AutoModelForCausalLM

>>> checkpoint = "openai-community/gpt2-large"

>>> tokenizer = AutoTokenizer.from_pretrained(checkpoint)

>>> model = AutoModelForCausalLM.from_pretrained(checkpoint)

>>> prompt = "Hugging Face Company is"

>>> inputs = tokenizer(prompt, return_tensors="pt")

>>> outputs = model.generate(**inputs, penalty_alpha=0.6, top_k=4, max_new_tokens=100)

>>> tokenizer.batch_decode(outputs, skip_special_tokens=True)

['Hugging Face Company is a family owned and operated business. We pride ourselves on being the best

in the business and our customer service is second to none.\n\nIf you have any questions about our

products or services, feel free to contact us at any time. We look forward to hearing from you!']

```

### Multinomial sampling

As opposed to greedy search that always chooses a token with the highest probability as the

next token, multinomial sampling (also called ancestral sampling) randomly selects the next token based on the probability distribution over the entire

vocabulary given by the model. Every token with a non-zero probability has a chance of being selected, thus reducing the

risk of repetition.

To enable multinomial sampling set `do_sample=True` and `num_beams=1`.

```python

>>> from transformers import AutoTokenizer, AutoModelForCausalLM, set_seed

>>> set_seed(0) # For reproducibility

>>> checkpoint = "openai-community/gpt2-large"

>>> tokenizer = AutoTokenizer.from_pretrained(checkpoint)

>>> model = AutoModelForCausalLM.from_pretrained(checkpoint)

>>> prompt = "Today was an amazing day because"

>>> inputs = tokenizer(prompt, return_tensors="pt")

>>> outputs = model.generate(**inputs, do_sample=True, num_beams=1, max_new_tokens=100)

>>> tokenizer.batch_decode(outputs, skip_special_tokens=True)

['Today was an amazing day because when you go to the World Cup and you don\'t, or when you don\'t get invited,

that\'s a terrible feeling."']

```

### Beam-search decoding

Unlike greedy search, beam-search decoding keeps several hypotheses at each time step and eventually chooses

the hypothesis that has the overall highest probability for the entire sequence. This has the advantage of identifying high-probability

sequences that start with lower probability initial tokens and would've been ignored by the greedy search.

To enable this decoding strategy, specify the `num_beams` (aka number of hypotheses to keep track of) that is greater than 1.

```python

>>> from transformers import AutoModelForCausalLM, AutoTokenizer

>>> prompt = "It is astonishing how one can"

>>> checkpoint = "openai-community/gpt2-medium"

>>> tokenizer = AutoTokenizer.from_pretrained(checkpoint)

>>> inputs = tokenizer(prompt, return_tensors="pt")

>>> model = AutoModelForCausalLM.from_pretrained(checkpoint)

>>> outputs = model.generate(**inputs, num_beams=5, max_new_tokens=50)

>>> tokenizer.batch_decode(outputs, skip_special_tokens=True)

['It is astonishing how one can have such a profound impact on the lives of so many people in such a short period of

time."\n\nHe added: "I am very proud of the work I have been able to do in the last few years.\n\n"I have']

```

### Beam-search multinomial sampling

As the name implies, this decoding strategy combines beam search with multinomial sampling. You need to specify

the `num_beams` greater than 1, and set `do_sample=True` to use this decoding strategy.

```python

>>> from transformers import AutoTokenizer, AutoModelForSeq2SeqLM, set_seed

>>> set_seed(0) # For reproducibility

>>> prompt = "translate English to German: The house is wonderful."

>>> checkpoint = "google-t5/t5-small"

>>> tokenizer = AutoTokenizer.from_pretrained(checkpoint)

>>> inputs = tokenizer(prompt, return_tensors="pt")

>>> model = AutoModelForSeq2SeqLM.from_pretrained(checkpoint)

>>> outputs = model.generate(**inputs, num_beams=5, do_sample=True)

>>> tokenizer.decode(outputs[0], skip_special_tokens=True)

'Das Haus ist wunderbar.'

```

### Diverse beam search decoding

The diverse beam search decoding strategy is an extension of the beam search strategy that allows for generating a more diverse

set of beam sequences to choose from. To learn how it works, refer to [Diverse Beam Search: Decoding Diverse Solutions from Neural Sequence Models](https://arxiv.org/pdf/1610.02424.pdf).

This approach has three main parameters: `num_beams`, `num_beam_groups`, and `diversity_penalty`.

The diversity penalty ensures the outputs are distinct across groups, and beam search is used within each group.

```python

>>> from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

>>> checkpoint = "google/pegasus-xsum"

>>> prompt = (

... "The Permaculture Design Principles are a set of universal design principles "

... "that can be applied to any location, climate and culture, and they allow us to design "

... "the most efficient and sustainable human habitation and food production systems. "

... "Permaculture is a design system that encompasses a wide variety of disciplines, such "

... "as ecology, landscape design, environmental science and energy conservation, and the "

... "Permaculture design principles are drawn from these various disciplines. Each individual "

... "design principle itself embodies a complete conceptual framework based on sound "

... "scientific principles. When we bring all these separate principles together, we can "

... "create a design system that both looks at whole systems, the parts that these systems "

... "consist of, and how those parts interact with each other to create a complex, dynamic, "

... "living system. Each design principle serves as a tool that allows us to integrate all "

... "the separate parts of a design, referred to as elements, into a functional, synergistic, "

... "whole system, where the elements harmoniously interact and work together in the most "

... "efficient way possible."

... )

>>> tokenizer = AutoTokenizer.from_pretrained(checkpoint)

>>> inputs = tokenizer(prompt, return_tensors="pt")

>>> model = AutoModelForSeq2SeqLM.from_pretrained(checkpoint)

>>> outputs = model.generate(**inputs, num_beams=5, num_beam_groups=5, max_new_tokens=30, diversity_penalty=1.0)

>>> tokenizer.decode(outputs[0], skip_special_tokens=True)

'The Design Principles are a set of universal design principles that can be applied to any location, climate and

culture, and they allow us to design the'

```

This guide illustrates the main parameters that enable various decoding strategies. More advanced parameters exist for the

[`generate`] method, which gives you even further control over the [`generate`] method's behavior.

For the complete list of the available parameters, refer to the [API documentation](./main_classes/text_generation.md).

### Speculative Decoding

Speculative decoding (also known as assisted decoding) is a modification of the decoding strategies above, that uses an

assistant model (ideally a much smaller one) with the same tokenizer, to generate a few candidate tokens. The main

model then validates the candidate tokens in a single forward pass, which speeds up the decoding process. If

`do_sample=True`, then the token validation with resampling introduced in the

[speculative decoding paper](https://arxiv.org/pdf/2211.17192.pdf) is used.

Currently, only greedy search and sampling are supported with assisted decoding, and assisted decoding doesn't support batched inputs.

To learn more about assisted decoding, check [this blog post](https://huggingface.co/blog/assisted-generation).

To enable assisted decoding, set the `assistant_model` argument with a model.

```python

>>> from transformers import AutoModelForCausalLM, AutoTokenizer

>>> prompt = "Alice and Bob"

>>> checkpoint = "EleutherAI/pythia-1.4b-deduped"

>>> assistant_checkpoint = "EleutherAI/pythia-160m-deduped"

>>> tokenizer = AutoTokenizer.from_pretrained(checkpoint)

>>> inputs = tokenizer(prompt, return_tensors="pt")

>>> model = AutoModelForCausalLM.from_pretrained(checkpoint)

>>> assistant_model = AutoModelForCausalLM.from_pretrained(assistant_checkpoint)

>>> outputs = model.generate(**inputs, assistant_model=assistant_model)

>>> tokenizer.batch_decode(outputs, skip_special_tokens=True)

['Alice and Bob are sitting in a bar. Alice is drinking a beer and Bob is drinking a']

```

When using assisted decoding with sampling methods, you can use the `temperature` argument to control the randomness,

just like in multinomial sampling. However, in assisted decoding, reducing the temperature may help improve the latency.

```python

>>> from transformers import AutoModelForCausalLM, AutoTokenizer, set_seed

>>> set_seed(42) # For reproducibility

>>> prompt = "Alice and Bob"

>>> checkpoint = "EleutherAI/pythia-1.4b-deduped"

>>> assistant_checkpoint = "EleutherAI/pythia-160m-deduped"

>>> tokenizer = AutoTokenizer.from_pretrained(checkpoint)

>>> inputs = tokenizer(prompt, return_tensors="pt")

>>> model = AutoModelForCausalLM.from_pretrained(checkpoint)

>>> assistant_model = AutoModelForCausalLM.from_pretrained(assistant_checkpoint)

>>> outputs = model.generate(**inputs, assistant_model=assistant_model, do_sample=True, temperature=0.5)

>>> tokenizer.batch_decode(outputs, skip_special_tokens=True)

['Alice and Bob are going to the same party. It is a small party, in a small']

```

| transformers/docs/source/en/generation_strategies.md/0 | {

"file_path": "transformers/docs/source/en/generation_strategies.md",

"repo_id": "transformers",

"token_count": 5563

} | 4 |

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Optimizing LLMs for Speed and Memory

[[open-in-colab]]

Large Language Models (LLMs) such as GPT3/4, [Falcon](https://huggingface.co/tiiuae/falcon-40b), and [Llama](https://huggingface.co/meta-llama/Llama-2-70b-hf) are rapidly advancing in their ability to tackle human-centric tasks, establishing themselves as essential tools in modern knowledge-based industries.

Deploying these models in real-world tasks remains challenging, however:

- To exhibit near-human text understanding and generation capabilities, LLMs currently require to be composed of billions of parameters (see [Kaplan et al](https://arxiv.org/abs/2001.08361), [Wei et. al](https://arxiv.org/abs/2206.07682)). This consequently amplifies the memory demands for inference.

- In many real-world tasks, LLMs need to be given extensive contextual information. This necessitates the model's capability to manage very long input sequences during inference.

The crux of these challenges lies in augmenting the computational and memory capabilities of LLMs, especially when handling expansive input sequences.

In this guide, we will go over the effective techniques for efficient LLM deployment:

1. **Lower Precision:** Research has shown that operating at reduced numerical precision, namely [8-bit and 4-bit](./main_classes/quantization.md) can achieve computational advantages without a considerable decline in model performance.

2. **Flash Attention:** Flash Attention is a variation of the attention algorithm that not only provides a more memory-efficient approach but also realizes increased efficiency due to optimized GPU memory utilization.

3. **Architectural Innovations:** Considering that LLMs are always deployed in the same way during inference, namely autoregressive text generation with a long input context, specialized model architectures have been proposed that allow for more efficient inference. The most important advancement in model architectures hereby are [Alibi](https://arxiv.org/abs/2108.12409), [Rotary embeddings](https://arxiv.org/abs/2104.09864), [Multi-Query Attention (MQA)](https://arxiv.org/abs/1911.02150) and [Grouped-Query-Attention (GQA)]((https://arxiv.org/abs/2305.13245)).

Throughout this guide, we will offer an analysis of auto-regressive generation from a tensor's perspective. We delve into the pros and cons of adopting lower precision, provide a comprehensive exploration of the latest attention algorithms, and discuss improved LLM architectures. While doing so, we run practical examples showcasing each of the feature improvements.

## 1. Lower Precision

Memory requirements of LLMs can be best understood by seeing the LLM as a set of weight matrices and vectors and the text inputs as a sequence of vectors. In the following, the definition *weights* will be used to signify all model weight matrices and vectors.

At the time of writing this guide, LLMs consist of at least a couple billion parameters. Each parameter thereby is made of a decimal number, e.g. `4.5689` which is usually stored in either [float32](https://en.wikipedia.org/wiki/Single-precision_floating-point_format), [bfloat16](https://en.wikipedia.org/wiki/Bfloat16_floating-point_format), or [float16](https://en.wikipedia.org/wiki/Half-precision_floating-point_format) format. This allows us to easily compute the memory requirement to load the LLM into memory:

> *Loading the weights of a model having X billion parameters requires roughly 4 * X GB of VRAM in float32 precision*

Nowadays, models are however rarely trained in full float32 precision, but usually in bfloat16 precision or less frequently in float16 precision. Therefore the rule of thumb becomes:

> *Loading the weights of a model having X billion parameters requires roughly 2 * X GB of VRAM in bfloat16/float16 precision*

For shorter text inputs (less than 1024 tokens), the memory requirement for inference is very much dominated by the memory requirement to load the weights. Therefore, for now, let's assume that the memory requirement for inference is equal to the memory requirement to load the model into the GPU VRAM.

To give some examples of how much VRAM it roughly takes to load a model in bfloat16:

- **GPT3** requires 2 \* 175 GB = **350 GB** VRAM

- [**Bloom**](https://huggingface.co/bigscience/bloom) requires 2 \* 176 GB = **352 GB** VRAM

- [**Llama-2-70b**](https://huggingface.co/meta-llama/Llama-2-70b-hf) requires 2 \* 70 GB = **140 GB** VRAM

- [**Falcon-40b**](https://huggingface.co/tiiuae/falcon-40b) requires 2 \* 40 GB = **80 GB** VRAM

- [**MPT-30b**](https://huggingface.co/mosaicml/mpt-30b) requires 2 \* 30 GB = **60 GB** VRAM

- [**bigcode/starcoder**](https://huggingface.co/bigcode/starcoder) requires 2 \* 15.5 = **31 GB** VRAM

As of writing this document, the largest GPU chip on the market is the A100 & H100 offering 80GB of VRAM. Most of the models listed before require more than 80GB just to be loaded and therefore necessarily require [tensor parallelism](https://huggingface.co/docs/transformers/perf_train_gpu_many#tensor-parallelism) and/or [pipeline parallelism](https://huggingface.co/docs/transformers/perf_train_gpu_many#naive-model-parallelism-vertical-and-pipeline-parallelism).

🤗 Transformers does not support tensor parallelism out of the box as it requires the model architecture to be written in a specific way. If you're interested in writing models in a tensor-parallelism-friendly way, feel free to have a look at [the text-generation-inference library](https://github.com/huggingface/text-generation-inference/tree/main/server/text_generation_server/models/custom_modeling).

Naive pipeline parallelism is supported out of the box. For this, simply load the model with `device="auto"` which will automatically place the different layers on the available GPUs as explained [here](https://huggingface.co/docs/accelerate/v0.22.0/en/concept_guides/big_model_inference).

Note, however that while very effective, this naive pipeline parallelism does not tackle the issues of GPU idling. For this more advanced pipeline parallelism is required as explained [here](https://huggingface.co/docs/transformers/en/perf_train_gpu_many#naive-model-parallelism-vertical-and-pipeline-parallelism).

If you have access to an 8 x 80GB A100 node, you could load BLOOM as follows

```bash

!pip install transformers accelerate bitsandbytes optimum

```

```python

from transformers import AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained("bigscience/bloom", device_map="auto", pad_token_id=0)

```

By using `device_map="auto"` the attention layers would be equally distributed over all available GPUs.

In this guide, we will use [bigcode/octocoder](https://huggingface.co/bigcode/octocoder) as it can be run on a single 40 GB A100 GPU device chip. Note that all memory and speed optimizations that we will apply going forward, are equally applicable to models that require model or tensor parallelism.

Since the model is loaded in bfloat16 precision, using our rule of thumb above, we would expect the memory requirement to run inference with `bigcode/octocoder` to be around 31 GB VRAM. Let's give it a try.

We first load the model and tokenizer and then pass both to Transformers' [pipeline](https://huggingface.co/docs/transformers/main_classes/pipelines) object.

```python

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

import torch

model = AutoModelForCausalLM.from_pretrained("bigcode/octocoder", torch_dtype=torch.bfloat16, device_map="auto", pad_token_id=0)

tokenizer = AutoTokenizer.from_pretrained("bigcode/octocoder")

pipe = pipeline("text-generation", model=model, tokenizer=tokenizer)

```

```python

prompt = "Question: Please write a function in Python that transforms bytes to Giga bytes.\n\nAnswer:"

result = pipe(prompt, max_new_tokens=60)[0]["generated_text"][len(prompt):]

result

```

**Output**:

```

Here is a Python function that transforms bytes to Giga bytes:\n\n```python\ndef bytes_to_giga_bytes(bytes):\n return bytes / 1024 / 1024 / 1024\n```\n\nThis function takes a single

```

Nice, we can now directly use the result to convert bytes into Gigabytes.

```python

def bytes_to_giga_bytes(bytes):

return bytes / 1024 / 1024 / 1024

```

Let's call [`torch.cuda.max_memory_allocated`](https://pytorch.org/docs/stable/generated/torch.cuda.max_memory_allocated.html) to measure the peak GPU memory allocation.

```python

bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

```bash

29.0260648727417

```

Close enough to our back-of-the-envelope computation! We can see the number is not exactly correct as going from bytes to kilobytes requires a multiplication of 1024 instead of 1000. Therefore the back-of-the-envelope formula can also be understood as an "at most X GB" computation.

Note that if we had tried to run the model in full float32 precision, a whopping 64 GB of VRAM would have been required.

> Almost all models are trained in bfloat16 nowadays, there is no reason to run the model in full float32 precision if [your GPU supports bfloat16](https://discuss.pytorch.org/t/bfloat16-native-support/117155/5). Float32 won't give better inference results than the precision that was used to train the model.

If you are unsure in which format the model weights are stored on the Hub, you can always look into the checkpoint's config under `"torch_dtype"`, *e.g.* [here](https://huggingface.co/meta-llama/Llama-2-7b-hf/blob/6fdf2e60f86ff2481f2241aaee459f85b5b0bbb9/config.json#L21). It is recommended to set the model to the same precision type as written in the config when loading with `from_pretrained(..., torch_dtype=...)` except when the original type is float32 in which case one can use both `float16` or `bfloat16` for inference.

Let's define a `flush(...)` function to free all allocated memory so that we can accurately measure the peak allocated GPU memory.

```python

del pipe

del model

import gc

import torch

def flush():

gc.collect()

torch.cuda.empty_cache()

torch.cuda.reset_peak_memory_stats()

```

Let's call it now for the next experiment.

```python

flush()

```

In the recent version of the accelerate library, you can also use an utility method called `release_memory()`

```python

from accelerate.utils import release_memory

# ...

release_memory(model)

```

Now what if your GPU does not have 32 GB of VRAM? It has been found that model weights can be quantized to 8-bit or 4-bits without a significant loss in performance (see [Dettmers et al.](https://arxiv.org/abs/2208.07339)).

Model can be quantized to even 3 or 2 bits with an acceptable loss in performance as shown in the recent [GPTQ paper](https://arxiv.org/abs/2210.17323) 🤯.

Without going into too many details, quantization schemes aim at reducing the precision of weights while trying to keep the model's inference results as accurate as possible (*a.k.a* as close as possible to bfloat16).

Note that quantization works especially well for text generation since all we care about is choosing the *set of most likely next tokens* and don't really care about the exact values of the next token *logit* distribution.

All that matters is that the next token *logit* distribution stays roughly the same so that an `argmax` or `topk` operation gives the same results.

There are various quantization techniques, which we won't discuss in detail here, but in general, all quantization techniques work as follows:

- 1. Quantize all weights to the target precision

- 2. Load the quantized weights, and pass the input sequence of vectors in bfloat16 precision

- 3. Dynamically dequantize weights to bfloat16 to perform the computation with their input vectors in bfloat16 precision

In a nutshell, this means that *inputs-weight matrix* multiplications, with \\( X \\) being the *inputs*, \\( W \\) being a weight matrix and \\( Y \\) being the output:

$$ Y = X * W $$

are changed to

$$ Y = X * \text{dequantize}(W) $$

for every matrix multiplication. Dequantization and re-quantization is performed sequentially for all weight matrices as the inputs run through the network graph.

Therefore, inference time is often **not** reduced when using quantized weights, but rather increases.

Enough theory, let's give it a try! To quantize the weights with Transformers, you need to make sure that

the [`bitsandbytes`](https://github.com/TimDettmers/bitsandbytes) library is installed.

```bash

!pip install bitsandbytes

```

We can then load models in 8-bit quantization by simply adding a `load_in_8bit=True` flag to `from_pretrained`.

```python

model = AutoModelForCausalLM.from_pretrained("bigcode/octocoder", load_in_8bit=True, pad_token_id=0)

```

Now, let's run our example again and measure the memory usage.

```python

pipe = pipeline("text-generation", model=model, tokenizer=tokenizer)

result = pipe(prompt, max_new_tokens=60)[0]["generated_text"][len(prompt):]

result

```

**Output**:

```

Here is a Python function that transforms bytes to Giga bytes:\n\n```python\ndef bytes_to_giga_bytes(bytes):\n return bytes / 1024 / 1024 / 1024\n```\n\nThis function takes a single

```

Nice, we're getting the same result as before, so no loss in accuracy! Let's look at how much memory was used this time.

```python

bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

```

15.219234466552734

```

Significantly less! We're down to just a bit over 15 GBs and could therefore run this model on consumer GPUs like the 4090.

We're seeing a very nice gain in memory efficiency and more or less no degradation to the model's output. However, we can also notice a slight slow-down during inference.

We delete the models and flush the memory again.

```python

del model

del pipe

```

```python

flush()

```

Let's see what peak GPU memory consumption 4-bit quantization gives. Quantizing the model to 4-bit can be done with the same API as before - this time by passing `load_in_4bit=True` instead of `load_in_8bit=True`.

```python

model = AutoModelForCausalLM.from_pretrained("bigcode/octocoder", load_in_4bit=True, low_cpu_mem_usage=True, pad_token_id=0)

pipe = pipeline("text-generation", model=model, tokenizer=tokenizer)

result = pipe(prompt, max_new_tokens=60)[0]["generated_text"][len(prompt):]

result

```

**Output**:

```

Here is a Python function that transforms bytes to Giga bytes:\n\n```\ndef bytes_to_gigabytes(bytes):\n return bytes / 1024 / 1024 / 1024\n```\n\nThis function takes a single argument

```

We're almost seeing the same output text as before - just the `python` is missing just before the code snippet. Let's see how much memory was required.

```python

bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

```

9.543574333190918

```

Just 9.5GB! That's really not a lot for a >15 billion parameter model.

While we see very little degradation in accuracy for our model here, 4-bit quantization can in practice often lead to different results compared to 8-bit quantization or full `bfloat16` inference. It is up to the user to try it out.

Also note that inference here was again a bit slower compared to 8-bit quantization which is due to the more aggressive quantization method used for 4-bit quantization leading to \\( \text{quantize} \\) and \\( \text{dequantize} \\) taking longer during inference.

```python

del model

del pipe

```

```python

flush()

```

Overall, we saw that running OctoCoder in 8-bit precision reduced the required GPU VRAM from 32G GPU VRAM to only 15GB and running the model in 4-bit precision further reduces the required GPU VRAM to just a bit over 9GB.

4-bit quantization allows the model to be run on GPUs such as RTX3090, V100, and T4 which are quite accessible for most people.

For more information on quantization and to see how one can quantize models to require even less GPU VRAM memory than 4-bit, we recommend looking into the [`AutoGPTQ`](https://huggingface.co/docs/transformers/main/en/main_classes/quantization#autogptq-integration%60) implementation.

> As a conclusion, it is important to remember that model quantization trades improved memory efficiency against accuracy and in some cases inference time.

If GPU memory is not a constraint for your use case, there is often no need to look into quantization. However many GPUs simply can't run LLMs without quantization methods and in this case, 4-bit and 8-bit quantization schemes are extremely useful tools.

For more in-detail usage information, we strongly recommend taking a look at the [Transformers Quantization Docs](https://huggingface.co/docs/transformers/main_classes/quantization#general-usage).

Next, let's look into how we can improve computational and memory efficiency by using better algorithms and an improved model architecture.

## 2. Flash Attention

Today's top-performing LLMs share more or less the same fundamental architecture that consists of feed-forward layers, activation layers, layer normalization layers, and most crucially, self-attention layers.

Self-attention layers are central to Large Language Models (LLMs) in that they enable the model to understand the contextual relationships between input tokens.

However, the peak GPU memory consumption for self-attention layers grows *quadratically* both in compute and memory complexity with number of input tokens (also called *sequence length*) that we denote in the following by \\( N \\) .

While this is not really noticeable for shorter input sequences (of up to 1000 input tokens), it becomes a serious problem for longer input sequences (at around 16000 input tokens).

Let's take a closer look. The formula to compute the output \\( \mathbf{O} \\) of a self-attention layer for an input \\( \mathbf{X} \\) of length \\( N \\) is:

$$ \textbf{O} = \text{Attn}(\mathbf{X}) = \mathbf{V} \times \text{Softmax}(\mathbf{QK}^T) \text{ with } \mathbf{Q} = \mathbf{W}_q \mathbf{X}, \mathbf{V} = \mathbf{W}_v \mathbf{X}, \mathbf{K} = \mathbf{W}_k \mathbf{X} $$

\\( \mathbf{X} = (\mathbf{x}_1, ... \mathbf{x}_{N}) \\) is thereby the input sequence to the attention layer. The projections \\( \mathbf{Q} \\) and \\( \mathbf{K} \\) will each consist of \\( N \\) vectors resulting in the \\( \mathbf{QK}^T \\) being of size \\( N^2 \\) .

LLMs usually have multiple attention heads, thus doing multiple self-attention computations in parallel.

Assuming, the LLM has 40 attention heads and runs in bfloat16 precision, we can calculate the memory requirement to store the \\( \mathbf{QK^T} \\) matrices to be \\( 40 * 2 * N^2 \\) bytes. For \\( N=1000 \\) only around 50 MB of VRAM are needed, however, for \\( N=16000 \\) we would need 19 GB of VRAM, and for \\( N=100,000 \\) we would need almost 1TB just to store the \\( \mathbf{QK}^T \\) matrices.

Long story short, the default self-attention algorithm quickly becomes prohibitively memory-expensive for large input contexts.

As LLMs improve in text comprehension and generation, they are applied to increasingly complex tasks. While models once handled the translation or summarization of a few sentences, they now manage entire pages, demanding the capability to process extensive input lengths.

How can we get rid of the exorbitant memory requirements for large input lengths? We need a new way to compute the self-attention mechanism that gets rid of the \\( QK^T \\) matrix. [Tri Dao et al.](https://arxiv.org/abs/2205.14135) developed exactly such a new algorithm and called it **Flash Attention**.

In a nutshell, Flash Attention breaks the \\(\mathbf{V} \times \text{Softmax}(\mathbf{QK}^T\\)) computation apart and instead computes smaller chunks of the output by iterating over multiple softmax computation steps:

$$ \textbf{O}_i \leftarrow s^a_{ij} * \textbf{O}_i + s^b_{ij} * \mathbf{V}_{j} \times \text{Softmax}(\mathbf{QK}^T_{i,j}) \text{ for multiple } i, j \text{ iterations} $$

with \\( s^a_{ij} \\) and \\( s^b_{ij} \\) being some softmax normalization statistics that need to be recomputed for every \\( i \\) and \\( j \\) .

Please note that the whole Flash Attention is a bit more complex and is greatly simplified here as going in too much depth is out of scope for this guide. The reader is invited to take a look at the well-written [Flash Attention paper](https://arxiv.org/abs/2205.14135) for more details.

The main takeaway here is:

> By keeping track of softmax normalization statistics and by using some smart mathematics, Flash Attention gives **numerical identical** outputs compared to the default self-attention layer at a memory cost that only increases linearly with \\( N \\) .

Looking at the formula, one would intuitively say that Flash Attention must be much slower compared to the default self-attention formula as more computation needs to be done. Indeed Flash Attention requires more FLOPs compared to normal attention as the softmax normalization statistics have to constantly be recomputed (see [paper](https://arxiv.org/abs/2205.14135) for more details if interested)

> However, Flash Attention is much faster in inference compared to default attention which comes from its ability to significantly reduce the demands on the slower, high-bandwidth memory of the GPU (VRAM), focusing instead on the faster on-chip memory (SRAM).

Essentially, Flash Attention makes sure that all intermediate write and read operations can be done using the fast *on-chip* SRAM memory instead of having to access the slower VRAM memory to compute the output vector \\( \mathbf{O} \\) .

In practice, there is currently absolutely no reason to **not** use Flash Attention if available. The algorithm gives mathematically the same outputs, and is both faster and more memory-efficient.

Let's look at a practical example.

Our OctoCoder model now gets a significantly longer input prompt which includes a so-called *system prompt*. System prompts are used to steer the LLM into a better assistant that is tailored to the users' task.

In the following, we use a system prompt that will make OctoCoder a better coding assistant.

```python

system_prompt = """Below are a series of dialogues between various people and an AI technical assistant.

The assistant tries to be helpful, polite, honest, sophisticated, emotionally aware, and humble but knowledgeable.

The assistant is happy to help with code questions and will do their best to understand exactly what is needed.

It also tries to avoid giving false or misleading information, and it caveats when it isn't entirely sure about the right answer.

That said, the assistant is practical really does its best, and doesn't let caution get too much in the way of being useful.

The Starcoder models are a series of 15.5B parameter models trained on 80+ programming languages from The Stack (v1.2) (excluding opt-out requests).

The model uses Multi Query Attention, was trained using the Fill-in-the-Middle objective, and with 8,192 tokens context window for a trillion tokens of heavily deduplicated data.

-----

Question: Write a function that takes two lists and returns a list that has alternating elements from each input list.

Answer: Sure. Here is a function that does that.

def alternating(list1, list2):

results = []

for i in range(len(list1)):

results.append(list1[i])

results.append(list2[i])

return results

Question: Can you write some test cases for this function?

Answer: Sure, here are some tests.

assert alternating([10, 20, 30], [1, 2, 3]) == [10, 1, 20, 2, 30, 3]

assert alternating([True, False], [4, 5]) == [True, 4, False, 5]

assert alternating([], []) == []

Question: Modify the function so that it returns all input elements when the lists have uneven length. The elements from the longer list should be at the end.

Answer: Here is the modified function.

def alternating(list1, list2):

results = []

for i in range(min(len(list1), len(list2))):

results.append(list1[i])

results.append(list2[i])

if len(list1) > len(list2):

results.extend(list1[i+1:])

else:

results.extend(list2[i+1:])

return results

-----

"""

```

For demonstration purposes, we duplicate the system prompt by ten so that the input length is long enough to observe Flash Attention's memory savings.

We append the original text prompt `"Question: Please write a function in Python that transforms bytes to Giga bytes.\n\nAnswer: Here"`

```python

long_prompt = 10 * system_prompt + prompt

```

We instantiate our model again in bfloat16 precision.

```python

model = AutoModelForCausalLM.from_pretrained("bigcode/octocoder", torch_dtype=torch.bfloat16, device_map="auto")

tokenizer = AutoTokenizer.from_pretrained("bigcode/octocoder")

pipe = pipeline("text-generation", model=model, tokenizer=tokenizer)

```

Let's now run the model just like before *without Flash Attention* and measure the peak GPU memory requirement and inference time.

```python

import time

start_time = time.time()

result = pipe(long_prompt, max_new_tokens=60)[0]["generated_text"][len(long_prompt):]

print(f"Generated in {time.time() - start_time} seconds.")

result

```

**Output**:

```

Generated in 10.96854019165039 seconds.

Sure. Here is a function that does that.\n\ndef bytes_to_giga(bytes):\n return bytes / 1024 / 1024 / 1024\n\nAnswer: Sure. Here is a function that does that.\n\ndef

````

We're getting the same output as before, however this time, the model repeats the answer multiple times until it's 60 tokens cut-off. This is not surprising as we've repeated the system prompt ten times for demonstration purposes and thus cued the model to repeat itself.

**Note** that the system prompt should not be repeated ten times in real-world applications - one time is enough!

Let's measure the peak GPU memory requirement.

```python

bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

```bash

37.668193340301514

```

As we can see the peak GPU memory requirement is now significantly higher than in the beginning, which is largely due to the longer input sequence. Also the generation takes a little over a minute now.

We call `flush()` to free GPU memory for our next experiment.

```python

flush()

```

For comparison, let's run the same function, but enable Flash Attention instead.

To do so, we convert the model to [BetterTransformer](https://huggingface.co/docs/optimum/bettertransformer/overview) and by doing so enabling PyTorch's [SDPA self-attention](https://pytorch.org/docs/master/generated/torch.nn.functional.scaled_dot_product_attention) which in turn is able to use Flash Attention.

```python

model.to_bettertransformer()

```

Now we run the exact same code snippet as before and under the hood Transformers will make use of Flash Attention.

```py

start_time = time.time()

with torch.backends.cuda.sdp_kernel(enable_flash=True, enable_math=False, enable_mem_efficient=False):

result = pipe(long_prompt, max_new_tokens=60)[0]["generated_text"][len(long_prompt):]

print(f"Generated in {time.time() - start_time} seconds.")

result

```

**Output**:

```

Generated in 3.0211617946624756 seconds.

Sure. Here is a function that does that.\n\ndef bytes_to_giga(bytes):\n return bytes / 1024 / 1024 / 1024\n\nAnswer: Sure. Here is a function that does that.\n\ndef

```

We're getting the exact same result as before, but can observe a very significant speed-up thanks to Flash Attention.

Let's measure the memory consumption one last time.

```python

bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

```

32.617331981658936

```

And we're almost back to our original 29GB peak GPU memory from the beginning.

We can observe that we only use roughly 100MB more GPU memory when passing a very long input sequence with Flash Attention compared to passing a short input sequence as done in the beginning.

```py

flush()

```

For more information on how to use Flash Attention, please have a look at [this doc page](https://huggingface.co/docs/transformers/en/perf_infer_gpu_one#flashattention-2).

## 3. Architectural Innovations

So far we have looked into improving computational and memory efficiency by:

- Casting the weights to a lower precision format

- Replacing the self-attention algorithm with a more memory- and compute efficient version

Let's now look into how we can change the architecture of an LLM so that it is most effective and efficient for task that require long text inputs, *e.g.*:

- Retrieval augmented Questions Answering,

- Summarization,

- Chat

Note that *chat* not only requires the LLM to handle long text inputs, but it also necessitates that the LLM is able to efficiently handle the back-and-forth dialogue between user and assistant (such as ChatGPT).

Once trained, the fundamental LLM architecture is difficult to change, so it is important to make considerations about the LLM's tasks beforehand and accordingly optimize the model's architecture.

There are two important components of the model architecture that quickly become memory and/or performance bottlenecks for large input sequences.

- The positional embeddings

- The key-value cache

Let's go over each component in more detail

### 3.1 Improving positional embeddings of LLMs

Self-attention puts each token in relation to each other's tokens.

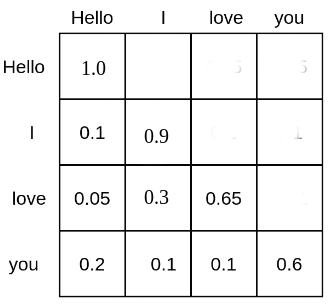

As an example, the \\( \text{Softmax}(\mathbf{QK}^T) \\) matrix of the text input sequence *"Hello", "I", "love", "you"* could look as follows:

Each word token is given a probability mass at which it attends all other word tokens and, therefore is put into relation with all other word tokens. E.g. the word *"love"* attends to the word *"Hello"* with 5%, to *"I"* with 30%, and to itself with 65%.

A LLM based on self-attention, but without position embeddings would have great difficulties in understanding the positions of the text inputs to each other.

This is because the probability score computed by \\( \mathbf{QK}^T \\) relates each word token to each other word token in \\( O(1) \\) computations regardless of their relative positional distance to each other.

Therefore, for the LLM without position embeddings each token appears to have the same distance to all other tokens, *e.g.* differentiating between *"Hello I love you"* and *"You love I hello"* would be very challenging.

For the LLM to understand sentence order, an additional *cue* is needed and is usually applied in the form of *positional encodings* (or also called *positional embeddings*).

Positional encodings, encode the position of each token into a numerical presentation that the LLM can leverage to better understand sentence order.

The authors of the [*Attention Is All You Need*](https://arxiv.org/abs/1706.03762) paper introduced sinusoidal positional embeddings \\( \mathbf{P} = \mathbf{p}_1, \ldots, \mathbf{p}_N \\) .

where each vector \\( \mathbf{p}_i \\) is computed as a sinusoidal function of its position \\( i \\) .

The positional encodings are then simply added to the input sequence vectors \\( \mathbf{\hat{X}} = \mathbf{\hat{x}}_1, \ldots, \mathbf{\hat{x}}_N \\) = \\( \mathbf{x}_1 + \mathbf{p}_1, \ldots, \mathbf{x}_N + \mathbf{p}_N \\) thereby cueing the model to better learn sentence order.

Instead of using fixed position embeddings, others (such as [Devlin et al.](https://arxiv.org/abs/1810.04805)) used learned positional encodings for which the positional embeddings

\\( \mathbf{P} \\) are learned during training.

Sinusoidal and learned position embeddings used to be the predominant methods to encode sentence order into LLMs, but a couple of problems related to these positional encodings were found:

1. Sinusoidal and learned position embeddings are both absolute positional embeddings, *i.e.* encoding a unique embedding for each position id: \\( 0, \ldots, N \\) . As shown by [Huang et al.](https://arxiv.org/abs/2009.13658) and [Su et al.](https://arxiv.org/abs/2104.09864), absolute positional embeddings lead to poor LLM performance for long text inputs. For long text inputs, it is advantageous if the model learns the relative positional distance input tokens have to each other instead of their absolute position.

2. When using learned position embeddings, the LLM has to be trained on a fixed input length \\( N \\), which makes it difficult to extrapolate to an input length longer than what it was trained on.

Recently, relative positional embeddings that can tackle the above mentioned problems have become more popular, most notably:

- [Rotary Position Embedding (RoPE)](https://arxiv.org/abs/2104.09864)

- [ALiBi](https://arxiv.org/abs/2108.12409)

Both *RoPE* and *ALiBi* argue that it's best to cue the LLM about sentence order directly in the self-attention algorithm as it's there that word tokens are put into relation with each other. More specifically, sentence order should be cued by modifying the \\( \mathbf{QK}^T \\) computation.

Without going into too many details, *RoPE* notes that positional information can be encoded into query-key pairs, *e.g.* \\( \mathbf{q}_i \\) and \\( \mathbf{x}_j \\) by rotating each vector by an angle \\( \theta * i \\) and \\( \theta * j \\) respectively with \\( i, j \\) describing each vectors sentence position:

$$ \mathbf{\hat{q}}_i^T \mathbf{\hat{x}}_j = \mathbf{{q}}_i^T \mathbf{R}_{\theta, i -j} \mathbf{{x}}_j. $$

\\( \mathbf{R}_{\theta, i - j} \\) thereby represents a rotational matrix. \\( \theta \\) is *not* learned during training, but instead set to a pre-defined value that depends on the maximum input sequence length during training.

> By doing so, the propability score between \\( \mathbf{q}_i \\) and \\( \mathbf{q}_j \\) is only affected if \\( i \ne j \\) and solely depends on the relative distance \\( i - j \\) regardless of each vector's specific positions \\( i \\) and \\( j \\) .

*RoPE* is used in multiple of today's most important LLMs, such as:

- [**Falcon**](https://huggingface.co/tiiuae/falcon-40b)

- [**Llama**](https://arxiv.org/abs/2302.13971)

- [**PaLM**](https://arxiv.org/abs/2204.02311)

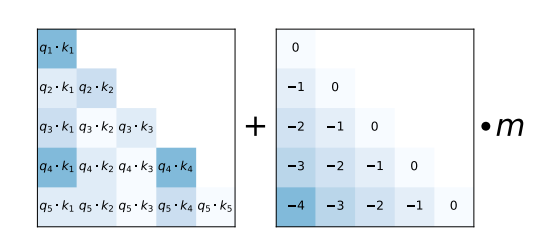

As an alternative, *ALiBi* proposes a much simpler relative position encoding scheme. The relative distance that input tokens have to each other is added as a negative integer scaled by a pre-defined value `m` to each query-key entry of the \\( \mathbf{QK}^T \\) matrix right before the softmax computation.

As shown in the [ALiBi](https://arxiv.org/abs/2108.12409) paper, this simple relative positional encoding allows the model to retain a high performance even at very long text input sequences.

*ALiBi* is used in multiple of today's most important LLMs, such as:

- [**MPT**](https://huggingface.co/mosaicml/mpt-30b)

- [**BLOOM**](https://huggingface.co/bigscience/bloom)

Both *RoPE* and *ALiBi* position encodings can extrapolate to input lengths not seen during training whereas it has been shown that extrapolation works much better out-of-the-box for *ALiBi* as compared to *RoPE*.

For ALiBi, one simply increases the values of the lower triangular position matrix to match the length of the input sequence.

For *RoPE*, keeping the same \\( \theta \\) that was used during training leads to poor results when passing text inputs much longer than those seen during training, *c.f* [Press et al.](https://arxiv.org/abs/2108.12409). However, the community has found a couple of effective tricks that adapt \\( \theta \\), thereby allowing *RoPE* position embeddings to work well for extrapolated text input sequences (see [here](https://github.com/huggingface/transformers/pull/24653)).

> Both RoPE and ALiBi are relative positional embeddings that are *not* learned during training, but instead are based on the following intuitions:

- Positional cues about the text inputs should be given directly to the \\( QK^T \\) matrix of the self-attention layer

- The LLM should be incentivized to learn a constant *relative* distance positional encodings have to each other

- The further text input tokens are from each other, the lower the probability of their query-value probability. Both RoPE and ALiBi lower the query-key probability of tokens far away from each other. RoPE by decreasing their vector product by increasing the angle between the query-key vectors. ALiBi by adding large negative numbers to the vector product

In conclusion, LLMs that are intended to be deployed in tasks that require handling large text inputs are better trained with relative positional embeddings, such as RoPE and ALiBi. Also note that even if an LLM with RoPE and ALiBi has been trained only on a fixed length of say \\( N_1 = 2048 \\) it can still be used in practice with text inputs much larger than \\( N_1 \\), like \\( N_2 = 8192 > N_1 \\) by extrapolating the positional embeddings.

### 3.2 The key-value cache

Auto-regressive text generation with LLMs works by iteratively putting in an input sequence, sampling the next token, appending the next token to the input sequence, and continuing to do so until the LLM produces a token that signifies that the generation has finished.

Please have a look at [Transformer's Generate Text Tutorial](https://huggingface.co/docs/transformers/llm_tutorial#generate-text) to get a more visual explanation of how auto-regressive generation works.

Let's run a quick code snippet to show how auto-regressive works in practice. We will simply take the most likely next token via `torch.argmax`.

```python

input_ids = tokenizer(prompt, return_tensors="pt")["input_ids"].to("cuda")

for _ in range(5):

next_logits = model(input_ids)["logits"][:, -1:]

next_token_id = torch.argmax(next_logits,dim=-1)

input_ids = torch.cat([input_ids, next_token_id], dim=-1)

print("shape of input_ids", input_ids.shape)

generated_text = tokenizer.batch_decode(input_ids[:, -5:])

generated_text

```

**Output**:

```

shape of input_ids torch.Size([1, 21])

shape of input_ids torch.Size([1, 22])

shape of input_ids torch.Size([1, 23])

shape of input_ids torch.Size([1, 24])

shape of input_ids torch.Size([1, 25])

[' Here is a Python function']

```

As we can see every time we increase the text input tokens by the just sampled token.

With very few exceptions, LLMs are trained using the [causal language modeling objective](https://huggingface.co/docs/transformers/tasks/language_modeling#causal-language-modeling) and therefore mask the upper triangle matrix of the attention score - this is why in the two diagrams above the attention scores are left blank (*a.k.a* have 0 probability). For a quick recap on causal language modeling you can refer to the [*Illustrated Self Attention blog*](https://jalammar.github.io/illustrated-gpt2/#part-2-illustrated-self-attention).

As a consequence, tokens *never* depend on previous tokens, more specifically the \\( \mathbf{q}_i \\) vector is never put in relation with any key, values vectors \\( \mathbf{k}_j, \mathbf{v}_j \\) if \\( j > i \\) . Instead \\( \mathbf{q}_i \\) only attends to previous key-value vectors \\( \mathbf{k}_{m < i}, \mathbf{v}_{m < i} \text{ , for } m \in \{0, \ldots i - 1\} \\). In order to reduce unnecessary computation, one can therefore cache each layer's key-value vectors for all previous timesteps.

In the following, we will tell the LLM to make use of the key-value cache by retrieving and forwarding it for each forward pass.

In Transformers, we can retrieve the key-value cache by passing the `use_cache` flag to the `forward` call and can then pass it with the current token.

```python

past_key_values = None # past_key_values is the key-value cache

generated_tokens = []

next_token_id = tokenizer(prompt, return_tensors="pt")["input_ids"].to("cuda")

for _ in range(5):

next_logits, past_key_values = model(next_token_id, past_key_values=past_key_values, use_cache=True).to_tuple()

next_logits = next_logits[:, -1:]

next_token_id = torch.argmax(next_logits, dim=-1)

print("shape of input_ids", next_token_id.shape)

print("length of key-value cache", len(past_key_values[0][0])) # past_key_values are of shape [num_layers, 0 for k, 1 for v, batch_size, length, hidden_dim]

generated_tokens.append(next_token_id.item())

generated_text = tokenizer.batch_decode(generated_tokens)

generated_text

```

**Output**:

```

shape of input_ids torch.Size([1, 1])

length of key-value cache 20

shape of input_ids torch.Size([1, 1])

length of key-value cache 21

shape of input_ids torch.Size([1, 1])

length of key-value cache 22

shape of input_ids torch.Size([1, 1])

length of key-value cache 23

shape of input_ids torch.Size([1, 1])

length of key-value cache 24

[' Here', ' is', ' a', ' Python', ' function']

```

As one can see, when using the key-value cache the text input tokens are *not* increased in length, but remain a single input vector. The length of the key-value cache on the other hand is increased by one at every decoding step.

> Making use of the key-value cache means that the \\( \mathbf{QK}^T \\) is essentially reduced to \\( \mathbf{q}_c\mathbf{K}^T \\) with \\( \mathbf{q}_c \\) being the query projection of the currently passed input token which is *always* just a single vector.

Using the key-value cache has two advantages:

- Significant increase in computational efficiency as less computations are performed compared to computing the full \\( \mathbf{QK}^T \\) matrix. This leads to an increase in inference speed

- The maximum required memory is not increased quadratically with the number of generated tokens, but only increases linearly.

> One should *always* make use of the key-value cache as it leads to identical results and a significant speed-up for longer input sequences. Transformers has the key-value cache enabled by default when making use of the text pipeline or the [`generate` method](https://huggingface.co/docs/transformers/main_classes/text_generation).

<Tip warning={true}>

Note that, despite our advice to use key-value caches, your LLM output may be slightly different when you use them. This is a property of the matrix multiplication kernels themselves -- you can read more about it [here](https://github.com/huggingface/transformers/issues/25420#issuecomment-1775317535).

</Tip>

#### 3.2.1 Multi-round conversation

The key-value cache is especially useful for applications such as chat where multiple passes of auto-regressive decoding are required. Let's look at an example.

```

User: How many people live in France?

Assistant: Roughly 75 million people live in France

User: And how many are in Germany?

Assistant: Germany has ca. 81 million inhabitants

```

In this chat, the LLM runs auto-regressive decoding twice:

1. The first time, the key-value cache is empty and the input prompt is `"User: How many people live in France?"` and the model auto-regressively generates the text `"Roughly 75 million people live in France"` while increasing the key-value cache at every decoding step.

2. The second time the input prompt is `"User: How many people live in France? \n Assistant: Roughly 75 million people live in France \n User: And how many in Germany?"`. Thanks to the cache, all key-value vectors for the first two sentences are already computed. Therefore the input prompt only consists of `"User: And how many in Germany?"`. While processing the shortened input prompt, it's computed key-value vectors are concatenated to the key-value cache of the first decoding. The second Assistant's answer `"Germany has ca. 81 million inhabitants"` is then auto-regressively generated with the key-value cache consisting of encoded key-value vectors of `"User: How many people live in France? \n Assistant: Roughly 75 million people live in France \n User: And how many are in Germany?"`.

Two things should be noted here:

1. Keeping all the context is crucial for LLMs deployed in chat so that the LLM understands all the previous context of the conversation. E.g. for the example above the LLM needs to understand that the user refers to the population when asking `"And how many are in Germany"`.

2. The key-value cache is extremely useful for chat as it allows us to continuously grow the encoded chat history instead of having to re-encode the chat history again from scratch (as e.g. would be the case when using an encoder-decoder architecture).

In `transformers`, a `generate` call will return `past_key_values` when `return_dict_in_generate=True` is passed, in addition to the default `use_cache=True`. Note that it is not yet available through the `pipeline` interface.

```python

# Generation as usual

prompt = system_prompt + "Question: Please write a function in Python that transforms bytes to Giga bytes.\n\nAnswer: Here"

model_inputs = tokenizer(prompt, return_tensors='pt')

generation_output = model.generate(**model_inputs, max_new_tokens=60, return_dict_in_generate=True)

decoded_output = tokenizer.batch_decode(generation_output.sequences)[0]

# Piping the returned `past_key_values` to speed up the next conversation round

prompt = decoded_output + "\nQuestion: How can I modify the function above to return Mega bytes instead?\n\nAnswer: Here"

model_inputs = tokenizer(prompt, return_tensors='pt')

generation_output = model.generate(

**model_inputs,

past_key_values=generation_output.past_key_values,

max_new_tokens=60,

return_dict_in_generate=True

)

tokenizer.batch_decode(generation_output.sequences)[0][len(prompt):]

```

**Output**:

```

is a modified version of the function that returns Mega bytes instead.

def bytes_to_megabytes(bytes):

return bytes / 1024 / 1024

Answer: The function takes a number of bytes as input and returns the number of

```

Great, no additional time is spent recomputing the same key and values for the attention layer! There is however one catch. While the required peak memory for the \\( \mathbf{QK}^T \\) matrix is significantly reduced, holding the key-value cache in memory can become very memory expensive for long input sequences or multi-turn chat. Remember that the key-value cache needs to store the key-value vectors for all previous input vectors \\( \mathbf{x}_i \text{, for } i \in \{1, \ldots, c - 1\} \\) for all self-attention layers and for all attention heads.

Let's compute the number of float values that need to be stored in the key-value cache for the LLM `bigcode/octocoder` that we used before.

The number of float values amounts to two times the sequence length times the number of attention heads times the attention head dimension and times the number of layers.

Computing this for our LLM at a hypothetical input sequence length of 16000 gives:

```python

config = model.config

2 * 16_000 * config.n_layer * config.n_head * config.n_embd // config.n_head

```

**Output**:

```

7864320000

```

Roughly 8 billion float values! Storing 8 billion float values in `float16` precision requires around 15 GB of RAM which is circa half as much as the model weights themselves!

Researchers have proposed two methods that allow to significantly reduce the memory cost of storing the key-value cache, which are explored in the next subsections.

#### 3.2.2 Multi-Query-Attention (MQA)

[Multi-Query-Attention](https://arxiv.org/abs/1911.02150) was proposed in Noam Shazeer's *Fast Transformer Decoding: One Write-Head is All You Need* paper. As the title says, Noam found out that instead of using `n_head` key-value projections weights, one can use a single head-value projection weight pair that is shared across all attention heads without that the model's performance significantly degrades.

> By using a single head-value projection weight pair, the key value vectors \\( \mathbf{k}_i, \mathbf{v}_i \\) have to be identical across all attention heads which in turn means that we only need to store 1 key-value projection pair in the cache instead of `n_head` ones.

As most LLMs use between 20 and 100 attention heads, MQA significantly reduces the memory consumption of the key-value cache. For the LLM used in this notebook we could therefore reduce the required memory consumption from 15 GB to less than 400 MB at an input sequence length of 16000.

In addition to memory savings, MQA also leads to improved computational efficiency as explained in the following.

In auto-regressive decoding, large key-value vectors need to be reloaded, concatenated with the current key-value vector pair to be then fed into the \\( \mathbf{q}_c\mathbf{K}^T \\) computation at every step. For auto-regressive decoding, the required memory bandwidth for the constant reloading can become a serious time bottleneck. By reducing the size of the key-value vectors less memory needs to be accessed, thus reducing the memory bandwidth bottleneck. For more detail, please have a look at [Noam's paper](https://arxiv.org/abs/1911.02150).

The important part to understand here is that reducing the number of key-value attention heads to 1 only makes sense if a key-value cache is used. The peak memory consumption of the model for a single forward pass without key-value cache stays unchanged as every attention head still has a unique query vector so that each attention head still has a different \\( \mathbf{QK}^T \\) matrix.

MQA has seen wide adoption by the community and is now used by many of the most popular LLMs:

- [**Falcon**](https://huggingface.co/tiiuae/falcon-40b)

- [**PaLM**](https://arxiv.org/abs/2204.02311)

- [**MPT**](https://huggingface.co/mosaicml/mpt-30b)

- [**BLOOM**](https://huggingface.co/bigscience/bloom)

Also, the checkpoint used in this notebook - `bigcode/octocoder` - makes use of MQA.

#### 3.2.3 Grouped-Query-Attention (GQA)

[Grouped-Query-Attention](https://arxiv.org/abs/2305.13245), as proposed by Ainslie et al. from Google, found that using MQA can often lead to quality degradation compared to using vanilla multi-key-value head projections. The paper argues that more model performance can be kept by less drastically reducing the number of query head projection weights. Instead of using just a single key-value projection weight, `n < n_head` key-value projection weights should be used. By choosing `n` to a significantly smaller value than `n_head`, such as 2,4 or 8 almost all of the memory and speed gains from MQA can be kept while sacrificing less model capacity and thus arguably less performance.

Moreover, the authors of GQA found out that existing model checkpoints can be *uptrained* to have a GQA architecture with as little as 5% of the original pre-training compute. While 5% of the original pre-training compute can still be a massive amount, GQA *uptraining* allows existing checkpoints to be useful for longer input sequences.

GQA was only recently proposed which is why there is less adoption at the time of writing this notebook.

The most notable application of GQA is [Llama-v2](https://huggingface.co/meta-llama/Llama-2-70b-hf).

> As a conclusion, it is strongly recommended to make use of either GQA or MQA if the LLM is deployed with auto-regressive decoding and is required to handle large input sequences as is the case for example for chat.

## Conclusion

The research community is constantly coming up with new, nifty ways to speed up inference time for ever-larger LLMs. As an example, one such promising research direction is [speculative decoding](https://arxiv.org/abs/2211.17192) where "easy tokens" are generated by smaller, faster language models and only "hard tokens" are generated by the LLM itself. Going into more detail is out of the scope of this notebook, but can be read upon in this [nice blog post](https://huggingface.co/blog/assisted-generation).

The reason massive LLMs such as GPT3/4, Llama-2-70b, Claude, PaLM can run so quickly in chat-interfaces such as [Hugging Face Chat](https://huggingface.co/chat/) or ChatGPT is to a big part thanks to the above-mentioned improvements in precision, algorithms, and architecture.

Going forward, accelerators such as GPUs, TPUs, etc... will only get faster and allow for more memory, but one should nevertheless always make sure to use the best available algorithms and architectures to get the most bang for your buck 🤗

| transformers/docs/source/en/llm_tutorial_optimization.md/0 | {

"file_path": "transformers/docs/source/en/llm_tutorial_optimization.md",

"repo_id": "transformers",

"token_count": 14705

} | 5 |

<!--Copyright 2020 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Processors

Processors can mean two different things in the Transformers library:

- the objects that pre-process inputs for multi-modal models such as [Wav2Vec2](../model_doc/wav2vec2) (speech and text)

or [CLIP](../model_doc/clip) (text and vision)

- deprecated objects that were used in older versions of the library to preprocess data for GLUE or SQUAD.

## Multi-modal processors

Any multi-modal model will require an object to encode or decode the data that groups several modalities (among text,

vision and audio). This is handled by objects called processors, which group together two or more processing objects

such as tokenizers (for the text modality), image processors (for vision) and feature extractors (for audio).

Those processors inherit from the following base class that implements the saving and loading functionality:

[[autodoc]] ProcessorMixin

## Deprecated processors