Upload 11 files

- .gitattributes +2 -0

- FaceSwap.py +146 -0

- README.md +140 -3

- display.png +3 -0

- images/input_images/input1.png +0 -0

- images/input_images/input2.jpg +0 -0

- images/output_images/output1.png +0 -0

- images/output_images/output2.png +3 -0

- images/source_face_images/face1.jpg +0 -0

- images/source_face_images/face2.jpg +0 -0

- inswapper_128.onnx +3 -0

- node.png +0 -0

.gitattributes

CHANGED

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+display.png filter=lfs diff=lfs merge=lfs -text
+images/output_images/output2.png filter=lfs diff=lfs merge=lfs -text
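The two added rules route `display.png` and `images/output_images/output2.png` through Git LFS. As a rough illustration, the sketch below lists which patterns carry `filter=lfs`. It assumes a simplified reading of `.gitattributes` (one pattern followed by whitespace-separated attributes, no quoting, no macro attributes), and the function name `lfs_patterns` is hypothetical.

```python
def lfs_patterns(gitattributes_text):
    """Return the patterns whose attribute list contains filter=lfs.

    Simplified on purpose: assumes patterns contain no spaces and
    ignores quoting and macro attributes.
    """
    patterns = []
    for line in gitattributes_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        pattern, *attrs = line.split()
        if "filter=lfs" in attrs:
            patterns.append(pattern)
    return patterns


# The context lines and the two additions from the hunk above.
sample = """\
*.zip filter=lfs diff=lfs merge=lfs -text
*.zst filter=lfs diff=lfs merge=lfs -text
*tfevents* filter=lfs diff=lfs merge=lfs -text
display.png filter=lfs diff=lfs merge=lfs -text
images/output_images/output2.png filter=lfs diff=lfs merge=lfs -text
"""

print(lfs_patterns(sample))
```

This only reads the attribute lines; it does not reproduce Git's own pattern-matching rules against file paths.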

FaceSwap.py

ADDED

@@ -0,0 +1,146 @@
+#This is an example that uses the websockets api to know when a prompt execution is done
+#Once the prompt execution is done it downloads the images using the /history endpoint
+
+import websocket #NOTE: websocket-client (https://github.com/websocket-client/websocket-client)
+import uuid
+import json
+import urllib.request
+import urllib.parse
+
+server_address = "127.0.0.1:8188"
+client_id = str(uuid.uuid4())
+
+def queue_prompt(prompt):
+    p = {"prompt": prompt, "client_id": client_id}
+    data = json.dumps(p).encode('utf-8')
+    req = urllib.request.Request("http://{}/prompt".format(server_address), data=data)
+    return json.loads(urllib.request.urlopen(req).read())
+
+def get_image(filename, subfolder, folder_type):
+    data = {"filename": filename, "subfolder": subfolder, "type": folder_type}
+    url_values = urllib.parse.urlencode(data)
+    with urllib.request.urlopen("http://{}/view?{}".format(server_address, url_values)) as response:
+        return response.read()
+
+def get_history(prompt_id):
+    with urllib.request.urlopen("http://{}/history/{}".format(server_address, prompt_id)) as response:
+        return json.loads(response.read())
+
+def get_images(ws, prompt):
+    prompt_id = queue_prompt(prompt)['prompt_id']
+    output_images = {}
+    while True:
+        out = ws.recv()
+        if isinstance(out, str):
+            message = json.loads(out)
+            if message['type'] == 'executing':
+                data = message['data']
+                if data['node'] is None and data['prompt_id'] == prompt_id:
+                    break #Execution is done
+        else:
+            continue #previews are binary data
+
+    history = get_history(prompt_id)[prompt_id]
+    for node_id in history['outputs']:
+        node_output = history['outputs'][node_id]
+        images_output = []
+        if 'images' in node_output:
+            for image in node_output['images']:
+                image_data = get_image(image['filename'], image['subfolder'], image['type'])
+                images_output.append(image_data)
+        output_images[node_id] = images_output
+
+    return output_images
+
+prompt_text = """
+{
+  "1": {
+    "inputs": {
+      "image": "/home/ml/Desktop/comfy_to_python/output.jpg",
+      "upload": "image"
+    },
+    "class_type": "LoadImage",
+    "_meta": {
+      "title": "Load Image"
+    }
+  },
+  "2": {
+    "inputs": {
+      "image": "/home/ml/Desktop/comfy_to_python/me.jpg",
+      "upload": "image"
+    },
+    "class_type": "LoadImage",
+    "_meta": {
+      "title": "Load Image"
+    }
+  },
+  "4": {
+    "inputs": {
+      "images": [
+        "5",
+        0
+      ]
+    },
+    "class_type": "PreviewImage",
+    "_meta": {
+      "title": "Preview Image"
+    }
+  },
+  "5": {
+    "inputs": {
+      "enabled": true,
+      "swap_model": "inswapper_128.onnx",
+      "facedetection": "YOLOv5l",
+      "face_restore_model": "none",
+      "face_restore_visibility": 1,
+      "codeformer_weight": 1,
+      "detect_gender_input": "no",
+      "detect_gender_source": "no",
+      "input_faces_index": "0",
+      "source_faces_index": "0",
+      "console_log_level": 1,
+      "input_image": [
+        "1",
+        0
+      ],
+      "source_image": [
+        "2",
+        0
+      ]
+    },
+    "class_type": "ReActorFaceSwap",
+    "_meta": {
+      "title": "ReActor 🌌 Fast Face Swap"
+    }
+  }
+}
+"""
+
+prompt = json.loads(prompt_text)
+# #set the text prompt for our positive CLIPTextEncode
+# prompt["6"]["inputs"]["text"] = "your instruction here"
+
+prompt["1"]["inputs"]["image"] = "/home/ml/Desktop/comfy_to_python/66.jpg"
+prompt["2"]["inputs"]["image"] = "/home/ml/Desktop/comfy_to_python/me.jpg"
+
+# # If you have a group input face image change the number here (1,2,3,..) if single then put 0.
+# prompt["5"]["inputs"]["input_faces_index"] = ""
+
+# # If you have a group source face image change the number here (1,2,3,..) if single then put 0.
+# prompt["5"]["inputs"]["source_faces_index"] = ""
+
+ws = websocket.WebSocket()
+ws.connect("ws://{}/ws?clientId={}".format(server_address, client_id))
+images = get_images(ws, prompt)
+
+# Commented out code to display the output images:
+
+for node_id in images:
+    for image_data in images[node_id]:
+        from PIL import Image
+        import io
+        image = Image.open(io.BytesIO(image_data))
+        image.save("output1.jpg")
+        # image.show()
+
+
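The script above embeds the workflow as a JSON string, detects completion from the `executing` websocket message, and writes every result to the same `output1.jpg`, so later images overwrite earlier ones. The sketch below shows one way those three pieces could be isolated so they can be exercised without a running server. The helper names `build_faceswap_prompt`, `is_execution_done`, and `output_filename` are hypothetical and not part of the commit; the node IDs, input names, and message fields are copied from the script.

```python
import json


def build_faceswap_prompt(input_image, source_image,
                          input_faces_index="0", source_faces_index="0"):
    """Return the workflow from FaceSwap.py as a dict (hypothetical helper)."""
    return {
        "1": {"inputs": {"image": input_image, "upload": "image"},
              "class_type": "LoadImage",
              "_meta": {"title": "Load Image"}},
        "2": {"inputs": {"image": source_image, "upload": "image"},
              "class_type": "LoadImage",
              "_meta": {"title": "Load Image"}},
        "4": {"inputs": {"images": ["5", 0]},
              "class_type": "PreviewImage",
              "_meta": {"title": "Preview Image"}},
        "5": {
            "inputs": {
                "enabled": True,
                "swap_model": "inswapper_128.onnx",
                "facedetection": "YOLOv5l",
                "face_restore_model": "none",
                "face_restore_visibility": 1,
                "codeformer_weight": 1,
                "detect_gender_input": "no",
                "detect_gender_source": "no",
                "input_faces_index": input_faces_index,
                "source_faces_index": source_faces_index,
                "console_log_level": 1,
                "input_image": ["1", 0],    # output 0 of node "1"
                "source_image": ["2", 0],   # output 0 of node "2"
            },
            "class_type": "ReActorFaceSwap",
            "_meta": {"title": "ReActor 🌌 Fast Face Swap"},
        },
    }


def is_execution_done(raw_message, prompt_id):
    """Mirror the loop in get_images: True once prompt_id has finished."""
    if not isinstance(raw_message, str):
        return False  # binary frames are previews
    message = json.loads(raw_message)
    if message.get("type") != "executing":
        return False
    data = message["data"]
    return data["node"] is None and data["prompt_id"] == prompt_id


def output_filename(node_id, index, extension="jpg"):
    """Give each image its own name instead of reusing output1.jpg."""
    return f"output_node{node_id}_{index}.{extension}"


prompt = build_faceswap_prompt("input.jpg", "face.jpg")
print(prompt["5"]["inputs"]["input_image"])

done = json.dumps({"type": "executing",
                   "data": {"node": None, "prompt_id": "abc"}})
print(is_execution_done(done, "abc"))
print(output_filename("4", 0))
```

In the script, the final loop could then call `image.save(output_filename(node_id, i))` with `i` taken from `enumerate(images[node_id])`.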

README.md

CHANGED

|

@@ -1,3 +1,140 @@

|

|

| 1 |

-

---

|

| 2 |

-

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

language:

|

| 3 |

+

- en

|

| 4 |

+

tags:

|

| 5 |

+

- FaceSwap

|

| 6 |

+

- Headshot

|

| 7 |

+

- SmoothChange

|

| 8 |

+

- FaceChange

|

| 9 |

+

- SmoothSwap

|

| 10 |

+

- SwapFace

|

| 11 |

+

- FaceEditing

|

| 12 |

+

|

| 13 |

+

license: apache-2.0

|

| 14 |

+

---

|

| 15 |

+

# FaceSwap - AI-Powered Headshot Generator

|

| 16 |

+

|

| 17 |

+

**FaceSwap** is a flow designed to seamlessly swap faces between two images using deep learning models. This project is built on top of ComfyUI, a modular and flexible interface that enables easy integration and customization of AI workflows. By leveraging advanced facial recognition and manipulation technologies, FaceSwap allows users to replace one face with another in images while maintaining realistic features and alignment.

|

| 18 |

+

|

| 19 |

+

FaceSwap utilizes models to detect key facial landmarks, map them to the target face, and generate a realistic face swap by considering aspects like lighting, expression, and pose. This flow is designed to provide an easy-to-use interface through ComfyUI, allowing users to swap faces effortlessly with minimal setup.

|

| 20 |

+

|

| 21 |

+

<div align="center">

|

| 22 |

+

<img width="600" height="500" alt="foduucom/FaceSwap" src="https://huggingface.co/foduucom/Headshot_Generator-FaceSwap/resolve/main/display.png">

|

| 23 |

+

</div>

|

| 24 |

+

|

| 25 |

+

## Requirements

|

| 26 |

+

|

| 27 |

+

To run **FaceSwap**, you'll need to install **ComfyUI** and set up a few dependencies. Follow the steps below for installation and setup.

|

| 28 |

+

|

| 29 |

+

### Prerequisites

|

| 30 |

+

- **Python 3.9 or higher** (Ensure Python 3.9+ is installed on your machine)

|

| 31 |

+

- **NVIDIA GPU** (Recommended for faster processing, though CPU can also be used)

|

| 32 |

+

|

| 33 |

+

---

|

| 34 |

+

|

| 35 |

+

## Step-by-Step Installation

|

| 36 |

+

|

| 37 |

+

1. Clone the ComfyUI Repository

|

| 38 |

+

Start by cloning the main ComfyUI repository:

|

| 39 |

+

|

| 40 |

+

```bash

|

| 41 |

+

git clone https://github.com/comfyanonymous/ComfyUI.git

|

| 42 |

+

cd ComfyUI

|

| 43 |

+

```

|

| 44 |

+

|

| 45 |

+

2. Install Dependencies

|

| 46 |

+

Install all the required dependencies for ComfyUI:

|

| 47 |

+

|

| 48 |

+

```bash

|

| 49 |

+

pip install -r requirements.txt

|

| 50 |

+

```

|

| 51 |

+

|

| 52 |

+

3. Install **ComfyUI-Manager**

|

| 53 |

+

To manage custom nodes, you'll need to install ComfyUI-Manager. Run the following commands inside the **ComfyUI/custom_nodes** directory:

|

| 54 |

+

|

| 55 |

+

```bash

|

| 56 |

+

cd ComfyUI/custom_nodes

|

| 57 |

+

git clone https://github.com/ltdrdata/ComfyUI-Manager.git

|

| 58 |

+

```

|

| 59 |

+

|

| 60 |

+

After cloning the ComfyUI-Manager, **restart ComfyUI**.

|

| 61 |

+

|

| 62 |

+

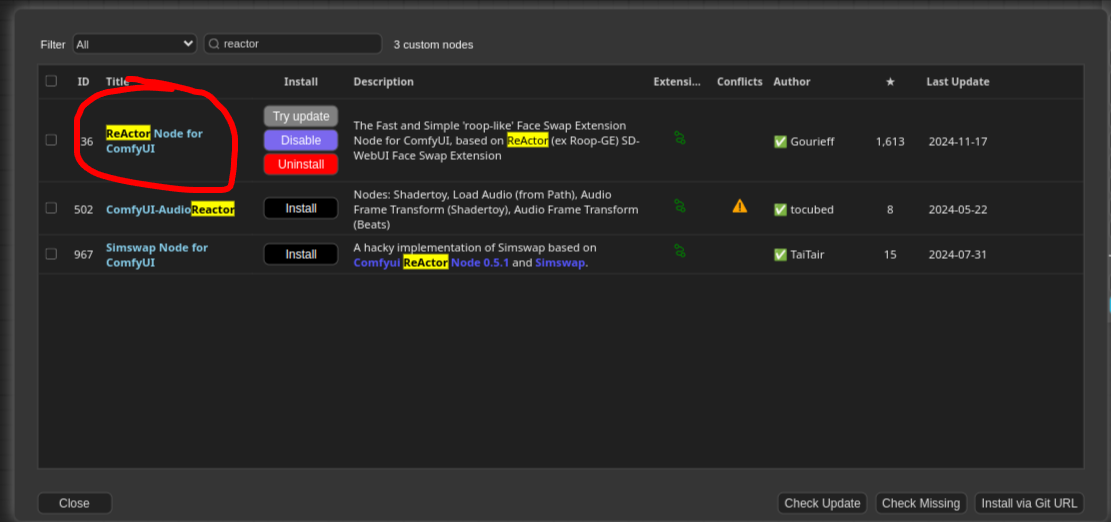

4. Install **Reactor Node for ComfyUI** by clicking on Manager -> Custom Nodes Manage

|

| 63 |

+

<div align="center">

|

| 64 |

+

<img width="600" height="500" alt="foduucom/FaceSwap" src="https://huggingface.co/foduucom/Headshot_Generator-FaceSwap/resolve/main/node.png">

|

| 65 |

+

</div>

|

| 66 |

+

|

| 67 |

+

or you can clone the repository in the directory **ComfyUI/custom_nodes**

|

| 68 |

+

```bash

|

| 69 |

+

git clone https://github.com/Gourieff/comfyui-reactor-node.git

|

| 70 |

+

```

|

| 71 |

+

|

| 72 |

+

5. Download Pre-trained Models

|

| 73 |

+

Download **inswapper_128.onnx** and place the downloaded model in the ComfyUI/models/insightface/ directory

|

| 74 |

+

|

| 75 |

+

Note: If directory don't exist, create them manually.

|

| 76 |

+

|

| 77 |

+

4. Start ComfyUI

|

| 78 |

+

Run ComfyUI by executing the following command:

|

| 79 |

+

|

| 80 |

+

```bash

|

| 81 |

+

python3 FaceSwap.py

|

| 82 |

+

```

|

| 83 |

+

This will start ComfyUI, and you can access the interface by navigating to http://127.0.0.1:8188 in your browser.

|

| 84 |

+

|

| 85 |

+

## Setting Up and Using the FaceSwap Workflow

|

| 86 |

+

|

| 87 |

+

1. Clone the Headshot_Generator-FaceSwap Repository

|

| 88 |

+

Clone this repository that contains the FaceSwap workflow and assets:

|

| 89 |

+

|

| 90 |

+

```bash

|

| 91 |

+

git clone https://huggingface.co/foduucom/Headshot_Generator-FaceSwap

|

| 92 |

+

cd Headshot_Generator-FaceSwap

|

| 93 |

+

```

|

| 94 |

+

|

| 95 |

+

2. How to use

|

| 96 |

+

Once ComfyUI is running, open the browser window (default: http://127.0.0.1:8188), and follow these steps:

|

| 97 |

+

|

| 98 |

+

- Click on the **Load button** in the menu bar and select the **workflow.json** file from this repo you just cloned.

|

| 99 |

+

- Upload the **Workflow.json** to start the face-swapping process.

|

| 100 |

+

- Provide Input Images

|

| 101 |

+

- **Source Image:** The image containing the face you want to swap.

|

| 102 |

+

- **Target Image:** The image where the face will be swapped into.

|

| 103 |

+

- Click **Queue Prompt** to initiate the face-swapping process. The AI model will process the images and generate the output.

|

| 104 |

+

|

| 105 |

+

or you can use by python script provided in this repository:

|

| 106 |

+

```bash

|

| 107 |

+

python3 FaceSwap.py

|

| 108 |

+

|

| 109 |

+

#Remember change the input paths in script here :

|

| 110 |

+

#prompt["1"]["inputs"]["image"] = "//put your input image"

|

| 111 |

+

#prompt["2"]["inputs"]["image"] = "//put your source face image"

|

| 112 |

+

|

| 113 |

+

```

|

| 114 |

+

|

| 115 |

+

## Troubleshooting

|

| 116 |

+

- ComfyUI Not Running: Ensure that all dependencies are installed correctly and that you’re using Python 3.9 or higher. If issues persist, check the ComfyUI GitHub repository for troubleshooting guides.

|

| 117 |

+

|

| 118 |

+

- Missing Models: Ensure you’ve downloaded the required models (sam_vit_h_4b8939.pth and groundingdino_swint_ogc.pth) and placed them in the correct directories (ComfyUI/models/sams/ and ComfyUI/models/grounding-dino/).

|

| 119 |

+

|

| 120 |

+

## Slow Performance:

|

| 121 |

+

Using a GPU is highly recommended for better performance. If you’re using a CPU, the processing time may be longer.

|

| 122 |

+

|

| 123 |

+

## Compute Infrastructure

|

| 124 |

+

|

| 125 |

+

## Hardware

|

| 126 |

+

|

| 127 |

+

NVIDIA GeForce RTX 3060 card

|

| 128 |

+

|

| 129 |

+

## Model Card Contact

|

| 130 |

+

|

| 131 |

+

For inquiries and contributions, please contact us at [email protected].

|

| 132 |

+

|

| 133 |

+

```bibtex

|

| 134 |

+

@ModelCard{

|

| 135 |

+

author = {Nehul Agrawal and

|

| 136 |

+

Priyal Mehta},

|

| 137 |

+

title = {FaceSwap - AI-Powered Face Swap / Headshot Generator},

|

| 138 |

+

year = {2024}

|

| 139 |

+

}

|

| 140 |

+

```

|
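The README's model download step depends on `inswapper_128.onnx` being in `ComfyUI/models/insightface/`, with the directory created by hand if it is missing. A minimal sketch of that check follows, assuming you pass in the path where ComfyUI was cloned. The function name `check_model` is hypothetical, and the demo uses a temporary directory as a stand-in for a real ComfyUI checkout.

```python
import tempfile
from pathlib import Path


def check_model(comfyui_root, model_name="inswapper_128.onnx"):
    """Create models/insightface/ if needed and report whether the model is there."""
    model_dir = Path(comfyui_root) / "models" / "insightface"
    model_dir.mkdir(parents=True, exist_ok=True)  # the "create it manually" step
    model_path = model_dir / model_name
    return model_path, model_path.is_file()


# Demo against a throwaway directory standing in for the ComfyUI checkout.
with tempfile.TemporaryDirectory() as fake_root:
    path, present = check_model(fake_root)
    print(path.name, "found" if present else "missing")
```

Against a real installation, the call would be `check_model("/path/to/ComfyUI")`, run before queueing a prompt.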

display.png
ADDED

images/input_images/input1.png
ADDED

images/input_images/input2.jpg
ADDED

images/output_images/output1.png
ADDED

images/output_images/output2.png
ADDED

images/source_face_images/face1.jpg
ADDED

images/source_face_images/face2.jpg
ADDED

inswapper_128.onnx

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:e4a3f08c753cb72d04e10aa0f7dbe3deebbf39567d4ead6dce08e98aa49e16af
+size 554253681
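The three lines added for `inswapper_128.onnx` are a Git LFS pointer, not the model itself: they record the spec version, the SHA-256 of the real file, and its size in bytes. As an illustrative sketch, a downloaded copy of the model could be checked against that pointer as follows. The function names `parse_lfs_pointer` and `matches_pointer` are hypothetical.

```python
import hashlib


def parse_lfs_pointer(text):
    """Parse a Git LFS pointer file into version, hash algorithm, digest and size."""
    fields = dict(line.split(" ", 1) for line in text.splitlines() if line.strip())
    algorithm, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "algorithm": algorithm,
        "digest": digest,
        "size": int(fields["size"]),
    }


def matches_pointer(path, pointer, chunk_size=1 << 20):
    """Check a file on disk against the pointer's size and digest."""
    hasher = hashlib.new(pointer["algorithm"])
    size = 0
    with open(path, "rb") as handle:
        # Read in chunks so a file of several hundred MB is never held in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
            size += len(chunk)
    return size == pointer["size"] and hasher.hexdigest() == pointer["digest"]


pointer = parse_lfs_pointer(
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:e4a3f08c753cb72d04e10aa0f7dbe3deebbf39567d4ead6dce08e98aa49e16af\n"
    "size 554253681\n"
)
print(pointer["algorithm"], pointer["size"])
```

With the model downloaded, `matches_pointer("ComfyUI/models/insightface/inswapper_128.onnx", pointer)` would report whether the file is complete and unmodified.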

node.png

ADDED
