Commit

·

5671ee4

1

Parent(s):

62ca2e7

init

Browse files- README.md +41 -3

- Simulator.ipynb +1071 -0

- Training.ipynb +1433 -0

- img/Solar_Transformer.png +0 -0

- img/output.png +0 -0

- models/model-best.h5 +3 -0

README.md

CHANGED

|

@@ -1,3 +1,41 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Solar Transformer

|

| 2 |

+

|

| 3 |

+

Please check our paper [Solar Irradiance Forecasting with Transformer model

|

| 4 |

+

](https://www.mdpi.com/2076-3417/12/17/8852) for more details.

|

| 5 |

+

|

| 6 |

+

[](https://github.com/markub3327/Solar-Transformer/issues)

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

## Paper

|

| 11 |

+

|

| 12 |

+

* Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Advances in neural information processing systems 2017, 30.

|

| 13 |

+

* Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; Uszkoreit, J. An image is worth 16x16 words: Transformers for image recognition at scale. 2020, arXiv preprint arXiv:2010.11929.

|

| 14 |

+

* Bao, H.; Dong, L.; Wei, F. Beit: Bert pre-training of image transformers. 2021, arXiv preprint arXiv:2106.08254.

|

| 15 |

+

* Brahma, B.; Wadhvani, R. Solar irradiance forecasting based on deep learning methodologies and multi-site data. Sym-metry 2020, 12(11), p.1830. Available online: https://www.mdpi.com/2073-8994/12/11/1830

|

| 16 |

+

|

| 17 |

+

## About

|

| 18 |

+

|

| 19 |

+

Solar energy is one of the most popular sources of renewable energy today. It is therefore essential to be able to predict solar power generation and adapt the energy needs to these predictions. This paper uses Transformer deep neural network model, in which the attention mechanism is typically applied in NLP or vision problems. Here it is extended by combining features based on their spatio-temporal properties in solar irradiance prediction. The results were predicted for arbitrary long-time horizons since the prediction is always 1 day ahead, which can be included at the end along the timestep axis of the input data and the first timestep representing the oldest timestep removed. A maximum worst-case mean absolute percentage error of 3.45% for the 1 day-ahead prediction was achieved, thus providing better results than the directly competing method.

|

| 20 |

+

|

| 21 |

+

## Dataset

|

| 22 |

+

|

| 23 |

+

[NASA POWER Project](https://power.larc.nasa.gov)

|

| 24 |

+

|

| 25 |

+

Solar irradiance + Weather (temperature, humidity, pressure, wind speed, wind direction)

|

| 26 |

+

|

| 27 |

+

## Model

|

| 28 |

+

|

| 29 |

+

<p align="center">

|

| 30 |

+

<img src="img/Solar_Transformer.png">

|

| 31 |

+

</p>

|

| 32 |

+

|

| 33 |

+

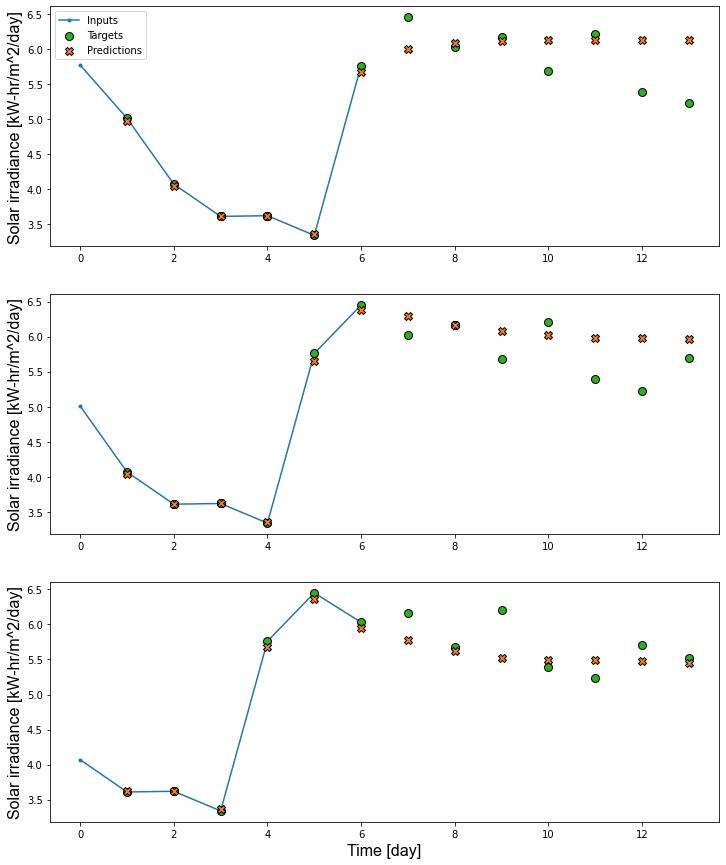

## Results

|

| 34 |

+

|

| 35 |

+

<p align="center">

|

| 36 |

+

<img src="img/output.png">

|

| 37 |

+

</p>

|

| 38 |

+

|

| 39 |

+

----------------------------------

|

| 40 |

+

|

| 41 |

+

**Frameworks:** TensorFlow, NumPy, Pandas, WanDB, Seaborn, Matplotlib

|

Simulator.ipynb

ADDED

|

@@ -0,0 +1,1071 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "code",

|

| 5 |

+

"execution_count": null,

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"outputs": [],

|

| 8 |

+

"source": [

|

| 9 |

+

"!pip install wandb tensorflow_probability tensorflow_addons"

|

| 10 |

+

]

|

| 11 |

+

},

|

| 12 |

+

{

|

| 13 |

+

"cell_type": "code",

|

| 14 |

+

"execution_count": null,

|

| 15 |

+

"metadata": {},

|

| 16 |

+

"outputs": [],

|

| 17 |

+

"source": [

|

| 18 |

+

"from tensorflow.keras.layers import Add, Dense, Dropout, Layer, LayerNormalization, MultiHeadAttention\n",

|

| 19 |

+

"from tensorflow.keras.models import Model\n",

|

| 20 |

+

"from tensorflow.keras.initializers import TruncatedNormal\n",

|

| 21 |

+

"from tensorflow.keras.metrics import MeanSquaredError, RootMeanSquaredError, MeanAbsoluteError, MeanAbsolutePercentageError\n",

|

| 22 |

+

"from tensorflow_addons.metrics import RSquare\n",

|

| 23 |

+

"\n",

|

| 24 |

+

"import pandas as pd\n",

|

| 25 |

+

"import tensorflow as tf\n",

|

| 26 |

+

"import numpy as np\n",

|

| 27 |

+

"import matplotlib.pyplot as plt\n",

|

| 28 |

+

"import seaborn as sns"

|

| 29 |

+

]

|

| 30 |

+

},

|

| 31 |

+

{

|

| 32 |

+

"cell_type": "markdown",

|

| 33 |

+

"metadata": {},

|

| 34 |

+

"source": [

|

| 35 |

+

"## Plotting"

|

| 36 |

+

]

|

| 37 |

+

},

|

| 38 |

+

{

|

| 39 |

+

"cell_type": "code",

|

| 40 |

+

"execution_count": null,

|

| 41 |

+

"metadata": {},

|

| 42 |

+

"outputs": [],

|

| 43 |

+

"source": [

|

| 44 |

+

"def plot_prediction(targets, predictions, max_subplots=3):\n",

|

| 45 |

+

" plt.figure(figsize=(12, 15))\n",

|

| 46 |

+

" max_n = min(max_subplots, len(targets))\n",

|

| 47 |

+

" for n in range(max_n):\n",

|

| 48 |

+

" # input\n",

|

| 49 |

+

" plt.subplot(max_n, 1, n+1)\n",

|

| 50 |

+

" plt.ylabel('Solar irradiance [kW-hr/m^2/day]', fontfamily=\"Arial\", fontsize=16)\n",

|

| 51 |

+

" plt.plot(np.arange(targets.shape[1]-horizon), targets[n, :-horizon, 0, -1], label='Inputs', marker='.', zorder=-10)\n",

|

| 52 |

+

"\n",

|

| 53 |

+

" # real\n",

|

| 54 |

+

" plt.scatter(np.arange(1, targets.shape[1]), targets[n, 1:, 0, -1], edgecolors='k', label='Targets', c='#2cb01d', s=64)\n",

|

| 55 |

+

" \n",

|

| 56 |

+

" # predicted\n",

|

| 57 |

+

" plt.scatter(np.arange(1, targets.shape[1]), predictions[n, :, 0, -1], marker='X', edgecolors='k', label='Predictions', c='#fe7e0f', s=64)\n",

|

| 58 |

+

"\n",

|

| 59 |

+

" if n == 0:\n",

|

| 60 |

+

" plt.legend()\n",

|

| 61 |

+

"\n",

|

| 62 |

+

" plt.xlabel('Time [day]', fontfamily=\"Arial\", fontsize=16)\n",

|

| 63 |

+

" plt.show()"

|

| 64 |

+

]

|

| 65 |

+

},

|

| 66 |

+

{

|

| 67 |

+

"cell_type": "code",

|

| 68 |

+

"execution_count": null,

|

| 69 |

+

"metadata": {},

|

| 70 |

+

"outputs": [],

|

| 71 |

+

"source": [

|

| 72 |

+

"def patch_similarity_plot(pos):\n",

|

| 73 |

+

" similarity_scores = np.dot(\n",

|

| 74 |

+

" pos, np.transpose(pos)\n",

|

| 75 |

+

" ) / (\n",

|

| 76 |

+

" np.linalg.norm(pos, axis=-1)\n",

|

| 77 |

+

" * np.linalg.norm(pos, axis=-1)\n",

|

| 78 |

+

" )\n",

|

| 79 |

+

"\n",

|

| 80 |

+

" plt.figure(figsize=(7, 7), dpi=300)\n",

|

| 81 |

+

" ax = sns.heatmap(similarity_scores, center=0)\n",

|

| 82 |

+

" ax.set_title(\"Spatial Positional Embedding\", fontfamily=\"Arial\", fontsize=16)\n",

|

| 83 |

+

" ax.set_xlabel(\"Patch\", fontfamily=\"Arial\", fontsize=16)\n",

|

| 84 |

+

" ax.set_ylabel(\"Patch\", fontfamily=\"Arial\", fontsize=16)\n",

|

| 85 |

+

" plt.show()\n",

|

| 86 |

+

"\n",

|

| 87 |

+

"def timestep_similarity_plot(pos):\n",

|

| 88 |

+

" similarity_scores = np.dot(\n",

|

| 89 |

+

" pos, np.transpose(pos)\n",

|

| 90 |

+

" ) / (\n",

|

| 91 |

+

" np.linalg.norm(pos, axis=-1)\n",

|

| 92 |

+

" * np.linalg.norm(pos, axis=-1)\n",

|

| 93 |

+

" )\n",

|

| 94 |

+

"\n",

|

| 95 |

+

" plt.figure(figsize=(7, 7), dpi=300)\n",

|

| 96 |

+

" ax = sns.heatmap(similarity_scores, center=0)\n",

|

| 97 |

+

" ax.set_title(\"Temporal Positional Embedding\", fontfamily=\"Arial\", fontsize=16)\n",

|

| 98 |

+

" ax.set_xlabel(\"Timestep\", fontfamily=\"Arial\", fontsize=16)\n",

|

| 99 |

+

" ax.set_ylabel(\"Timestep\", fontfamily=\"Arial\", fontsize=16)\n",

|

| 100 |

+

" plt.show()"

|

| 101 |

+

]

|

| 102 |

+

},

|

| 103 |

+

{

|

| 104 |

+

"cell_type": "markdown",

|

| 105 |

+

"metadata": {},

|

| 106 |

+

"source": [

|

| 107 |

+

"## Layer"

|

| 108 |

+

]

|

| 109 |

+

},

|

| 110 |

+

{

|

| 111 |

+

"cell_type": "code",

|

| 112 |

+

"execution_count": null,

|

| 113 |

+

"metadata": {},

|

| 114 |

+

"outputs": [],

|

| 115 |

+

"source": [

|

| 116 |

+

"class Normalization(tf.keras.layers.experimental.preprocessing.PreprocessingLayer):\n",

|

| 117 |

+

" \"\"\"A preprocessing layer which normalizes continuous features.\n",

|

| 118 |

+

" This layer will shift and scale inputs into a distribution centered around\n",

|

| 119 |

+

" 0 with standard deviation 1. It accomplishes this by precomputing the mean\n",

|

| 120 |

+

" and variance of the data, and calling `(input - mean) / sqrt(var)` at\n",

|

| 121 |

+

" runtime.\n",

|

| 122 |

+

" The mean and variance values for the layer must be either supplied on\n",

|

| 123 |

+

" construction or learned via `adapt()`. `adapt()` will compute the mean and\n",

|

| 124 |

+

" variance of the data and store them as the layer's weights. `adapt()` should\n",

|

| 125 |

+

" be called before `fit()`, `evaluate()`, or `predict()`.\n",

|

| 126 |

+

" For an overview and full list of preprocessing layers, see the preprocessing\n",

|

| 127 |

+

" [guide](https://www.tensorflow.org/guide/keras/preprocessing_layers).\n",

|

| 128 |

+

" Args:\n",

|

| 129 |

+

" axis: Integer, tuple of integers, or None. The axis or axes that should\n",

|

| 130 |

+

" have a separate mean and variance for each index in the shape. For\n",

|

| 131 |

+

" example, if shape is `(None, 5)` and `axis=1`, the layer will track 5\n",

|

| 132 |

+

" separate mean and variance values for the last axis. If `axis` is set\n",

|

| 133 |

+

" to `None`, the layer will normalize all elements in the input by a\n",

|

| 134 |

+

" scalar mean and variance. Defaults to -1, where the last axis of the\n",

|

| 135 |

+

" input is assumed to be a feature dimension and is normalized per\n",

|

| 136 |

+

" index. Note that in the specific case of batched scalar inputs where\n",

|

| 137 |

+

" the only axis is the batch axis, the default will normalize each index\n",

|

| 138 |

+

" in the batch separately. In this case, consider passing `axis=None`.\n",

|

| 139 |

+

" mean: The mean value(s) to use during normalization. The passed value(s)\n",

|

| 140 |

+

" will be broadcast to the shape of the kept axes above; if the value(s)\n",

|

| 141 |

+

" cannot be broadcast, an error will be raised when this layer's\n",

|

| 142 |

+

" `build()` method is called.\n",

|

| 143 |

+

" variance: The variance value(s) to use during normalization. The passed\n",

|

| 144 |

+

" value(s) will be broadcast to the shape of the kept axes above; if the\n",

|

| 145 |

+

" value(s) cannot be broadcast, an error will be raised when this\n",

|

| 146 |

+

" layer's `build()` method is called.\n",

|

| 147 |

+

" invert: If True, this layer will apply the inverse transformation\n",

|

| 148 |

+

" to its inputs: it would turn a normalized input back into its\n",

|

| 149 |

+

" original form.\n",

|

| 150 |

+

" Examples:\n",

|

| 151 |

+

" Calculate a global mean and variance by analyzing the dataset in `adapt()`.\n",

|

| 152 |

+

" >>> adapt_data = np.array([1., 2., 3., 4., 5.], dtype='float32')\n",

|

| 153 |

+

" >>> input_data = np.array([1., 2., 3.], dtype='float32')\n",

|

| 154 |

+

" >>> layer = tf.keras.layers.Normalization(axis=None)\n",

|

| 155 |

+

" >>> layer.adapt(adapt_data)\n",

|

| 156 |

+

" >>> layer(input_data)\n",

|

| 157 |

+

" <tf.Tensor: shape=(3,), dtype=float32, numpy=\n",

|

| 158 |

+

" array([-1.4142135, -0.70710677, 0.], dtype=float32)>\n",

|

| 159 |

+

" Calculate a mean and variance for each index on the last axis.\n",

|

| 160 |

+

" >>> adapt_data = np.array([[0., 7., 4.],\n",

|

| 161 |

+

" ... [2., 9., 6.],\n",

|

| 162 |

+

" ... [0., 7., 4.],\n",

|

| 163 |

+

" ... [2., 9., 6.]], dtype='float32')\n",

|

| 164 |

+

" >>> input_data = np.array([[0., 7., 4.]], dtype='float32')\n",

|

| 165 |

+

" >>> layer = tf.keras.layers.Normalization(axis=-1)\n",

|

| 166 |

+

" >>> layer.adapt(adapt_data)\n",

|

| 167 |

+

" >>> layer(input_data)\n",

|

| 168 |

+

" <tf.Tensor: shape=(1, 3), dtype=float32, numpy=\n",

|

| 169 |

+

" array([-1., -1., -1.], dtype=float32)>\n",

|

| 170 |

+

" Pass the mean and variance directly.\n",

|

| 171 |

+

" >>> input_data = np.array([[1.], [2.], [3.]], dtype='float32')\n",

|

| 172 |

+

" >>> layer = tf.keras.layers.Normalization(mean=3., variance=2.)\n",

|

| 173 |

+

" >>> layer(input_data)\n",

|

| 174 |

+

" <tf.Tensor: shape=(3, 1), dtype=float32, numpy=\n",

|

| 175 |

+

" array([[-1.4142135 ],\n",

|

| 176 |

+

" [-0.70710677],\n",

|

| 177 |

+

" [ 0. ]], dtype=float32)>\n",

|

| 178 |

+

" Use the layer to de-normalize inputs (after adapting the layer).\n",

|

| 179 |

+

" >>> adapt_data = np.array([[0., 7., 4.],\n",

|

| 180 |

+

" ... [2., 9., 6.],\n",

|

| 181 |

+

" ... [0., 7., 4.],\n",

|

| 182 |

+

" ... [2., 9., 6.]], dtype='float32')\n",

|

| 183 |

+

" >>> input_data = np.array([[1., 2., 3.]], dtype='float32')\n",

|

| 184 |

+

" >>> layer = tf.keras.layers.Normalization(axis=-1, invert=True)\n",

|

| 185 |

+

" >>> layer.adapt(adapt_data)\n",

|

| 186 |

+

" >>> layer(input_data)\n",

|

| 187 |

+

" <tf.Tensor: shape=(1, 3), dtype=float32, numpy=\n",

|

| 188 |

+

" array([2., 10., 8.], dtype=float32)>\n",

|

| 189 |

+

" \"\"\"\n",

|

| 190 |

+

"\n",

|

| 191 |

+

" def __init__(\n",

|

| 192 |

+

" self, axis=-1, mean=None, variance=None, invert=False, **kwargs\n",

|

| 193 |

+

" ):\n",

|

| 194 |

+

" super().__init__(**kwargs)\n",

|

| 195 |

+

"\n",

|

| 196 |

+

" # Standardize `axis` to a tuple.\n",

|

| 197 |

+

" if axis is None:\n",

|

| 198 |

+

" axis = ()\n",

|

| 199 |

+

" elif isinstance(axis, int):\n",

|

| 200 |

+

" axis = (axis,)\n",

|

| 201 |

+

" else:\n",

|

| 202 |

+

" axis = tuple(axis)\n",

|

| 203 |

+

" self.axis = axis\n",

|

| 204 |

+

"\n",

|

| 205 |

+

" # Set `mean` and `variance` if passed.\n",

|

| 206 |

+

" if isinstance(mean, tf.Variable):\n",

|

| 207 |

+

" raise ValueError(\n",

|

| 208 |

+

" \"Normalization does not support passing a Variable \"\n",

|

| 209 |

+

" \"for the `mean` init arg.\"\n",

|

| 210 |

+

" )\n",

|

| 211 |

+

" if isinstance(variance, tf.Variable):\n",

|

| 212 |

+

" raise ValueError(\n",

|

| 213 |

+

" \"Normalization does not support passing a Variable \"\n",

|

| 214 |

+

" \"for the `variance` init arg.\"\n",

|

| 215 |

+

" )\n",

|

| 216 |

+

" if (mean is not None) != (variance is not None):\n",

|

| 217 |

+

" raise ValueError(\n",

|

| 218 |

+

" \"When setting values directly, both `mean` and `variance` \"\n",

|

| 219 |

+

" \"must be set. Got mean: {} and variance: {}\".format(\n",

|

| 220 |

+

" mean, variance\n",

|

| 221 |

+

" )\n",

|

| 222 |

+

" )\n",

|

| 223 |

+

" self.input_mean = mean\n",

|

| 224 |

+

" self.input_variance = variance\n",

|

| 225 |

+

" self.invert = invert\n",

|

| 226 |

+

"\n",

|

| 227 |

+

" def build(self, input_shape):\n",

|

| 228 |

+

" super().build(input_shape)\n",

|

| 229 |

+

"\n",

|

| 230 |

+

" if isinstance(input_shape, (list, tuple)) and all(\n",

|

| 231 |

+

" isinstance(shape, tf.TensorShape) for shape in input_shape\n",

|

| 232 |

+

" ):\n",

|

| 233 |

+

" raise ValueError(\n",

|

| 234 |

+

" \"Normalization only accepts a single input. If you are \"\n",

|

| 235 |

+

" \"passing a python list or tuple as a single input, \"\n",

|

| 236 |

+

" \"please convert to a numpy array or `tf.Tensor`.\"\n",

|

| 237 |

+

" )\n",

|

| 238 |

+

"\n",

|

| 239 |

+

" input_shape = tf.TensorShape(input_shape).as_list()\n",

|

| 240 |

+

" ndim = len(input_shape)\n",

|

| 241 |

+

"\n",

|

| 242 |

+

" if any(a < -ndim or a >= ndim for a in self.axis):\n",

|

| 243 |

+

" raise ValueError(\n",

|

| 244 |

+

" \"All `axis` values must be in the range [-ndim, ndim). \"\n",

|

| 245 |

+

" \"Found ndim: `{}`, axis: {}\".format(ndim, self.axis)\n",

|

| 246 |

+

" )\n",

|

| 247 |

+

"\n",

|

| 248 |

+

" # Axes to be kept, replacing negative values with positive equivalents.\n",

|

| 249 |

+

" # Sorted to avoid transposing axes.\n",

|

| 250 |

+

" self._keep_axis = sorted([d if d >= 0 else d + ndim for d in self.axis])\n",

|

| 251 |

+

" # All axes to be kept should have known shape.\n",

|

| 252 |

+

" for d in self._keep_axis:\n",

|

| 253 |

+

" if input_shape[d] is None:\n",

|

| 254 |

+

" raise ValueError(\n",

|

| 255 |

+

" \"All `axis` values to be kept must have known shape. \"\n",

|

| 256 |

+

" \"Got axis: {}, \"\n",

|

| 257 |

+

" \"input shape: {}, with unknown axis at index: {}\".format(\n",

|

| 258 |

+

" self.axis, input_shape, d\n",

|

| 259 |

+

" )\n",

|

| 260 |

+

" )\n",

|

| 261 |

+

" # Axes to be reduced.\n",

|

| 262 |

+

" self._reduce_axis = [d for d in range(ndim) if d not in self._keep_axis]\n",

|

| 263 |

+

" # 1 if an axis should be reduced, 0 otherwise.\n",

|

| 264 |

+

" self._reduce_axis_mask = [\n",

|

| 265 |

+

" 0 if d in self._keep_axis else 1 for d in range(ndim)\n",

|

| 266 |

+

" ]\n",

|

| 267 |

+

" # Broadcast any reduced axes.\n",

|

| 268 |

+

" self._broadcast_shape = [\n",

|

| 269 |

+

" input_shape[d] if d in self._keep_axis else 1 for d in range(ndim)\n",

|

| 270 |

+

" ]\n",

|

| 271 |

+

" mean_and_var_shape = tuple(input_shape[d] for d in self._keep_axis)\n",

|

| 272 |

+

"\n",

|

| 273 |

+

" if self.input_mean is None:\n",

|

| 274 |

+

" self.adapt_mean = self.add_weight(\n",

|

| 275 |

+

" name=\"mean\",\n",

|

| 276 |

+

" shape=mean_and_var_shape,\n",

|

| 277 |

+

" dtype=self.compute_dtype,\n",

|

| 278 |

+

" initializer=\"zeros\",\n",

|

| 279 |

+

" trainable=False,\n",

|

| 280 |

+

" )\n",

|

| 281 |

+

" self.adapt_variance = self.add_weight(\n",

|

| 282 |

+

" name=\"variance\",\n",

|

| 283 |

+

" shape=mean_and_var_shape,\n",

|

| 284 |

+

" dtype=self.compute_dtype,\n",

|

| 285 |

+

" initializer=\"ones\",\n",

|

| 286 |

+

" trainable=False,\n",

|

| 287 |

+

" )\n",

|

| 288 |

+

" self.count = self.add_weight(\n",

|

| 289 |

+

" name=\"count\",\n",

|

| 290 |

+

" shape=(),\n",

|

| 291 |

+

" dtype=tf.int64,\n",

|

| 292 |

+

" initializer=\"zeros\",\n",

|

| 293 |

+

" trainable=False,\n",

|

| 294 |

+

" )\n",

|

| 295 |

+

" self.finalize_state()\n",

|

| 296 |

+

" else:\n",

|

| 297 |

+

" # In the no adapt case, make constant tensors for mean and variance\n",

|

| 298 |

+

" # with proper broadcast shape for use during call.\n",

|

| 299 |

+

" mean = self.input_mean * np.ones(mean_and_var_shape)\n",

|

| 300 |

+

" variance = self.input_variance * np.ones(mean_and_var_shape)\n",

|

| 301 |

+

" mean = tf.reshape(mean, self._broadcast_shape)\n",

|

| 302 |

+

" variance = tf.reshape(variance, self._broadcast_shape)\n",

|

| 303 |

+

" self.mean = tf.cast(mean, self.compute_dtype)\n",

|

| 304 |

+

" self.variance = tf.cast(variance, self.compute_dtype)\n",

|

| 305 |

+

"\n",

|

| 306 |

+

" # We override this method solely to generate a docstring.\n",

|

| 307 |

+

" def adapt(self, data, batch_size=None, steps=None):\n",

|

| 308 |

+

" \"\"\"Computes the mean and variance of values in a dataset.\n",

|

| 309 |

+

" Calling `adapt()` on a `Normalization` layer is an alternative to\n",

|

| 310 |

+

" passing in `mean` and `variance` arguments during layer construction. A\n",

|

| 311 |

+

" `Normalization` layer should always either be adapted over a dataset or\n",

|

| 312 |

+

" passed `mean` and `variance`.\n",

|

| 313 |

+

" During `adapt()`, the layer will compute a `mean` and `variance`\n",

|

| 314 |

+

" separately for each position in each axis specified by the `axis`\n",

|

| 315 |

+

" argument. To calculate a single `mean` and `variance` over the input\n",

|

| 316 |

+

" data, simply pass `axis=None`.\n",

|

| 317 |

+

" In order to make `Normalization` efficient in any distribution context,\n",

|

| 318 |

+

" the computed mean and variance are kept static with respect to any\n",

|

| 319 |

+

" compiled `tf.Graph`s that call the layer. As a consequence, if the layer\n",

|

| 320 |

+

" is adapted a second time, any models using the layer should be\n",

|

| 321 |

+

" re-compiled. For more information see\n",

|

| 322 |

+

" `tf.keras.layers.experimental.preprocessing.PreprocessingLayer.adapt`.\n",

|

| 323 |

+

" `adapt()` is meant only as a single machine utility to compute layer\n",

|

| 324 |

+

" state. To analyze a dataset that cannot fit on a single machine, see\n",

|

| 325 |

+

" [Tensorflow Transform](\n",

|

| 326 |

+

" https://www.tensorflow.org/tfx/transform/get_started)\n",

|

| 327 |

+

" for a multi-machine, map-reduce solution.\n",

|

| 328 |

+

" Arguments:\n",

|

| 329 |

+

" data: The data to train on. It can be passed either as a\n",

|

| 330 |

+

" `tf.data.Dataset`, or as a numpy array.\n",

|

| 331 |

+

" batch_size: Integer or `None`.\n",

|

| 332 |

+

" Number of samples per state update.\n",

|

| 333 |

+

" If unspecified, `batch_size` will default to 32.\n",

|

| 334 |

+

" Do not specify the `batch_size` if your data is in the\n",

|

| 335 |

+

" form of datasets, generators, or `keras.utils.Sequence` instances\n",

|

| 336 |

+

" (since they generate batches).\n",

|

| 337 |

+

" steps: Integer or `None`.\n",

|

| 338 |

+

" Total number of steps (batches of samples)\n",

|

| 339 |

+

" When training with input tensors such as\n",

|

| 340 |

+

" TensorFlow data tensors, the default `None` is equal to\n",

|

| 341 |

+

" the number of samples in your dataset divided by\n",

|

| 342 |

+

" the batch size, or 1 if that cannot be determined. If x is a\n",

|

| 343 |

+

" `tf.data` dataset, and 'steps' is None, the epoch will run until\n",

|

| 344 |

+

" the input dataset is exhausted. When passing an infinitely\n",

|

| 345 |

+

" repeating dataset, you must specify the `steps` argument. This\n",

|

| 346 |

+

" argument is not supported with array inputs.\n",

|

| 347 |

+

" \"\"\"\n",

|

| 348 |

+

" super().adapt(data, batch_size=batch_size, steps=steps)\n",

|

| 349 |

+

"\n",

|

| 350 |

+

" def update_state(self, data):\n",

|

| 351 |

+

" if self.input_mean is not None:\n",

|

| 352 |

+

" raise ValueError(\n",

|

| 353 |

+

" \"Cannot `adapt` a Normalization layer that is initialized with \"\n",

|

| 354 |

+

" \"static `mean` and `variance`, \"\n",

|

| 355 |

+

" \"you passed mean {} and variance {}.\".format(\n",

|

| 356 |

+

" self.input_mean, self.input_variance\n",

|

| 357 |

+

" )\n",

|

| 358 |

+

" )\n",

|

| 359 |

+

"\n",

|

| 360 |

+

" if not self.built:\n",

|

| 361 |

+

" raise RuntimeError(\"`build` must be called before `update_state`.\")\n",

|

| 362 |

+

"\n",

|

| 363 |

+

" data = self._standardize_inputs(data)\n",

|

| 364 |

+

" data = tf.cast(data, self.adapt_mean.dtype)\n",

|

| 365 |

+

" batch_mean, batch_variance = tf.nn.moments(data, axes=self._reduce_axis)\n",

|

| 366 |

+

" batch_shape = tf.shape(data, out_type=self.count.dtype)\n",

|

| 367 |

+

" if self._reduce_axis:\n",

|

| 368 |

+

" batch_reduce_shape = tf.gather(batch_shape, self._reduce_axis)\n",

|

| 369 |

+

" batch_count = tf.reduce_prod(batch_reduce_shape)\n",

|

| 370 |

+

" else:\n",

|

| 371 |

+

" batch_count = 1\n",

|

| 372 |

+

"\n",

|

| 373 |

+

" total_count = batch_count + self.count\n",

|

| 374 |

+

" batch_weight = tf.cast(batch_count, dtype=self.compute_dtype) / tf.cast(\n",

|

| 375 |

+

" total_count, dtype=self.compute_dtype\n",

|

| 376 |

+

" )\n",

|

| 377 |

+

" existing_weight = 1.0 - batch_weight\n",

|

| 378 |

+

"\n",

|

| 379 |

+

" total_mean = (\n",

|

| 380 |

+

" self.adapt_mean * existing_weight + batch_mean * batch_weight\n",

|

| 381 |

+

" )\n",

|

| 382 |

+

" # The variance is computed using the lack-of-fit sum of squares\n",

|

| 383 |

+

" # formula (see\n",

|

| 384 |

+

" # https://en.wikipedia.org/wiki/Lack-of-fit_sum_of_squares).\n",

|

| 385 |

+

" total_variance = (\n",

|

| 386 |

+

" self.adapt_variance + (self.adapt_mean - total_mean) ** 2\n",

|

| 387 |

+

" ) * existing_weight + (\n",

|

| 388 |

+

" batch_variance + (batch_mean - total_mean) ** 2\n",

|

| 389 |

+

" ) * batch_weight\n",

|

| 390 |

+

" self.adapt_mean.assign(total_mean)\n",

|

| 391 |

+

" self.adapt_variance.assign(total_variance)\n",

|

| 392 |

+

" self.count.assign(total_count)\n",

|

| 393 |

+

"\n",

|

| 394 |

+

" def reset_state(self):\n",

|

| 395 |

+

" if self.input_mean is not None or not self.built:\n",

|

| 396 |

+

" return\n",

|

| 397 |

+

"\n",

|

| 398 |

+

" self.adapt_mean.assign(tf.zeros_like(self.adapt_mean))\n",

|

| 399 |

+

" self.adapt_variance.assign(tf.ones_like(self.adapt_variance))\n",

|

| 400 |

+

" self.count.assign(tf.zeros_like(self.count))\n",

|

| 401 |

+

"\n",

|

| 402 |

+

" def finalize_state(self):\n",

|

| 403 |

+

" if self.input_mean is not None or not self.built:\n",

|

| 404 |

+

" return\n",

|

| 405 |

+

"\n",

|

| 406 |

+

" # In the adapt case, we make constant tensors for mean and variance with\n",

|

| 407 |

+

" # proper broadcast shape and dtype each time `finalize_state` is called.\n",

|

| 408 |

+

" self.mean = tf.reshape(self.adapt_mean, self._broadcast_shape)\n",

|

| 409 |

+

" self.mean = tf.cast(self.mean, self.compute_dtype)\n",

|

| 410 |

+

" self.variance = tf.reshape(self.adapt_variance, self._broadcast_shape)\n",

|

| 411 |

+

" self.variance = tf.cast(self.variance, self.compute_dtype)\n",

|

| 412 |

+

"\n",

|

| 413 |

+

" def call(self, inputs):\n",

|

| 414 |

+

" inputs = self._standardize_inputs(inputs)\n",

|

| 415 |

+

" # The base layer automatically casts floating-point inputs, but we\n",

|

| 416 |

+

" # explicitly cast here to also allow integer inputs to be passed\n",

|

| 417 |

+

" inputs = tf.cast(inputs, self.compute_dtype)\n",

|

| 418 |

+

" if self.invert:\n",

|

| 419 |

+

" return (inputs + self.mean) * tf.maximum(\n",

|

| 420 |

+

" tf.sqrt(self.variance), tf.keras.backend.epsilon()\n",

|

| 421 |

+

" )\n",

|

| 422 |

+

" else:\n",

|

| 423 |

+

" return (inputs - self.mean) / tf.maximum(\n",

|

| 424 |

+

" tf.sqrt(self.variance), tf.keras.backend.epsilon()\n",

|

| 425 |

+

" )\n",

|

| 426 |

+

"\n",

|

| 427 |

+

" def compute_output_shape(self, input_shape):\n",

|

| 428 |

+

" return input_shape\n",

|

| 429 |

+

"\n",

|

| 430 |

+

" def compute_output_signature(self, input_spec):\n",

|

| 431 |

+

" return input_spec\n",

|

| 432 |

+

"\n",

|

| 433 |

+

" def get_config(self):\n",

|

| 434 |

+

" config = super().get_config()\n",

|

| 435 |

+

" config.update(\n",

|

| 436 |

+

" {\n",

|

| 437 |

+

" \"axis\": self.axis,\n",

|

| 438 |

+

" \"mean\": tf.keras.layers.experimental.preprocessing.preprocessing_utils.utils.listify_tensors(self.input_mean),\n",

|

| 439 |

+

" \"variance\": tf.keras.layers.experimental.preprocessing.preprocessing_utils.utils.listify_tensors(self.input_variance),\n",

|

| 440 |

+

" }\n",

|

| 441 |

+

" )\n",

|

| 442 |

+

" return config\n",

|

| 443 |

+

"\n",

|

| 444 |

+

" def _standardize_inputs(self, inputs):\n",

|

| 445 |

+

" inputs = tf.convert_to_tensor(inputs)\n",

|

| 446 |

+

" if inputs.dtype != self.compute_dtype:\n",

|

| 447 |

+

" inputs = tf.cast(inputs, self.compute_dtype)\n",

|

| 448 |

+

" return inputs"

|

| 449 |

+

]

|

| 450 |

+

},

|

| 451 |

+

{

|

| 452 |

+

"cell_type": "code",

|

| 453 |

+

"execution_count": null,

|

| 454 |

+

"metadata": {},

|

| 455 |

+

"outputs": [],

|

| 456 |

+

"source": [

|

| 457 |

+

"class PositionalEmbedding(Layer):\n",

|

| 458 |

+

" def __init__(self, units, dropout_rate, **kwargs):\n",

|

| 459 |

+

" super(PositionalEmbedding, self).__init__(**kwargs)\n",

|

| 460 |

+

"\n",

|

| 461 |

+

" self.units = units\n",

|

| 462 |

+

"\n",

|

| 463 |

+

" self.projection = Dense(units, kernel_initializer=TruncatedNormal(stddev=0.02))\n",

|

| 464 |

+

" self.dropout = Dropout(rate=dropout_rate)\n",

|

| 465 |

+

"\n",

|

| 466 |

+

" def build(self, input_shape):\n",

|

| 467 |

+

" super(PositionalEmbedding, self).build(input_shape)\n",

|

| 468 |

+

"\n",

|

| 469 |

+

" print(\"pos_embbeding: \", input_shape)\n",

|

| 470 |

+

" self.temporal_position = self.add_weight(\n",

|

| 471 |

+

" name=\"temporal_position\",\n",

|

| 472 |

+

" shape=(1, input_shape[1], 1, self.units),\n",

|

| 473 |

+

" initializer=TruncatedNormal(stddev=0.02),\n",

|

| 474 |

+

" trainable=True,\n",

|

| 475 |

+

" )\n",

|

| 476 |

+

" self.spatial_position = self.add_weight(\n",

|

| 477 |

+

" name=\"spatial_position\",\n",

|

| 478 |

+

" shape=(1, 1, input_shape[2], self.units),\n",

|

| 479 |

+

" initializer=TruncatedNormal(stddev=0.02),\n",

|

| 480 |

+

" trainable=True,\n",

|

| 481 |

+

" )\n",

|

| 482 |

+

"\n",

|

| 483 |

+

" def call(self, inputs, training):\n",

|

| 484 |

+

" x = self.projection(inputs)\n",

|

| 485 |

+

" x += self.temporal_position\n",

|

| 486 |

+

" x += self.spatial_position\n",

|

| 487 |

+

"\n",

|

| 488 |

+

" return self.dropout(x, training=training)"

|

| 489 |

+

]

|

| 490 |

+

},

|

| 491 |

+

{

|

| 492 |

+

"cell_type": "code",

|

| 493 |

+

"execution_count": null,

|

| 494 |

+

"metadata": {},

|

| 495 |

+

"outputs": [],

|

| 496 |

+

"source": [

|

| 497 |

+

"class Encoder(Layer):\n",

|

| 498 |

+

" def __init__(\n",

|

| 499 |

+

" self, embed_dim, mlp_dim, num_heads, dropout_rate, attention_dropout_rate, **kwargs\n",

|

| 500 |

+

" ):\n",

|

| 501 |

+

" super(Encoder, self).__init__(**kwargs)\n",

|

| 502 |

+

"\n",

|

| 503 |

+

" # Multi-head Attention\n",

|

| 504 |

+

" self.mha = MultiHeadAttention(\n",

|

| 505 |

+

" num_heads=num_heads,\n",

|

| 506 |

+

" key_dim=embed_dim,\n",

|

| 507 |

+

" dropout=attention_dropout_rate,\n",

|

| 508 |

+

" kernel_initializer=TruncatedNormal(stddev=0.02),\n",

|

| 509 |

+

" attention_axes=(1, 2), # 2D attention (timestep, patch)\n",

|

| 510 |

+

" )\n",

|

| 511 |

+

"\n",

|

| 512 |

+

" # Point wise feed forward network\n",

|

| 513 |

+

" self.dense_0 = Dense(\n",

|

| 514 |

+

" units=mlp_dim,\n",

|

| 515 |

+

" activation=\"gelu\",\n",

|

| 516 |

+

" kernel_initializer=TruncatedNormal(stddev=0.02),\n",

|

| 517 |

+

" )\n",

|

| 518 |

+

" self.dense_1 = Dense(\n",

|

| 519 |

+

" units=embed_dim, kernel_initializer=TruncatedNormal(stddev=0.02)\n",

|

| 520 |

+

" )\n",

|

| 521 |

+

"\n",

|

| 522 |

+

" self.dropout_0 = Dropout(rate=dropout_rate)\n",

|

| 523 |

+

" self.dropout_1 = Dropout(rate=dropout_rate)\n",

|

| 524 |

+

"\n",

|

| 525 |

+

" self.norm_0 = LayerNormalization(epsilon=1e-12)\n",

|

| 526 |

+

" self.norm_1 = LayerNormalization(epsilon=1e-12)\n",

|

| 527 |

+

"\n",

|

| 528 |

+

" self.add_0 = Add()\n",

|

| 529 |

+

" self.add_1 = Add()\n",

|

| 530 |

+

"\n",

|

| 531 |

+

" def call(self, inputs, training):\n",

|

| 532 |

+

" # Attention block\n",

|

| 533 |

+

" x = self.norm_0(inputs)\n",

|

| 534 |

+

" x = self.mha(\n",

|

| 535 |

+

" query=x,\n",

|

| 536 |

+

" key=x,\n",

|

| 537 |

+

" value=x,\n",

|

| 538 |

+

" training=training,\n",

|

| 539 |

+

" )\n",

|

| 540 |

+

" x = self.dropout_0(x, training=training)\n",

|

| 541 |

+

" x = self.add_0([x, inputs])\n",

|

| 542 |

+

"\n",

|

| 543 |

+

" # MLP block\n",

|

| 544 |

+

" y = self.norm_1(x)\n",

|

| 545 |

+

" y = self.dense_0(y)\n",

|

| 546 |

+

" y = self.dense_1(y)\n",

|

| 547 |

+

" y = self.dropout_1(y, training=training)\n",

|

| 548 |

+

"\n",

|

| 549 |

+

" return self.add_1([x, y])"

|

| 550 |

+

]

|

| 551 |

+

},

|

| 552 |

+

{

|

| 553 |

+

"cell_type": "code",

|

| 554 |

+

"execution_count": null,

|

| 555 |

+

"metadata": {},

|

| 556 |

+

"outputs": [],

|

| 557 |

+

"source": [

|

| 558 |

+

"class Decoder(Layer):\n",

|

| 559 |

+

" def __init__(\n",

|

| 560 |

+

" self, embed_dim, mlp_dim, num_heads, dropout_rate, attention_dropout_rate, **kwargs\n",

|

| 561 |

+

" ):\n",

|

| 562 |

+

" super(Decoder, self).__init__(**kwargs)\n",

|

| 563 |

+

"\n",

|

| 564 |

+

" # MultiHeadAttention\n",

|

| 565 |

+

" self.mha_0 = MultiHeadAttention(\n",

|

| 566 |

+

" num_heads=num_heads,\n",

|

| 567 |

+

" key_dim=embed_dim,\n",

|

| 568 |

+

" dropout=attention_dropout_rate,\n",

|

| 569 |

+

" kernel_initializer=TruncatedNormal(stddev=0.02),\n",

|

| 570 |

+

" attention_axes=(1, 2), # 2D attention (timestep, patch)\n",

|

| 571 |

+

" )\n",

|

| 572 |

+

" self.mha_1 = MultiHeadAttention(\n",

|

| 573 |

+

" num_heads=num_heads,\n",

|

| 574 |

+

" key_dim=embed_dim,\n",

|

| 575 |

+

" dropout=attention_dropout_rate,\n",

|

| 576 |

+

" kernel_initializer=TruncatedNormal(stddev=0.02),\n",

|

| 577 |

+

" attention_axes=(1, 2), # 2D attention (timestep, patch)\n",

|

| 578 |

+

" )\n",

|

| 579 |

+

"\n",

|

| 580 |

+

" # Point wise feed forward network\n",

|

| 581 |

+

" self.dense_0 = Dense(\n",

|

| 582 |

+

" units=mlp_dim,\n",

|

| 583 |

+

" activation=\"gelu\",\n",

|

| 584 |

+

" kernel_initializer=TruncatedNormal(stddev=0.02),\n",

|

| 585 |

+

" )\n",

|

| 586 |

+

" self.dense_1 = Dense(\n",

|

| 587 |

+

" units=embed_dim, kernel_initializer=TruncatedNormal(stddev=0.02)\n",

|

| 588 |

+

" )\n",

|

| 589 |

+

"\n",

|

| 590 |

+

" self.dropout_0 = Dropout(rate=dropout_rate)\n",

|

| 591 |

+

" self.dropout_1 = Dropout(rate=dropout_rate)\n",

|

| 592 |

+

" self.dropout_2 = Dropout(rate=dropout_rate)\n",

|

| 593 |

+

"\n",

|

| 594 |

+

" self.norm_0 = LayerNormalization(epsilon=1e-12)\n",

|

| 595 |

+

" self.norm_1 = LayerNormalization(epsilon=1e-12)\n",

|

| 596 |

+

" self.norm_2 = LayerNormalization(epsilon=1e-12)\n",

|

| 597 |

+

"\n",

|

| 598 |

+

" self.add_0 = Add()\n",

|

| 599 |

+

" self.add_1 = Add()\n",

|

| 600 |

+

" self.add_2 = Add()\n",

|

| 601 |

+

"\n",

|

| 602 |

+

" def call(self, inputs, enc_output, training):\n",

|

| 603 |

+

" # Attention block\n",

|

| 604 |

+

" x = self.norm_0(inputs)\n",

|

| 605 |

+

" x = self.mha_0(\n",

|

| 606 |

+

" query=x,\n",

|

| 607 |

+

" key=x,\n",

|

| 608 |

+

" value=x,\n",

|

| 609 |

+

" training=training,\n",

|

| 610 |

+

" )\n",

|

| 611 |

+

" x = self.dropout_0(x, training=training)\n",

|

| 612 |

+

" x = self.add_0([x, inputs])\n",

|

| 613 |

+

"\n",

|

| 614 |

+

" # Attention block\n",

|

| 615 |

+

" y = self.norm_1(x)\n",

|

| 616 |

+

" y = self.mha_1(\n",

|

| 617 |

+