Upload folder using huggingface_hub

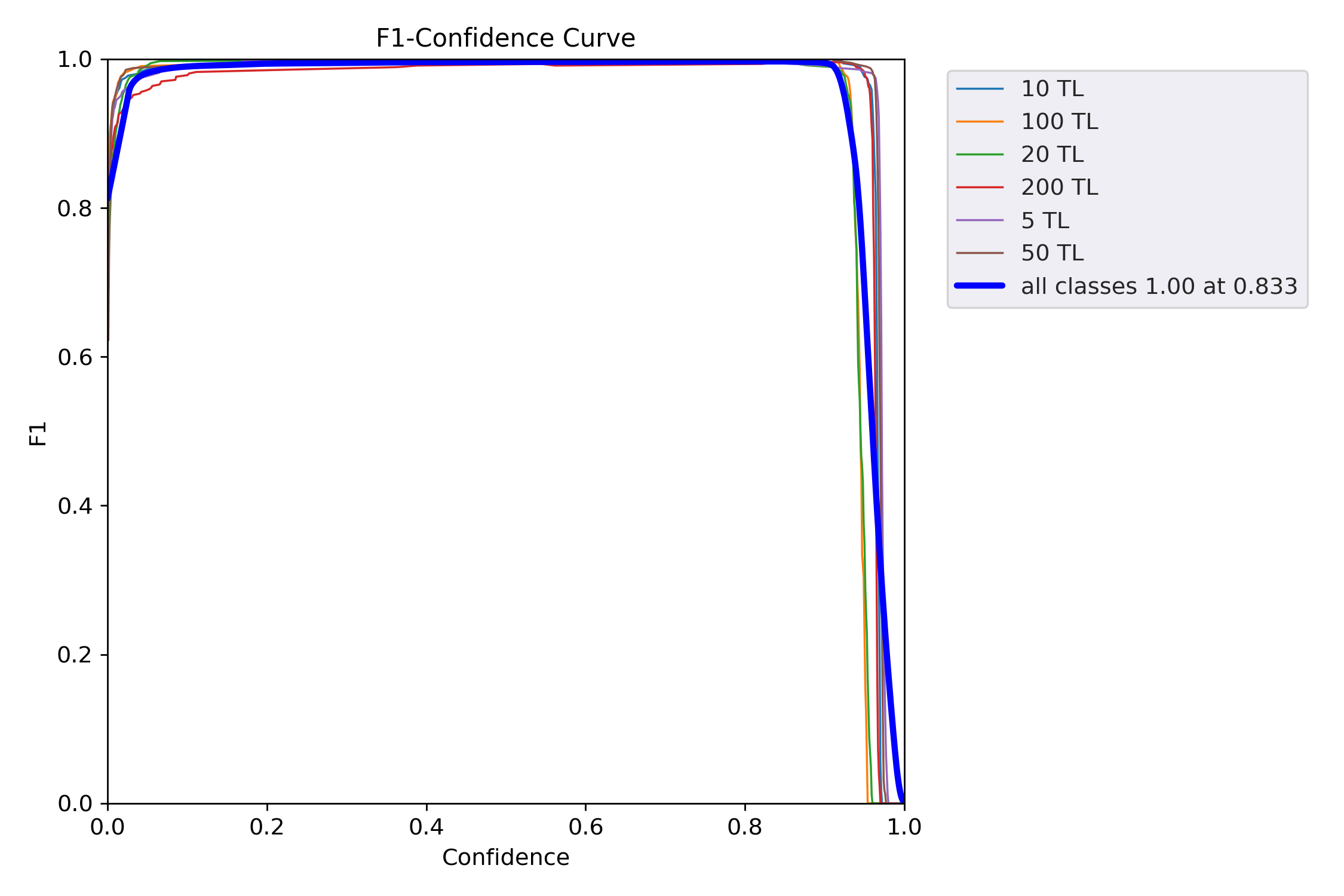

Browse files- F1_curve.png +0 -0

- PR_curve.png +0 -0

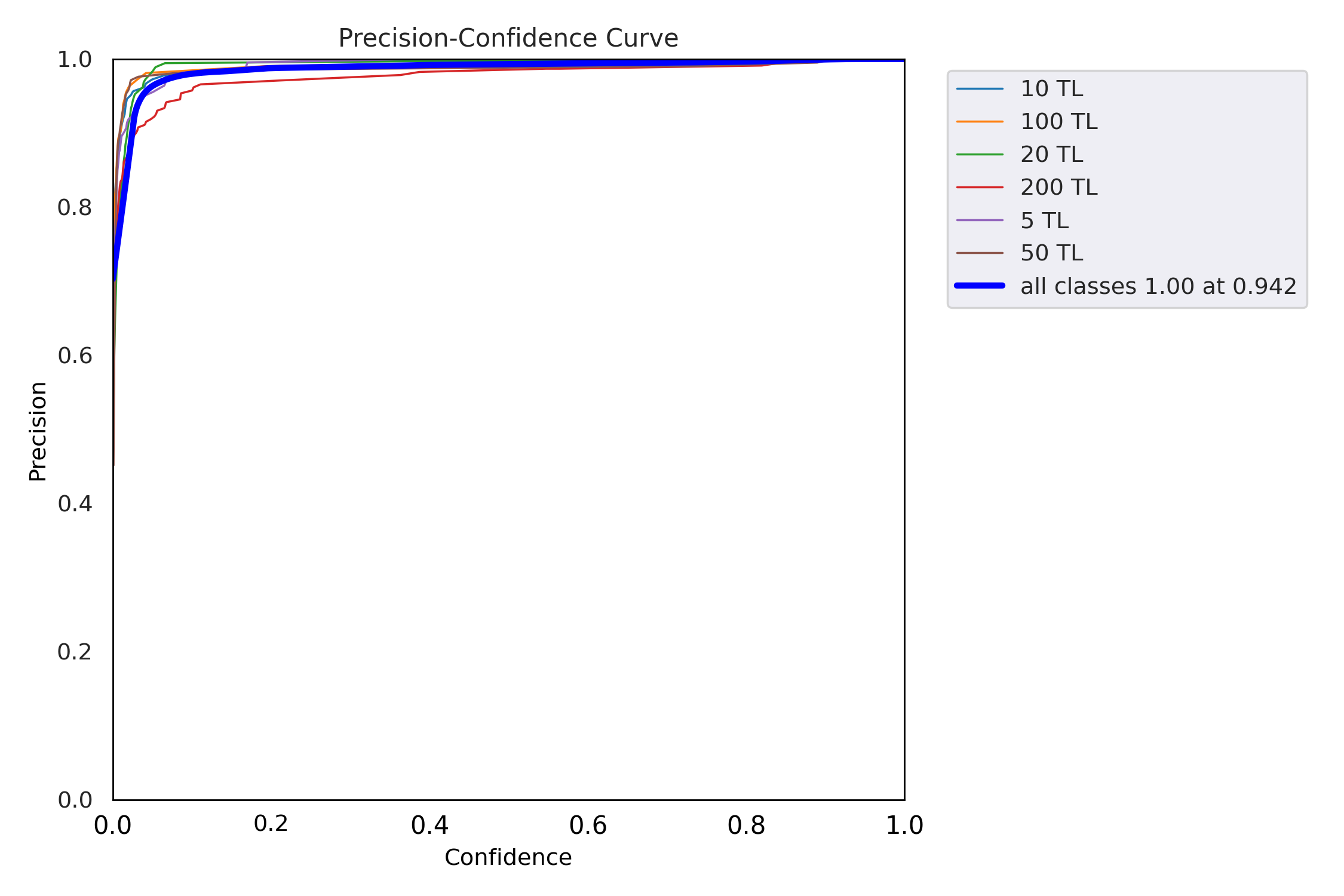

- P_curve.png +0 -0

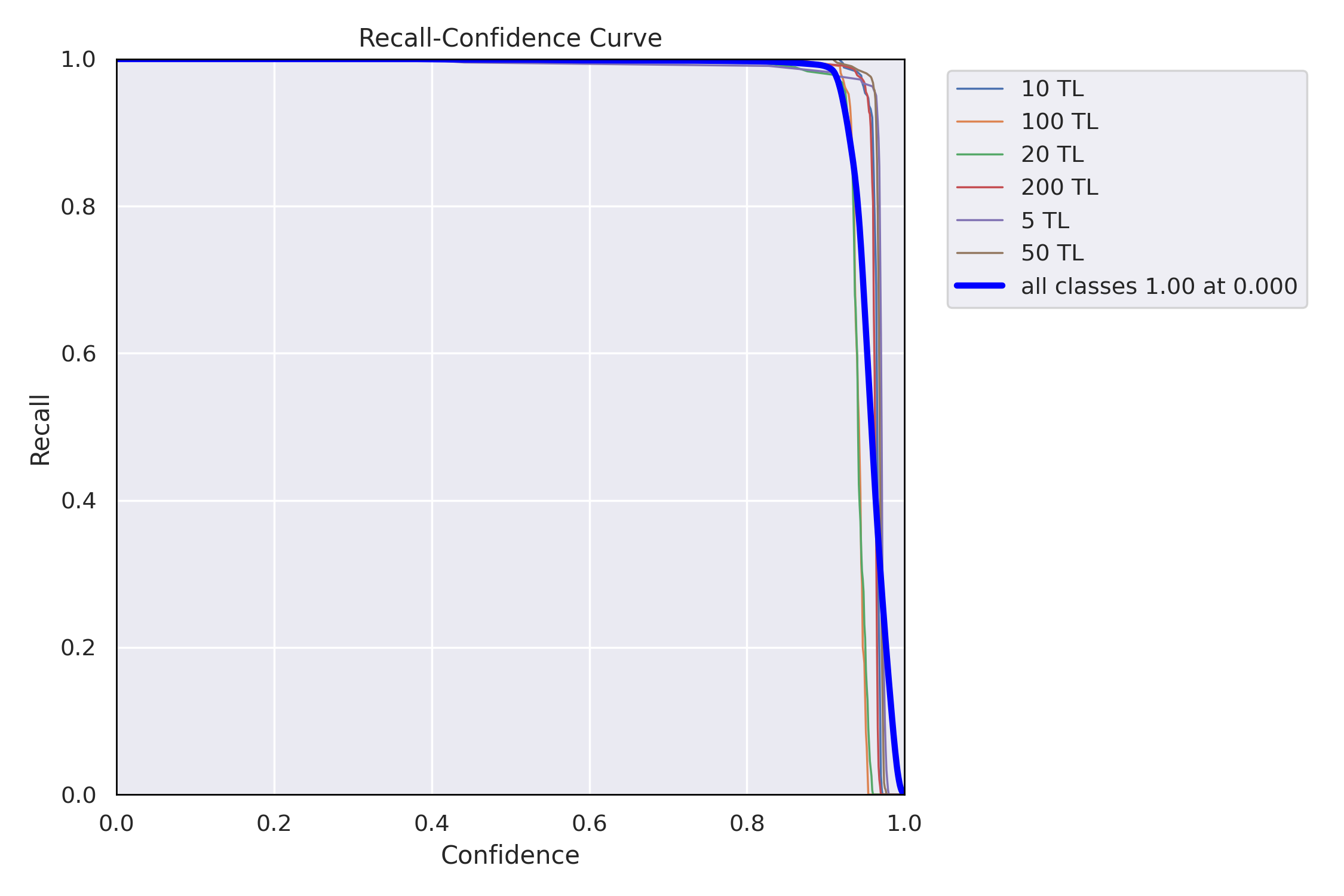

- R_curve.png +0 -0

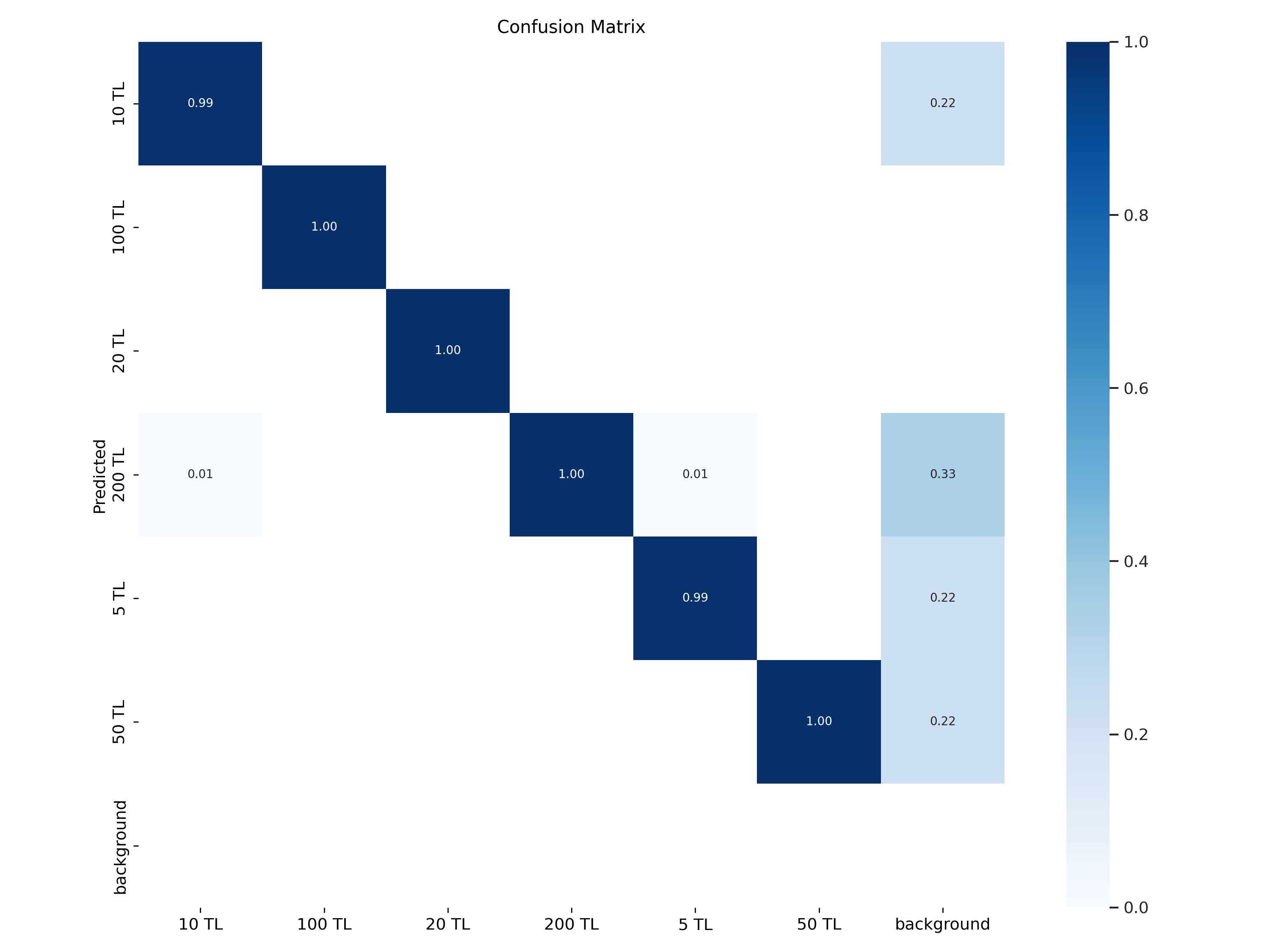

- confusion_matrix.png +0 -0

- events.out.tfevents.1725060504.fcc76edf3b45.1821.0 +3 -0

- hyp.yaml +28 -0

- labels.jpg +0 -0

- labels_correlogram.jpg +0 -0

- model_artifacts.json +1 -0

- opt.yaml +72 -0

- results.csv +26 -0

- results.png +0 -0

- roboflow_deploy.zip +3 -0

- state_dict.pt +3 -0

- train_batch0.jpg +0 -0

- train_batch1.jpg +0 -0

- train_batch2.jpg +0 -0

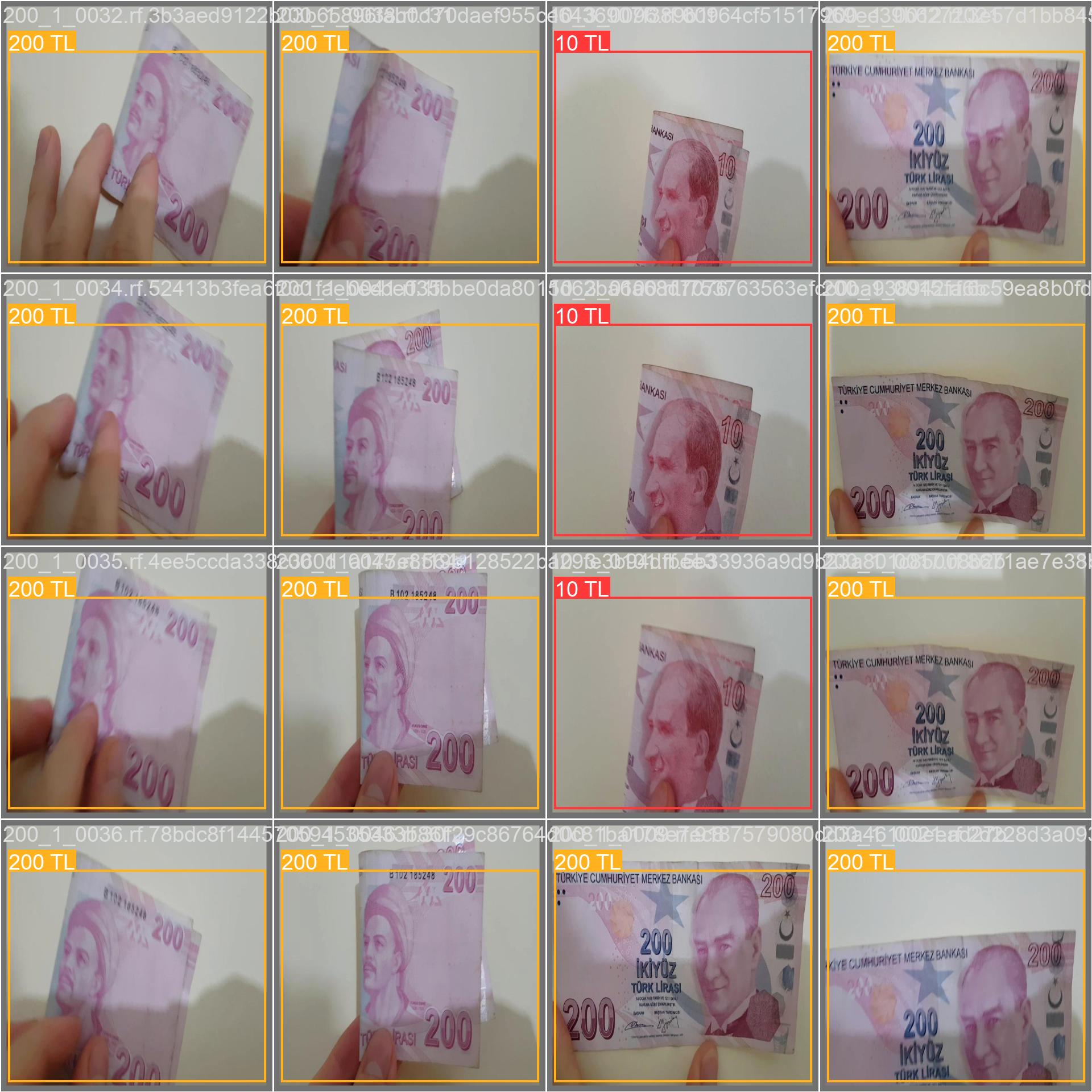

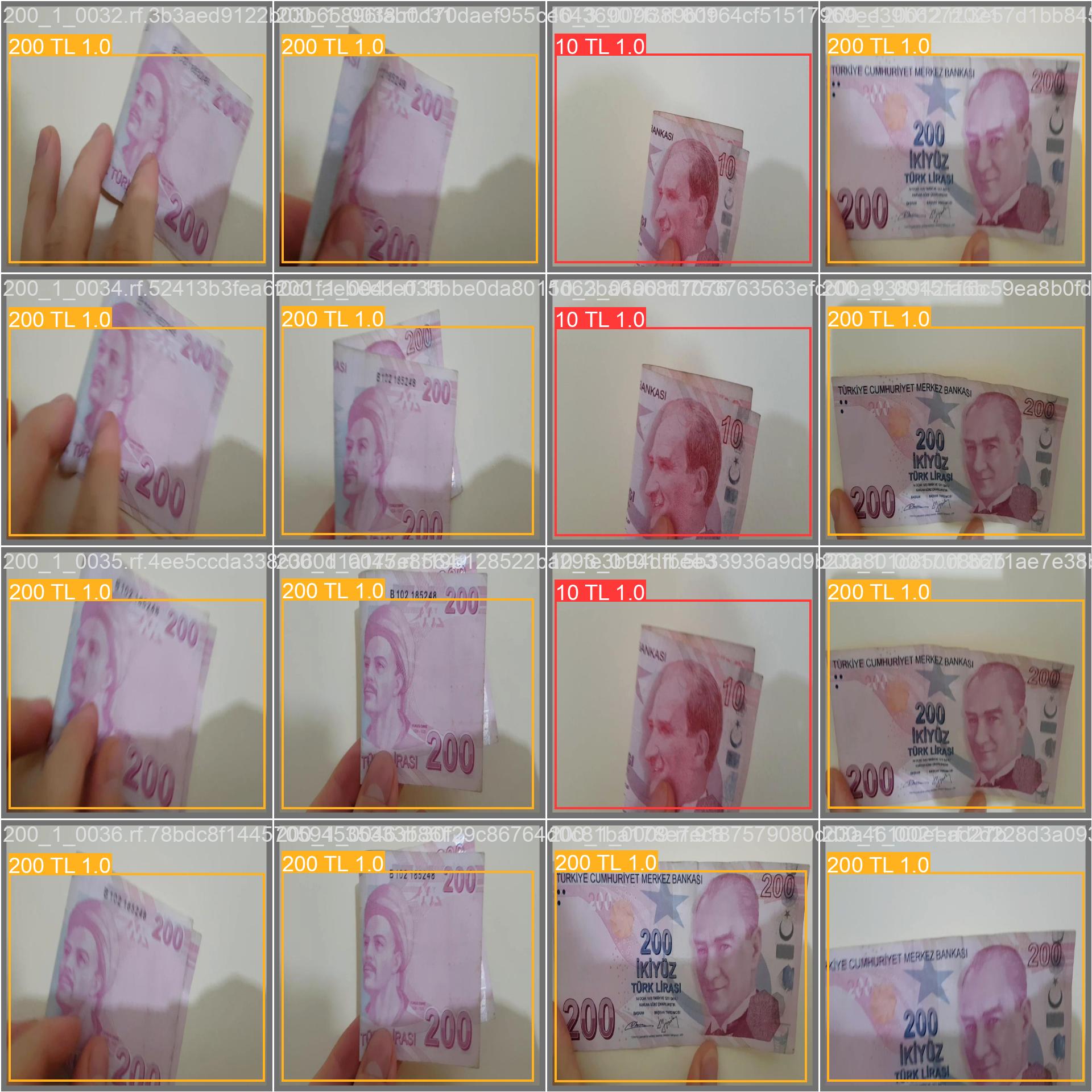

- val_batch0_labels.jpg +0 -0

- val_batch0_pred.jpg +0 -0

- val_batch1_labels.jpg +0 -0

- val_batch1_pred.jpg +0 -0

- val_batch2_labels.jpg +0 -0

- val_batch2_pred.jpg +0 -0

- weights/best.pt +3 -0

- weights/best_striped.pt +3 -0

- weights/last.pt +3 -0

- weights/last_striped.pt +3 -0

F1_curve.png ADDED

PR_curve.png ADDED

P_curve.png ADDED

R_curve.png ADDED

confusion_matrix.png ADDED

events.out.tfevents.1725060504.fcc76edf3b45.1821.0 ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:50b333f8376ca2811c00af1c0ed56719b3c5cc6be52e16684295e1976cb59f2f
+size 1736517
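The three added lines above are a Git LFS pointer: the repository stores only this small text stub, and the actual binary is fetched by its SHA-256 digest. As a minimal sketch (the helper name `parse_lfs_pointer` is hypothetical and not part of any library), a pointer like this can be split into its fields as follows:

```python
def parse_lfs_pointer(text: str) -> dict:
    """Split a Git LFS pointer file into version, hash algorithm, digest and size."""
    fields = {}
    for line in text.strip().splitlines():
        # Each pointer line is "<key> <value>", separated by the first space.
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid field has the form "<algorithm>:<hex digest>".
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),  # size of the real file in bytes
    }


# Pointer contents copied from the diff above.
pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:50b333f8376ca2811c00af1c0ed56719b3c5cc6be52e16684295e1976cb59f2f
size 1736517
"""

info = parse_lfs_pointer(pointer)
print(info["hash_algo"], info["size"])
```

The same three-line layout recurs for every `.pt` and `.zip` file in this commit, so the same helper would apply to those pointers unchanged.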

hyp.yaml ADDED

@@ -0,0 +1,28 @@
+lr0: 0.01
+lrf: 0.01
+momentum: 0.937
+weight_decay: 0.0005
+warmup_epochs: 3.0
+warmup_momentum: 0.8
+warmup_bias_lr: 0.1
+box: 7.5
+cls: 0.5
+cls_pw: 1.0
+dfl: 1.5
+obj_pw: 1.0
+iou_t: 0.2
+anchor_t: 5.0
+fl_gamma: 0.0
+hsv_h: 0.015
+hsv_s: 0.7
+hsv_v: 0.4
+degrees: 0.0
+translate: 0.1
+scale: 0.9
+shear: 0.0
+perspective: 0.0
+flipud: 0.0
+fliplr: 0.5
+mosaic: 1.0
+mixup: 0.15
+copy_paste: 0.3

labels.jpg ADDED

labels_correlogram.jpg ADDED

model_artifacts.json ADDED

@@ -0,0 +1 @@
+{"names": ["10 TL", "100 TL", "20 TL", "200 TL", "5 TL", "50 TL"], "nc": 6, "args": {"imgsz": 640, "batch": 16}, "model_type": "yolov9", "yaml": {"nc": 6, "depth_multiple": 1.0, "width_multiple": 1.0, "anchors": 3, "backbone": [[-1, 1, "Conv", [64, 3, 2]], [-1, 1, "Conv", [128, 3, 2]], [-1, 1, "RepNCSPELAN4", [256, 128, 64, 1]], [-1, 1, "ADown", [256]], [-1, 1, "RepNCSPELAN4", [512, 256, 128, 1]], [-1, 1, "ADown", [512]], [-1, 1, "RepNCSPELAN4", [512, 512, 256, 1]], [-1, 1, "ADown", [512]], [-1, 1, "RepNCSPELAN4", [512, 512, 256, 1]]], "head": [[-1, 1, "SPPELAN", [512, 256]], [-1, 1, "nn.Upsample", ["None", 2, "nearest"]], [[-1, 6], 1, "Concat", [1]], [-1, 1, "RepNCSPELAN4", [512, 512, 256, 1]], [-1, 1, "nn.Upsample", ["None", 2, "nearest"]], [[-1, 4], 1, "Concat", [1]], [-1, 1, "RepNCSPELAN4", [256, 256, 128, 1]], [-1, 1, "ADown", [256]], [[-1, 12], 1, "Concat", [1]], [-1, 1, "RepNCSPELAN4", [512, 512, 256, 1]], [-1, 1, "ADown", [512]], [[-1, 9], 1, "Concat", [1]], [-1, 1, "RepNCSPELAN4", [512, 512, 256, 1]], [[15, 18, 21], 1, "DDetect", ["nc"]]], "ch": 3}}
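The `names` list in model_artifacts.json is ordered as strings rather than by banknote value, so class index 1 is "100 TL", not "20 TL". A minimal sketch of turning predicted class indices into labels, assuming a trimmed copy of the JSON above (the `yaml` architecture block is omitted here for brevity, and `label_for` is a hypothetical helper):

```python
import json

# Trimmed copy of model_artifacts.json; only the fields used below are kept.
artifacts_json = (
    '{"names": ["10 TL", "100 TL", "20 TL", "200 TL", "5 TL", "50 TL"], '
    '"nc": 6, "args": {"imgsz": 640, "batch": 16}, "model_type": "yolov9"}'
)
artifacts = json.loads(artifacts_json)

# Sanity check: the declared class count should match the list of names.
if len(artifacts["names"]) != artifacts["nc"]:
    raise ValueError("nc does not match the number of class names")

# Map class index -> label, in the order stored in the file.
id_to_name = dict(enumerate(artifacts["names"]))


def label_for(class_id: int) -> str:
    """Return the banknote label for a predicted class index."""
    return id_to_name[class_id]


print(label_for(1), "|", label_for(4))
```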

opt.yaml ADDED

@@ -0,0 +1,72 @@
+weights: /content/weights/gelan-c.pt
+cfg: models/detect/gelan-c.yaml
+data: /content/yolov9/para-tanima-2/data.yaml
+hyp:
+  lr0: 0.01
+  lrf: 0.01
+  momentum: 0.937
+  weight_decay: 0.0005
+  warmup_epochs: 3.0
+  warmup_momentum: 0.8
+  warmup_bias_lr: 0.1
+  box: 7.5
+  cls: 0.5
+  cls_pw: 1.0
+  dfl: 1.5
+  obj_pw: 1.0
+  iou_t: 0.2
+  anchor_t: 5.0
+  fl_gamma: 0.0
+  hsv_h: 0.015
+  hsv_s: 0.7
+  hsv_v: 0.4
+  degrees: 0.0
+  translate: 0.1
+  scale: 0.9
+  shear: 0.0
+  perspective: 0.0
+  flipud: 0.0
+  fliplr: 0.5
+  mosaic: 1.0
+  mixup: 0.15
+  copy_paste: 0.3
+epochs: 25
+batch_size: 16
+imgsz: 640
+rect: false
+resume: false
+nosave: false
+noval: false
+noautoanchor: false
+noplots: false
+evolve: null
+bucket: ''
+cache: null
+image_weights: false
+device: '0'
+multi_scale: false
+single_cls: false
+optimizer: SGD
+sync_bn: false
+workers: 8
+project: runs/train
+name: exp
+exist_ok: false
+quad: false
+cos_lr: false
+flat_cos_lr: false
+fixed_lr: false
+label_smoothing: 0.0
+patience: 100
+freeze:
+- 0
+save_period: -1
+seed: 0
+local_rank: -1
+min_items: 0
+close_mosaic: 15
+entity: null
+upload_dataset: false
+bbox_interval: -1
+artifact_alias: latest
+save_dir: runs/train/exp
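With `cos_lr`, `flat_cos_lr` and `fixed_lr` all false, YOLOv5-style training scripts typically fall back to a linear decay from `lr0` down to `lr0 * lrf`. The sketch below assumes that formula; it is not taken from this commit, and the function name `linear_lr` is hypothetical. Its outputs for epochs 3, 4 and 23 coincide with values that appear in the `x/lr0` column of results.csv (0.008812, 0.008416, 0.000892), although the logged rows appear to trail the formula by one epoch once warmup ends.

```python
def linear_lr(epoch: int, lr0: float = 0.01, lrf: float = 0.01, epochs: int = 25) -> float:
    """Assumed linear decay: lr0 at epoch 0, approaching lr0 * lrf at the final epoch."""
    return lr0 * ((1 - epoch / epochs) * (1.0 - lrf) + lrf)


# Defaults mirror lr0, lrf and epochs from opt.yaml above.
for epoch in (3, 4, 23):
    print(epoch, round(linear_lr(epoch), 6))
```

During the first `warmup_epochs` (3.0 here) the trainer usually interpolates the rate per iteration instead, which would explain why the first three logged rows do not follow this line.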

results.csv ADDED

@@ -0,0 +1,26 @@
+epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision, metrics/recall, metrics/mAP_0.5,metrics/mAP_0.5:0.95, val/box_loss, val/cls_loss, val/dfl_loss, x/lr0, x/lr1, x/lr2
+0, 0.88252, 1.8432, 1.3754, 0.94016, 0.90972, 0.97322, 0.90454, 0.37222, 0.60163, 1.0468, 0.070123, 0.0033196, 0.0033196
+1, 0.72024, 0.97979, 1.2164, 0.85556, 0.82168, 0.88379, 0.77593, 0.45809, 0.98985, 1.168, 0.03986, 0.0063895, 0.0063895
+2, 0.76763, 1.0334, 1.2524, 0.81785, 0.70752, 0.79806, 0.56731, 0.98722, 1.6022, 1.8426, 0.0093325, 0.0091954, 0.0091954
+3, 0.78704, 1.0848, 1.2715, 0.81426, 0.79119, 0.88966, 0.56661, 0.94341, 1.2358, 1.6505, 0.008812, 0.008812, 0.008812
+4, 0.7514, 1.0018, 1.237, 0.92151, 0.92984, 0.96649, 0.7224, 0.84681, 0.91712, 1.5872, 0.008812, 0.008812, 0.008812
+5, 0.71603, 0.92162, 1.214, 0.92402, 0.89547, 0.96905, 0.8126, 0.65768, 0.70344, 1.4581, 0.008416, 0.008416, 0.008416
+6, 0.69041, 0.91273, 1.1982, 0.97218, 0.95404, 0.99109, 0.88117, 0.54191, 0.54814, 1.2096, 0.00802, 0.00802, 0.00802
+7, 0.67006, 0.86874, 1.1874, 0.97249, 0.9701, 0.99186, 0.84037, 0.60067, 0.51293, 1.2266, 0.007624, 0.007624, 0.007624
+8, 0.63014, 0.81505, 1.1593, 0.974, 0.99228, 0.99229, 0.88805, 0.48941, 0.46551, 1.1613, 0.007228, 0.007228, 0.007228
+9, 0.60669, 0.79376, 1.1411, 0.9667, 0.98155, 0.99109, 0.93512, 0.37412, 0.48994, 1.0514, 0.006832, 0.006832, 0.006832
+10, 0.27779, 0.43606, 1.0769, 0.96651, 0.9821, 0.99327, 0.9099, 0.36574, 0.37635, 1.006, 0.006436, 0.006436, 0.006436
+11, 0.22003, 0.35626, 1.0261, 0.94504, 0.96286, 0.99305, 0.93038, 0.38966, 0.42161, 1.0586, 0.00604, 0.00604, 0.00604
+12, 0.21833, 0.34583, 1.0347, 0.94361, 0.94989, 0.99142, 0.98768, 0.24372, 0.41852, 0.90121, 0.005644, 0.005644, 0.005644
+13, 0.20043, 0.29711, 1.0107, 0.96478, 0.9544, 0.9863, 0.98451, 0.25271, 0.39661, 0.89518, 0.005248, 0.005248, 0.005248
+14, 0.19662, 0.27814, 1.0135, 0.98841, 0.99211, 0.99481, 0.99385, 0.24574, 0.27229, 0.88403, 0.004852, 0.004852, 0.004852
+15, 0.1857, 0.24168, 1.0064, 0.9959, 0.99285, 0.99497, 0.94513, 0.33919, 0.27097, 0.99197, 0.004456, 0.004456, 0.004456
+16, 0.18126, 0.21709, 1.0067, 0.99167, 0.9934, 0.99489, 0.98708, 0.28977, 0.24611, 0.94808, 0.00406, 0.00406, 0.00406
+17, 0.18172, 0.20862, 1.0056, 0.9862, 0.98849, 0.99456, 0.99348, 0.21272, 0.24951, 0.83152, 0.003664, 0.003664, 0.003664
+18, 0.16843, 0.19872, 0.99908, 0.9809, 0.97734, 0.99399, 0.99374, 0.23835, 0.29962, 0.87817, 0.003268, 0.003268, 0.003268
+19, 0.1672, 0.17939, 1.0003, 0.99667, 0.99695, 0.995, 0.99488, 0.23018, 0.20267, 0.86361, 0.002872, 0.002872, 0.002872
+20, 0.15985, 0.1616, 0.99427, 0.9704, 0.97807, 0.99244, 0.99222, 0.25208, 0.25832, 0.87329, 0.002476, 0.002476, 0.002476
+21, 0.15558, 0.142, 0.98971, 0.9961, 0.99796, 0.99398, 0.94168, 0.30649, 0.19504, 0.92509, 0.00208, 0.00208, 0.00208
+22, 0.14932, 0.14761, 0.98875, 0.9994, 0.99998, 0.995, 0.97926, 0.28341, 0.25527, 0.92273, 0.001684, 0.001684, 0.001684
+23, 0.14334, 0.12234, 0.98213, 0.99846, 0.99858, 0.995, 0.99427, 0.25557, 0.20835, 0.88574, 0.001288, 0.001288, 0.001288
+24, 0.13105, 0.11604, 0.98043, 0.99882, 0.9983, 0.995, 0.99269, 0.2453, 0.19962, 0.8813, 0.000892, 0.000892, 0.000892
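To see which epoch a checkpoint such as `weights/best.pt` most likely corresponds to, the rows can be ranked by a fitness score. The sketch below assumes the YOLOv5-style weighting of 0.1 for mAP@0.5 and 0.9 for mAP@0.5:0.95; this commit does not confirm which weighting the trainer used. Only four rows from the table above are embedded to keep the example short, and the header cells are stripped because the file pads them with spaces.

```python
import csv
import io

# Four rows copied from results.csv above, reduced to the columns used here.
sample = """epoch, metrics/mAP_0.5,metrics/mAP_0.5:0.95
14, 0.99481, 0.99385
19, 0.995, 0.99488
23, 0.995, 0.99427
24, 0.995, 0.99269
"""


def fitness(row: dict) -> float:
    """Assumed weighting: 10% mAP@0.5 plus 90% mAP@0.5:0.95."""
    return 0.1 * float(row["metrics/mAP_0.5"]) + 0.9 * float(row["metrics/mAP_0.5:0.95"])


reader = csv.reader(io.StringIO(sample))
header = [cell.strip() for cell in next(reader)]  # remove padding around column names
rows = [dict(zip(header, (cell.strip() for cell in record))) for record in reader if record]

best = max(rows, key=fitness)
print("best epoch:", best["epoch"], "fitness:", round(fitness(best), 6))
```

Under this weighting, epoch 19 also ranks first across all 25 rows of the full table, since it has the highest mAP@0.5:0.95 (0.99488) together with the top mAP@0.5 (0.995).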

results.png ADDED

roboflow_deploy.zip ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:c42360da92714f7f76c95536850024729a0643b09c92981ffb67a722554c03ff
+size 47586072

state_dict.pt ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:ba10ddbf947b9b20b3f02999d94465b6d459e2971747a2375f748b8cc7fc60c1
+size 51323111

train_batch0.jpg ADDED

train_batch1.jpg ADDED

train_batch2.jpg ADDED

val_batch0_labels.jpg ADDED

val_batch0_pred.jpg ADDED

val_batch1_labels.jpg ADDED

val_batch1_pred.jpg ADDED

val_batch2_labels.jpg ADDED

val_batch2_pred.jpg ADDED

weights/best.pt ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:3beda31fb42846ceec1a3015aad09e7f34e23344772c338873636e32b45bca4c
+size 204662374

weights/best_striped.pt ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:1dc866b3b10b054cabf2ef641f49427aab8cad0634728b20fce0ab85818b3aeb
+size 51466832

weights/last.pt ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:4a5ef885c125240bc17b7e48fa8143b479d629c6db8388509b217aec47c1d610
+size 204662374

weights/last_striped.pt ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:a06288e203955b93989c5f281ee61b380e0703a61f7f97993a0c90b724a2cc32
+size 51466832