Spaces:

Build error

Build error

init space

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- Dockerfile +52 -0

- LICENSE +21 -0

- README.md +681 -8

- app/app.py +34 -0

- code_snippets/03_custom_odm_example.py +10 -0

- code_snippets/03_orm.py +37 -0

- code_snippets/08_instructor_embeddings.py +18 -0

- code_snippets/08_text_embeddings.py +28 -0

- code_snippets/08_text_image_embeddings.py +37 -0

- configs/digital_data_etl_cs370.yaml +14 -0

- configs/end_to_end_data.yaml +87 -0

- configs/evaluating.yaml +9 -0

- configs/export_artifact_to_json.yaml +13 -0

- configs/feature_engineering.yaml +10 -0

- configs/generate_instruct_datasets.yaml +13 -0

- configs/generate_preference_datasets.yaml +13 -0

- configs/training.yaml +14 -0

- data/artifacts/cleaned_documents.json +0 -0

- data/artifacts/instruct_datasets.json +0 -0

- data/artifacts/preference_datasets.json +0 -0

- data/artifacts/raw_documents.json +0 -0

- data/data_warehouse_raw_data/ArticleDocument.json +0 -0

- data/data_warehouse_raw_data/PostDocument.json +1 -0

- data/data_warehouse_raw_data/RepositoryDocument.json +1 -0

- data/data_warehouse_raw_data/UserDocument.json +1 -0

- docker-compose.yml +83 -0

- images/cover_plus.png +0 -0

- images/crazy_cat.jpg +0 -0

- llm_engineering/__init__.py +4 -0

- llm_engineering/application/__init__.py +3 -0

- llm_engineering/application/crawlers/__init__.py +7 -0

- llm_engineering/application/crawlers/__pycache__/__init__.cpython-311.pyc +0 -0

- llm_engineering/application/crawlers/__pycache__/base.cpython-311.pyc +0 -0

- llm_engineering/application/crawlers/__pycache__/custom_article.cpython-311.pyc +0 -0

- llm_engineering/application/crawlers/__pycache__/dispatcher.cpython-311.pyc +0 -0

- llm_engineering/application/crawlers/__pycache__/github.cpython-311.pyc +0 -0

- llm_engineering/application/crawlers/__pycache__/linkedin.cpython-311.pyc +0 -0

- llm_engineering/application/crawlers/__pycache__/medium.cpython-311.pyc +0 -0

- llm_engineering/application/crawlers/base.py +63 -0

- llm_engineering/application/crawlers/custom_article.py +54 -0

- llm_engineering/application/crawlers/dispatcher.py +39 -0

- llm_engineering/application/crawlers/github.py +158 -0

- llm_engineering/application/dataset/__init__.py +3 -0

- llm_engineering/application/dataset/__pycache__/__init__.cpython-311.pyc +0 -0

- llm_engineering/application/dataset/__pycache__/constants.cpython-311.pyc +0 -0

- llm_engineering/application/dataset/__pycache__/generation.cpython-311.pyc +0 -0

- llm_engineering/application/dataset/__pycache__/output_parsers.cpython-311.pyc +0 -0

- llm_engineering/application/dataset/__pycache__/utils.cpython-311.pyc +0 -0

- llm_engineering/application/dataset/constants.py +26 -0

- llm_engineering/application/dataset/generation.py +260 -0

Dockerfile

ADDED

|

@@ -0,0 +1,52 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

FROM python:3.11-slim-bullseye AS release

|

| 2 |

+

|

| 3 |

+

ENV WORKSPACE_ROOT=/llm_engineering/

|

| 4 |

+

ENV PYTHONDONTWRITEBYTECODE=1 \

|

| 5 |

+

PYTHONUNBUFFERED=1 \

|

| 6 |

+

POETRY_VERSION=1.8.3 \

|

| 7 |

+

DEBIAN_FRONTEND=noninteractive \

|

| 8 |

+

POETRY_NO_INTERACTION=1

|

| 9 |

+

|

| 10 |

+

RUN apt-get update -y && apt-get install -y --no-install-recommends \

|

| 11 |

+

wget \

|

| 12 |

+

curl \

|

| 13 |

+

gnupg \

|

| 14 |

+

build-essential \

|

| 15 |

+

gcc \

|

| 16 |

+

python3-dev \

|

| 17 |

+

libglib2.0-dev \

|

| 18 |

+

libnss3-dev \

|

| 19 |

+

&& wget -q -O - https://dl-ssl.google.com/linux/linux_signing_key.pub | \

|

| 20 |

+

gpg --dearmor -o /usr/share/keyrings/google-linux-signing-key.gpg \

|

| 21 |

+

&& echo "deb [signed-by=/usr/share/keyrings/google-linux-signing-key.gpg] https://dl.google.com/linux/chrome/deb/ stable main" > /etc/apt/sources.list.d/google-chrome.list \

|

| 22 |

+

&& apt-get update -y && apt-get install -y --no-install-recommends google-chrome-stable \

|

| 23 |

+

&& apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/*

|

| 24 |

+

|

| 25 |

+

RUN pip install --no-cache-dir "poetry==$POETRY_VERSION" && \

|

| 26 |

+

poetry config installer.max-workers 20 && \

|

| 27 |

+

poetry config virtualenvs.create false

|

| 28 |

+

|

| 29 |

+

WORKDIR $WORKSPACE_ROOT

|

| 30 |

+

|

| 31 |

+

COPY pyproject.toml poetry.lock $WORKSPACE_ROOT

|

| 32 |

+

RUN poetry install --no-root --no-interaction --no-cache --without dev && \

|

| 33 |

+

poetry self add 'poethepoet[poetry_plugin]' && \

|

| 34 |

+

rm -rf ~/.cache/pypoetry/*

|

| 35 |

+

|

| 36 |

+

RUN curl -fsSL https://ollama.com/install.sh | sh

|

| 37 |

+

RUN ollama --version

|

| 38 |

+

|

| 39 |

+

RUN bash -c "ollama serve & sleep 5 && ollama pull llama3.1"

|

| 40 |

+

|

| 41 |

+

# Ensure app.py is copied

|

| 42 |

+

|

| 43 |

+

EXPOSE 7860

|

| 44 |

+

|

| 45 |

+

COPY . $WORKSPACE_ROOT

|

| 46 |

+

|

| 47 |

+

|

| 48 |

+

RUN poetry install

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

#ENTRYPOINT ["bash", "-c", "pwd && ls && poetry run python3 ./app/app.py"]

|

| 52 |

+

CMD ["bash", "-c", "ollama serve & poetry run python3 ./app/app.py"]

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2024 Packt

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,10 +1,683 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

-

title: Docker Test

|

| 3 |

-

emoji: 🐨

|

| 4 |

-

colorFrom: gray

|

| 5 |

-

colorTo: indigo

|

| 6 |

-

sdk: docker

|

| 7 |

-

pinned: false

|

| 8 |

-

---

|

| 9 |

|

| 10 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# CS370 Project

|

| 2 |

+

|

| 3 |

+

In this project we build a Retrieval Augmented Generation (RAG) system. RAG is a recent paradigm for large-scale language understanding tasks. It combines the strengths of retrieval-based and generation-based models, enabling the model to retrieve relevant information from a large corpus and generate a coherent domain-specific response

|

| 4 |

+

|

| 5 |

+

## Team Members

|

| 6 |

+

|

| 7 |

+

Jonah-Alexander Loewnich

|

| 8 |

+

- Github:

|

| 9 |

+

- HuggingFace:

|

| 10 |

+

|

| 11 |

+

Thomas Gammer

|

| 12 |

+

- Github:

|

| 13 |

+

- HuggingFace:

|

| 14 |

+

|

| 15 |

+

## Docker Containers

|

| 16 |

+

|

| 17 |

+

Here are the docker containers up and running.

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

## Crawled Resources

|

| 21 |

+

|

| 22 |

+

- https://github.com/ros-infrastructure/www.ros.org/

|

| 23 |

+

- https://github.com/ros-navigation/docs.nav2.org

|

| 24 |

+

- https://github.com/moveit/moveit2

|

| 25 |

+

- https://github.com/gazebosim/gz-sim

|

| 26 |

+

|

| 27 |

+

## LLM + RAG Responses

|

| 28 |

+

|

| 29 |

+

Here is our models response to the first question.

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

Here is our models response to the second question.

|

| 34 |

+

|

| 35 |

+

|

| 36 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 37 |

|

| 38 |

+

<div align="center">

|

| 39 |

+

<h1>👷 LLM Engineer's Handbook</h1>

|

| 40 |

+

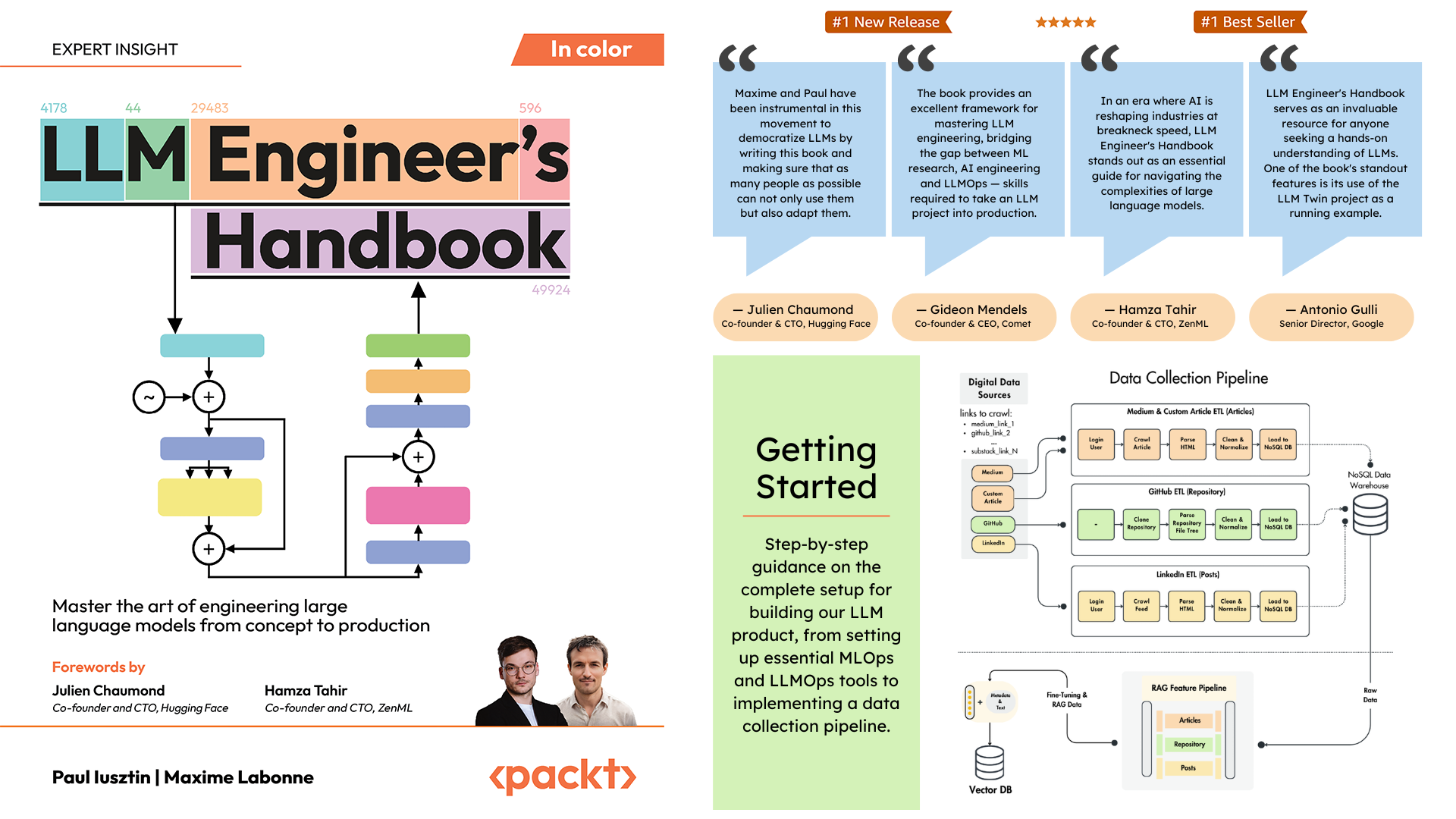

<p class="tagline">Official repository of the <a href="https://www.amazon.com/LLM-Engineers-Handbook-engineering-production/dp/1836200072/">LLM Engineer's Handbook</a> by <a href="https://github.com/iusztinpaul">Paul Iusztin</a> and <a href="https://github.com/mlabonne">Maxime Labonne</a></p>

|

| 41 |

+

</div>

|

| 42 |

+

</br>

|

| 43 |

+

|

| 44 |

+

<p align="center">

|

| 45 |

+

<a href="https://www.amazon.com/LLM-Engineers-Handbook-engineering-production/dp/1836200072/">

|

| 46 |

+

<img src="images/cover_plus.png" alt="Book cover">

|

| 47 |

+

</a>

|

| 48 |

+

</p>

|

| 49 |

+

|

| 50 |

+

## 🌟 Features

|

| 51 |

+

|

| 52 |

+

The goal of this book is to create your own end-to-end LLM-based system using best practices:

|

| 53 |

+

|

| 54 |

+

- 📝 Data collection & generation

|

| 55 |

+

- 🔄 LLM training pipeline

|

| 56 |

+

- 📊 Simple RAG system

|

| 57 |

+

- 🚀 Production-ready AWS deployment

|

| 58 |

+

- 🔍 Comprehensive monitoring

|

| 59 |

+

- 🧪 Testing and evaluation framework

|

| 60 |

+

|

| 61 |

+

You can download and use the final trained model on [Hugging Face](https://huggingface.co/mlabonne/TwinLlama-3.1-8B-DPO).

|

| 62 |

+

|

| 63 |

+

## 🔗 Dependencies

|

| 64 |

+

|

| 65 |

+

### Local dependencies

|

| 66 |

+

|

| 67 |

+

To install and run the project locally, you need the following dependencies.

|

| 68 |

+

|

| 69 |

+

| Tool | Version | Purpose | Installation Link |

|

| 70 |

+

|------|---------|---------|------------------|

|

| 71 |

+

| pyenv | ≥2.3.36 | Multiple Python versions (optional) | [Install Guide](https://github.com/pyenv/pyenv?tab=readme-ov-file#installation) |

|

| 72 |

+

| Python | 3.11 | Runtime environment | [Download](https://www.python.org/downloads/) |

|

| 73 |

+

| Poetry | ≥1.8.3 | Package management | [Install Guide](https://python-poetry.org/docs/#installation) |

|

| 74 |

+

| Docker | ≥27.1.1 | Containerization | [Install Guide](https://docs.docker.com/engine/install/) |

|

| 75 |

+

| AWS CLI | ≥2.15.42 | Cloud management | [Install Guide](https://docs.aws.amazon.com/cli/latest/userguide/getting-started-install.html) |

|

| 76 |

+

| Git | ≥2.44.0 | Version control | [Download](https://git-scm.com/downloads) |

|

| 77 |

+

|

| 78 |

+

### Cloud services

|

| 79 |

+

|

| 80 |

+

The code also uses and depends on the following cloud services. For now, you don't have to do anything. We will guide you in the installation and deployment sections on how to use them:

|

| 81 |

+

|

| 82 |

+

| Service | Purpose |

|

| 83 |

+

|---------|---------|

|

| 84 |

+

| [HuggingFace](https://huggingface.com/) | Model registry |

|

| 85 |

+

| [Comet ML](https://www.comet.com/site/) | Experiment tracker |

|

| 86 |

+

| [Opik](https://www.comet.com/site/products/opik/) | Prompt monitoring |

|

| 87 |

+

| [ZenML](https://www.zenml.io/) | Orchestrator and artifacts layer |

|

| 88 |

+

| [AWS](https://aws.amazon.com/) | Compute and storage |

|

| 89 |

+

| [MongoDB](https://www.mongodb.com/) | NoSQL database |

|

| 90 |

+

| [Qdrant](https://qdrant.tech/) | Vector database |

|

| 91 |

+

| [GitHub Actions](https://github.com/features/actions) | CI/CD pipeline |

|

| 92 |

+

|

| 93 |

+

In the [LLM Engineer's Handbook](https://www.amazon.com/LLM-Engineers-Handbook-engineering-production/dp/1836200072/), Chapter 2 will walk you through each tool. Chapters 10 and 11 provide step-by-step guides on how to set up everything you need.

|

| 94 |

+

|

| 95 |

+

## 🗂️ Project Structure

|

| 96 |

+

|

| 97 |

+

Here is the directory overview:

|

| 98 |

+

|

| 99 |

+

```bash

|

| 100 |

+

.

|

| 101 |

+

├── code_snippets/ # Standalone example code

|

| 102 |

+

├── configs/ # Pipeline configuration files

|

| 103 |

+

├── llm_engineering/ # Core project package

|

| 104 |

+

│ ├── application/

|

| 105 |

+

│ ├── domain/

|

| 106 |

+

│ ├── infrastructure/

|

| 107 |

+

│ ├── model/

|

| 108 |

+

├── pipelines/ # ML pipeline definitions

|

| 109 |

+

├── steps/ # Pipeline components

|

| 110 |

+

├── tests/ # Test examples

|

| 111 |

+

├── tools/ # Utility scripts

|

| 112 |

+

│ ├── run.py

|

| 113 |

+

│ ├── ml_service.py

|

| 114 |

+

│ ├── rag.py

|

| 115 |

+

│ ├── data_warehouse.py

|

| 116 |

+

```

|

| 117 |

+

|

| 118 |

+

`llm_engineering/` is the main Python package implementing LLM and RAG functionality. It follows Domain-Driven Design (DDD) principles:

|

| 119 |

+

|

| 120 |

+

- `domain/`: Core business entities and structures

|

| 121 |

+

- `application/`: Business logic, crawlers, and RAG implementation

|

| 122 |

+

- `model/`: LLM training and inference

|

| 123 |

+

- `infrastructure/`: External service integrations (AWS, Qdrant, MongoDB, FastAPI)

|

| 124 |

+

|

| 125 |

+

The code logic and imports flow as follows: `infrastructure` → `model` → `application` → `domain`

|

| 126 |

+

|

| 127 |

+

`pipelines/`: Contains the ZenML ML pipelines, which serve as the entry point for all the ML pipelines. Coordinates the data processing and model training stages of the ML lifecycle.

|

| 128 |

+

|

| 129 |

+

`steps/`: Contains individual ZenML steps, which are reusable components for building and customizing ZenML pipelines. Steps perform specific tasks (e.g., data loading, preprocessing) and can be combined within the ML pipelines.

|

| 130 |

+

|

| 131 |

+

`tests/`: Covers a few sample tests used as examples within the CI pipeline.

|

| 132 |

+

|

| 133 |

+

`tools/`: Utility scripts used to call the ZenML pipelines and inference code:

|

| 134 |

+

- `run.py`: Entry point script to run ZenML pipelines.

|

| 135 |

+

- `ml_service.py`: Starts the REST API inference server.

|

| 136 |

+

- `rag.py`: Demonstrates usage of the RAG retrieval module.

|

| 137 |

+

- `data_warehouse.py`: Used to export or import data from the MongoDB data warehouse through JSON files.

|

| 138 |

+

|

| 139 |

+

`configs/`: ZenML YAML configuration files to control the execution of pipelines and steps.

|

| 140 |

+

|

| 141 |

+

`code_snippets/`: Independent code examples that can be executed independently.

|

| 142 |

+

|

| 143 |

+

## 💻 Installation

|

| 144 |

+

|

| 145 |

+

### 1. Clone the Repository

|

| 146 |

+

|

| 147 |

+

Start by cloning the repository and navigating to the project directory:

|

| 148 |

+

|

| 149 |

+

```bash

|

| 150 |

+

git clone https://github.com/PacktPublishing/LLM-Engineers-Handbook.git

|

| 151 |

+

cd LLM-Engineers-Handbook

|

| 152 |

+

```

|

| 153 |

+

|

| 154 |

+

Next, we have to prepare your Python environment and its adjacent dependencies.

|

| 155 |

+

|

| 156 |

+

### 2. Set Up Python Environment

|

| 157 |

+

|

| 158 |

+

The project requires Python 3.11. You can either use your global Python installation or set up a project-specific version using pyenv.

|

| 159 |

+

|

| 160 |

+

#### Option A: Using Global Python (if version 3.11 is installed)

|

| 161 |

+

|

| 162 |

+

Verify your Python version:

|

| 163 |

+

|

| 164 |

+

```bash

|

| 165 |

+

python --version # Should show Python 3.11.x

|

| 166 |

+

```

|

| 167 |

+

|

| 168 |

+

#### Option B: Using pyenv (recommended)

|

| 169 |

+

|

| 170 |

+

1. Verify pyenv installation:

|

| 171 |

+

|

| 172 |

+

```bash

|

| 173 |

+

pyenv --version # Should show pyenv 2.3.36 or later

|

| 174 |

+

```

|

| 175 |

+

|

| 176 |

+

2. Install Python 3.11.8:

|

| 177 |

+

|

| 178 |

+

```bash

|

| 179 |

+

pyenv install 3.11.8

|

| 180 |

+

```

|

| 181 |

+

|

| 182 |

+

3. Verify the installation:

|

| 183 |

+

|

| 184 |

+

```bash

|

| 185 |

+

python --version # Should show Python 3.11.8

|

| 186 |

+

```

|

| 187 |

+

|

| 188 |

+

4. Confirm Python version in the project directory:

|

| 189 |

+

|

| 190 |

+

```bash

|

| 191 |

+

python --version

|

| 192 |

+

# Output: Python 3.11.8

|

| 193 |

+

```

|

| 194 |

+

|

| 195 |

+

> [!NOTE]

|

| 196 |

+

> The project includes a `.python-version` file that automatically sets the correct Python version when you're in the project directory.

|

| 197 |

+

|

| 198 |

+

### 3. Install Dependencies

|

| 199 |

+

|

| 200 |

+

The project uses Poetry for dependency management.

|

| 201 |

+

|

| 202 |

+

1. Verify Poetry installation:

|

| 203 |

+

|

| 204 |

+

```bash

|

| 205 |

+

poetry --version # Should show Poetry version 1.8.3 or later

|

| 206 |

+

```

|

| 207 |

+

|

| 208 |

+

2. Set up the project environment and install dependencies:

|

| 209 |

+

|

| 210 |

+

```bash

|

| 211 |

+

poetry env use 3.11

|

| 212 |

+

poetry install --without aws

|

| 213 |

+

poetry run pre-commit install

|

| 214 |

+

```

|

| 215 |

+

|

| 216 |

+

This will:

|

| 217 |

+

|

| 218 |

+

- Configure Poetry to use Python 3.11

|

| 219 |

+

- Install project dependencies (excluding AWS-specific packages)

|

| 220 |

+

- Set up pre-commit hooks for code verification

|

| 221 |

+

|

| 222 |

+

### 4. Activate the Environment

|

| 223 |

+

|

| 224 |

+

As our task manager, we run all the scripts using [Poe the Poet](https://poethepoet.natn.io/index.html).

|

| 225 |

+

|

| 226 |

+

1. Start a Poetry shell:

|

| 227 |

+

|

| 228 |

+

```bash

|

| 229 |

+

poetry shell

|

| 230 |

+

```

|

| 231 |

+

|

| 232 |

+

2. Run project commands using Poe the Poet:

|

| 233 |

+

|

| 234 |

+

```bash

|

| 235 |

+

poetry poe ...

|

| 236 |

+

```

|

| 237 |

+

|

| 238 |

+

<details>

|

| 239 |

+

<summary>🔧 Troubleshooting Poe the Poet Installation</summary>

|

| 240 |

+

|

| 241 |

+

### Alternative Command Execution

|

| 242 |

+

|

| 243 |

+

If you're experiencing issues with `poethepoet`, you can still run the project commands directly through Poetry. Here's how:

|

| 244 |

+

|

| 245 |

+

1. Look up the command definition in `pyproject.toml`

|

| 246 |

+

2. Use `poetry run` with the underlying command

|

| 247 |

+

|

| 248 |

+

#### Example:

|

| 249 |

+

Instead of:

|

| 250 |

+

```bash

|

| 251 |

+

poetry poe local-infrastructure-up

|

| 252 |

+

```

|

| 253 |

+

Use the direct command from pyproject.toml:

|

| 254 |

+

```bash

|

| 255 |

+

poetry run <actual-command-from-pyproject-toml>

|

| 256 |

+

```

|

| 257 |

+

Note: All project commands are defined in the [tool.poe.tasks] section of pyproject.toml

|

| 258 |

+

</details>

|

| 259 |

+

|

| 260 |

+

Now, let's configure our local project with all the necessary credentials and tokens to run the code locally.

|

| 261 |

+

|

| 262 |

+

### 5. Local Development Setup

|

| 263 |

+

|

| 264 |

+

After you have installed all the dependencies, you must create and fill a `.env` file with your credentials to appropriately interact with other services and run the project. Setting your sensitive credentials in a `.env` file is a good security practice, as this file won't be committed to GitHub or shared with anyone else.

|

| 265 |

+

|

| 266 |

+

1. First, copy our example by running the following:

|

| 267 |

+

|

| 268 |

+

```bash

|

| 269 |

+

cp .env.example .env # The file must be at your repository's root!

|

| 270 |

+

```

|

| 271 |

+

|

| 272 |

+

2. Now, let's understand how to fill in all the essential variables within the `.env` file to get you started. The following are the mandatory settings we must complete when working locally:

|

| 273 |

+

|

| 274 |

+

#### OpenAI

|

| 275 |

+

|

| 276 |

+

To authenticate to OpenAI's API, you must fill out the `OPENAI_API_KEY` env var with an authentication token.

|

| 277 |

+

|

| 278 |

+

```env

|

| 279 |

+

OPENAI_API_KEY=your_api_key_here

|

| 280 |

+

```

|

| 281 |

+

|

| 282 |

+

→ Check out this [tutorial](https://platform.openai.com/docs/quickstart) to learn how to provide one from OpenAI.

|

| 283 |

+

|

| 284 |

+

#### Hugging Face

|

| 285 |

+

|

| 286 |

+

To authenticate to Hugging Face, you must fill out the `HUGGINGFACE_ACCESS_TOKEN` env var with an authentication token.

|

| 287 |

+

|

| 288 |

+

```env

|

| 289 |

+

HUGGINGFACE_ACCESS_TOKEN=your_token_here

|

| 290 |

+

```

|

| 291 |

+

|

| 292 |

+

→ Check out this [tutorial](https://huggingface.co/docs/hub/en/security-tokens) to learn how to provide one from Hugging Face.

|

| 293 |

+

|

| 294 |

+

#### Comet ML & Opik

|

| 295 |

+

|

| 296 |

+

To authenticate to Comet ML (required only during training) and Opik, you must fill out the `COMET_API_KEY` env var with your authentication token.

|

| 297 |

+

|

| 298 |

+

```env

|

| 299 |

+

COMET_API_KEY=your_api_key_here

|

| 300 |

+

```

|

| 301 |

+

|

| 302 |

+

→ Check out this [tutorial](https://www.comet.com/docs/v2/api-and-sdk/rest-api/overview/) to learn how to get the Comet ML variables from above. You can also access Opik's dashboard using 🔗[this link](https://www.comet.com/opik).

|

| 303 |

+

|

| 304 |

+

### 6. Deployment Setup

|

| 305 |

+

|

| 306 |

+

When deploying the project to the cloud, we must set additional settings for Mongo, Qdrant, and AWS. If you are just working locally, the default values of these env vars will work out of the box. Detailed deployment instructions are available in Chapter 11 of the [LLM Engineer's Handbook](https://www.amazon.com/LLM-Engineers-Handbook-engineering-production/dp/1836200072/).

|

| 307 |

+

|

| 308 |

+

#### MongoDB

|

| 309 |

+

|

| 310 |

+

We must change the `DATABASE_HOST` env var with the URL pointing to your cloud MongoDB cluster.

|

| 311 |

+

|

| 312 |

+

```env

|

| 313 |

+

DATABASE_HOST=your_mongodb_url

|

| 314 |

+

```

|

| 315 |

+

|

| 316 |

+

→ Check out this [tutorial](https://www.mongodb.com/resources/products/fundamentals/mongodb-cluster-setup) to learn how to create and host a MongoDB cluster for free.

|

| 317 |

+

|

| 318 |

+

#### Qdrant

|

| 319 |

+

|

| 320 |

+

Change `USE_QDRANT_CLOUD` to `true`, `QDRANT_CLOUD_URL` with the URL point to your cloud Qdrant cluster, and `QDRANT_APIKEY` with its API key.

|

| 321 |

+

|

| 322 |

+

```env

|

| 323 |

+

USE_QDRANT_CLOUD=true

|

| 324 |

+

QDRANT_CLOUD_URL=your_qdrant_cloud_url

|

| 325 |

+

QDRANT_APIKEY=your_qdrant_api_key

|

| 326 |

+

```

|

| 327 |

+

|

| 328 |

+

→ Check out this [tutorial](https://qdrant.tech/documentation/cloud/create-cluster/) to learn how to create a Qdrant cluster for free

|

| 329 |

+

|

| 330 |

+

#### AWS

|

| 331 |

+

|

| 332 |

+

For your AWS set-up to work correctly, you need the AWS CLI installed on your local machine and properly configured with an admin user (or a user with enough permissions to create new SageMaker, ECR, and S3 resources; using an admin user will make everything more straightforward).

|

| 333 |

+

|

| 334 |

+

Chapter 2 provides step-by-step instructions on how to install the AWS CLI, create an admin user on AWS, and get an access key to set up the `AWS_ACCESS_KEY` and `AWS_SECRET_KEY` environment variables. If you already have an AWS admin user in place, you have to configure the following env vars in your `.env` file:

|

| 335 |

+

|

| 336 |

+

```bash

|

| 337 |

+

AWS_REGION=eu-central-1 # Change it with your AWS region.

|

| 338 |

+

AWS_ACCESS_KEY=your_aws_access_key

|

| 339 |

+

AWS_SECRET_KEY=your_aws_secret_key

|

| 340 |

+

```

|

| 341 |

+

|

| 342 |

+

AWS credentials are typically stored in `~/.aws/credentials`. You can view this file directly using `cat` or similar commands:

|

| 343 |

+

|

| 344 |

+

```bash

|

| 345 |

+

cat ~/.aws/credentials

|

| 346 |

+

```

|

| 347 |

+

|

| 348 |

+

> [!IMPORTANT]

|

| 349 |

+

> Additional configuration options are available in [settings.py](https://github.com/PacktPublishing/LLM-Engineers-Handbook/blob/main/llm_engineering/settings.py). Any variable in the `Settings` class can be configured through the `.env` file.

|

| 350 |

+

|

| 351 |

+

## 🏗️ Infrastructure

|

| 352 |

+

|

| 353 |

+

### Local infrastructure (for testing and development)

|

| 354 |

+

|

| 355 |

+

When running the project locally, we host a MongoDB and Qdrant database using Docker. Also, a testing ZenML server is made available through their Python package.

|

| 356 |

+

|

| 357 |

+

> [!WARNING]

|

| 358 |

+

> You need Docker installed (>= v27.1.1)

|

| 359 |

+

|

| 360 |

+

For ease of use, you can start the whole local development infrastructure with the following command:

|

| 361 |

+

```bash

|

| 362 |

+

poetry poe local-infrastructure-up

|

| 363 |

+

```

|

| 364 |

+

|

| 365 |

+

Also, you can stop the ZenML server and all the Docker containers using the following command:

|

| 366 |

+

```bash

|

| 367 |

+

poetry poe local-infrastructure-down

|

| 368 |

+

```

|

| 369 |

+

|

| 370 |

+

> [!WARNING]

|

| 371 |

+

> When running on MacOS, before starting the server, export the following environment variable:

|

| 372 |

+

> `export OBJC_DISABLE_INITIALIZE_FORK_SAFETY=YES`

|

| 373 |

+

> Otherwise, the connection between the local server and pipeline will break. 🔗 More details in [this issue](https://github.com/zenml-io/zenml/issues/2369).

|

| 374 |

+

> This is done by default when using Poe the Poet.

|

| 375 |

+

|

| 376 |

+

Start the inference real-time RESTful API:

|

| 377 |

+

```bash

|

| 378 |

+

poetry poe run-inference-ml-service

|

| 379 |

+

```

|

| 380 |

+

|

| 381 |

+

> [!IMPORTANT]

|

| 382 |

+

> The LLM microservice, called by the RESTful API, will work only after deploying the LLM to AWS SageMaker.

|

| 383 |

+

|

| 384 |

+

#### ZenML

|

| 385 |

+

|

| 386 |

+

Dashboard URL: `localhost:8237`

|

| 387 |

+

|

| 388 |

+

Default credentials:

|

| 389 |

+

- `username`: default

|

| 390 |

+

- `password`:

|

| 391 |

+

|

| 392 |

+

→ Find out more about using and setting up [ZenML](https://docs.zenml.io/).

|

| 393 |

+

|

| 394 |

+

#### Qdrant

|

| 395 |

+

|

| 396 |

+

REST API URL: `localhost:6333`

|

| 397 |

+

|

| 398 |

+

Dashboard URL: `localhost:6333/dashboard`

|

| 399 |

+

|

| 400 |

+

→ Find out more about using and setting up [Qdrant with Docker](https://qdrant.tech/documentation/quick-start/).

|

| 401 |

+

|

| 402 |

+

#### MongoDB

|

| 403 |

+

|

| 404 |

+

Database URI: `mongodb://llm_engineering:[email protected]:27017`

|

| 405 |

+

|

| 406 |

+

Database name: `twin`

|

| 407 |

+

|

| 408 |

+

Default credentials:

|

| 409 |

+

- `username`: llm_engineering

|

| 410 |

+

- `password`: llm_engineering

|

| 411 |

+

|

| 412 |

+

→ Find out more about using and setting up [MongoDB with Docker](https://www.mongodb.com/docs/manual/tutorial/install-mongodb-community-with-docker).

|

| 413 |

+

|

| 414 |

+

You can search your MongoDB collections using your **IDEs MongoDB plugin** (which you have to install separately), where you have to use the database URI to connect to the MongoDB database hosted within the Docker container: `mongodb://llm_engineering:[email protected]:27017`

|

| 415 |

+

|

| 416 |

+

> [!IMPORTANT]

|

| 417 |

+

> Everything related to training or running the LLMs (e.g., training, evaluation, inference) can only be run if you set up AWS SageMaker, as explained in the next section on cloud infrastructure.

|

| 418 |

+

|

| 419 |

+

### Cloud infrastructure (for production)

|

| 420 |

+

|

| 421 |

+

Here we will quickly present how to deploy the project to AWS and other serverless services. We won't go into the details (as everything is presented in the book) but only point out the main steps you have to go through.

|

| 422 |

+

|

| 423 |

+

First, reinstall your Python dependencies with the AWS group:

|

| 424 |

+

```bash

|

| 425 |

+

poetry install --with aws

|

| 426 |

+

```

|

| 427 |

+

|

| 428 |

+

#### AWS SageMaker

|

| 429 |

+

|

| 430 |

+

> [!NOTE]

|

| 431 |

+

> Chapter 10 provides step-by-step instructions in the section "Implementing the LLM microservice using AWS SageMaker".

|

| 432 |

+

|

| 433 |

+

By this point, we expect you to have AWS CLI installed and your AWS CLI and project's env vars (within the `.env` file) properly configured with an AWS admin user.

|

| 434 |

+

|

| 435 |

+

To ensure best practices, we must create a new AWS user restricted to creating and deleting only resources related to AWS SageMaker. Create it by running:

|

| 436 |

+

```bash

|

| 437 |

+

poetry poe create-sagemaker-role

|

| 438 |

+

```

|

| 439 |

+

It will create a `sagemaker_user_credentials.json` file at the root of your repository with your new `AWS_ACCESS_KEY` and `AWS_SECRET_KEY` values. **But before replacing your new AWS credentials, also run the following command to create the execution role (to create it using your admin credentials).**

|

| 440 |

+

|

| 441 |

+

To create the IAM execution role used by AWS SageMaker to access other AWS resources on our behalf, run the following:

|

| 442 |

+

```bash

|

| 443 |

+

poetry poe create-sagemaker-execution-role

|

| 444 |

+

```

|

| 445 |

+

It will create a `sagemaker_execution_role.json` file at the root of your repository with your new `AWS_ARN_ROLE` value. Add it to your `.env` file.

|

| 446 |

+

|

| 447 |

+

Once you've updated the `AWS_ACCESS_KEY`, `AWS_SECRET_KEY`, and `AWS_ARN_ROLE` values in your `.env` file, you can use AWS SageMaker. **Note that this step is crucial to complete the AWS setup.**

|

| 448 |

+

|

| 449 |

+

#### Training

|

| 450 |

+

|

| 451 |

+

We start the training pipeline through ZenML by running the following:

|

| 452 |

+

```bash

|

| 453 |

+

poetry poe run-training-pipeline

|

| 454 |

+

```

|

| 455 |

+

This will start the training code using the configs from `configs/training.yaml` directly in SageMaker. You can visualize the results in Comet ML's dashboard.

|

| 456 |

+

|

| 457 |

+

We start the evaluation pipeline through ZenML by running the following:

|

| 458 |

+

```bash

|

| 459 |

+

poetry poe run-evaluation-pipeline

|

| 460 |

+

```

|

| 461 |

+

This will start the evaluation code using the configs from `configs/evaluating.yaml` directly in SageMaker. You can visualize the results in `*-results` datasets saved to your Hugging Face profile.

|

| 462 |

+

|

| 463 |

+

#### Inference

|

| 464 |

+

|

| 465 |

+

To create an AWS SageMaker Inference Endpoint, run:

|

| 466 |

+

```bash

|

| 467 |

+

poetry poe deploy-inference-endpoint

|

| 468 |

+

```

|

| 469 |

+

To test it out, run:

|

| 470 |

+

```bash

|

| 471 |

+

poetry poe test-sagemaker-endpoint

|

| 472 |

+

```

|

| 473 |

+

To delete it, run:

|

| 474 |

+

```bash

|

| 475 |

+

poetry poe delete-inference-endpoint

|

| 476 |

+

```

|

| 477 |

+

|

| 478 |

+

#### AWS: ML pipelines, artifacts, and containers

|

| 479 |

+

|

| 480 |

+

The ML pipelines, artifacts, and containers are deployed to AWS by leveraging ZenML's deployment features. Thus, you must create an account with ZenML Cloud and follow their guide on deploying a ZenML stack to AWS. Otherwise, we provide step-by-step instructions in **Chapter 11**, section **Deploying the LLM Twin's pipelines to the cloud** on what you must do.

|

| 481 |

+

|

| 482 |

+

#### Qdrant & MongoDB

|

| 483 |

+

|

| 484 |

+

We leverage Qdrant's and MongoDB's serverless options when deploying the project. Thus, you can either follow [Qdrant's](https://qdrant.tech/documentation/cloud/create-cluster/) and [MongoDB's](https://www.mongodb.com/resources/products/fundamentals/mongodb-cluster-setup) tutorials on how to create a freemium cluster for each or go through **Chapter 11**, section **Deploying the LLM Twin's pipelines to the cloud** and follow our step-by-step instructions.

|

| 485 |

+

|

| 486 |

+

#### GitHub Actions

|

| 487 |

+

|

| 488 |

+

We use GitHub Actions to implement our CI/CD pipelines. To implement your own, you have to fork our repository and set the following env vars as Actions secrets in your forked repository:

|

| 489 |

+

- `AWS_ACCESS_KEY_ID`

|

| 490 |

+

- `AWS_SECRET_ACCESS_KEY`

|

| 491 |

+

- `AWS_ECR_NAME`

|

| 492 |

+

- `AWS_REGION`

|

| 493 |

+

|

| 494 |

+

Also, we provide instructions on how to set everything up in **Chapter 11**, section **Adding LLMOps to the LLM Twin**.

|

| 495 |

+

|

| 496 |

+

#### Comet ML & Opik

|

| 497 |

+

|

| 498 |

+

You can visualize the results on their self-hosted dashboards if you create a Comet account and correctly set the `COMET_API_KEY` env var. As Opik is powered by Comet, you don't have to set up anything else along Comet:

|

| 499 |

+

- [Comet ML (for experiment tracking)](https://www.comet.com/)

|

| 500 |

+

- [Opik (for prompt monitoring)](https://www.comet.com/opik)

|

| 501 |

+

|

| 502 |

+

## ⚡ Pipelines

|

| 503 |

+

|

| 504 |

+

All the ML pipelines will be orchestrated behind the scenes by [ZenML](https://www.zenml.io/). A few exceptions exist when running utility scrips, such as exporting or importing from the data warehouse.

|

| 505 |

+

|

| 506 |

+

The ZenML pipelines are the entry point for most processes throughout this project. They are under the `pipelines/` folder. Thus, when you want to understand or debug a workflow, starting with the ZenML pipeline is the best approach.

|

| 507 |

+

|

| 508 |

+

To see the pipelines running and their results:

|

| 509 |

+

- go to your ZenML dashboard

|

| 510 |

+

- go to the `Pipelines` section

|

| 511 |

+

- click on a specific pipeline (e.g., `feature_engineering`)

|

| 512 |

+

- click on a specific run (e.g., `feature_engineering_run_2024_06_20_18_40_24`)

|

| 513 |

+

- click on a specific step or artifact of the DAG to find more details about it

|

| 514 |

+

|

| 515 |

+

Now, let's explore all the pipelines you can run. From data collection to training, we will present them in their natural order to go through the LLM project end-to-end.

|

| 516 |

+

|

| 517 |

+

### Data pipelines

|

| 518 |

+

|

| 519 |

+

Run the data collection ETL:

|

| 520 |

+

```bash

|

| 521 |

+

poetry poe run-digital-data-etl

|

| 522 |

+

```

|

| 523 |

+

|

| 524 |

+

> [!WARNING]

|

| 525 |

+

> You must have Chrome (or another Chromium-based browser) installed on your system for LinkedIn and Medium crawlers to work (which use Selenium under the hood). Based on your Chrome version, the Chromedriver will be automatically installed to enable Selenium support. Another option is to run everything using our Docker image if you don't want to install Chrome. For example, to run all the pipelines combined you can run `poetry poe run-docker-end-to-end-data-pipeline`. Note that the command can be tweaked to support any other pipeline.

|

| 526 |

+

>

|

| 527 |

+

> If, for any other reason, you don't have a Chromium-based browser installed and don't want to use Docker, you have two other options to bypass this Selenium issue:

|

| 528 |

+

> - Comment out all the code related to Selenium, Chrome and all the links that use Selenium to crawl them (e.g., Medium), such as the `chromedriver_autoinstaller.install()` command from [application.crawlers.base](https://github.com/PacktPublishing/LLM-Engineers-Handbook/blob/main/llm_engineering/application/crawlers/base.py) and other static calls that check for Chrome drivers and Selenium.

|

| 529 |

+

> - Install Google Chrome using your CLI in environments such as GitHub Codespaces or other cloud VMs using the same command as in our [Docker file](https://github.com/PacktPublishing/LLM-Engineers-Handbook/blob/main/Dockerfile#L10).

|

| 530 |

+

|

| 531 |

+

To add additional links to collect from, go to `configs/digital_data_etl_[author_name].yaml` and add them to the `links` field. Also, you can create a completely new file and specify it at run time, like this: `python -m llm_engineering.interfaces.orchestrator.run --run-etl --etl-config-filename configs/digital_data_etl_[your_name].yaml`

|

| 532 |

+

|

| 533 |

+

Run the feature engineering pipeline:

|

| 534 |

+

```bash

|

| 535 |

+

poetry poe run-feature-engineering-pipeline

|

| 536 |

+

```

|

| 537 |

+

|

| 538 |

+

Generate the instruct dataset:

|

| 539 |

+

```bash

|

| 540 |

+

poetry poe run-generate-instruct-datasets-pipeline

|

| 541 |

+

```

|

| 542 |

+

|

| 543 |

+

Generate the preference dataset:

|

| 544 |

+

```bash

|

| 545 |

+

poetry poe run-generate-preference-datasets-pipeline

|

| 546 |

+

```

|

| 547 |

+

|

| 548 |

+

Run all of the above compressed into a single pipeline:

|

| 549 |

+

```bash

|

| 550 |

+

poetry poe run-end-to-end-data-pipeline

|

| 551 |

+

```

|

| 552 |

+

|

| 553 |

+

### Utility pipelines

|

| 554 |

+

|

| 555 |

+

Export the data from the data warehouse to JSON files:

|

| 556 |

+

```bash

|

| 557 |

+

poetry poe run-export-data-warehouse-to-json

|

| 558 |

+

```

|

| 559 |

+

|

| 560 |

+

Import data to the data warehouse from JSON files (by default, it imports the data from the `data/data_warehouse_raw_data` directory):

|

| 561 |

+

```bash

|

| 562 |

+

poetry poe run-import-data-warehouse-from-json

|

| 563 |

+

```

|

| 564 |

+

|

| 565 |

+

Export ZenML artifacts to JSON:

|

| 566 |

+

```bash

|

| 567 |

+

poetry poe run-export-artifact-to-json-pipeline

|

| 568 |

+

```

|

| 569 |

+

|

| 570 |

+

This will export the following ZenML artifacts to the `output` folder as JSON files (it will take their latest version):

|

| 571 |

+

- cleaned_documents.json

|

| 572 |

+

- instruct_datasets.json

|

| 573 |

+

- preference_datasets.json

|

| 574 |

+

- raw_documents.json

|

| 575 |

+

|

| 576 |

+

You can configure what artifacts to export by tweaking the `configs/export_artifact_to_json.yaml` configuration file.

|

| 577 |

+

|

| 578 |

+

### Training pipelines

|

| 579 |

+

|

| 580 |

+

Run the training pipeline:

|

| 581 |

+

```bash

|

| 582 |

+

poetry poe run-training-pipeline

|

| 583 |

+

```

|

| 584 |

+

|

| 585 |

+

Run the evaluation pipeline:

|

| 586 |

+

```bash

|

| 587 |

+

poetry poe run-evaluation-pipeline

|

| 588 |

+

```

|

| 589 |

+

|

| 590 |

+

> [!WARNING]

|

| 591 |

+

> For this to work, make sure you properly configured AWS SageMaker as described in [Set up cloud infrastructure (for production)](#set-up-cloud-infrastructure-for-production).

|

| 592 |

+

|

| 593 |

+

### Inference pipelines

|

| 594 |

+

|

| 595 |

+

Call the RAG retrieval module with a test query:

|

| 596 |

+

```bash

|

| 597 |

+

poetry poe call-rag-retrieval-module

|

| 598 |

+

```

|

| 599 |

+

|

| 600 |

+

Start the inference real-time RESTful API:

|

| 601 |

+

```bash

|

| 602 |

+

poetry poe run-inference-ml-service

|

| 603 |

+

```

|

| 604 |

+

|

| 605 |

+

Call the inference real-time RESTful API with a test query:

|

| 606 |

+

```bash

|

| 607 |

+

poetry poe call-inference-ml-service

|

| 608 |

+

```

|

| 609 |

+

|

| 610 |

+

Remember that you can monitor the prompt traces on [Opik](https://www.comet.com/opik).

|

| 611 |

+

|

| 612 |

+

> [!WARNING]

|

| 613 |

+

> For the inference service to work, you must have the LLM microservice deployed to AWS SageMaker, as explained in the setup cloud infrastructure section.

|

| 614 |

+

|

| 615 |

+

### Linting & formatting (QA)

|

| 616 |

+

|

| 617 |

+

Check or fix your linting issues:

|

| 618 |

+

```bash

|

| 619 |

+

poetry poe lint-check

|

| 620 |

+

poetry poe lint-fix

|

| 621 |

+

```

|

| 622 |

+

|

| 623 |

+

Check or fix your formatting issues:

|

| 624 |

+

```bash

|

| 625 |

+

poetry poe format-check

|

| 626 |

+

poetry poe format-fix

|

| 627 |

+

```

|

| 628 |

+

|

| 629 |

+

Check the code for leaked credentials:

|

| 630 |

+

```bash

|

| 631 |

+

poetry poe gitleaks-check

|

| 632 |

+

```

|

| 633 |

+

|

| 634 |

+

### Tests

|

| 635 |

+

|

| 636 |

+

Run all the tests using the following command:

|

| 637 |

+

```bash

|

| 638 |

+

poetry poe test

|

| 639 |

+

```

|

| 640 |

+

|

| 641 |

+

## 🏃 Run project

|

| 642 |

+

|

| 643 |

+

Based on the setup and usage steps described above, assuming the local and cloud infrastructure works and the `.env` is filled as expected, follow the next steps to run the LLM system end-to-end:

|

| 644 |

+

|

| 645 |

+

### Data

|

| 646 |

+

|

| 647 |

+

1. Collect data: `poetry poe run-digital-data-etl`

|

| 648 |

+

|

| 649 |

+

2. Compute features: `poetry poe run-feature-engineering-pipeline`

|

| 650 |

+

|

| 651 |

+

3. Compute instruct dataset: `poetry poe run-generate-instruct-datasets-pipeline`

|

| 652 |

+

|

| 653 |

+

4. Compute preference alignment dataset: `poetry poe run-generate-preference-datasets-pipeline`

|

| 654 |

+

|

| 655 |

+

### Training

|

| 656 |

+

|

| 657 |

+

> [!IMPORTANT]

|

| 658 |

+

> From now on, for these steps to work, you need to properly set up AWS SageMaker, such as running `poetry install --with aws` and filling in the AWS-related environment variables and configs.

|

| 659 |

+

|

| 660 |

+

5. SFT fine-tuning Llamma 3.1: `poetry poe run-training-pipeline`

|

| 661 |

+

|

| 662 |

+

6. For DPO, go to `configs/training.yaml`, change `finetuning_type` to `dpo`, and run `poetry poe run-training-pipeline` again

|

| 663 |

+

|

| 664 |

+

7. Evaluate fine-tuned models: `poetry poe run-evaluation-pipeline`

|

| 665 |

+

|

| 666 |

+

### Inference

|

| 667 |

+

|

| 668 |

+

> [!IMPORTANT]

|

| 669 |

+

> From now on, for these steps to work, you need to properly set up AWS SageMaker, such as running `poetry install --with aws` and filling in the AWS-related environment variables and configs.

|

| 670 |

+

|

| 671 |

+

8. Call only the RAG retrieval module: `poetry poe call-rag-retrieval-module`

|

| 672 |

+

|

| 673 |

+

9. Deploy the LLM Twin microservice to SageMaker: `poetry poe deploy-inference-endpoint`

|

| 674 |

+

|

| 675 |

+

10. Test the LLM Twin microservice: `poetry poe test-sagemaker-endpoint`

|

| 676 |

+

|

| 677 |

+

11. Start end-to-end RAG server: `poetry poe run-inference-ml-service`

|

| 678 |

+

|

| 679 |

+

12. Test RAG server: `poetry poe call-inference-ml-service`

|

| 680 |

+

|

| 681 |

+

## 📄 License

|

| 682 |

+

|

| 683 |

+