Committed by huangzhii

Commit · 32486dc

1 Parent(s): 2c7fd20

update

- .gitignore +4 -0
- README.md +5 -5
- app.py +21 -0
- examples/code_editor.json +7 -0
- examples/code_editor_scripts.py +137 -0
- examples/example_imageQA_scripts.py +99 -0
- examples/example_math.json +4 -0
- examples/example_math_scripts.py +80 -0
- examples/mathvista.json +5 -0
- home.py +132 -0
- img/mathvista/176.png +0 -0
- img/mathvista/math_chart.png +0 -0
- img/textgrad_logo_1x.png +0 -0
- requirements.txt +6 -0

.gitignore

ADDED

@@ -0,0 +1,4 @@
+__pycache__
+*.pyc
+/logs
+*.jsonl

README.md

CHANGED

@@ -1,10 +1,10 @@
 ---
-title: Demo
-emoji:
-colorFrom:
-colorTo:
+title: Beginner Demo
+emoji: 👀
+colorFrom: purple
+colorTo: green
 sdk: streamlit
-sdk_version: 1.
+sdk_version: 1.36.0
 app_file: app.py
 pinned: false
 license: mit

app.py

ADDED

@@ -0,0 +1,21 @@
+import home
+import streamlit as st
+
+st.set_page_config(layout="wide")
+st.sidebar.image('assets/img/textgrad_logo_1x.png', width=200)
+st.sidebar.title("TextGrad Playground")
+st.sidebar.markdown("## Menu")
+
+PAGES = {
+    "🏠 Home": home,
+}
+
+page = st.sidebar.radio("", list(PAGES.keys()))
+st.sidebar.markdown("## Links")
+
+st.sidebar.markdown("""
+📖 [Paper](https://arxiv.org/abs/2406.07496)
+👨💻 [Code](https://github.com/zou-group/textgrad)
+⚙️ [API documentation](https://textgrad.readthedocs.io/en/latest/index.html)""")
+
+PAGES[page].app()
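`app.py` routes pages through a dict that maps a sidebar label to a module exposing `app()`. The registry pattern can be sketched without Streamlit as follows. The `types.SimpleNamespace` objects are hypothetical stand-ins for page modules, and the `about` page and the `render` helper are invented for illustration; neither is part of this commit.

```python
from types import SimpleNamespace

# Hypothetical stand-ins for page modules; each one exposes an app() callable.
home = SimpleNamespace(app=lambda: "home page rendered")
about = SimpleNamespace(app=lambda: "about page rendered")

# Label -> page object, mirroring the PAGES dict in app.py.
PAGES = {"Home": home, "About": about}


def render(selected: str) -> str:
    """Look up the selected label and call that page's app()."""
    return PAGES[selected].app()


print(render("Home"))
```

Adding a page under this pattern only requires a new dict entry, which is presumably why the commit keeps `PAGES` even though it currently holds a single page.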

examples/code_editor.json

ADDED

@@ -0,0 +1,7 @@
+{
+    "default_initial_solution": "def longest_increasing_subsequence(nums):\n    n = len(nums)\n    dp = [1] * n\n    \n    for i in range(1, n):\n        for j in range(i):\n            if nums[i] > nums[j]:\n                dp[i] = max(dp[i], dp[j] + 1)\n    \n    max_length = max(dp)\n    lis = []\n    \n    for i in range(n - 1, -1, -1):\n        if dp[i] == max_length:\n            lis.append(nums[i])\n            max_length -= 1\n    \n    return len(lis[::-1])",
+    "default_target_solution": "import bisect\n\ndef longest_increasing_subsequence(nums):\n    if not nums:\n        return 0\n    \n    tails = []\n    \n    for num in nums:\n        pos = bisect.bisect_left(tails, num)\n        if pos == len(tails):\n            tails.append(num)\n        else:\n            tails[pos] = num\n    \n    return len(tails)",
+    "default_loss_system_prompt": "You are a smart language model that evaluates code snippets. You do not solve problems or propose new code snippets, only evaluate existing solutions critically and give very concise feedback.",
+    "default_problem_description": "Longest Increasing Subsequence (LIS)\n\nProblem Statement:\nGiven a sequence of integers, find the length of the longest subsequence that is strictly increasing. A subsequence is a sequence that can be derived from another sequence by deleting some or no elements without changing the order of the remaining elements.\n\nInput:\nThe input consists of a list of integers representing the sequence.\n\nOutput:\nThe output should be an integer representing the length of the longest increasing subsequence.",
+    "instruction": "Think about the problem and the code snippet. Does the code solve the problem? What is the runtime complexity?"
+}
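The two solutions stored in `code_editor.json` can be compared directly. The sketch below copies both functions under the hypothetical names `lis_quadratic` and `lis_bisect` (the renaming and the sample inputs are assumptions added for illustration, not part of the commit) and runs them side by side. One observation comes from reading the code rather than from the source: the initial solution calls `max(dp)` on an empty list, so it raises `ValueError` for empty input, which the target solution guards against.

```python
import bisect


def lis_quadratic(nums):
    """Initial solution from code_editor.json: O(n^2) dynamic programming."""
    n = len(nums)
    dp = [1] * n
    for i in range(1, n):
        for j in range(i):
            if nums[i] > nums[j]:
                dp[i] = max(dp[i], dp[j] + 1)
    max_length = max(dp)  # raises ValueError when nums is empty
    lis = []
    for i in range(n - 1, -1, -1):
        if dp[i] == max_length:
            lis.append(nums[i])
            max_length -= 1
    return len(lis[::-1])


def lis_bisect(nums):
    """Target solution from code_editor.json: O(n log n) with binary search."""
    if not nums:
        return 0
    tails = []  # tails[k] = smallest tail of an increasing subsequence of length k + 1
    for num in nums:
        pos = bisect.bisect_left(tails, num)
        if pos == len(tails):
            tails.append(num)
        else:
            tails[pos] = num
    return len(tails)


# Hypothetical sample inputs for a side-by-side comparison.
for case in ([10, 9, 2, 5, 3, 7, 101, 18], [7, 7, 7], [1, 2, 3]):
    print(case, lis_quadratic(case), lis_bisect(case))
```

Both functions return the same length on these inputs, so the difference the loss prompt is expected to surface is runtime complexity and empty-input handling rather than the answer itself.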

examples/code_editor_scripts.py

ADDED

@@ -0,0 +1,137 @@
+import streamlit as st
+from streamlit_elements import elements, mui, editor, dashboard
+from stqdm import stqdm
+import textgrad as tg
+import os
+
+class CodeEditor:
+    def __init__(self, data) -> None:
+        self.data = data
+        self.llm_engine = tg.get_engine("gpt-4o")
+        print("="*50, "init", "="*50)
+        self.loss_value = ""
+        self.code_gradients = ""
+        if 'iteration' not in st.session_state:
+            st.session_state.iteration = 0
+        if 'results' not in st.session_state:
+            st.session_state.results = []
+        tg.set_backward_engine(self.llm_engine, override=True)
+
+
+    def load_layout(self):
+        col1, col2 = st.columns([1, 1])
+        with col1:
+            self.problem = st.text_area("Problem description:", self.data["default_problem_description"], height=300)
+        with col2:
+            self.loss_system_prompt = st.text_area("Loss system prompt:", self.data["default_loss_system_prompt"], height=150)
+            self.instruction = st.text_area("Instruction for formatted LLM call:", self.data["instruction"], height=100)
+
+        if "code_content" not in st.session_state:
+            st.session_state.code_content = self.data["default_initial_solution"]
+
+        def update_code_content(value):
+            st.session_state.code_content = value
+
+
+        col1, col2 = st.columns(2)
+        with col1:
+            with elements("monaco_editors_1"):
+                mui.Typography("Initial Solution:", sx={"fontSize": "20px", "fontWeight": "bold"})
+                editor.Monaco(
+                    height=300,
+                    defaultLanguage="python",
+                    defaultValue=st.session_state.code_content,
+                    onChange=update_code_content
+                )
+        with col2:
+            with elements("monaco_editors_2"):
+                mui.Typography("Current Solution:", sx={"fontSize": "20px", "fontWeight": "bold"})
+                editor.Monaco(
+                    height=300,
+                    defaultLanguage="python",
+                    value=st.session_state.code_content,
+                    options={"readOnly": True}  # Make the editor read-only
+                )
+
+
+        # format_string = f"{instruction}\nProblem: {problem}\nCurrent Code: {st.session_state.code_content}"
+        # mui.Typography(format_string)
+
+        # mui.Typography("Final Snippet vs. Current Solution:", sx={"fontSize": "20px", "fontWeight": "bold"})
+        # editor.MonacoDiff(
+        #     original=self.data["default_target_solution"],
+        #     modified=st.session_state.code_content,
+        #     height=300,
+        # )
+
+
+
+    def _run(self):
+        # Code is the variable of interest we want to optimize -- so requires_grad=True
+        solution = st.session_state.code_content
+        code = tg.Variable(value=solution,
+                           requires_grad=True,
+                           role_description="code instance to optimize")
+
+        # We are not interested in optimizing the problem -- so requires_grad=False
+        problem = tg.Variable(self.problem,
+                              requires_grad=False,
+                              role_description="the coding problem")
+
+        # Let TGD know to update code!
+        optimizer = tg.TGD(parameters=[code])
+
+
+        instruction = self.instruction
+        llm_engine = self.llm_engine
+        loss_system_prompt = self.loss_system_prompt
+        loss_system_prompt = tg.Variable(loss_system_prompt, requires_grad=False, role_description="system prompt to the loss function")
+
+        format_string = "{instruction}\nProblem: {{problem}}\nCurrent Code: {{code}}"
+        format_string = format_string.format(instruction=self.instruction)
+
+        fields = {"problem": None, "code": None}
+        formatted_llm_call = tg.autograd.FormattedLLMCall(engine=self.llm_engine,
+                                                          format_string=format_string,
+                                                          fields=fields,
+                                                          system_prompt=loss_system_prompt)
+        # Finally, the loss function
+        def loss_fn(problem: tg.Variable, code: tg.Variable) -> tg.Variable:
+            inputs = {"problem": problem, "code": code}
+
+            return formatted_llm_call(inputs=inputs,
+                                      response_role_description=f"evaluation of the {code.get_role_description()}")
+        loss = loss_fn(problem, code)
+        self.loss_value = loss.value
+        self.graph = loss.generate_graph()
+
+        loss.backward()
+        self.gradients = code.gradients
+        optimizer.step()  # Let's update the code
+
+        st.session_state.code_content = code.value
+
+    def show_results(self):
+        self._run()
+        st.session_state.iteration += 1
+        st.session_state.results.append({
+            'iteration': st.session_state.iteration,
+            'loss_value': self.loss_value,
+            'gradients': self.gradients
+        })
+
+        tabs = st.tabs([f"Iteration {i+1}" for i in range(st.session_state.iteration)])
+
+        for i, tab in enumerate(tabs):
+            with tab:
+                result = st.session_state.results[i]
+                st.markdown(f"Current iteration: **{result['iteration']}**")
+                col1, col2 = st.columns([1, 1])
+                with col1:
+                    st.markdown("## Loss value")
+                    st.markdown(result['loss_value'])
+                with col2:
+                    st.markdown("## Code gradients")
+                    for j, g in enumerate(result['gradients']):
+                        st.markdown(f"### Gradient {j}")
+                        st.markdown(g.value)
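The `format_string` in `_run` is built in two stages: `str.format` fills `{instruction}` immediately, while the doubled braces `{{problem}}` and `{{code}}` collapse to single-brace placeholders that are left for a later substitution. A plain-Python sketch of that behaviour follows. The second `.format` call is only a stand-in for whatever substitution `FormattedLLMCall` performs internally (that mechanism is assumed here, not shown in this commit), and the sample values are hypothetical.

```python
template = "{instruction}\nProblem: {{problem}}\nCurrent Code: {{code}}"

# Stage 1: fill only the instruction. Doubled braces become single braces,
# so {problem} and {code} survive as placeholders.
stage1 = template.format(instruction="Does the code solve the problem?")
print(stage1)

# Stage 2: a stand-in for the later substitution of the remaining fields.
# Hypothetical sample values; note that an instruction containing literal
# braces would need escaping before this step.
stage2 = stage1.format(problem="LIS", code="def f(): ...")
print(stage2)
```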

examples/example_imageQA_scripts.py

ADDED

@@ -0,0 +1,99 @@
+import streamlit as st
+from streamlit_elements import elements, mui, editor, dashboard
+from stqdm import stqdm
+import textgrad as tg
+import os
+from PIL import Image
+from textgrad.autograd import MultimodalLLMCall
+from textgrad.loss import ImageQALoss
+from io import BytesIO
+
+class ImageQA:
+    def __init__(self, data) -> None:
+        self.data = data
+        self.llm_engine = tg.get_engine("gpt-4o")
+        print("="*50, "init", "="*50)
+        self.loss_value = ""
+        self.gradients = ""
+        if 'iteration' not in st.session_state:
+            st.session_state.iteration = 0
+            st.session_state.results = []
+        tg.set_backward_engine(self.llm_engine, override=True)
+
+    def load_layout(self):
+        st.markdown(f"**This is a solution optimization for image QA.**")
+        col1, col2 = st.columns([1, 1])
+        with col1:
+            uploaded_file = st.file_uploader("Upload an image", type=["png", "jpg", "jpeg"])
+            if uploaded_file is not None:
+                image = Image.open(uploaded_file)
+                st.image(image, caption="Uploaded Image")
+            else:
+                image_url = self.data["image_URL"]
+                image = Image.open(image_url)
+                st.image(image_url, caption="Default: MathVista image")
+
+            img_byte_arr = BytesIO()
+            image.save(img_byte_arr, format='PNG')  # You can choose the format you want
+            img_byte_arr = img_byte_arr.getvalue()
+            self.image_variable = tg.Variable(img_byte_arr, role_description="image to answer a question about", requires_grad=False)
+        with col2:
+            question_text = st.text_area("Question:", self.data["question_text"], height=150)
+            self.question_variable = tg.Variable(question_text, role_description="question", requires_grad=False)
+            self.evaluation_instruction_text = st.text_area("Evaluation instruction:", self.data["evaluation_instruction"], height=100)
+
+        self.loss_fn = ImageQALoss(
+            evaluation_instruction=self.evaluation_instruction_text,
+            engine="gpt-4o",
+        )
+        if "current_response" not in st.session_state:
+            st.session_state.current_response = ""
+
+
+    def _run(self):
+        # Set up the textgrad variables
+        self.response = MultimodalLLMCall("gpt-4o")([
+            self.image_variable,
+            self.question_variable
+        ])
+
+        optimizer = tg.TGD(parameters=[self.response])
+
+        loss = self.loss_fn(question=self.question_variable, image=self.image_variable, response=self.response)
+        self.loss_value = loss.value
+        # self.graph = loss.generate_graph()
+
+        loss.backward()
+        self.gradients = self.response.gradients
+
+        optimizer.step()  # Let's update the response
+        st.session_state.current_response = self.response.value
+
+    def show_results(self):
+        self._run()
+        st.session_state.iteration += 1
+        st.session_state.results.append({
+            'iteration': st.session_state.iteration,
+            'loss_value': self.loss_value,
+            'response': self.response.value,
+            'gradients': self.gradients
+        })
+
+        tabs = st.tabs([f"Iteration {i+1}" for i in range(st.session_state.iteration)])
+
+        for i, tab in enumerate(tabs):
+            with tab:
+                result = st.session_state.results[i]
+                st.markdown(f"Current iteration: **{result['iteration']}**")
+                st.markdown("## Current solution:")
+                st.markdown(result['response'])
+
+                col1, col2 = st.columns([1, 1])
+                with col1:
+                    st.markdown("## Loss value")
+                    st.markdown(result['loss_value'])
+                with col2:
+                    st.markdown("## Code gradients")
+                    for j, g in enumerate(result['gradients']):
+                        st.markdown(f"### Gradient")
+                        st.markdown(g.value)

examples/example_math.json

ADDED

@@ -0,0 +1,4 @@
+{
+    "default_initial_solution": "To solve the equation 3x^2 - 7x + 2 = 0, we use the quadratic formula:\nx = (-b ± √(b^2 - 4ac)) / 2a\na = 3, b = -7, c = 2\nx = (7 ± √((-7)^2 + 4(3)(2))) / 6\nx = (7 ± √73) / 6\nThe solutions are:\nx1 = (7 + √73)\nx2 = (7 - √73)",
+    "default_loss_system_prompt": "You will evaluate a solution to a math question.\nDo not attempt to solve it yourself, do not give a solution, only identify errors. Be super concise."
+}
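The default initial solution above is the text the demo starts optimizing, and it appears to contain slips that a critic could flag. This reading is an assumption, since the commit does not say they are deliberate: the discriminant is written with `+ 4(3)(2)` although the formula two lines earlier uses `- 4ac`, and the final roots drop the division by `2a`. A short check of the arithmetic, using only the coefficients given in the JSON:

```python
import math

# Coefficients of 3x^2 - 7x + 2 = 0, as stated in the JSON.
a, b, c = 3, -7, 2

disc = b * b - 4 * a * c      # 49 - 24 = 25 (the JSON text adds 24 and gets 73)
root = math.sqrt(disc)        # 5.0

x1 = (-b + root) / (2 * a)    # (7 + 5) / 6
x2 = (-b - root) / (2 * a)    # (7 - 5) / 6

print(disc, x1, x2)
```

Substituting either root back into the polynomial gives zero up to floating-point error, which is a quick way to confirm a corrected solution after an optimization step.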

examples/example_math_scripts.py

ADDED

@@ -0,0 +1,80 @@
+import streamlit as st
+from streamlit_elements import elements, mui, editor, dashboard
+from stqdm import stqdm
+import textgrad as tg
+import os
+
+
+class MathSolution:
+    def __init__(self, data) -> None:
+        self.data = data
+        self.llm_engine = tg.get_engine("gpt-4o")
+        print("="*50, "init", "="*50)
+        self.loss_value = ""
+        self.gradients = ""
+        if 'iteration' not in st.session_state:
+            st.session_state.iteration = 0
+            st.session_state.results = []
+        tg.set_backward_engine(self.llm_engine, override=True)
+
+    def load_layout(self):
+        col1, col2 = st.columns([1, 1])
+        with col1:
+            self.initial_solution = st.text_area("Initial solution:", self.data["default_initial_solution"], height=300)
+        with col2:
+            self.loss_system_prompt = st.text_area("Loss system prompt:", self.data["default_loss_system_prompt"], height=300)
+
+        if "current_solution" not in st.session_state:
+            st.session_state.current_solution = self.data["default_initial_solution"]
+
+
+    def _run(self):
+        # Set up the textgrad variables
+        current_solution = st.session_state.current_solution
+
+        self.response = tg.Variable(current_solution,
+                                    requires_grad=True,
+                                    role_description="solution to the math question")
+
+        loss_fn = tg.TextLoss(tg.Variable(self.loss_system_prompt,
+                                          requires_grad=False,
+                                          role_description="system prompt"))
+        optimizer = tg.TGD([self.response])
+
+        loss = loss_fn(self.response)
+        self.loss_value = loss.value
+        self.graph = loss.generate_graph()
+
+        loss.backward()
+        self.gradients = self.response.gradients
+
+        optimizer.step()  # Let's update the solution
+        st.session_state.current_solution = self.response.value
+
+    def show_results(self):
+        self._run()
+        st.session_state.iteration += 1
+        st.session_state.results.append({
+            'iteration': st.session_state.iteration,
+            'loss_value': self.loss_value,
+            'response': self.response.value,
+            'gradients': self.gradients
+        })
+
+        tabs = st.tabs([f"Iteration {i+1}" for i in range(st.session_state.iteration)])
+
+        for i, tab in enumerate(tabs):
+            with tab:
+                result = st.session_state.results[i]
+                st.markdown(f"Current iteration: **{result['iteration']}**")
+                st.markdown("## Current solution:")
+                st.markdown(result['response'])
+                col1, col2 = st.columns([1, 1])
+                with col1:
+                    st.markdown("## Loss value")
+                    st.markdown(result['loss_value'])
+                with col2:
+                    st.markdown("## Code gradients")
+                    for j, g in enumerate(result['gradients']):
+                        st.markdown(f"### Gradient")
+                        st.markdown(g.value)

examples/mathvista.json

ADDED

|

@@ -0,0 +1,5 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

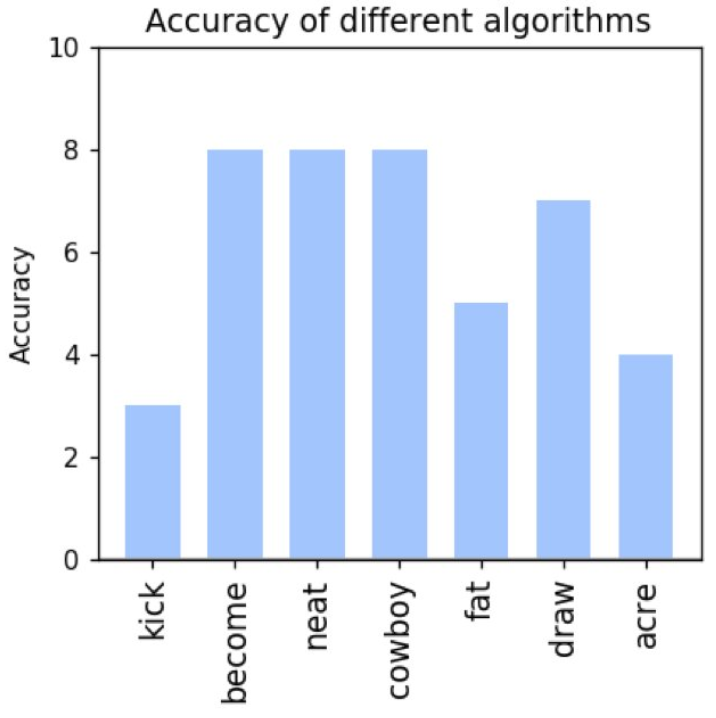

"image_URL": "assets/img/mathvista/math_chart.png",

|

| 3 |

+

"question_text": "What is the sum of the accuracies of the algorithms fat and acre?",

|

| 4 |

+

"evaluation_instruction": "Does this seem like a complete and good answer for the image? Criticize heavily."

|

| 5 |

+

}

|

home.py

ADDED

@@ -0,0 +1,132 @@
+from pathlib import Path
+import streamlit as st
+import streamlit.components.v1 as components
+from PIL import Image
+import base64
+import textgrad as tg
+from stqdm import stqdm
+import json
+import os
+
+def read_markdown_file(markdown_file):
+    return Path(markdown_file).read_text()
+
+def render_svg(svg_filename):
+    with open(svg_filename,"r") as f:
+        lines = f.readlines()
+        svg=''.join(lines)
+    """Renders the given svg string."""
+    b64 = base64.b64encode(svg.encode('utf-8')).decode("utf-8")
+    html = r'<img src="data:image/svg+xml;base64,%s"/>' % b64
+    st.write(html, unsafe_allow_html=True)
+
+def load_json(file_path):
+    with open(file_path, "r") as f:
+        return json.load(f)
+
+def app():
+    st.title('TextGrad: Automatic ''Differentiation'' via Text')
+    st.image('assets/img/textgrad_logo_1x.png', width=200)
+
+    st.markdown("### Examples")
+    examples = {
+        "Code Editor": load_json("examples/code_editor.json"),
+        "Example Math Solution": load_json("examples/example_math.json"),
+        # "🏞️ Image QA": load_json("examples/mathvista.json"),
+    }
+
+    col1, col2 = st.columns([1,1])
+    with col1:
+        # Display clickable examples
+        option = st.selectbox(
+            "Select an example to use:",
+            examples.keys()
+        )
+
+        # Initialize session state if not already done
+        st.session_state.data = examples[option]
+        # if st.button("Clear example selection"):
+        #     st.session_state.data = ""
+        #     option = "None"
+
+        # Initialize session state if not already done
+        if "selected_option" not in st.session_state:
+            st.session_state.selected_option = None
+            st.session_state.object = None
+
+        # Check if the selected option has changed
+        if st.session_state.selected_option != option:
+            st.session_state.data = examples[option]
+            st.session_state.selected_option = option
+            st.session_state.object_initialized = False
+
+        # Display the selected option
+        st.markdown(f"**Example selected:** {option}")
+
+    with col2:
+        valid_api_key = st.session_state.get('valid_api_key', False)
+        form = st.form(key='api_key')
+        OPENAI_API_KEY = form.text_input(label='Enter OpenAI API Key. Keys will not be stored', type = 'password')
+        submit_button = form.form_submit_button(label='Submit')
+
+        if submit_button:
+            os.environ["OPENAI_API_KEY"] = OPENAI_API_KEY
+
+            try:
+                engine = tg.get_engine("gpt-4o")
+                response = engine.generate("hello, what model is this?")
+                st.session_state.valid_api_key = True
+                valid_api_key = True
+            except:
+                st.error("Please enter a valid OpenAI API key.")
+                st.stop()
+
+
+    if valid_api_key:
+        # Initialize the object when the option is selected
+        if not st.session_state.object_initialized:
+            if option == "Example Math Solution":
+                from examples import example_math_scripts
+                st.session_state.iteration = 0
+                st.session_state.object = example_math_scripts.MathSolution(data=st.session_state.get("data", ""))
+            elif option == "Code Editor":
+                from examples import code_editor_scripts
+                st.session_state.iteration = 0
+                st.session_state.object = code_editor_scripts.CodeEditor(data=st.session_state.get("data", ""))
+            # elif option == "🏞️ Image QA":
+            #     from examples import example_imageQA_scripts
+            #     st.session_state.iteration = 0
+            #     st.session_state.object = example_imageQA_scripts.ImageQA(data=st.session_state.get("data", ""))
+            st.session_state.object_initialized = True
+
+        # Ensure object is loaded
+        st.session_state.object.load_layout()
+
+        # Run TextGrad
+        # Add custom CSS to style the button
+        # st.markdown("""
+        # <style>
+        # .large-button {
+        #     font-size: 24px;
+        #     padding: 16px 32px;
+        #     background-color: #007bff;
+        #     color: white;
+        #     border: none;
+        #     border-radius: 4px;
+        #     cursor: pointer;
+        # }
+        # .large-button:hover {
+        #     background-color: #0056b3;
+        # }
+        # </style>
+        # """, unsafe_allow_html=True)
+
+        # # Create a larger button using HTML
+        # if st.markdown('<button class="large-button">Run 1 iteration of TextGrad</button>', unsafe_allow_html=True):
+        if st.button("Run 1 iteration of TextGrad"):
+            st.session_state.object.show_results()
+
+
+    st.markdown("""---""")
+    st.markdown('### Disclaimer')
+    st.markdown("Do not get addicted to TextGrad. It is a powerful tool that can be used for good or evil. Use it responsibly.")
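`render_svg` in `home.py` embeds an SVG by base64-encoding its text into a `data:` URI inside an `<img>` tag. The sketch below isolates that step as a pure function so it can be checked without Streamlit. The name `svg_to_img_tag` and the sample SVG are hypothetical and not part of the commit.

```python
import base64


def svg_to_img_tag(svg: str) -> str:
    """Return an <img> tag whose src is a base64 data URI holding the SVG text."""
    b64 = base64.b64encode(svg.encode("utf-8")).decode("utf-8")
    return '<img src="data:image/svg+xml;base64,%s"/>' % b64


# Hypothetical sample input for illustration.
sample = '<svg xmlns="http://www.w3.org/2000/svg" width="10" height="10"></svg>'
tag = svg_to_img_tag(sample)

# Recover the payload between "base64," and the closing quote to confirm
# the encoding round-trips.
payload = tag.split("base64,")[1].rsplit('"', 1)[0]
print(base64.b64decode(payload).decode("utf-8") == sample)
```

Keeping the encoding separate from the `st.write(..., unsafe_allow_html=True)` call would make the helper testable on its own; whether the demo needs that is a judgment call.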

img/mathvista/176.png

ADDED


img/mathvista/math_chart.png

ADDED


img/textgrad_logo_1x.png

ADDED


requirements.txt

ADDED

@@ -0,0 +1,6 @@
+git+https://github.com/zou-group/textgrad.git
+stqdm
+streamlit_elements
+pillow
+streamlit
+IPython