**Commit ad2cd02** by muzairkhattak (parent: 3b3a347)

added sample images

- app.py +1 -1

- docs/EVALUATION_DATA.md +24 -0

- docs/INSTALL.md +14 -0

- docs/UniMed-DATA.md +305 -0

- docs/sample_images/brain_MRI.jpg +3 -0

- docs/sample_images/ct_scan_right_kidney.jpg +3 -0

- docs/sample_images/ct_scan_right_kidney.tiff +0 -0

- docs/sample_images/retina_glaucoma.jpg +3 -0

- docs/sample_images/tumor_histo_pathology.jpg +3 -0

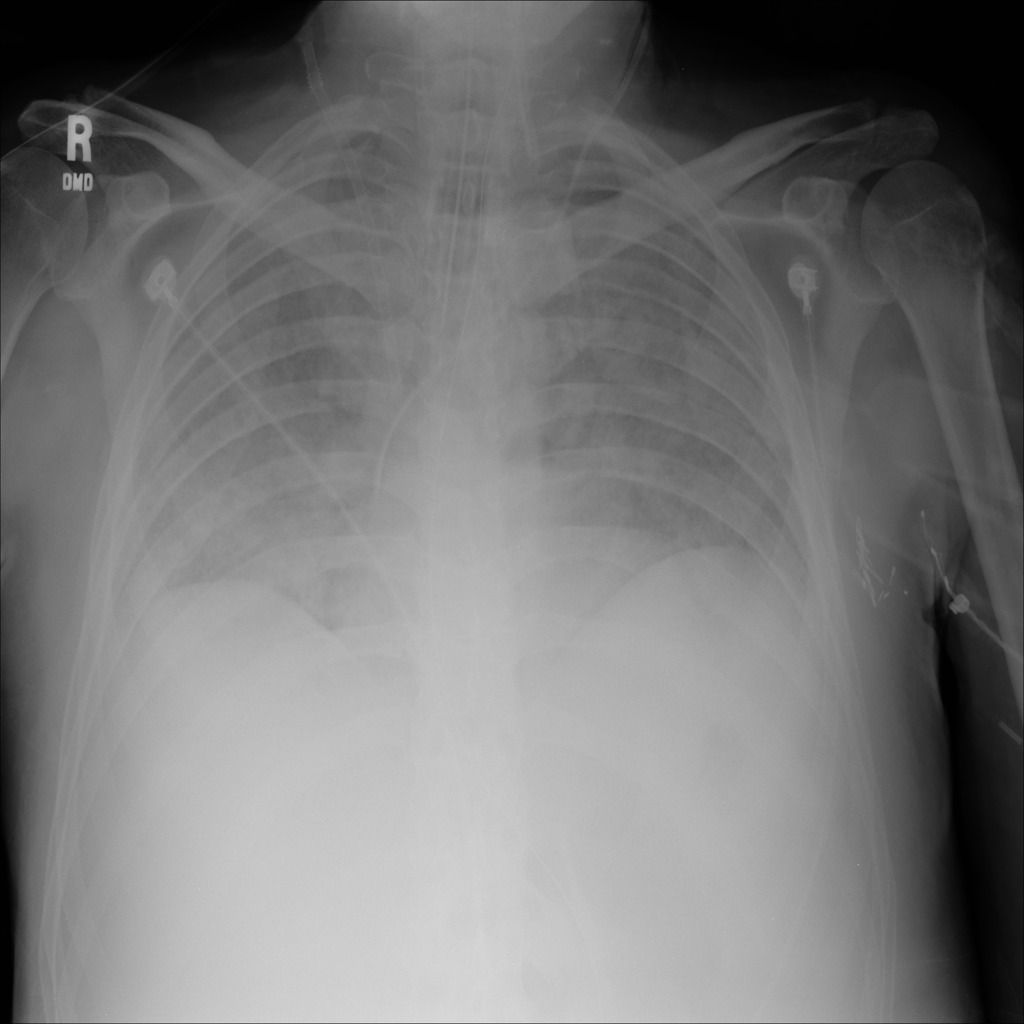

- docs/sample_images/xray_cardiomegaly.jpg +3 -0

- docs/sample_images/xray_pneumonia.png +0 -0

- docs/teaser_photo.svg +0 -0

app.py

CHANGED

    @@ -132,4 +132,4 @@ iface = gr.Interface(shot,
     <b>[DEMO USAGE]</b> To begin with the demo, provide a picture (either upload manually, or select from the given examples) and class labels. Optionally you can also add template as an prefix to the class labels. <br> </p>""",
     title="Zero-shot Medical Image Classification with UniMed-CLIP")
     
    -iface.launch()
    +iface.launch(allowed_paths=["/home/user/app/docs/sample_images"])
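
The change above passes `allowed_paths` to `launch()`, which permits Gradio to serve files (here, the example images) from the listed directory. To illustrate what such an allowlist check involves, the sketch below tests whether a requested file resolves inside one of the allowed directories. `is_path_allowed` is a hypothetical helper written for illustration only. It is not Gradio's internal implementation.

```python
from pathlib import Path


def is_path_allowed(requested: str, allowed_paths: list[str]) -> bool:
    """Return True if `requested` resolves to the allowed path itself or to something inside it.

    Hypothetical helper for illustration; not Gradio's internal code.
    """
    target = Path(requested).resolve()  # normalizes ".." and follows symlinks where they exist
    for allowed in allowed_paths:
        base = Path(allowed).resolve()
        # Compare path components, not string prefixes, so "sample_images_evil/"
        # is not mistaken for a child of "sample_images/".
        if target == base or base in target.parents:
            return True
    return False


ALLOWED = ["/home/user/app/docs/sample_images"]

print(is_path_allowed("/home/user/app/docs/sample_images/brain_MRI.jpg", ALLOWED))  # True
print(is_path_allowed("/home/user/app/docs/sample_images/../../app.py", ALLOWED))   # False
```

Resolving both paths before comparing them is what blocks `..` traversal out of the allowed folder.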

docs/EVALUATION_DATA.md

ADDED

@@ -0,0 +1,24 @@

# Preparing evaluation datasets

This readme provides instructions for downloading the downstream datasets used for zero-shot evaluation.

**About the evaluation datasets:** To evaluate the zero-shot performance of UniMed-CLIP and prior medical VLMs, we use 21 medical datasets that cover 6 diverse modalities. We refer readers to Table 5 (supplementary material) for additional details about the evaluation datasets.

To facilitate quick prototyping, we directly provide the processed evaluation datasets. Download the datasets (zipped form) [from this link](https://mbzuaiac-my.sharepoint.com/:u:/g/personal/uzair_khattak_mbzuai_ac_ae/EdaUYopuq6lLhOTl0by1A9oBs92y56tV1g4iins9QiFwVg?e=WhKzUd).

After downloading and extracting the files, the directory structure looks as follows.

```
extracted_folder/
|-- LC25000_test/
|-- BACH/
|-- chexpert/
|-- organs_coronal/
|-- thyroid_us/
|-- skin_tumor/
|-- organs_sagittal/
|-- fives_retina/
|-- meniscal_mri/
# remaining datasets...
```
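
After extraction, one quick way to confirm the layout is to check which of the expected dataset folders are present. The sketch below lists only the folder names shown in the tree above (the tree elides the remaining datasets, so extend the list for your copy), and `extracted_folder` stands for wherever you unpacked the archive. `missing_datasets` is a hypothetical helper name.

```python
from pathlib import Path

# Folder names taken from the directory tree above; the archive contains further
# datasets that the tree elides, so extend this list as needed.
EXPECTED_DATASETS = [
    "LC25000_test", "BACH", "chexpert", "organs_coronal", "thyroid_us",
    "skin_tumor", "organs_sagittal", "fives_retina", "meniscal_mri",
]


def missing_datasets(root: str, expected: list[str] = EXPECTED_DATASETS) -> list[str]:
    """Return the expected dataset folders that are absent under `root`."""
    root_path = Path(root)
    return [name for name in expected if not (root_path / name).is_dir()]


if __name__ == "__main__":
    import sys

    missing = missing_datasets(sys.argv[1] if len(sys.argv) > 1 else "extracted_folder")
    print("All listed datasets found." if not missing else f"Missing: {missing}")
```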

docs/INSTALL.md

ADDED

@@ -0,0 +1,14 @@

# Installation

This codebase is tested on Ubuntu 20.04.2 LTS with Python 3.10. Follow the steps below to create the environment and install the dependencies.

* Set up a conda environment (recommended).

```bash
# Create a conda environment
conda create -y -n unimed-clip python=3.10
# Activate the environment
conda activate unimed-clip
# Install requirements
pip install -r requirements.txt
```

docs/UniMed-DATA.md

ADDED

@@ -0,0 +1,305 @@

# Preparing UniMed Dataset for Training Medical VLMs

This document provides detailed instructions for preparing the UniMed dataset for pre-training contrastive medical VLMs. Note that although UniMed is developed using fully open-source medical data sources, we are not able to release the processed data directly, as some data sources are subject to strict distribution licenses. Therefore, we provide step-by-step instructions for assembling the UniMed data, and we provide several parts of UniMed for which no licensing obligations apply.

**About the UniMed Pretraining Dataset:** UniMed is a large-scale medical image-text pretraining dataset that explicitly covers 6 diverse medical modalities: X-ray, CT, MRI, ultrasound, histopathology, and retinal fundus. UniMed is developed using completely open-source data sources and comprises over 5.3 million high-quality image-text pairs. Models trained on UniMed (e.g., our UniMed-CLIP) show impressive zero-shot and downstream task performance compared to other generalist VLMs, which are often trained on proprietary/closed-source datasets.

Follow the instructions below to construct the UniMed dataset. We download each part of UniMed independently and, where applicable, prepare its multi-modal version using our processed text captions.

## Downloading Individual Datasets and Converting Them into Image-Text Format

As the first step, download the individual medical datasets from their respective data providers. We suggest putting all datasets under the same folder (say `$DATA`) to ease management. The file structure looks as follows.

```
$DATA/
|-- CheXpert-v1.0-small/
|-- mimic-cxr-jpg/
|-- openi/
|-- chest_xray8/
|-- radimagenet/
|-- Retina-Datasets/
|-- Quilt/
|-- pmc_oa/
|-- ROCOV2/
|-- llava_med/
```

Datasets list:

- [CheXpert](#1-chexpert)
- [MIMIC-CXR](#2-mimic-cxr)
- [OpenI](#3-openi)
- [ChestX-ray8](#4-chestx-ray8)
- [RadImageNet](#5-radimagenet)
- [Retinal-Datasets](#6-retinal-datasets)
- [Quilt-1M](#quilt-1m)
- [PMC-OA](#pmc-oa)
- [ROCO-V2](#roco-v2)
- [LLaVA-Med](#llava-med)

We use the scripts provided in `data_prepration_scripts` to prepare the UniMed dataset. Follow the instructions below.

### 1. CheXpert

#### Downloading Dataset:

- Step 1: Download the dataset from the following [link](https://www.kaggle.com/datasets/ashery/chexpert) on Kaggle.

#### Downloading Annotations:

- Download the processed text annotation file `chexpert_with_captions_only_frontal_view.csv` from this [link](https://mbzuaiac-my.sharepoint.com/:x:/g/personal/uzair_khattak_mbzuai_ac_ae/EYodM9cCJTxNvr_KZsYKz3gB7ozvtdyoqfLhyF59y_UXsw?e=6iOdrQ), and put it in the main dataset folder (`CheXpert-v1.0-small/`).
- The final directory structure should look as follows.

```
CheXpert-v1.0-small/
|-- train/
|-- valid/
|-- train.csv
|-- valid.csv
|-- chexpert_with_captions_only_frontal_view.csv
```

#### Preparing image-text datasets in webdataset format:

- Run the following command to create the image-text dataset:
- `python data_prepration_scripts/CheXpert/webdataset_chexpert.py --csv_file chexpert_with_captions_only_frontal_view.csv --output_dir <path-to-save-all-image-text-datasets>/chexpert_webdataset --parent_dataset_path $DATA/CheXpert-v1.0-small`
- This will prepare the CheXpert image-text data in webdataset format, to be used directly for training.
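
For readers unfamiliar with the output format: a WebDataset shard is a plain tar archive in which files that share a basename key form one sample (for example `000000.jpg` plus `000000.txt`). The sketch below writes (image, caption) pairs into a single shard using only the standard library. It illustrates the convention only. It is not the code in `webdataset_chexpert.py`, and the helper names `write_shard`/`_add_bytes` and the `.txt` caption extension are assumptions.

```python
import io
import tarfile
from pathlib import Path


def _add_bytes(tar: tarfile.TarFile, name: str, data: bytes) -> None:
    """Add an in-memory file called `name` to an open tar archive."""
    info = tarfile.TarInfo(name=name)
    info.size = len(data)
    tar.addfile(info, io.BytesIO(data))


def write_shard(pairs, shard_path: str) -> int:
    """Write (image_path, caption) pairs into one WebDataset-style tar shard.

    Sample i is stored as `<key>.<image extension>` (raw image bytes) plus
    `<key>.txt` (UTF-8 caption); files sharing a key form one sample.
    Returns the number of samples written.
    """
    count = 0
    with tarfile.open(shard_path, "w") as tar:
        for idx, (image_path, caption) in enumerate(pairs):
            key = f"{idx:06d}"
            # Keep the image's own extension (jpg, png, ...) so readers can decode it.
            ext = Path(image_path).suffix.lstrip(".").lower() or "jpg"
            _add_bytes(tar, f"{key}.{ext}", Path(image_path).read_bytes())
            _add_bytes(tar, f"{key}.txt", caption.encode("utf-8"))
            count += 1
    return count
```

Real pipelines typically split a dataset across many shards of bounded size; the `webdataset` package provides a `ShardWriter` class for that purpose.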

### 2. MIMIC-CXR

#### Downloading Dataset:

- Step 1: Follow the instructions at the following [link](https://physionet.org/content/mimic-cxr-jpg/2.1.0/) to get access to the MIMIC-CXR-JPG dataset (note that you must complete a data-usage agreement form to get access to the dataset).
- Step 2: Then, download the 10 folders p10-p19 from this [link](https://physionet.org/content/mimic-cxr-jpg/2.1.0/files/).

#### Downloading Annotations:

- Download the processed text annotation file `mimic_cxr_with_captions_and_reports_only_frontal_view.csv` from this [link](https://mbzuaiac-my.sharepoint.com/:x:/g/personal/uzair_khattak_mbzuai_ac_ae/EVshorDt6OJLp4ZBTsqklSQBaXaGlG184AWVv3dIWfrAkA?e=lPsm7x), and put it in the `files/` folder.
- The final directory structure should look as follows.

```
mimic-cxr-jpg/2.0.0/files/
|-- mimic_cxr_with_captions_and_reports_only_frontal_view.csv
|-- p10/
|-- p11/
|-- p12/
...
|-- p19/
```

#### Preparing image-text datasets in webdataset format:

- Run the following command to create the image-text dataset:
- `python data_prepration_scripts/MIMIC-CXR/webdataset_mimic_cxr.py --csv_file mimic_cxr_with_captions_and_reports_only_frontal_view.csv --output_dir <path-to-save-all-image-text-datasets>/mimic_cxr_webdataset --parent_dataset_path $DATA/mimic-cxr-jpg`
- This will prepare the MIMIC-CXR image-text data in webdataset format, to be used directly for training.

### 3. OpenI

#### Downloading Dataset:

- Step 1: Download the OpenI PNG dataset from the [link](https://openi.nlm.nih.gov/imgs/collections/NLMCXR_png.tgz).

#### Downloading Annotations:

- Download the processed text annotation files `openai_refined_concepts.json` and `filter_cap.json` from this [link](https://mbzuaiac-my.sharepoint.com/:f:/g/personal/uzair_khattak_mbzuai_ac_ae/Es0rzhS3MZNHg1UyB8AWPKgB5D0KcrRSOQOGYM7gDkOmRg?e=gCulCg), and put them in the main folder (`openI/`).
- The final directory structure should look as follows.

```
openI/
|-- openai_refined_concepts.json
|-- filter_cap.json
|-- image/
|-- # image files ...
```

#### Preparing image-text datasets in webdataset format:

- Run the following command to create the image-text dataset:
- `python data_prepration_scripts/Openi/openi_webdataset.py --original_json_file_summarizations_path filter_cap.json --gpt_text_descriptions_path openai_refined_concepts.json --output_dir <path-to-save-all-image-text-datasets>/openi_webdataset --parent_dataset_path $DATA/OpenI/image`
- This will prepare the OpenI image-text data in webdataset format, to be used directly for training.
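
The OpenI download in Step 1 is a gzip-compressed tar archive (`NLMCXR_png.tgz`). Below is a minimal standard-library sketch for unpacking it into the `image/` folder that the command above points to. The destination path is an assumption based on the directory tree, and if the archive turns out to contain a top-level folder you may need to move the PNGs up one level. `extract_openi` is a hypothetical helper name.

```python
import tarfile
from pathlib import Path


def extract_openi(archive_path: str, dest_dir: str) -> int:
    """Extract a .tgz archive into `dest_dir`; return how many PNG files are then under it."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            # Interpreters with extraction filters: reject absolute paths,
            # links escaping `dest`, and other unsafe members.
            tar.extractall(dest, filter="data")
        else:
            # Older interpreters without filters: only extract archives you trust.
            tar.extractall(dest)
    return sum(1 for _ in dest.rglob("*.png"))


# Hypothetical usage (adjust paths to your $DATA layout):
# n_png = extract_openi("NLMCXR_png.tgz", "openI/image")
```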

### 4. ChestX-ray8

#### Downloading Dataset:

- Step 1: Download the images folder from the following [link](https://nihcc.app.box.com/v/ChestXray-NIHCC).

#### Downloading Annotations:

- Download the processed text annotation file `Chest-Xray8_with_captions.csv` from this [link](https://mbzuaiac-my.sharepoint.com/:x:/g/personal/uzair_khattak_mbzuai_ac_ae/EVroaq0FiERErUlJsPwQuaoBprs44EwhHBhVH_TZ-A5PJQ?e=G6z0rf), and put it in the main folder (`chest_xray8/`).
- The final directory structure should look as follows.

```
chest_xray8/
|-- Chest-Xray8_with_captions.csv
|-- images/
|-- # image files ...
```

#### Preparing image-text datasets in webdataset format:

- Run the following command to create the image-text dataset:
- `python data_prepration_scripts/ChestX-ray8/chest-xray_8_webdataset.py --csv_file Chest-Xray8_with_captions.csv --output_dir <path-to-save-all-image-text-datasets>/chest_xray8_webdataset --parent_dataset_path $DATA/chest_xray8/images`
- This will prepare the ChestX-ray8 image-text data in webdataset format, to be used directly for training.

### 5. RadImageNet

#### Downloading Dataset:

- Step 1: Submit a request for the dataset via the [link](https://www.radimagenet.com/).
- Step 2: Download the official dataset-split CSVs from this [link](https://drive.google.com/drive/folders/1FUir_Y_kbQZWih1TMVf9Sz8Pdk9NF2Ym?usp=sharing). (Note that access to the dataset splits is granted only once the dataset-usage request from Step 1 is approved.)

#### Downloading Annotations:

- Download the processed text annotation file `radimagenet_with_captions_training_set.csv` from this [link](https://mbzuaiac-my.sharepoint.com/:x:/g/personal/uzair_khattak_mbzuai_ac_ae/Eaf_k0g3FOlMmz0MkS6LU20BrIpTvsRujXPDmKMWLv6roQ?e=0Po3OI), and put it in the main folder.
- The final directory structure should look as follows.

```
radimagenet/
|-- radiology_ai/
|-- radimagenet_with_captions_training_set.csv
|-- CT
|-- MR
|-- US
```

#### Preparing image-text datasets in webdataset format:

- Run the following command to create the image-text dataset:
- `python data_prepration_scripts/RadImageNet/radimagenet_webdataset.py --csv_file radimagenet_with_captions_training_set.csv --output_dir <path-to-save-all-image-text-datasets>/radimagenet_webdataset --parent_dataset_path $DATA/radimagenet`
- This will prepare the RadImageNet image-text data in webdataset format, to be used directly for training.

### 6. Retinal-Datasets

For the retinal modality, we select 35 retinal datasets and convert the label-only datasets into multi-modal versions using the LLM-in-the-loop pipeline proposed in the paper.

#### Downloading Datasets:

- Part 1: Download the MM-Retinal dataset available from the official [google drive link](https://drive.google.com/drive/folders/177RCtDeA6n99gWqgBS_Sw3WT6qYbzVmy).

- Part 2: Download the datasets listed in the table below to prepare the FLAIR dataset collection (table source: [FLAIR](https://github.com/jusiro/FLAIR/)).

| | | | |
|---|---|---|---|
| [08_ODIR-5K](https://www.kaggle.com/datasets/andrewmvd/ocular-disease-recognition-odir5k) | [15_APTOS](https://www.kaggle.com/competitions/aptos2019-blindness-detection/data) | [35_ScarDat](https://github.com/li-xirong/fundus10k) | [29_AIROGS](https://zenodo.org/record/5793241#.ZDi2vNLMJH5) |
| [09_PAPILA](https://figshare.com/articles/dataset/PAPILA/14798004/1) | [16_FUND-OCT](https://data.mendeley.com/datasets/trghs22fpg/3) | [23_HRF](http://www5.cs.fau.de/research/data/fundus-images/) | [30_SUSTech-SYSU](https://figshare.com/articles/dataset/The_SUSTech-SYSU_dataset_for_automated_exudate_detection_and_diabetic_retinopathy_grading/12570770/1) |
| [03_IDRID](https://idrid.grand-challenge.org/Rules/) | [17_DiaRetDB1](https://www.it.lut.fi/project/imageret/diaretdb1_v2_1/) | [24_ORIGA](https://pubmed.ncbi.nlm.nih.gov/21095735/) | [31_JICHI](https://figshare.com/articles/figure/Davis_Grading_of_One_and_Concatenated_Figures/4879853/1) |
| [04_RFMid](https://ieee-dataport.org/documents/retinal-fundus-multi-disease-image-dataset-rfmid-20) | [18_DRIONS-DB](http://www.ia.uned.es/~ejcarmona/DRIONS-DB.html) | [26_ROC](http://webeye.ophth.uiowa.edu/ROC/) | [32_CHAKSU](https://figshare.com/articles/dataset/Ch_k_u_A_glaucoma_specific_fundus_image_database/20123135?file=38944805) |
| [10_PARAGUAY](https://zenodo.org/record/4647952#.ZBT5xXbMJD9) | [12_ARIA](https://www.damianjjfarnell.com/?page_id=276) | [27_BRSET](https://physionet.org/content/brazilian-ophthalmological/1.0.0/) | [33_DR1-2](https://figshare.com/articles/dataset/Advancing_Bag_of_Visual_Words_Representations_for_Lesion_Classification_in_Retinal_Images/953671?file=6502302) |
| [06_DEN](https://github.com/Jhhuangkay/DeepOpht-Medical-Report-Generation-for-Retinal-Images-via-Deep-Models-and-Visual-Explanation) | [19_Drishti-GS1](http://cvit.iiit.ac.in/projects/mip/drishti-gs/mip-dataset2/Home.php) | [20_E-ophta](https://www.adcis.net/en/third-party/e-ophtha/) | [34_Cataract](https://www.kaggle.com/datasets/jr2ngb/cataractdataset) |
| [11_STARE](https://cecas.clemson.edu/~ahoover/stare/) | [14_AGAR300](https://ieee-dataport.org/open-access/diabetic-retinopathy-fundus-image-datasetagar300) | [21_G1020](https://arxiv.org/abs/2006.09158) | |


#### Downloading Annotations:

- Download the processed text annotations folder `Retina-Annotations` from this [link](https://mbzuaiac-my.sharepoint.com/:f:/g/personal/uzair_khattak_mbzuai_ac_ae/Enxa-lnJAjZOtZHDkGkfLasBGfaxr3Ztb-KlP9cvTRG3OQ?e=Ac8xt9).
- The directory structure should look as follows.

```
Retina-Datasets/
|-- Retina-Annotations/
|-- 03_IDRiD/
|-- 11_STARE/
...
```

#### Preparing image-text datasets in webdataset format:

- Run the following commands to create image-text datasets for the retinal datasets:

```
python data_prepration_scripts/Retinal-Datasets/retina_webdataset_part1.py --csv_files_directory <path-to-csv-files-directory> --output_dir <path-to-save-all-image-text-datasets>/retina_part1_webdataset/ --parent_dataset_path $DATA/Retina-Datasets
python data_prepration_scripts/Retinal-Datasets/retina_webdataset_part2.py --csv_files_directory <path-to-csv-files-directory> --output_dir <path-to-save-all-image-text-datasets>/retina_part2_webdataset/ --parent_dataset_path $DATA/Retina-Datasets
python data_prepration_scripts/Retinal-Datasets/retina_webdataset_part3.py --csv_files_directory <path-to-csv-files-directory> --output_dir <path-to-save-all-image-text-datasets>/retina_part3_webdataset/ --parent_dataset_path $DATA/Retina-Datasets
```

- This will prepare image-text data for the retina modality in webdataset format, to be used directly for training.
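
The three retina commands above differ only in the part number, so they can also be generated and run in a loop. The sketch below builds the same argument lists and runs them with `subprocess`. The arguments `csv_dir`, `output_root`, and `data_root` stand in for `<path-to-csv-files-directory>`, `<path-to-save-all-image-text-datasets>`, and `$DATA`, which you must supply yourself. The helper names `retina_commands`/`run_all` are hypothetical.

```python
import os
import subprocess
import sys


def retina_commands(csv_dir: str, output_root: str, data_root: str) -> list[list[str]]:
    """Build the argument lists for retina_webdataset_part{1,2,3}.py."""
    commands = []
    for part in (1, 2, 3):
        commands.append([
            sys.executable,  # the current interpreter, e.g. the unimed-clip conda env
            f"data_prepration_scripts/Retinal-Datasets/retina_webdataset_part{part}.py",
            "--csv_files_directory", csv_dir,
            "--output_dir", os.path.join(output_root, f"retina_part{part}_webdataset"),
            "--parent_dataset_path", os.path.join(data_root, "Retina-Datasets"),
        ])
    return commands


def run_all(commands: list[list[str]]) -> None:
    """Run each command in order, raising CalledProcessError at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)


# Hypothetical usage, run from the repository root:
# run_all(retina_commands("/path/to/Retina-Annotations", "/path/to/output", os.environ["DATA"]))
```

Passing argument lists (rather than one shell string) avoids quoting problems when paths contain spaces.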

### Quilt-1M

Note: Quilt-1M provides image-text pairs, and we directly utilize its image-text pairs in our pretraining.

#### Downloading Dataset:

- Step 1: Request access to the Quilt-1M dataset via the [link](https://zenodo.org/records/8239942), and then download the dataset.
- The directory structure should look as follows.

```
Quilt/
|-- quilt_1M_lookup.csv
|-- # other files
|-- quilt_1m/
|-- # images
```

#### Preparing image-text datasets in webdataset format:

- Run the following command:
- `python data_prepration_scripts/Quilt-1M/quilt_1m_webdataset.py --csv_file $DATA/Quilt/quilt_1M_lookup.csv --output_dir <path-to-save-all-image-text-datasets>/quilt_1m_webdataset --parent_dataset_path $DATA/Quilt/quilt_1m/`
- This will prepare the Quilt-1M image-text data in webdataset format, to be used directly for training.

### PMC-OA

Note: PMC-OA provides image-text pairs, and we directly utilize its image-text pairs in our UniMed pretraining dataset.

#### Downloading Dataset:

- Step 1: Download the PMC-OA images from the following [link](https://huggingface.co/datasets/axiong/pmc_oa/blob/main/images.zip).
- Step 2: Download the JSONL annotation file `pmc_oa.jsonl` ([link](https://huggingface.co/datasets/axiong/pmc_oa/resolve/main/pmc_oa.jsonl)).
- The directory structure should look as follows.

```
pmc_oa/
|-- pmc_oa.jsonl
|-- caption_T060_filtered_top4_sep_v0_subfigures
|-- # images
|-- # other files
```

#### Preparing image-text datasets in webdataset format:

- Run the following command:
- `python data_prepration_scripts/PMC-OA/pmc_oa_webdataset.py --csv_file $DATA/pmc_oa/pmc_oa.jsonl --output_dir <path-to-save-all-image-text-datasets>/pmc_oa_webdataset/ --parent_dataset_path $DATA/pmc_oa/caption_T060_filtered_top4_sep_v0_subfigures/`
- This will prepare the PMC-OA image-text data in webdataset format, to be used directly for training.
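
`pmc_oa.jsonl` is a JSON Lines file, with one JSON object per line. The sketch below reads it into (image path, caption) pairs and skips records whose image file is absent. The field names `image` and `caption` are an assumption about the file's schema (inspect a line of your copy first), and `load_pmc_oa_pairs` is a hypothetical helper, not the logic of `pmc_oa_webdataset.py`.

```python
import json
from pathlib import Path


def load_pmc_oa_pairs(jsonl_path: str, image_dir: str,
                      image_key: str = "image", caption_key: str = "caption"):
    """Yield (image_path, caption) for each record whose image file exists.

    `image_key` and `caption_key` are assumed field names; adjust them to match the file.
    """
    image_root = Path(image_dir)
    with open(jsonl_path, encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if not line:
                continue  # tolerate blank lines
            record = json.loads(line)
            image_path = image_root / record[image_key]
            if image_path.is_file():
                yield str(image_path), record[caption_key]
```

Reading line by line keeps memory use flat, which matters for a file with on the order of a million records.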

### ROCO-V2

Note: ROCO-V2 provides image-text pairs, and we directly utilize its image-text pairs in our pretraining.

#### Downloading Dataset:

- Step 1: Download the images and captions from the [link](https://zenodo.org/records/8333645).
- The directory structure should look as follows.

```
ROCOV2/
|-- train/
|-- test/
|-- train_captions.csv
|-- # other files
```

#### Preparing image-text datasets in webdataset format:

- Run the following command:
- `python data_prepration_scripts/ROCOV2/roco_webdataset.py --csv_file $DATA/ROCOV2/train_captions.csv --output_dir <path-to-save-all-image-text-datasets>/rocov2_webdataset/ --parent_dataset_path $DATA/ROCOV2/train/`
- This will prepare the ROCOV2 image-text data in webdataset format, to be used directly for training.
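
Before conversion, it can help to check that every caption row in `train_captions.csv` has a matching image in `train/`. The sketch below assumes the CSV has `ID` and `Caption` columns and that images are stored as `<ID>.jpg`. Both are assumptions about the release format, so verify them against your download. `match_captions` is a hypothetical helper name.

```python
import csv
from pathlib import Path


def match_captions(csv_path: str, image_dir: str,
                   id_col: str = "ID", caption_col: str = "Caption", ext: str = ".jpg"):
    """Split caption rows into (matched (image_path, caption) pairs, IDs with no image file).

    Column names and the image extension are assumptions; adjust them to your copy.
    """
    image_root = Path(image_dir)
    matched, missing = [], []
    with open(csv_path, newline="", encoding="utf-8") as handle:
        for row in csv.DictReader(handle):
            image_path = image_root / f"{row[id_col]}{ext}"
            if image_path.is_file():
                matched.append((str(image_path), row[caption_col]))
            else:
                missing.append(row[id_col])
    return matched, missing
```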

### LLaVA-Med

Note: LLaVA-Med provides image-text pairs, and we directly utilize its image-text pairs in our pretraining.

#### Downloading Dataset:

- Download the images by following the instructions in the official LLaVA-Med repository [here](https://github.com/microsoft/LLaVA-Med?tab=readme-ov-file#data-download).

#### Downloading Annotations:

- Download the filtered caption files `llava_med_instruct_fig_captions.json` and `llava_med_alignment_500k_filtered.json` from this [link](https://mbzuaiac-my.sharepoint.com/:f:/g/personal/uzair_khattak_mbzuai_ac_ae/Es0rzhS3MZNHg1UyB8AWPKgB5D0KcrRSOQOGYM7gDkOmRg?e=gCulCg). The final directory should look like this:

```
llava_med/
|-- llava_med_alignment_500k_filtered.json
|-- llava_med_instruct_fig_captions.json
|-- images
|-- # images
```

#### Preparing image-text datasets in webdataset format:

- Run the following commands:

```
python data_prepration_scripts/LLaVA-Med/llava_med_alignment_webdataset.py --csv_file $DATA/llava_med/llava_med_alignment_500k_filtered.json --output_dir <path-to-save-all-image-text-datasets>/llava_med_alignment_webdataset/ --parent_dataset_path $DATA/llava_med/images/
python data_prepration_scripts/LLaVA-Med/llava_med_instruct_webdataset.py --csv_file $DATA/llava_med/llava_med_instruct_fig_captions.json --output_dir <path-to-save-all-image-text-datasets>/llava_med_instruct_webdataset/ --parent_dataset_path $DATA/llava_med/images/
```

- This will prepare the LLaVA-Med image-text data in webdataset format, to be used directly for training.

## Final Dataset Directory Structure:

After following the above steps, the UniMed dataset will be fully prepared in webdataset format. The final directory structure looks as follows:

```
<path-to-save-all-image-text-datasets>/
|-- chexpert_webdataset/
|-- mimic_cxr_webdataset/
|-- openi_webdataset/
|-- chest_xray8_webdataset/
|-- radimagenet_webdataset/
|-- retina_part1_webdataset/
|-- retina_part2_webdataset/
|-- retina_part3_webdataset/
|-- quilt_1m_webdataset/
|-- pmc_oa_webdataset/
|-- rocov2_webdataset/
|-- llava_med_alignment_webdataset/
|-- llava_med_instruct_webdataset/
```
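
Training code that consumes several WebDataset sources usually needs a list of shard paths. For example, OpenCLIP's `--train-data` argument accepts multiple sources joined with `::`. Whether the UniMed-CLIP training script follows the same convention is an assumption here. The sketch below collects the `.tar` shards from each folder in the final tree and joins them into one string. `collect_shards` and `train_data_arg` are hypothetical helper names.

```python
from pathlib import Path

# Sub-folders from the final directory tree above.
UNIMED_PARTS = [
    "chexpert_webdataset", "mimic_cxr_webdataset", "openi_webdataset",
    "chest_xray8_webdataset", "radimagenet_webdataset",
    "retina_part1_webdataset", "retina_part2_webdataset", "retina_part3_webdataset",
    "quilt_1m_webdataset", "pmc_oa_webdataset", "rocov2_webdataset",
    "llava_med_alignment_webdataset", "llava_med_instruct_webdataset",
]


def collect_shards(root: str, parts: list[str] = UNIMED_PARTS) -> list[str]:
    """Return the sorted .tar shard paths of every sub-folder, in `parts` order.

    Raises FileNotFoundError if a folder is missing or holds no shards, so an
    incomplete preparation step is caught before training starts.
    """
    shards = []
    for part in parts:
        folder = Path(root) / part
        part_shards = sorted(str(p) for p in folder.glob("*.tar"))
        if not part_shards:
            raise FileNotFoundError(f"no .tar shards found in {folder}")
        shards.extend(part_shards)
    return shards


def train_data_arg(shards: list[str]) -> str:
    """Join shard paths with '::', the multi-source separator OpenCLIP accepts."""
    return "::".join(shards)
```

With many shards the joined string gets long; brace-pattern URLs such as `{000000..000099}.tar` are a more compact alternative where the loader supports them.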


docs/sample_images/brain_MRI.jpg

ADDED


docs/sample_images/ct_scan_right_kidney.jpg

ADDED


docs/sample_images/ct_scan_right_kidney.tiff

ADDED


docs/sample_images/retina_glaucoma.jpg

ADDED


docs/sample_images/tumor_histo_pathology.jpg

ADDED


docs/sample_images/xray_cardiomegaly.jpg

ADDED


docs/sample_images/xray_pneumonia.png

ADDED


docs/teaser_photo.svg

ADDED
