Commit 9cecb44

Parent(s): b6ad1b0

Added App with NN

- .gitignore +3 -0
- README.md +3 -0
- app.py +51 -0
- nn.png +0 -0
- nn.py +196 -0
- requirements.txt +1 -0

.gitignore

ADDED

@@ -0,0 +1,3 @@

```gitignore
.venv
__pycache__
*.json
```

README.md

CHANGED

```diff
@@ -10,3 +10,6 @@ pinned: false
 ---
 
 Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
+
+# References
+* https://www.codingame.com/playgrounds/59631/neural-network-xor-example-from-scratch-no-libs
```

app.py

ADDED

|

@@ -0,0 +1,51 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from nn import train, predict, save_model, sigmoid

|

| 3 |

+

|

| 4 |

+

# INPUTS = [[0,0],[0,1],[1,0],[1,1]]

|

| 5 |

+

# OUTPUTS = [[0],[1],[1],[0]]

|

| 6 |

+

# EPOCHS = 1000000

|

| 7 |

+

# ALPHAS = 20

|

| 8 |

+

|

| 9 |

+

INPUTS = [[0,0],[0,1],[1,0],[1,1]]

|

| 10 |

+

OUTPUTS = [[0],[1],[1],[0]]

|

| 11 |

+

|

| 12 |

+

def runNN(epoch, alpha):

|

| 13 |

+

# Train model

|

| 14 |

+

modelo = train(epochs=epoch, alpha=alpha)

|

| 15 |

+

|

| 16 |

+

print(modelo)

|

| 17 |

+

# Save model to file

|

| 18 |

+

save_model(modelo, "modelo.json")

|

| 19 |

+

|

| 20 |

+

for i in range(4):

|

| 21 |

+

result = predict(INPUTS[i][0],INPUTS[i][1], activation=sigmoid)

|

| 22 |

+

st.write("for input", INPUTS[i], "expected", OUTPUTS[i][0], "predicted", f"{result:4.4}", "which is", "correct" if round(result)==OUTPUTS[i][0] else "incorrect")

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

def sidebar():

|

| 27 |

+

# Neural network controls

|

| 28 |

+

st.sidebar.header('Neural Network Controls')

|

| 29 |

+

st.sidebar.text('Number of epochs')

|

| 30 |

+

epochs = st.sidebar.slider('Epochs', 1000, 1000000, 100000)

|

| 31 |

+

st.sidebar.text('Learning rate')

|

| 32 |

+

alphas = st.sidebar.slider('Alphas', 1, 100, 20)

|

| 33 |

+

if st.sidebar.button('Run Neural Network'):

|

| 34 |

+

runNN(epochs, alphas)

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

def app():

|

| 38 |

+

st.title('Simple Neural Network App')

|

| 39 |

+

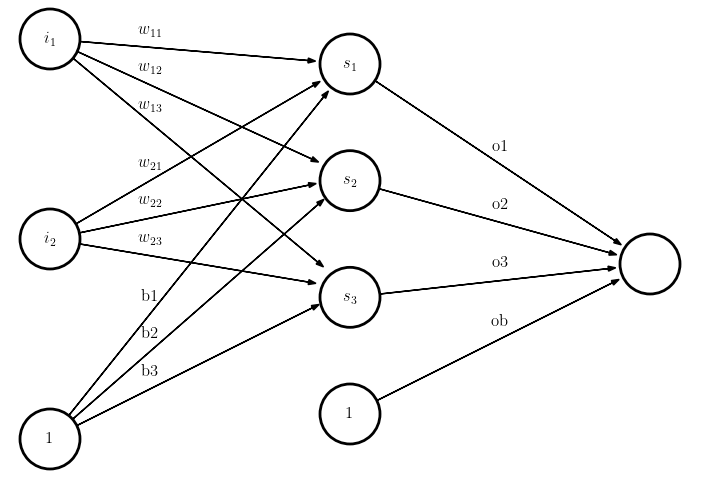

st.write('This is the Neural Network image we are trying to implement!')

|

| 40 |

+

st.image('nn.png', width=500)

|

| 41 |

+

sidebar()

|

| 42 |

+

|

| 43 |

+

st.markdown('''

|

| 44 |

+

### References

|

| 45 |

+

* https://www.codingame.com/playgrounds/59631/neural-network-xor-example-from-scratch-no-libs

|

| 46 |

+

''')

|

| 47 |

+

|

| 48 |

+

if __name__ == '__main__':

|

| 49 |

+

app()

|

| 50 |

+

|

| 51 |

+

|

nn.png

ADDED


nn.py

ADDED

@@ -0,0 +1,196 @@

```python
import random
import math
import json

# XOR truth table used for training and evaluation
INPUTS = [[0,0],[0,1],[1,0],[1,1]]
OUTPUTS = [[0],[1],[1],[0]]
EPOCHS = 1000000
ALPHAS = 20  # default learning rate


WEPOCHS = EPOCHS // 100  # print the training error every WEPOCHS epochs


# Weights start uniform in [-VARIANCE_W, VARIANCE_W]; biases start at VARIANCE_B
VARIANCE_W = 0.5
VARIANCE_B = 0

# Hidden neuron 1
w11 = random.uniform(-VARIANCE_W,VARIANCE_W)
w21 = random.uniform(-VARIANCE_W,VARIANCE_W)
b1 = VARIANCE_B

# Hidden neuron 2
w12 = random.uniform(-VARIANCE_W,VARIANCE_W)
w22 = random.uniform(-VARIANCE_W,VARIANCE_W)
b2 = VARIANCE_B

# Hidden neuron 3
w13 = random.uniform(-VARIANCE_W,VARIANCE_W)
w23 = random.uniform(-VARIANCE_W,VARIANCE_W)
b3 = VARIANCE_B

# Output neuron: one weight per hidden neuron, plus a bias
o1 = random.uniform(-VARIANCE_W,VARIANCE_W)
o2 = random.uniform(-VARIANCE_W,VARIANCE_W)
o3 = random.uniform(-VARIANCE_W,VARIANCE_W)
ob = VARIANCE_B

## All set to 0.5
# VARIANCE_W = 0.5
# VARIANCE_B = 1
# w11 = VARIANCE_W
# w21 = VARIANCE_W
# b1 = VARIANCE_B

# w12 = VARIANCE_W
# w22 = VARIANCE_W
# b2 = VARIANCE_B

# w13 = VARIANCE_W
# w23 = VARIANCE_W
# b3 = VARIANCE_B

# o1 = VARIANCE_W
# o2 = VARIANCE_W
# o3 = VARIANCE_W
# ob = VARIANCE_B
```
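nn.py draws its nine weights with `random.uniform(-VARIANCE_W, VARIANCE_W)` at import time without a seed, so each process starts training from a different point. A minimal sketch, separate from the committed code, of the same initialization drawn from a seeded `random.Random` instance so a run can be repeated; `init_weights` and the dict layout are hypothetical, not part of nn.py.

```python
import random

VARIANCE_W = 0.5  # same range as nn.py
VARIANCE_B = 0    # same bias start as nn.py

# Hypothetical layout: the nine random weights, in the order nn.py draws them,
# and the four biases that nn.py sets to VARIANCE_B.
KEYS_W = ["w11", "w21", "w12", "w22", "w13", "w23", "o1", "o2", "o3"]
KEYS_B = ["b1", "b2", "b3", "ob"]

def init_weights(seed=None):
    """Hypothetical helper: nn.py's initialization from a seedable generator."""
    rng = random.Random(seed)
    params = {k: rng.uniform(-VARIANCE_W, VARIANCE_W) for k in KEYS_W}
    params.update({k: VARIANCE_B for k in KEYS_B})
    return params

a = init_weights(seed=42)
b = init_weights(seed=42)
assert a == b  # same seed, same starting point
assert all(-VARIANCE_W <= a[k] <= VARIANCE_W for k in KEYS_W)
```

Keeping the generator local, rather than calling `random.seed` on the module, leaves the shuffling in `train` unaffected.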

```python
def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_prime(x): # x already sigmoided
    return x * (1 - x)

def relu(x):
    return max(0,x)

def relu_prime(x): # same result for the raw input or the ReLU output
    return 1 if x>0 else 0

def tanh(x):
    return math.tanh(x)

def tanh_prime(x): # x already passed through tanh
    return 1 - x**2

def softmax(x): # e^x / (e^x + 1) is numerically the same as sigmoid(x)
    return math.exp(x) / (math.exp(x) + 1)

def softmax_prime(x): # x already activated
    return x * (1 - x)
```
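The derivative helpers above expect the already-activated value rather than the raw weighted sum: `sigmoid_prime(sigmoid(z))` gives σ(z)(1 − σ(z)), and `tanh_prime(tanh(z))` gives 1 − tanh²(z). Also, `softmax` as written is not a multi-class softmax; e^x/(e^x + 1) is the first component of a two-class softmax over (x, 0), i.e. the logistic sigmoid again. A minimal, self-contained sketch (separate from the committed code) that checks both points with a central finite difference; `numeric_derivative` is a hypothetical helper.

```python
import math

# Copies of the nn.py helpers so this sketch runs on its own.
def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_prime(y):  # y is sigmoid(z), not z
    return y * (1 - y)

def tanh_prime(y):  # y is tanh(z), not z
    return 1 - y ** 2

def softmax(x):  # as written in nn.py
    return math.exp(x) / (math.exp(x) + 1)

def numeric_derivative(f, x, h=1e-6):
    """Central finite-difference estimate of f'(x) (hypothetical helper)."""
    return (f(x + h) - f(x - h)) / (2 * h)

for z in [-2.0, -0.5, 0.0, 0.7, 3.0]:
    # Derivative computed from the activated value matches the numeric slope.
    assert abs(sigmoid_prime(sigmoid(z)) - numeric_derivative(sigmoid, z)) < 1e-8
    assert abs(tanh_prime(math.tanh(z)) - numeric_derivative(math.tanh, z)) < 1e-8
    # The "softmax" helper agrees with the logistic sigmoid.
    assert abs(softmax(z) - sigmoid(z)) < 1e-12
print("derivative conventions check out")
```

Passing the raw weighted sum to `sigmoid_prime` instead would silently give a wrong gradient, which is why `learn` calls it on `output`, `s1`, `s2` and `s3` after activation.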

```python
def predict(i1, i2, activation=sigmoid):
    # Hidden layer: three neurons, each fed by both inputs
    s1 = w11 * i1 + w21 * i2 + b1
    # s1 = sigmoid(s1)
    s1 = activation(s1)
    s2 = w12 * i1 + w22 * i2 + b2
    # s2 = sigmoid(s2)
    s2 = activation(s2)
    s3 = w13 * i1 + w23 * i2 + b3
    # s3 = sigmoid(s3)
    s3 = activation(s3)

    # Output layer: weighted sum of the hidden activations
    output = s1 * o1 + s2 * o2 + s3 * o3 + ob
    # output = sigmoid(output)
    output = activation(output)

    return output


def learn(i1,i2,target, activation, activation_prime, alpha=0.2):
    # One stochastic gradient-descent step on a single training example
    global w11,w21,b1,w12,w22,b2,w13,w23,b3
    global o1,o2,o3,ob

    # Forward pass (same as predict)
    s1 = w11 * i1 + w21 * i2 + b1
    # s1 = sigmoid(s1)
    s1 = activation(s1)
    s2 = w12 * i1 + w22 * i2 + b2
    # s2 = sigmoid(s2)
    s2 = activation(s2)
    s3 = w13 * i1 + w23 * i2 + b3
    # s3 = sigmoid(s3)
    s3 = activation(s3)

    output = s1 * o1 + s2 * o2 + s3 * o3 + ob
    # output = sigmoid(output)
    output = activation(output)

    # Backward pass: delta at the output neuron
    error = target - output
    # derror = error * sigmoid_prime(output)
    derror = error * activation_prime(output)

    # Deltas at the hidden neurons (computed before the output weights change)
    # ds1 = derror * o1 * sigmoid_prime(s1)
    ds1 = derror * o1 * activation_prime(s1)
    # ds2 = derror * o2 * sigmoid_prime(s2)
    ds2 = derror * o2 * activation_prime(s2)
    # ds3 = derror * o3 * sigmoid_prime(s3)
    ds3 = derror * o3 * activation_prime(s3)

    # Update output weights and bias
    o1 += alpha * s1 * derror
    o2 += alpha * s2 * derror
    o3 += alpha * s3 * derror
    ob += alpha * derror

    # Update hidden weights and biases
    w11 += alpha * i1 * ds1
    w21 += alpha * i2 * ds1
    b1 += alpha * ds1
    w12 += alpha * i1 * ds2
    w22 += alpha * i2 * ds2
    b2 += alpha * ds2
    w13 += alpha * i1 * ds3
    w23 += alpha * i2 * ds3
    b3 += alpha * ds3
```
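With the sigmoid activation that `train` passes in, `learn` moves every parameter against the gradient of the half squared error ½(target − output)²: each `+= alpha * ...` line adds alpha times the negative gradient. A minimal sketch, separate from the committed code, that restates the same forward pass and the same delta terms (`derror`, `ds1`..`ds3`) without module globals and compares them with finite differences. Packing the 13 scalars into a dict and the names `forward`, `loss`, `gradients` and `numeric_gradients` are assumptions for illustration.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical packing of nn.py's 13 globals into one dict.
KEYS = ["w11", "w21", "b1", "w12", "w22", "b2", "w13", "w23", "b3",
        "o1", "o2", "o3", "ob"]

def forward(p, i1, i2):
    """Same forward pass as nn.predict with activation=sigmoid."""
    s1 = sigmoid(p["w11"] * i1 + p["w21"] * i2 + p["b1"])
    s2 = sigmoid(p["w12"] * i1 + p["w22"] * i2 + p["b2"])
    s3 = sigmoid(p["w13"] * i1 + p["w23"] * i2 + p["b3"])
    out = sigmoid(s1 * p["o1"] + s2 * p["o2"] + s3 * p["o3"] + p["ob"])
    return s1, s2, s3, out

def loss(p, i1, i2, target):
    """Half squared error for one example."""
    return 0.5 * (target - forward(p, i1, i2)[3]) ** 2

def gradients(p, i1, i2, target):
    """dLoss/dparam built from the same deltas that nn.learn uses."""
    s1, s2, s3, out = forward(p, i1, i2)
    derror = (target - out) * out * (1 - out)
    ds1 = derror * p["o1"] * s1 * (1 - s1)
    ds2 = derror * p["o2"] * s2 * (1 - s2)
    ds3 = derror * p["o3"] * s3 * (1 - s3)
    step = {"o1": s1 * derror, "o2": s2 * derror, "o3": s3 * derror, "ob": derror,
            "w11": i1 * ds1, "w21": i2 * ds1, "b1": ds1,
            "w12": i1 * ds2, "w22": i2 * ds2, "b2": ds2,
            "w13": i1 * ds3, "w23": i2 * ds3, "b3": ds3}
    # learn() adds alpha * step, so the gradient is the negated step.
    return {k: -v for k, v in step.items()}

def numeric_gradients(p, i1, i2, target, h=1e-6):
    """Central finite differences, one parameter at a time."""
    g = {}
    for k in KEYS:
        plus, minus = dict(p), dict(p)
        plus[k] += h
        minus[k] -= h
        g[k] = (loss(plus, i1, i2, target) - loss(minus, i1, i2, target)) / (2 * h)
    return g

random.seed(0)
params = {k: random.uniform(-0.5, 0.5) for k in KEYS}
for (i1, i2), t in [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]:
    analytic = gradients(params, i1, i2, t)
    numeric = numeric_gradients(params, i1, i2, t)
    assert all(abs(analytic[k] - numeric[k]) < 1e-8 for k in KEYS)
print("backprop deltas match finite differences")
```

A finite-difference check like this is a cheap way to catch sign or index mistakes when editing the hand-written update lines.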

```python
def train(epochs=EPOCHS, alpha=ALPHAS):
    modelo = None
    for epoch in range(1,epochs+1):
        # Visit the four XOR examples in a random order each epoch
        indexes = [0,1,2,3]
        random.shuffle(indexes)
        for j in indexes:
            learn(INPUTS[j][0],INPUTS[j][1],OUTPUTS[j][0], activation=sigmoid, activation_prime=sigmoid_prime, alpha=alpha)

        # WEPOCHS is derived from the default EPOCHS, so runs shorter than
        # WEPOCHS epochs print nothing
        if epoch%WEPOCHS == 0:
            cost = 0
            for j in range(4):
                o = predict(INPUTS[j][0],INPUTS[j][1], activation=sigmoid)
                cost += (OUTPUTS[j][0] - o) ** 2
            cost /= 4
            print("epoch", epoch, "mean squared error:", cost)

    # Snapshot the trained weights
    modelo = {
        "w11": w11,
        "w21": w21,
        "b1": b1,
        "w12": w12,
        "w22": w22,
        "b2": b2,
        "w13": w13,
        "w23": w23,
        "b3": b3,
        "o1": o1,
        "o2": o2,
        "o3": o3,
        "ob": ob
    }
    return modelo

def save_model(modelo, filename):
    with open(filename, 'w') as json_file:
        json.dump(modelo, json_file)

## Main
def main():
    # Train model
    modelo = train()

    print(modelo)
    # Save model to file
    save_model(modelo, "modelo.json")

    for i in range(4):
        result = predict(INPUTS[i][0],INPUTS[i][1], activation=sigmoid)
        print("for input", INPUTS[i], "expected", OUTPUTS[i][0], "predicted", f"{result:4.4}", "which is", "correct" if round(result)==OUTPUTS[i][0] else "incorrect")
        # print("for input", INPUTS[i], "expected", OUTPUTS[i][0], "predicted", result, "which is", "correct" if round(result)==OUTPUTS[i][0] else "incorrect")


if __name__ == "__main__":
    main()
```
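`save_model` writes the trained weights to `modelo.json` (a file the new `.gitignore` excludes via `*.json`), but nothing in this commit reads it back. A minimal sketch of the inverse, separate from the committed code and assuming the flat 13-key layout that `train` builds; `load_model` and `predict_from_model` are hypothetical names, not functions in nn.py.

```python
import json
import math
import os
import tempfile

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def save_model(modelo, filename):
    # Same as nn.save_model.
    with open(filename, "w") as json_file:
        json.dump(modelo, json_file)

def load_model(filename):
    """Hypothetical inverse of save_model: returns the weight dict."""
    with open(filename) as json_file:
        return json.load(json_file)

def predict_from_model(modelo, i1, i2, activation=sigmoid):
    """nn.predict's forward pass, reading weights from a dict instead of globals."""
    s1 = activation(modelo["w11"] * i1 + modelo["w21"] * i2 + modelo["b1"])
    s2 = activation(modelo["w12"] * i1 + modelo["w22"] * i2 + modelo["b2"])
    s3 = activation(modelo["w13"] * i1 + modelo["w23"] * i2 + modelo["b3"])
    return activation(s1 * modelo["o1"] + s2 * modelo["o2"]
                      + s3 * modelo["o3"] + modelo["ob"])

# Round-trip an arbitrary, untrained weight set through a temporary file.
keys = ["w11", "w21", "b1", "w12", "w22", "b2", "w13", "w23", "b3",
        "o1", "o2", "o3", "ob"]
modelo = {k: 0.1 * n for n, k in enumerate(keys)}
path = os.path.join(tempfile.mkdtemp(), "modelo.json")
save_model(modelo, path)
restored = load_model(path)
assert restored == modelo  # JSON round-trips Python floats exactly
print(predict_from_model(restored, 1, 0))
```

Reading weights from a dict also avoids the module-level globals, so several saved models could be compared side by side.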

requirements.txt

ADDED

@@ -0,0 +1 @@

```
streamlit
```