---

license: mit
language:
- en
inference: true
base_model:
- microsoft/codebert-base-mlm
- web3se/SmartBERT-v2
pipeline_tag: fill-mask
tags:
- fill-mask
- smart-contract
- web3
- software-engineering
- embedding
- codebert

---

# SmartBERT V3 CodeBERT

## Overview

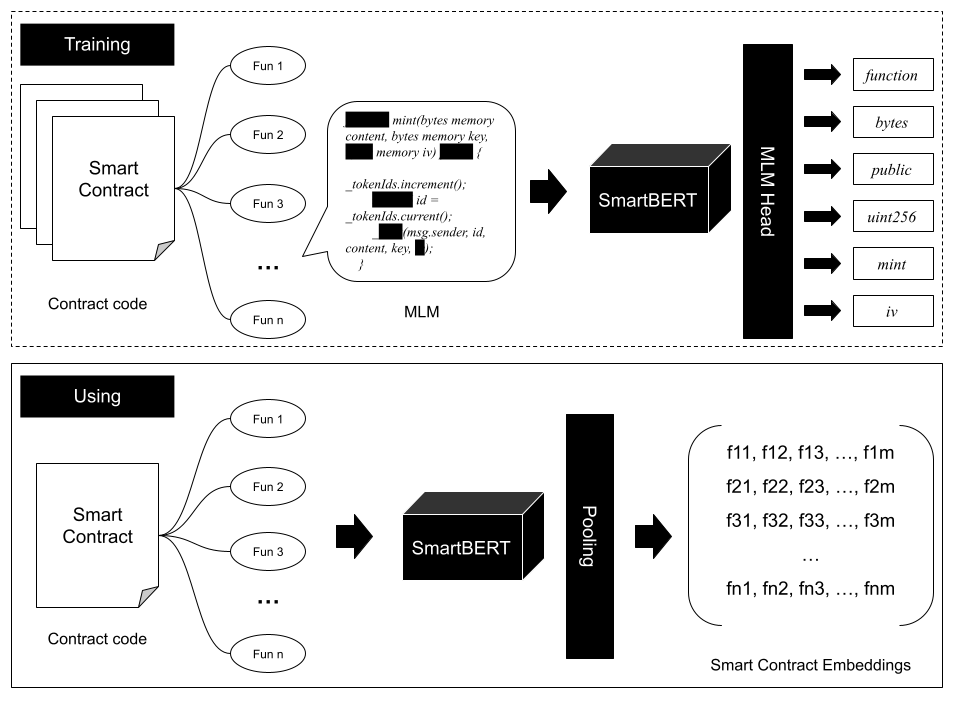

**SmartBERT V3** is a pre-trained programming language model initialized with **[CodeBERT-base-mlm](https://huggingface.co/microsoft/codebert-base-mlm)**. It builds on [SmartBERT V2](https://huggingface.co/web3se/SmartBERT-v2) and was further trained on an additional **64,000** smart contracts to make its representations of smart contract code at the _function_ level more robust.

- **Training Data:** A total of **80,000** smart contracts: **16,000** from **[SmartBERT V2](https://huggingface.co/web3se/SmartBERT-v2)** and **64,000** new contracts (starting from contract 30,001).
- **Hardware:** Two NVIDIA A100 (80 GB) GPUs.
- **Training Duration:** Over 30 hours.
- **Evaluation Data:** **1,500** smart contracts (starting from contract 96,425).
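The card gives only starting positions for the new training contracts and the evaluation set. The sketch below spells out one reading of those numbers, assuming they are 1-based positions in a single ordered list of contracts and that each set is a contiguous run. The helpers `slice_from` and `split_contracts` are hypothetical and not part of the SmartBERT repository.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def slice_from(items: Sequence[T], start: int, count: int) -> List[T]:
    """Return `count` items beginning at the 1-based position `start`."""
    begin = start - 1  # convert the 1-based position to a 0-based offset
    if begin < 0 or begin + count > len(items):
        raise ValueError("requested range falls outside the contract list")
    return list(items[begin:begin + count])


def split_contracts(contracts: Sequence[T]) -> Tuple[List[T], List[T]]:
    # Assumed reading of the card: 64,000 new training contracts starting at
    # position 30,001 and 1,500 evaluation contracts starting at 96,425.
    new_train = slice_from(contracts, 30_001, 64_000)
    evaluation = slice_from(contracts, 96_425, 1_500)
    return new_train, evaluation
```

Under this reading the new training contracts occupy positions 30,001 to 94,000 and the evaluation contracts 96,425 to 97,924, so the two sets do not overlap.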

## Usage

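```python
from transformers import RobertaTokenizer, RobertaForMaskedLM, pipeline

# Load the pre-trained SmartBERT V3 weights and tokenizer from the Hugging Face Hub.
model = RobertaForMaskedLM.from_pretrained('web3se/SmartBERT-v3')
tokenizer = RobertaTokenizer.from_pretrained('web3se/SmartBERT-v3')

# A single-line Solidity function signature with one masked token.
code_example = "function totalSupply() external view <mask> (uint256);"

# Build a fill-mask pipeline and print the top predictions for <mask>.
fill_mask = pipeline('fill-mask', model=model, tokenizer=tokenizer)
outputs = fill_mask(code_example)
print(outputs)
```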

## Preprocessing

All newline (`\n`) and tab (`\t`) characters in the _function_ code were replaced with a single space to ensure consistency in the input data format.
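A minimal sketch of this normalization step, assuming each `\n` and `\t` character is replaced individually (the card does not say whether consecutive whitespace is collapsed, so this version keeps such runs). Carriage returns (`\r`) are not mentioned in the card and are left untouched here. The function name `preprocess_function_code` is hypothetical.

```python
def preprocess_function_code(code: str) -> str:
    """Flatten a function's source onto one line (hypothetical helper)."""
    # Replace every newline and tab with a single space. Assumption: each
    # character is replaced on its own, so runs of whitespace are kept.
    return code.replace("\n", " ").replace("\t", " ")


if __name__ == "__main__":
    snippet = "function totalSupply()\n\texternal view returns (uint256);"
    print(preprocess_function_code(snippet))
    # prints: function totalSupply()  external view returns (uint256);
```

Applying the same transformation to inputs before calling the fill-mask pipeline likely keeps them closer to the format the model saw during training.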

## Base Model

- **Original Model**: [CodeBERT-base-mlm](https://huggingface.co/microsoft/codebert-base-mlm)

## Training Setup

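```python
from transformers import TrainingArguments

OUTPUT_DIR = "./smartbert-v3"  # placeholder: directory for checkpoints
checkpoint = None  # placeholder: path of a checkpoint to resume from, or None

training_args = TrainingArguments(
    output_dir=OUTPUT_DIR,
    overwrite_output_dir=True,
    num_train_epochs=20,
    per_device_train_batch_size=64,
    save_steps=10000,
    save_total_limit=2,
    evaluation_strategy="steps",  # newer transformers releases name this `eval_strategy`
    eval_steps=10000,
    resume_from_checkpoint=checkpoint,
)
```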
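The settings above determine how many optimizer steps, evaluations, and checkpoints a run produces. The sketch below does that arithmetic, assuming both A100 GPUs are used for data-parallel training, no gradient accumulation, and a distributed sampler that pads the last shard (the default behavior of PyTorch's `DistributedSampler`). The card does not report how many functions were trained on, so `num_examples` must be supplied by the reader; the helper `training_schedule` is hypothetical.

```python
import math


def training_schedule(num_examples: int, per_device_batch: int = 64,
                      num_devices: int = 2, epochs: int = 20,
                      eval_steps: int = 10_000) -> dict:
    """Estimate the step schedule implied by the TrainingArguments above."""
    # Each device receives ceil(N / devices) examples per epoch.
    per_device_examples = math.ceil(num_examples / num_devices)
    # Those examples are split into per-device batches (last batch kept).
    steps_per_epoch = math.ceil(per_device_examples / per_device_batch)
    total_steps = steps_per_epoch * epochs
    return {
        "global_batch_size": per_device_batch * num_devices,
        "steps_per_epoch": steps_per_epoch,
        "total_steps": total_steps,
        # eval_steps and save_steps are both 10,000, so this is also the
        # number of checkpoints written (only the latest 2 are kept on disk).
        "num_evaluations": total_steps // eval_steps,
    }


if __name__ == "__main__":
    # Hypothetical dataset size, chosen only to give round numbers.
    print(training_schedule(640_000))
```

For a hypothetical 640,000 functions this gives a global batch size of 128, 5,000 steps per epoch, 100,000 steps in total, and 10 evaluations.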

## How to Use

For instructions on training SmartBERT V3 and deploying it as a Web API service, see our GitHub repository: [web3se-lab/SmartBERT](https://github.com/web3se-lab/SmartBERT).

## Contributors

- [Youwei Huang](https://www.devil.ren)

- [Sen Fang](https://github.com/TomasAndersonFang)

## Sponsors

- [Institute of Intelligent Computing Technology, Suzhou, CAS](http://iict.ac.cn/)

- CAS Mino (中科劢诺)