End of training

- README.md +84 -0

- image_0.png +0 -0

- image_1.png +0 -0

- image_2.png +0 -0

- image_3.png +0 -0

- logs/dreambooth-flux-dev-lora/1735880421.4236865/events.out.tfevents.1735880421.207-211-160-231.12773.1 +3 -0

- logs/dreambooth-flux-dev-lora/1735880421.4322033/hparams.yml +73 -0

- logs/dreambooth-flux-dev-lora/1735881120.7934933/events.out.tfevents.1735881120.207-211-160-231.16083.1 +3 -0

- logs/dreambooth-flux-dev-lora/1735881120.8009305/hparams.yml +73 -0

- logs/dreambooth-flux-dev-lora/1735881744.9094827/events.out.tfevents.1735881744.207-211-160-231.18888.1 +3 -0

- logs/dreambooth-flux-dev-lora/1735881744.9170027/hparams.yml +73 -0

- logs/dreambooth-flux-dev-lora/1735884349.6953244/events.out.tfevents.1735884349.207-211-160-231.22700.1 +3 -0

- logs/dreambooth-flux-dev-lora/1735884349.7051013/hparams.yml +73 -0

- logs/dreambooth-flux-dev-lora/1735884786.3328662/events.out.tfevents.1735884786.207-211-160-231.25414.1 +3 -0

- logs/dreambooth-flux-dev-lora/1735884786.3440735/hparams.yml +73 -0

- logs/dreambooth-flux-dev-lora/1735886977.0877852/events.out.tfevents.1735886977.207-211-160-231.28980.1 +3 -0

- logs/dreambooth-flux-dev-lora/1735886977.0969863/.ipynb_checkpoints/hparams-checkpoint.yml +73 -0

- logs/dreambooth-flux-dev-lora/1735886977.0969863/hparams.yml +73 -0

- logs/dreambooth-flux-dev-lora/events.out.tfevents.1735880421.207-211-160-231.12773.0 +3 -0

- logs/dreambooth-flux-dev-lora/events.out.tfevents.1735881120.207-211-160-231.16083.0 +3 -0

- logs/dreambooth-flux-dev-lora/events.out.tfevents.1735881744.207-211-160-231.18888.0 +3 -0

- logs/dreambooth-flux-dev-lora/events.out.tfevents.1735884349.207-211-160-231.22700.0 +3 -0

- logs/dreambooth-flux-dev-lora/events.out.tfevents.1735884786.207-211-160-231.25414.0 +3 -0

- logs/dreambooth-flux-dev-lora/events.out.tfevents.1735886977.207-211-160-231.28980.0 +3 -0

- pytorch_lora_weights.safetensors +3 -0

README.md

ADDED

|

@@ -0,0 +1,84 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

base_model: black-forest-labs/FLUX.1-dev

|

| 3 |

+

library_name: diffusers

|

| 4 |

+

license: other

|

| 5 |

+

instance_prompt: a photo of xjiminx

|

| 6 |

+

widget:

|

| 7 |

+

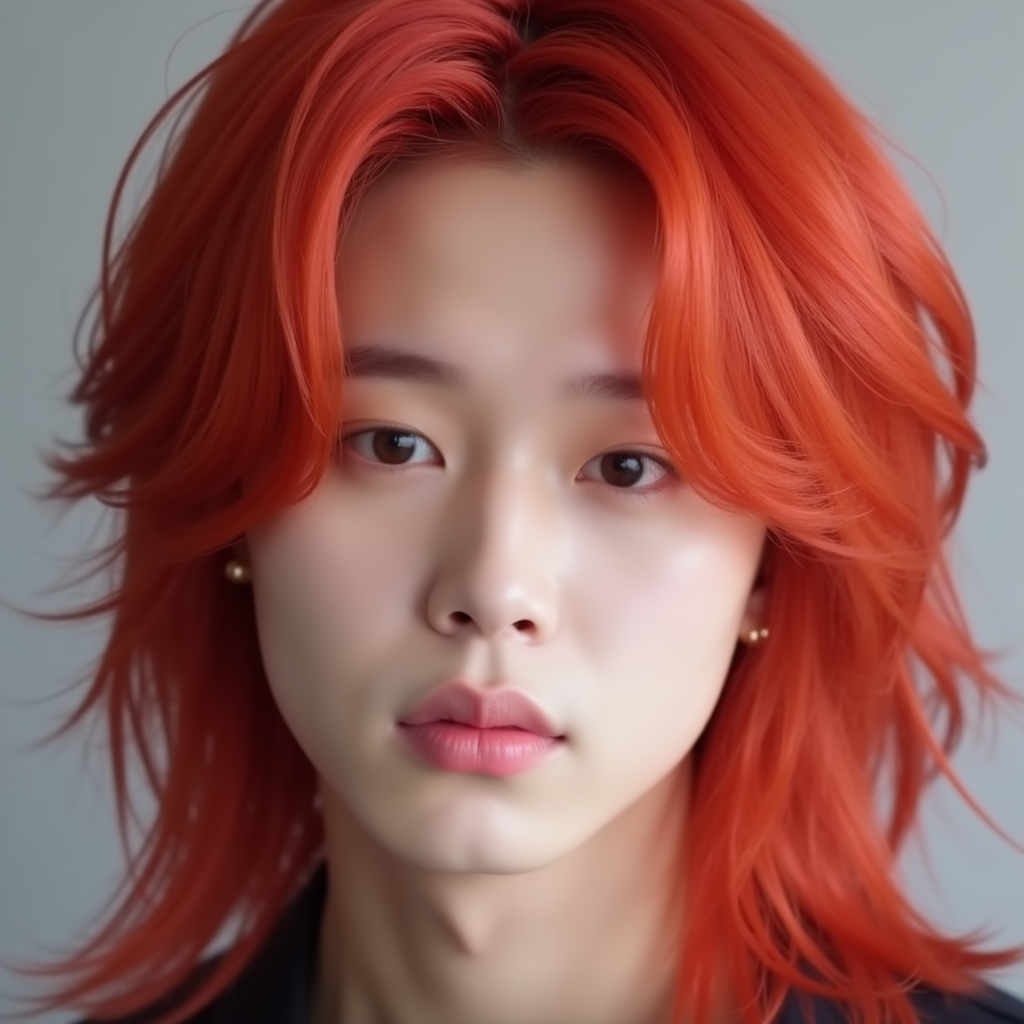

- text: A photo of xjiminx with red hair

|

| 8 |

+

output:

|

| 9 |

+

url: image_0.png

|

| 10 |

+

- text: A photo of xjiminx with red hair

|

| 11 |

+

output:

|

| 12 |

+

url: image_1.png

|

| 13 |

+

- text: A photo of xjiminx with red hair

|

| 14 |

+

output:

|

| 15 |

+

url: image_2.png

|

| 16 |

+

- text: A photo of xjiminx with red hair

|

| 17 |

+

output:

|

| 18 |

+

url: image_3.png

|

| 19 |

+

tags:

|

| 20 |

+

- text-to-image

|

| 21 |

+

- diffusers-training

|

| 22 |

+

- diffusers

|

| 23 |

+

- lora

|

| 24 |

+

- flux

|

| 25 |

+

- flux-diffusers

|

| 26 |

+

- template:sd-lora

|

| 27 |

+

---

|

| 28 |

+

|

| 29 |

+

<!-- This model card has been generated automatically according to the information the training script had access to. You

|

| 30 |

+

should probably proofread and complete it, then remove this comment. -->

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

# Flux DreamBooth LoRA - xgemstarx/m10_jm256_augment_15k_bs2

|

| 34 |

+

|

| 35 |

+

<Gallery />

|

| 36 |

+

|

| 37 |

+

## Model description

|

| 38 |

+

|

| 39 |

+

These are xgemstarx/m10_jm256_augment_15k_bs2 DreamBooth LoRA weights for black-forest-labs/FLUX.1-dev.

|

| 40 |

+

|

| 41 |

+

The weights were trained using [DreamBooth](https://dreambooth.github.io/) with the [Flux diffusers trainer](https://github.com/huggingface/diffusers/blob/main/examples/dreambooth/README_flux.md).

|

| 42 |

+

|

| 43 |

+

Was LoRA for the text encoder enabled? False.

|

| 44 |

+

|

| 45 |

+

## Trigger words

|

| 46 |

+

|

| 47 |

+

You should use `a photo of xjiminx` to trigger the image generation.

|

| 48 |

+

|

| 49 |

+

## Download model

|

| 50 |

+

|

| 51 |

+

[Download the *.safetensors LoRA](xgemstarx/m10_jm256_augment_15k_bs2/tree/main) in the Files & versions tab.

|

| 52 |

+

|

| 53 |

+

## Use it with the [🧨 diffusers library](https://github.com/huggingface/diffusers)

|

| 54 |

+

|

| 55 |

+

```py

|

| 56 |

+

from diffusers import AutoPipelineForText2Image

|

| 57 |

+

import torch

|

| 58 |

+

pipeline = AutoPipelineForText2Image.from_pretrained("black-forest-labs/FLUX.1-dev", torch_dtype=torch.bfloat16).to('cuda')

|

| 59 |

+

pipeline.load_lora_weights('xgemstarx/m10_jm256_augment_15k_bs2', weight_name='pytorch_lora_weights.safetensors')

|

| 60 |

+

image = pipeline('A photo of xjiminx with red hair').images[0]

|

| 61 |

+

```

|

| 62 |

+

|

| 63 |

+

For more details, including weighting, merging and fusing LoRAs, check the [documentation on loading LoRAs in diffusers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/loading_adapters)

|

| 64 |

+

|

| 65 |

+

## License

|

| 66 |

+

|

| 67 |

+

Please adhere to the licensing terms as described [here](https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md).

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

## Intended uses & limitations

|

| 71 |

+

|

| 72 |

+

#### How to use

|

| 73 |

+

|

| 74 |

+

```python

|

| 75 |

+

# TODO: add an example code snippet for running this diffusion pipeline

|

| 76 |

+

```

|

| 77 |

+

|

| 78 |

+

#### Limitations and bias

|

| 79 |

+

|

| 80 |

+

[TODO: provide examples of latent issues and potential remediations]

|

| 81 |

+

|

| 82 |

+

## Training details

|

| 83 |

+

|

| 84 |

+

[TODO: describe the data used to train the model]

|

image_0.png

ADDED

image_1.png

ADDED

image_2.png

ADDED

image_3.png

ADDED

logs/dreambooth-flux-dev-lora/1735880421.4236865/events.out.tfevents.1735880421.207-211-160-231.12773.1

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:a53a56dbec37eeaa27c76c0dcd22acaab19d8ea722bb1dc12da32e5bc3290418
+size 3320
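The three added lines above are a Git LFS pointer, not the TensorBoard event data itself: the repository stores only the spec version, the content hash, and the byte size. A minimal sketch of reading such a pointer follows; the function name `parse_lfs_pointer` and the returned field names are illustrative assumptions, not part of any Git LFS tooling.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse the key/value lines of a Git LFS pointer file (hypothetical helper)."""
    fields = {}
    for line in text.strip().splitlines():
        # Each pointer line is "<key> <value>", separated by the first space.
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid has the form "<hash algorithm>:<hex digest>".
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


# Pointer text copied from the diff above.
pointer = """\
version https://git-lfs.github.com/spec/v1
oid sha256:a53a56dbec37eeaa27c76c0dcd22acaab19d8ea722bb1dc12da32e5bc3290418
size 3320
"""
info = parse_lfs_pointer(pointer)
print(info["hash_algo"], info["size"])
```

The same shape applies to every `events.out.tfevents.*` entry and to `pytorch_lora_weights.safetensors` in this commit; only the digest and size differ.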

logs/dreambooth-flux-dev-lora/1735880421.4322033/hparams.yml

ADDED

@@ -0,0 +1,73 @@
+adam_beta1: 0.9
+adam_beta2: 0.999
+adam_epsilon: 1.0e-08
+adam_weight_decay: 0.0001
+adam_weight_decay_text_encoder: 0.001
+allow_tf32: false
+cache_dir: null
+cache_latents: false
+caption_column: null
+center_crop: false
+checkpointing_steps: 500
+checkpoints_total_limit: null
+class_data_dir: null
+class_prompt: null
+dataloader_num_workers: 0
+dataset_config_name: null
+dataset_name: null
+gradient_accumulation_steps: 4
+gradient_checkpointing: false
+guidance_scale: 1.0
+hub_model_id: null
+hub_token: null
+image_column: image
+instance_data_dir: jm_256_augment
+instance_prompt: a photo of xjiminx
+learning_rate: 1.0e-05
+local_rank: 0
+logging_dir: logs
+logit_mean: 0.0
+logit_std: 1.0
+lora_layers: null
+lr_num_cycles: 1
+lr_power: 1.0
+lr_scheduler: cosine
+lr_warmup_steps: 0
+max_grad_norm: 1.0
+max_sequence_length: 512
+max_train_steps: 15000
+mixed_precision: bf16
+mode_scale: 1.29
+num_class_images: 100
+num_train_epochs: 1250
+num_validation_images: 4
+optimizer: AdamW
+output_dir: m10_jm256_augment_15k_bs2
+pretrained_model_name_or_path: black-forest-labs/FLUX.1-dev
+prior_generation_precision: null
+prior_loss_weight: 1.0
+prodigy_beta3: null
+prodigy_decouple: true
+prodigy_safeguard_warmup: true
+prodigy_use_bias_correction: true
+push_to_hub: true
+random_flip: false
+rank: 4
+repeats: 1
+report_to: tensorboard
+resolution: 512
+resume_from_checkpoint: null
+revision: null
+sample_batch_size: 4
+scale_lr: false
+seed: 0
+text_encoder_lr: 5.0e-06
+train_batch_size: 2
+train_text_encoder: false
+upcast_before_saving: false
+use_8bit_adam: false
+validation_epochs: 50
+validation_prompt: A photo of xjiminx with red hair
+variant: null
+weighting_scheme: none
+with_prior_preservation: false
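The step, epoch, and batch settings in this `hparams.yml` are related by simple arithmetic, which can be worked through as a rough sketch. Two relations are assumed here rather than confirmed by this diff: that optimizer updates per epoch equal `ceil(batches_per_epoch / gradient_accumulation_steps)`, and that batches per epoch equal `ceil(num_images / train_batch_size)`. The single-process setting (`num_processes = 1`) is also an assumption; `local_rank: 0` alone does not establish it.

```python
import math

# Values copied from the hparams.yml above.
train_batch_size = 2
gradient_accumulation_steps = 4
max_train_steps = 15000
num_train_epochs = 1250
num_processes = 1  # assumption: one training process

# Samples contributing to each optimizer update.
effective_batch = train_batch_size * gradient_accumulation_steps * num_processes

# 15000 steps over 1250 epochs implies 12 optimizer updates per epoch.
updates_per_epoch = max_train_steps // num_train_epochs
assert math.ceil(max_train_steps / updates_per_epoch) == num_train_epochs

# Assumed: updates_per_epoch = ceil(batches_per_epoch / gradient_accumulation_steps)
max_batches = updates_per_epoch * gradient_accumulation_steps
min_batches = (updates_per_epoch - 1) * gradient_accumulation_steps + 1

# Assumed: batches_per_epoch = ceil(num_images / train_batch_size)
max_images = max_batches * train_batch_size
min_images = (min_batches - 1) * train_batch_size + 1

print(f"effective batch size: {effective_batch}")
print(f"optimizer updates per epoch: {updates_per_epoch}")
print(f"implied images per epoch: {min_images} to {max_images}")
```

Under those assumptions the instance directory `jm_256_augment` would hold somewhere between 89 and 96 images; the diff itself does not state the dataset size.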

logs/dreambooth-flux-dev-lora/1735881120.7934933/events.out.tfevents.1735881120.207-211-160-231.16083.1

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:59561894bf2ddbeb6a0a86d911d73106237be3c97177f416dfddc5396425a436
+size 3320

logs/dreambooth-flux-dev-lora/1735881120.8009305/hparams.yml

ADDED

@@ -0,0 +1,73 @@
+adam_beta1: 0.9
+adam_beta2: 0.999
+adam_epsilon: 1.0e-08
+adam_weight_decay: 0.0001
+adam_weight_decay_text_encoder: 0.001
+allow_tf32: false
+cache_dir: null
+cache_latents: false
+caption_column: null
+center_crop: false
+checkpointing_steps: 500
+checkpoints_total_limit: null
+class_data_dir: null
+class_prompt: null
+dataloader_num_workers: 0
+dataset_config_name: null
+dataset_name: null
+gradient_accumulation_steps: 4
+gradient_checkpointing: false
+guidance_scale: 1.0
+hub_model_id: null
+hub_token: null
+image_column: image
+instance_data_dir: jm_256_augment
+instance_prompt: a photo of xjiminx
+learning_rate: 1.0e-05
+local_rank: 0
+logging_dir: logs
+logit_mean: 0.0
+logit_std: 1.0
+lora_layers: null
+lr_num_cycles: 1
+lr_power: 1.0
+lr_scheduler: cosine
+lr_warmup_steps: 0
+max_grad_norm: 1.0
+max_sequence_length: 512
+max_train_steps: 15000
+mixed_precision: bf16
+mode_scale: 1.29
+num_class_images: 100
+num_train_epochs: 1250
+num_validation_images: 4
+optimizer: AdamW
+output_dir: m10_jm256_augment_15k_bs2
+pretrained_model_name_or_path: black-forest-labs/FLUX.1-dev
+prior_generation_precision: null
+prior_loss_weight: 1.0
+prodigy_beta3: null
+prodigy_decouple: true
+prodigy_safeguard_warmup: true
+prodigy_use_bias_correction: true
+push_to_hub: true
+random_flip: false
+rank: 4
+repeats: 1
+report_to: tensorboard
+resolution: 512
+resume_from_checkpoint: null
+revision: null
+sample_batch_size: 4
+scale_lr: false
+seed: 0
+text_encoder_lr: 5.0e-06
+train_batch_size: 2
+train_text_encoder: false
+upcast_before_saving: false
+use_8bit_adam: false
+validation_epochs: 500
+validation_prompt: A photo of xjiminx with red hair
+variant: null
+weighting_scheme: none
+with_prior_preservation: false
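This run pairs `lr_scheduler: cosine` with `lr_warmup_steps: 0`, `learning_rate: 1.0e-05`, and `max_train_steps: 15000`. The sketch below shows what a half-cosine decay with those numbers looks like. It assumes the trainer's `cosine` option follows the common linear-warmup-then-half-cosine formula with `num_cycles = 0.5`, and that `lr_num_cycles: 1` is not consulted for this scheduler type. Neither point is confirmed by the diff, and `cosine_lr` is a hypothetical stand-alone function, not the trainer's code.

```python
import math


def cosine_lr(step, base_lr=1.0e-05, total_steps=15000, warmup_steps=0, num_cycles=0.5):
    """Learning rate at a given optimizer step under an assumed cosine schedule."""
    if step < warmup_steps:
        # Linear warmup (unused here because warmup_steps is 0).
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    # Half a cosine period takes the multiplier from 1 down to 0.
    scale = 0.5 * (1.0 + math.cos(math.pi * num_cycles * 2.0 * progress))
    return base_lr * max(0.0, scale)


for step in (0, 3750, 7500, 11250, 15000):
    print(step, f"{cosine_lr(step):.3e}")
```

With these defaults the rate starts at the configured `1.0e-05`, passes half that value at step 7500, and reaches zero at step 15000.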

logs/dreambooth-flux-dev-lora/1735881744.9094827/events.out.tfevents.1735881744.207-211-160-231.18888.1

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:e7b4cffd7ed0da14471576fc41a49f7ec51cc7b6167bac887b2fcf2d020e54bc
+size 3320

logs/dreambooth-flux-dev-lora/1735881744.9170027/hparams.yml

ADDED

@@ -0,0 +1,73 @@
+adam_beta1: 0.9
+adam_beta2: 0.999
+adam_epsilon: 1.0e-08
+adam_weight_decay: 0.0001
+adam_weight_decay_text_encoder: 0.001
+allow_tf32: false
+cache_dir: null
+cache_latents: false
+caption_column: null
+center_crop: false
+checkpointing_steps: 500
+checkpoints_total_limit: null
+class_data_dir: null
+class_prompt: null
+dataloader_num_workers: 0
+dataset_config_name: null
+dataset_name: null
+gradient_accumulation_steps: 4
+gradient_checkpointing: false
+guidance_scale: 1.0
+hub_model_id: null
+hub_token: null
+image_column: image
+instance_data_dir: jm_256_augment
+instance_prompt: a photo of xjiminx
+learning_rate: 1.0e-05
+local_rank: 0
+logging_dir: logs
+logit_mean: 0.0
+logit_std: 1.0
+lora_layers: null
+lr_num_cycles: 1
+lr_power: 1.0
+lr_scheduler: cosine
+lr_warmup_steps: 0
+max_grad_norm: 1.0
+max_sequence_length: 512
+max_train_steps: 15000
+mixed_precision: bf16
+mode_scale: 1.29
+num_class_images: 100
+num_train_epochs: 1250
+num_validation_images: 4
+optimizer: AdamW
+output_dir: m10_jm256_augment_15k_bs2
+pretrained_model_name_or_path: black-forest-labs/FLUX.1-dev
+prior_generation_precision: null
+prior_loss_weight: 1.0
+prodigy_beta3: null
+prodigy_decouple: true
+prodigy_safeguard_warmup: true
+prodigy_use_bias_correction: true
+push_to_hub: true
+random_flip: false
+rank: 4
+repeats: 1
+report_to: tensorboard
+resolution: 512
+resume_from_checkpoint: null
+revision: null
+sample_batch_size: 4
+scale_lr: false
+seed: 0
+text_encoder_lr: 5.0e-06
+train_batch_size: 2
+train_text_encoder: false
+upcast_before_saving: false
+use_8bit_adam: false
+validation_epochs: 500
+validation_prompt: A photo of xjiminx with red hair
+variant: null
+weighting_scheme: none
+with_prior_preservation: false

logs/dreambooth-flux-dev-lora/1735884349.6953244/events.out.tfevents.1735884349.207-211-160-231.22700.1

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:cb564dde38c9a4d5b6798d42672e6f078e4a9e3f9bfec958f9033b2e66e43e7e
+size 3320

logs/dreambooth-flux-dev-lora/1735884349.7051013/hparams.yml

ADDED

@@ -0,0 +1,73 @@
+adam_beta1: 0.9
+adam_beta2: 0.999
+adam_epsilon: 1.0e-08
+adam_weight_decay: 0.0001
+adam_weight_decay_text_encoder: 0.001
+allow_tf32: false
+cache_dir: null
+cache_latents: false
+caption_column: null
+center_crop: false
+checkpointing_steps: 1
+checkpoints_total_limit: null
+class_data_dir: null
+class_prompt: null
+dataloader_num_workers: 0
+dataset_config_name: null
+dataset_name: null
+gradient_accumulation_steps: 4
+gradient_checkpointing: false
+guidance_scale: 1.0
+hub_model_id: null
+hub_token: null
+image_column: image
+instance_data_dir: jm_256_augment
+instance_prompt: a photo of xjiminx
+learning_rate: 1.0e-05
+local_rank: 0
+logging_dir: logs
+logit_mean: 0.0
+logit_std: 1.0
+lora_layers: null
+lr_num_cycles: 1
+lr_power: 1.0
+lr_scheduler: cosine
+lr_warmup_steps: 0
+max_grad_norm: 1.0
+max_sequence_length: 512
+max_train_steps: 15000
+mixed_precision: bf16
+mode_scale: 1.29
+num_class_images: 100
+num_train_epochs: 1250
+num_validation_images: 4
+optimizer: AdamW
+output_dir: m10_jm256_augment_15k_bs2
+pretrained_model_name_or_path: black-forest-labs/FLUX.1-dev
+prior_generation_precision: null
+prior_loss_weight: 1.0
+prodigy_beta3: null
+prodigy_decouple: true
+prodigy_safeguard_warmup: true
+prodigy_use_bias_correction: true
+push_to_hub: true
+random_flip: false
+rank: 4
+repeats: 1
+report_to: tensorboard
+resolution: 512
+resume_from_checkpoint: null
+revision: null
+sample_batch_size: 4
+scale_lr: false
+seed: 0
+text_encoder_lr: 5.0e-06
+train_batch_size: 2
+train_text_encoder: false
+upcast_before_saving: false
+use_8bit_adam: false
+validation_epochs: 500
+validation_prompt: A photo of xjiminx with red hair
+variant: null
+weighting_scheme: none
+with_prior_preservation: false

logs/dreambooth-flux-dev-lora/1735884786.3328662/events.out.tfevents.1735884786.207-211-160-231.25414.1

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:087d6ecfdeff44d3fd2eb82f377b59d1b587d42ef1849718b8849e1f88a47549
+size 3320

logs/dreambooth-flux-dev-lora/1735884786.3440735/hparams.yml

ADDED

@@ -0,0 +1,73 @@
+adam_beta1: 0.9
+adam_beta2: 0.999
+adam_epsilon: 1.0e-08
+adam_weight_decay: 0.0001
+adam_weight_decay_text_encoder: 0.001
+allow_tf32: false
+cache_dir: null
+cache_latents: false
+caption_column: null
+center_crop: false
+checkpointing_steps: 100000
+checkpoints_total_limit: null
+class_data_dir: null
+class_prompt: null
+dataloader_num_workers: 0
+dataset_config_name: null
+dataset_name: null
+gradient_accumulation_steps: 4
+gradient_checkpointing: false
+guidance_scale: 1.0
+hub_model_id: null
+hub_token: null
+image_column: image
+instance_data_dir: jm_256_augment
+instance_prompt: a photo of xjiminx
+learning_rate: 1.0e-05
+local_rank: 0
+logging_dir: logs
+logit_mean: 0.0
+logit_std: 1.0
+lora_layers: null
+lr_num_cycles: 1
+lr_power: 1.0
+lr_scheduler: cosine
+lr_warmup_steps: 0
+max_grad_norm: 1.0
+max_sequence_length: 512
+max_train_steps: 15000
+mixed_precision: bf16
+mode_scale: 1.29
+num_class_images: 100
+num_train_epochs: 1250
+num_validation_images: 4
+optimizer: AdamW
+output_dir: m10_jm256_augment_15k_bs2
+pretrained_model_name_or_path: black-forest-labs/FLUX.1-dev
+prior_generation_precision: null
+prior_loss_weight: 1.0
+prodigy_beta3: null
+prodigy_decouple: true
+prodigy_safeguard_warmup: true
+prodigy_use_bias_correction: true
+push_to_hub: true
+random_flip: false
+rank: 4
+repeats: 1
+report_to: tensorboard
+resolution: 512
+resume_from_checkpoint: null
+revision: null
+sample_batch_size: 4
+scale_lr: false
+seed: 0
+text_encoder_lr: 5.0e-06
+train_batch_size: 2
+train_text_encoder: false
+upcast_before_saving: false
+use_8bit_adam: false
+validation_epochs: 250
+validation_prompt: A photo of xjiminx with red hair
+variant: null
+weighting_scheme: none
+with_prior_preservation: false

logs/dreambooth-flux-dev-lora/1735886977.0877852/events.out.tfevents.1735886977.207-211-160-231.28980.1

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:d525f50763bfd0d9322d1a048e4490834cdceeb8248ce3693967add5c6408fa7
+size 3320

logs/dreambooth-flux-dev-lora/1735886977.0969863/.ipynb_checkpoints/hparams-checkpoint.yml

ADDED

@@ -0,0 +1,73 @@
+adam_beta1: 0.9
+adam_beta2: 0.999
+adam_epsilon: 1.0e-08
+adam_weight_decay: 0.0001
+adam_weight_decay_text_encoder: 0.001
+allow_tf32: false
+cache_dir: null
+cache_latents: false
+caption_column: null
+center_crop: false
+checkpointing_steps: 100000
+checkpoints_total_limit: null
+class_data_dir: null
+class_prompt: null
+dataloader_num_workers: 0
+dataset_config_name: null
+dataset_name: null
+gradient_accumulation_steps: 4
+gradient_checkpointing: false
+guidance_scale: 1.0
+hub_model_id: null
+hub_token: null
+image_column: image
+instance_data_dir: jm_256_augment
+instance_prompt: a photo of xjiminx
+learning_rate: 1.0e-06
+local_rank: 0
+logging_dir: logs
+logit_mean: 0.0
+logit_std: 1.0
+lora_layers: null
+lr_num_cycles: 1
+lr_power: 1.0
+lr_scheduler: cosine
+lr_warmup_steps: 0
+max_grad_norm: 1.0
+max_sequence_length: 512
+max_train_steps: 15000
+mixed_precision: bf16
+mode_scale: 1.29
+num_class_images: 100
+num_train_epochs: 1250
+num_validation_images: 4
+optimizer: AdamW
+output_dir: m10_jm256_augment_15k_bs2
+pretrained_model_name_or_path: black-forest-labs/FLUX.1-dev
+prior_generation_precision: null
+prior_loss_weight: 1.0
+prodigy_beta3: null
+prodigy_decouple: true
+prodigy_safeguard_warmup: true
+prodigy_use_bias_correction: true
+push_to_hub: true
+random_flip: false
+rank: 4
+repeats: 1
+report_to: tensorboard
+resolution: 512
+resume_from_checkpoint: null
+revision: null
+sample_batch_size: 4
+scale_lr: false
+seed: 0
+text_encoder_lr: 5.0e-06
+train_batch_size: 2
+train_text_encoder: false
+upcast_before_saving: false
+use_8bit_adam: false
+validation_epochs: 50
+validation_prompt: A photo of xjiminx with red hair
+variant: null
+weighting_scheme: none
+with_prior_preservation: false

logs/dreambooth-flux-dev-lora/1735886977.0969863/hparams.yml

ADDED

@@ -0,0 +1,73 @@
+adam_beta1: 0.9
+adam_beta2: 0.999
+adam_epsilon: 1.0e-08
+adam_weight_decay: 0.0001
+adam_weight_decay_text_encoder: 0.001
+allow_tf32: false
+cache_dir: null
+cache_latents: false
+caption_column: null
+center_crop: false
+checkpointing_steps: 100000
+checkpoints_total_limit: null
+class_data_dir: null
+class_prompt: null
+dataloader_num_workers: 0
+dataset_config_name: null
+dataset_name: null
+gradient_accumulation_steps: 4
+gradient_checkpointing: false
+guidance_scale: 1.0
+hub_model_id: null
+hub_token: null
+image_column: image
+instance_data_dir: jm_256_augment
+instance_prompt: a photo of xjiminx
+learning_rate: 1.0e-06
+local_rank: 0
+logging_dir: logs
+logit_mean: 0.0
+logit_std: 1.0
+lora_layers: null
+lr_num_cycles: 1
+lr_power: 1.0
+lr_scheduler: cosine
+lr_warmup_steps: 0
+max_grad_norm: 1.0
+max_sequence_length: 512
+max_train_steps: 15000
+mixed_precision: bf16
+mode_scale: 1.29
+num_class_images: 100
+num_train_epochs: 1250
+num_validation_images: 4
+optimizer: AdamW
+output_dir: m10_jm256_augment_15k_bs2
+pretrained_model_name_or_path: black-forest-labs/FLUX.1-dev
+prior_generation_precision: null
+prior_loss_weight: 1.0
+prodigy_beta3: null
+prodigy_decouple: true
+prodigy_safeguard_warmup: true
+prodigy_use_bias_correction: true
+push_to_hub: true
+random_flip: false
+rank: 4
+repeats: 1
+report_to: tensorboard
+resolution: 512
+resume_from_checkpoint: null
+revision: null
+sample_batch_size: 4
+scale_lr: false
+seed: 0
+text_encoder_lr: 5.0e-06
+train_batch_size: 2
+train_text_encoder: false
+upcast_before_saving: false
+use_8bit_adam: false
+validation_epochs: 50
+validation_prompt: A photo of xjiminx with red hair
+variant: null
+weighting_scheme: none
+with_prior_preservation: false
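The seven `hparams.yml` files in this commit look nearly identical; as far as the diff shows, only `checkpointing_steps`, `learning_rate`, and `validation_epochs` vary between runs. A small sketch of how such a comparison could be automated is given below. The helper `changed_keys` is hypothetical, and the two dictionaries carry only those three keys, with values copied from the first run (`1735880421.4322033`) and the last run (`1735886977.0969863`).

```python
def changed_keys(old: dict, new: dict) -> dict:
    """Return {key: (old_value, new_value)} for keys whose values differ."""
    keys = sorted(set(old) | set(new))
    return {k: (old.get(k), new.get(k)) for k in keys if old.get(k) != new.get(k)}


# Subset of hparams from the first and last runs in this commit.
first_run = {
    "checkpointing_steps": 500,
    "learning_rate": 1.0e-05,
    "validation_epochs": 50,
}
last_run = {
    "checkpointing_steps": 100000,
    "learning_rate": 1.0e-06,
    "validation_epochs": 50,
}

diff = changed_keys(first_run, last_run)
for key, (before, after) in diff.items():
    print(f"{key}: {before} -> {after}")
```

In practice the full files could be loaded with a YAML parser and passed to the same function; that step is omitted here to keep the sketch free of third-party dependencies.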

logs/dreambooth-flux-dev-lora/events.out.tfevents.1735880421.207-211-160-231.12773.0

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:d2bf2413ca6c729f2efff51051b68c52ef66e91e6662eea5a4d6ddddeb8459d6
+size 4012

logs/dreambooth-flux-dev-lora/events.out.tfevents.1735881120.207-211-160-231.16083.0

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:a47380865f90bc66e4d4a912cd338ec3385d7a249e9ef0e8eff26aa97bb1a023
+size 4012

logs/dreambooth-flux-dev-lora/events.out.tfevents.1735881744.207-211-160-231.18888.0

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:27701537aad151d1f97371996c4eeb15fa49ee041210e988cdc586104b080fd2
+size 5030302

logs/dreambooth-flux-dev-lora/events.out.tfevents.1735884349.207-211-160-231.22700.0

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:8c7387dfda478f8b84c6e8929a52f796fe2fba306a1a9f84d07d1170601e3a7f
+size 322

logs/dreambooth-flux-dev-lora/events.out.tfevents.1735884786.207-211-160-231.25414.0

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:3a53b6ba63e0fa375dd4bf8d2e6aedac75af9c49fcddefd3ed861a21d54c3ad1
+size 5057600

logs/dreambooth-flux-dev-lora/events.out.tfevents.1735886977.207-211-160-231.28980.0

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:8ef4437f6d3596a3b603af36278d359cad042e7b57635a9b5af14974d887df1e
+size 105348108

pytorch_lora_weights.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:b97560e0f36f4ec5400c82124a61f92efc1b71bee1a1a5dff18ffadfb88cb632
+size 22504080
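Because the pointer records the SHA-256 digest and byte size of the real file, a downloaded copy of `pytorch_lora_weights.safetensors` can be checked against it. The following is a minimal sketch of that check; the helper name `matches_pointer` and the local path are illustrative assumptions, and the file is only inspected if it happens to exist in the working directory.

```python
import hashlib
from pathlib import Path


def matches_pointer(path, expected_sha256, expected_size, chunk_size=1 << 20):
    """Check a local file against the oid and size from a Git LFS pointer."""
    p = Path(path)
    # Cheap check first: the byte size must match exactly.
    if p.stat().st_size != expected_size:
        return False
    # Hash the file in chunks so large weights are not loaded into memory at once.
    digest = hashlib.sha256()
    with p.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected_sha256


# Values copied from the pointer above; the local path is a placeholder.
weights = Path("pytorch_lora_weights.safetensors")
if weights.exists():
    ok = matches_pointer(
        weights,
        "b97560e0f36f4ec5400c82124a61f92efc1b71bee1a1a5dff18ffadfb88cb632",
        22504080,
    )
    print("pointer match:", ok)
```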