Model Card for RigoChat-7b-v2-GGUF

Introduction

This repository contains the IIC/RigoChat-7b-v2 model in GGUF format, both with the original weights and quantized to several precisions.

The llama.cpp library was used to convert the parameters to GGUF format and to perform the quantizations. Specifically, the following steps were used to obtain the model in full precision:

- To download the weights:

from huggingface_hub import snapshot_download
import os

model_id = "IIC/RigoChat-7b-v2"

os.environ["MODEL_DIR"] = snapshot_download(
    repo_id=model_id,
    local_dir="model",
    local_dir_use_symlinks=False,
    revision="main",
)

- To transform to FP16:

python ./llama.cpp/convert_hf_to_gguf.py $MODEL_DIR --outfile rigochat-7b-v2-F16.gguf --outtype f16
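Note that a variable set through `os.environ` is only visible to that Python process and its children (for example, shell commands launched from a notebook). One way to chain the download and the conversion is to launch the script from Python. The following is a minimal sketch under assumptions: llama.cpp is cloned at `./llama.cpp`, and the helper names `build_convert_command` and `convert` are hypothetical, introduced only for illustration.

```python
import subprocess
import sys
from pathlib import Path


def build_convert_command(model_dir, outfile="rigochat-7b-v2-F16.gguf",
                          outtype="f16", llama_cpp_dir="./llama.cpp"):
    """Return the argument list for llama.cpp's convert_hf_to_gguf.py.

    llama_cpp_dir is an assumed checkout location; adjust it to your setup.
    """
    script = Path(llama_cpp_dir) / "convert_hf_to_gguf.py"
    return [
        sys.executable, str(script), str(model_dir),
        "--outfile", str(outfile),
        "--outtype", outtype,
    ]


def convert(model_dir, **kwargs):
    """Run the conversion; raises CalledProcessError if the script fails."""
    subprocess.run(build_convert_command(model_dir, **kwargs), check=True)
```

For instance, `convert(os.environ["MODEL_DIR"])` could be called right after the download snippet in the same Python session.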

Nevertheless, you can download these weights here.
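As a sketch of a programmatic download, a single GGUF file could be fetched with `hf_hub_download` from `huggingface_hub`. The repository id is taken from the citation URL of this card. The file naming pattern `rigochat-7b-v2-<PRECISION>.gguf` is an assumption based on the file names used in the commands of this card, so check the repository file list before relying on it. The helper names are hypothetical.

```python
def gguf_filename(precision="F16", stem="rigochat-7b-v2"):
    """Build a file name following the pattern assumed from this card."""
    return f"{stem}-{precision}.gguf"


def download_gguf(precision="F16", repo_id="IIC/RigoChat-7b-v2-GGUF",
                  local_dir="."):
    """Download one GGUF file and return its local path."""
    # Imported here so that the naming helper works without the dependency.
    from huggingface_hub import hf_hub_download

    return hf_hub_download(
        repo_id=repo_id,
        filename=gguf_filename(precision),  # assumed naming pattern
        local_dir=local_dir,
    )
```

Calling `download_gguf("Q8_0")` would then try to fetch the 8-bit file, provided a file with that name exists in the repository.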

To quantize rigochat-7b-v2-F16.gguf into different sizes, we first calculate an importance matrix as follows:

./llama.cpp/llama-imatrix -m ./rigochat-7b-v2-F16.gguf -f train_data.txt -c 1024

where train_data.txt is a Spanish raw-text dataset for calibration. This generates an imatrix.dat file that we can use to quantize the original model. For example, to get the Q4_K_M precision with this configuration, run:

./llama.cpp/llama-quantize --imatrix imatrix.dat ./rigochat-7b-v2-F16.gguf ./quantize_models/rigochat-7b-v2-Q4_K_M.gguf Q4_K_M

and so on. You can run:

./llama.cpp/llama-quantize --help

to see all the quantization options. To check how imatrix works, this example can be useful. For more information on the quantization types, see this link.
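The quantization step can be repeated for several precisions with a small script. The following is a minimal sketch, not the exact procedure used to produce the files in this repo. It assumes that `imatrix.dat` and the F16 file from the conversion step are in the working directory and that llama.cpp is at `./llama.cpp`. The helper names and the default list of quantization types are illustrative choices.

```python
import subprocess
from pathlib import Path


def build_quantize_command(quant_type,
                           source="./rigochat-7b-v2-F16.gguf",
                           imatrix="imatrix.dat",
                           out_dir="./quantize_models",
                           llama_cpp_dir="./llama.cpp"):
    """Return the llama-quantize argument list for one quantization type."""
    target = Path(out_dir) / f"rigochat-7b-v2-{quant_type}.gguf"
    return [
        str(Path(llama_cpp_dir) / "llama-quantize"),
        "--imatrix", str(imatrix),
        str(source), str(target), quant_type,
    ]


def quantize_all(quant_types=("Q4_K_M", "Q5_K_M", "Q8_0"),
                 out_dir="./quantize_models", **kwargs):
    """Quantize the source model once per requested type."""
    # Create the output directory up front so every run has a place to write.
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    for quant_type in quant_types:
        command = build_quantize_command(quant_type, out_dir=out_dir, **kwargs)
        subprocess.run(command, check=True)
```

Keeping the command construction separate from its execution makes it easy to print and inspect the commands before launching long-running jobs.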

Disclaimer

The train_data.txt dataset is optional for most quantizations. We used an experimental dataset to obtain all possible quantizations. However, we highly recommend downloading the full-precision weights, rigochat-7b-v2-F16.gguf, and quantizing the model with your own datasets, adapted to your intended use case.

How to Get Started with the Model

For example, you can run:

./llama.cpp/llama-cli -m ./rigochat-7b-v2-Q8_0.gguf -co -cnv -p "Your system." -fa -ngl -1 -n 512

or

./llama.cpp/llama-server -m ./rigochat-7b-v2-Q8_0.gguf -co -cnv -p "Your system." -fa -ngl -1 -n 512
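Once `llama-server` is running, it can be queried over HTTP through its OpenAI-compatible chat endpoint. The sketch below assumes the server listens on its default address, `http://127.0.0.1:8080`. The helper names and the example prompts are illustrative, and only the Python standard library is used.

```python
import json
import urllib.request


def build_chat_payload(user_message, system_prompt="Your system.",
                       max_tokens=512):
    """Build the JSON body for the /v1/chat/completions endpoint."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "max_tokens": max_tokens,
    }


def chat(user_message, base_url="http://127.0.0.1:8080", **kwargs):
    """Send one chat request to a running llama-server and return the reply."""
    payload = build_chat_payload(user_message, **kwargs)
    request = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # The reply text sits in the first choice, as in the OpenAI response format.
    return body["choices"][0]["message"]["content"]
```

With the server started, `print(chat("¿Cuál es la capital de España?"))` would print the model's answer.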

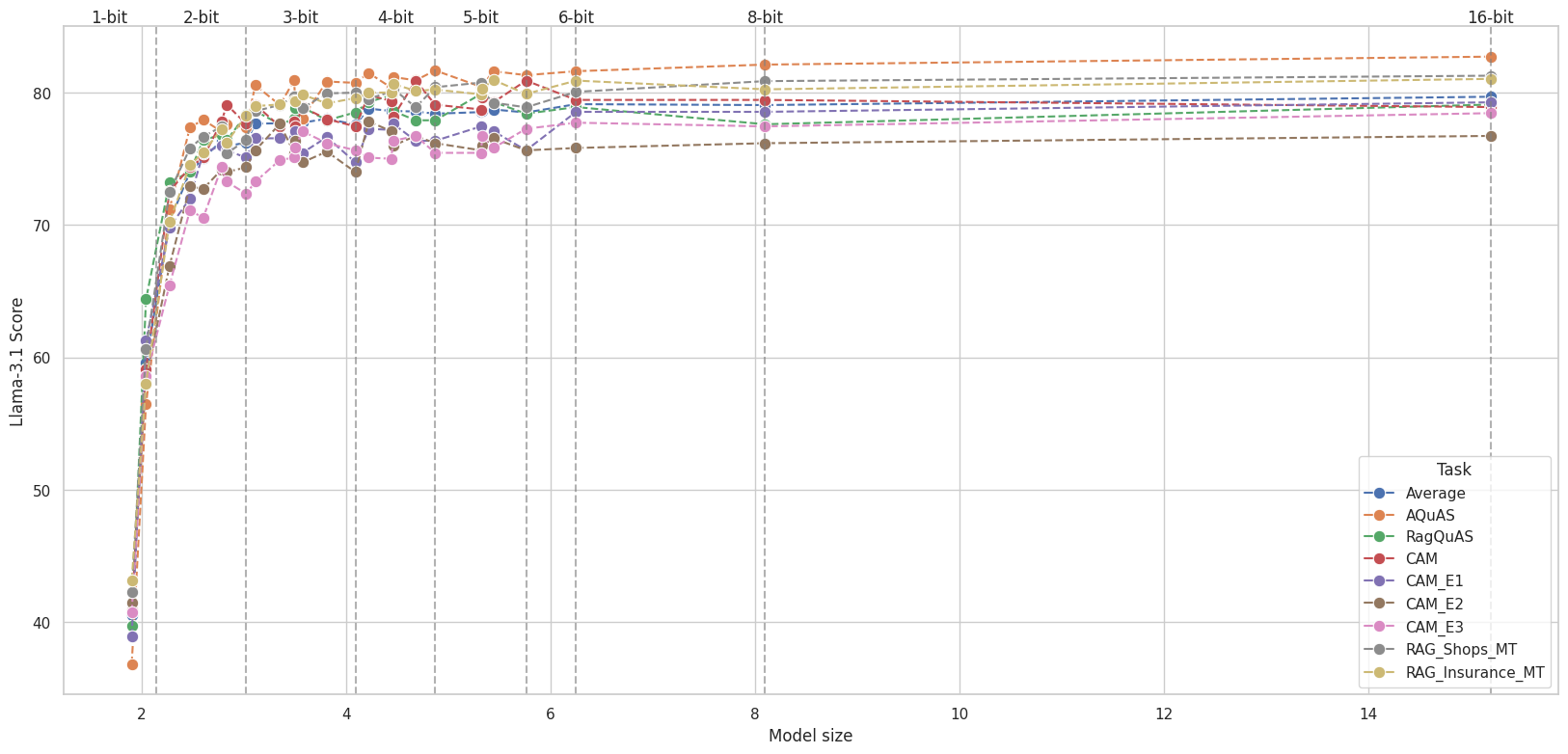

Evaluation

The evaluations are discussed in greater detail in the paper and the official repository. Here, we present only the graph illustrating how the model's performance improves as precision increases.

Citation

@misc {instituto_de_ingeniería_del_conocimiento_2025,
    author = { {Instituto de Ingeniería del Conocimiento} },
    title = { RigoChat-7b-v2-GGUF },
    year = 2025,
    url = { https://huggingface.co/IIC/RigoChat-7b-v2-GGUF },
    doi = { 10.57967/hf/4159 },
    publisher = { Hugging Face }
}

Model Card Contact
