metadata

license: mit

language:

- en

pipeline_tag: text-to-speech

tags:

- audiocraft

- audiogen

- styletts2

- audio

- synthesis

- shift

- audeering

- dkounadis

- sound

- scene

- acoustic-scene

Affective TTS & Soundscape Synthesis

Affective TTS tool for SHIFT Horizon.

- Synthesizes affective speech with sound scape, trees, water, leaves, background from plain text or subtitles (.srt) & overlays it to videos.

134build-in affective voices available, tuned for StyleTTS2.- GitHub

Available Voices

Flask API

Install

virtualenv --python=python3 ~/.envs/.my_env

source ~/.envs/.my_env/bin/activate

cd shift/

pip install -r requirements.txt

Start Flask

CUDA_DEVICE_ORDER=PCI_BUS_ID HF_HOME=./hf_home CUDA_VISIBLE_DEVICES=2 python api.py

Inference

The following need api.py to be running, e.g. .. on computeXX.

Text 2 Speech

# Basic TTS - See Available Voices

python tts.py --text sample.txt --voice "en_US/m-ailabs_low#mary_ann" --affective

# voice cloning

python tts.py --text sample.txt --native assets/native_voice.wav

Image 2 Video

# Make video narrating an image - All above TTS args apply also here!

python tts.py --text sample.txt --image assets/image_from_T31.jpg

Video 2 Video

# Video Dubbing - from time-stamped subtitles (.srt)

python tts.py --text assets/head_of_fortuna_en.srt --video assets/head_of_fortuna.mp4

# Video narration - from text description (.txt)

python tts.py --text assets/head_of_fortuna_GPT.txt --video assets/head_of_fortuna.mp4

Examples

Substitute Native voice via TTS

Same video where Native voice is replaced with English TTS voice with similar emotion

Video dubbing from subtitles .srt

.srtVideo Dubbing

Generate dubbed video:

python tts.py --text assets/head_of_fortuna_en.srt --video assets/head_of_fortuna.mp4

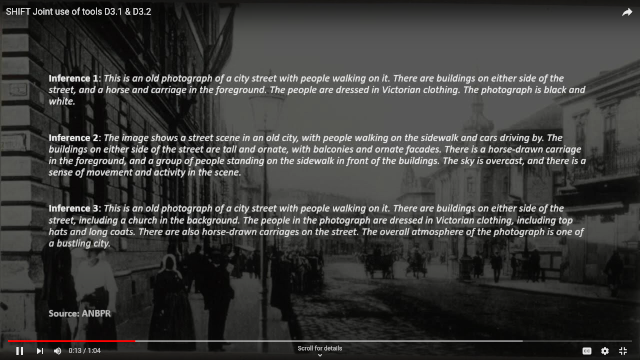

Joint Application of D3.1 & D3.2

From an image and text create a video:

python tts.py --text sample.txt --image assets/image_from_T31.jpg