---
language:
- ru
- en
license: apache-2.0
library_name: torchtune
base_model:
- mistralai/Mistral-7B-v0.1
datasets:
- IlyaGusev/rulm
---


Full fine-tuning of mistralai/Mistral-7B-v0.1 (all layers trained) on ~4 billion tokens from the IlyaGusev/rulm dataset.


Training took 130 hours on 2x Tesla H100.


```yaml
batch_size: 20
epochs: 1
optimizer:
  _component_: torch.optim.AdamW
  lr: 5e-6
  weight_decay: 0.01
loss:
  _component_: torch.nn.CrossEntropyLoss
max_steps_per_epoch: null  # no cap: run the full epoch
gradient_accumulation_steps: 5  # one optimizer step per 5 micro-batches
```
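The `optimizer` and `loss` entries name the PyTorch classes `torch.optim.AdamW` and `torch.nn.CrossEntropyLoss`, and `gradient_accumulation_steps: 5` means gradients from 5 micro-batches are summed before each optimizer update. The sketch below illustrates that technique in plain PyTorch. It is not torchtune's recipe code: it assumes a tiny hypothetical linear model in place of Mistral-7B and random data. It checks that accumulating 5 micro-batches of 20 samples, each with its loss divided by 5, gives the same gradient as a single batch of 100.

```python
import copy

import torch
from torch import nn

BATCH_SIZE = 20        # batch_size from the config
GRAD_ACCUM_STEPS = 5   # gradient_accumulation_steps from the config
HIDDEN = 16            # toy feature size (assumption, not from the card)
NUM_CLASSES = 10       # toy vocabulary size (assumption, not from the card)

torch.manual_seed(0)
base_model = nn.Linear(HIDDEN, NUM_CLASSES)  # hypothetical stand-in for Mistral-7B
total = BATCH_SIZE * GRAD_ACCUM_STEPS        # samples per optimizer step
x = torch.randn(total, HIDDEN)
y = torch.randint(0, NUM_CLASSES, (total,))


def accumulated_grad() -> torch.Tensor:
    """Sum gradients over GRAD_ACCUM_STEPS micro-batches, then take one AdamW step."""
    model = copy.deepcopy(base_model)
    optimizer = torch.optim.AdamW(model.parameters(), lr=5e-6, weight_decay=0.01)
    loss_fn = nn.CrossEntropyLoss()
    optimizer.zero_grad()
    for step in range(GRAD_ACCUM_STEPS):
        batch = slice(step * BATCH_SIZE, (step + 1) * BATCH_SIZE)
        # Scale each micro-batch loss so the summed gradients equal the
        # gradient of the mean loss over all `total` samples.
        loss = loss_fn(model(x[batch]), y[batch]) / GRAD_ACCUM_STEPS
        loss.backward()  # .grad accumulates across iterations
    grad = model.weight.grad.clone()
    optimizer.step()  # a single update for the whole accumulated batch
    return grad


def full_batch_grad() -> torch.Tensor:
    """Gradient of the mean loss computed on all samples at once."""
    model = copy.deepcopy(base_model)
    loss = nn.CrossEntropyLoss()(model(x), y)
    loss.backward()
    return model.weight.grad.clone()


g_accum = accumulated_grad()
g_full = full_batch_grad()
print(torch.allclose(g_accum, g_full, atol=1e-6))
```

In the toy example every micro-batch has the same number of samples. In a real run, padding tokens are often excluded from the loss (for example via `CrossEntropyLoss`'s `ignore_index`). In that case, the equality holds exactly only when each micro-batch contributes the same number of counted tokens.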


Sequence length: 1024 tokens.
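Below is a back-of-the-envelope check of the training numbers above. It rests on several assumptions not stated in the card: `batch_size: 20` is per GPU, both H100s run plain data parallelism, and every sequence is filled to the full 1024 tokens. Padding or a different parallel setup would change the figures, and the "~4 billion tokens" total is itself approximate.

```python
SEQ_LEN = 1024          # sequence length from the card
BATCH_SIZE = 20         # batch_size from the config (assumed per GPU)
GRAD_ACCUM_STEPS = 5    # gradient_accumulation_steps from the config
NUM_GPUS = 2            # 2x H100, assumed data-parallel
TOTAL_TOKENS = 4e9      # "~4 billion tokens" (approximate)
TRAIN_HOURS = 130       # wall-clock training time from the card

# Tokens consumed by one optimizer update across both GPUs.
tokens_per_step = SEQ_LEN * BATCH_SIZE * GRAD_ACCUM_STEPS * NUM_GPUS
# Number of optimizer updates needed to see TOTAL_TOKENS once (epochs: 1).
optimizer_steps = TOTAL_TOKENS / tokens_per_step
# Average training throughput over the whole run, summed over both GPUs.
tokens_per_second = TOTAL_TOKENS / (TRAIN_HOURS * 3600)

print(f"tokens per optimizer step: {tokens_per_step:,}")
print(f"approx. optimizer steps:   {optimizer_steps:,.0f}")
print(f"approx. throughput:        {tokens_per_second:,.0f} tokens/s (both GPUs)")
```

Under these assumptions, each update sees 204,800 tokens, and the run is roughly 19.5k optimizer steps at about 8.5k tokens/s overall. Treat these as order-of-magnitude estimates rather than logged values.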


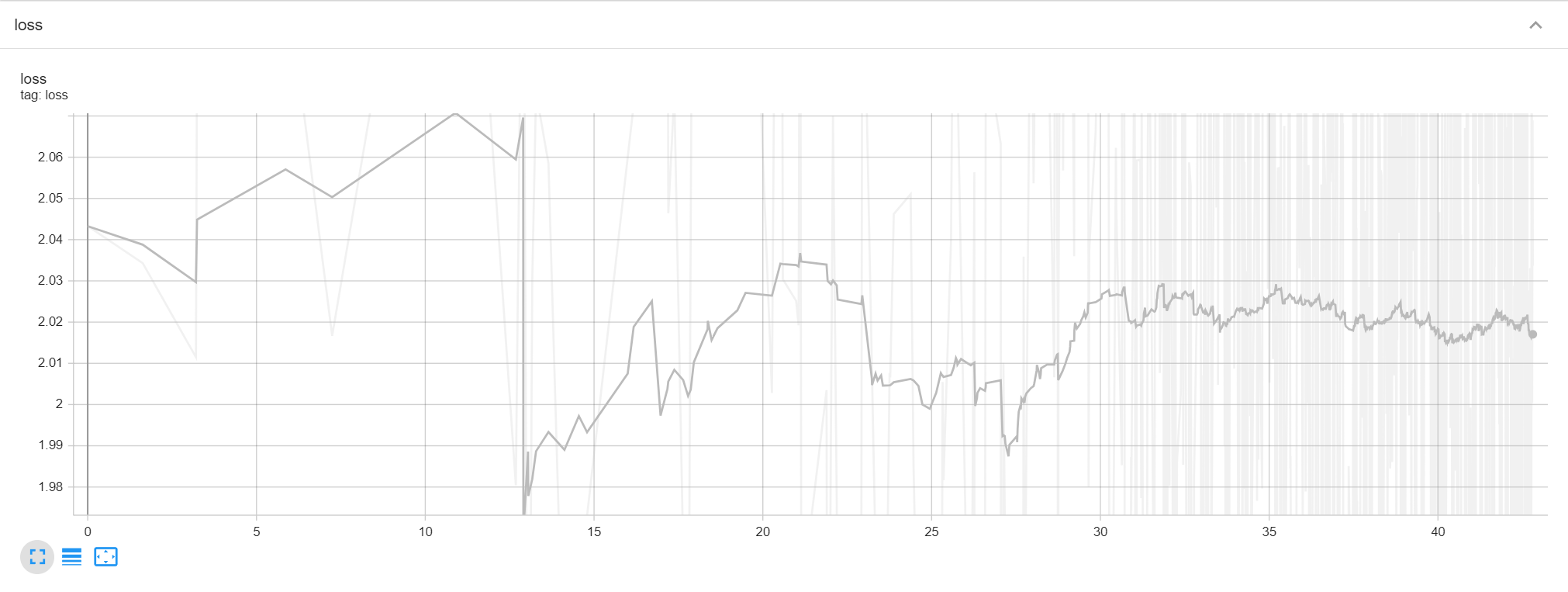

Loss curve |


Evaluated with https://github.com/NLP-Core-Team/mmlu_ru:


With 4-bit quantization: accuracy_total=41.86218134391028
