---
title: README
emoji: 🐢
colorFrom: purple
colorTo: gray
sdk: static
pinned: false
---

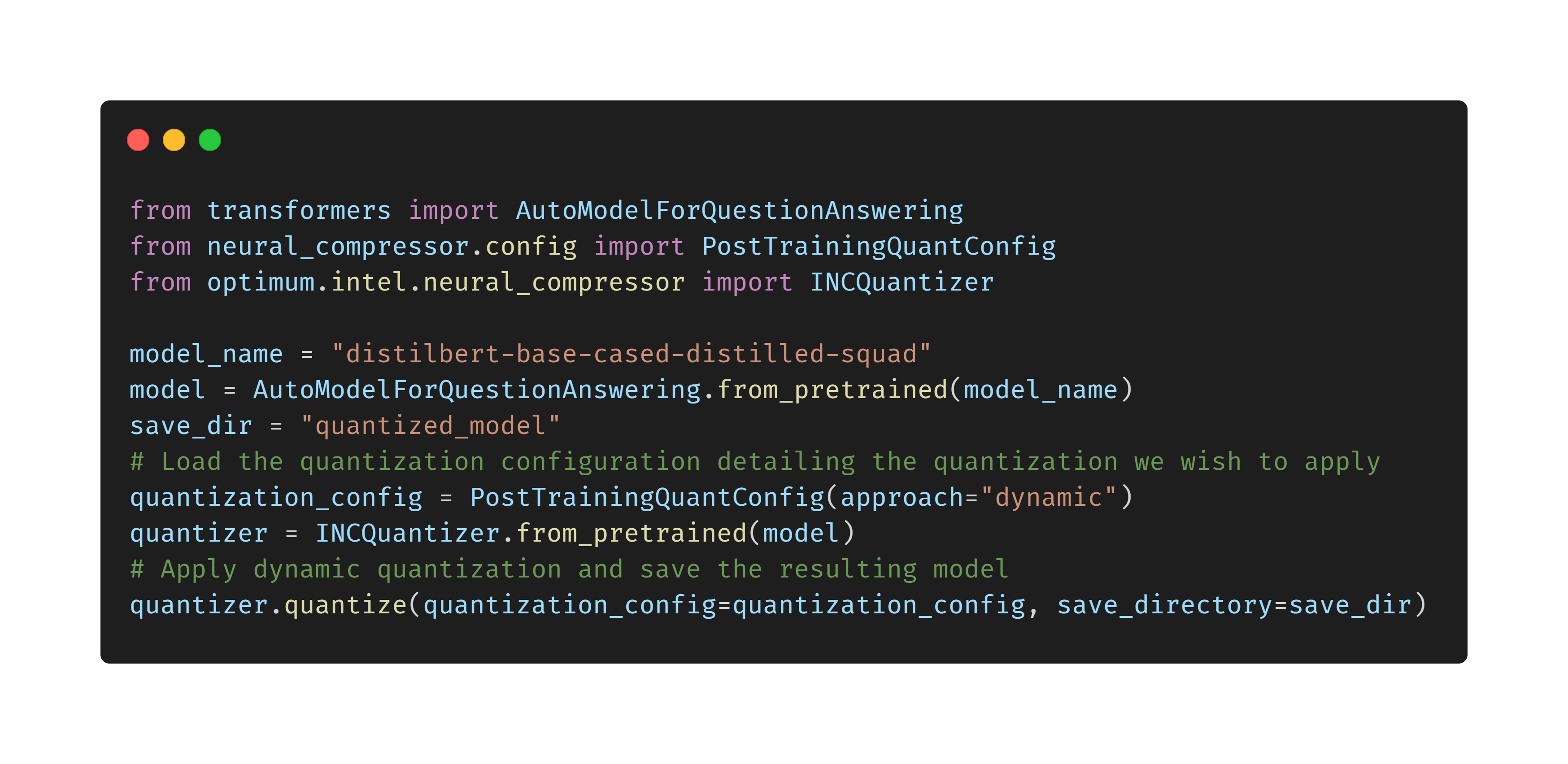

Intel and Hugging Face are working together to democratize machine learning, making the latest and greatest models from Hugging Face run fast and efficiently on Intel devices. To make this acceleration accessible to the global AI community, Intel is proud to sponsor free, accelerated inference for over 80,000 open-source models on Hugging Face, powered by Intel Xeon Ice Lake processors in the Hugging Face Inference API. Intel Xeon Ice Lake provides up to 34% acceleration for transformer model inference.

Try it out today on any Hugging Face model, right from the model page, using the Inference Widget!
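Beyond the widget, the same hosted inference can usually be reached programmatically. The snippet below is a minimal sketch, not an official client: it assumes the commonly documented endpoint pattern `https://api-inference.huggingface.co/models/<model_id>`, a bearer token, and a JSON body with an `inputs` field. The helper names `build_request` and `query`, the example model ID, and the placeholder token are illustrative assumptions; check the current Hugging Face documentation before relying on any of them.

```python
import json
import urllib.request

# Assumption: base URL of the hosted Inference API; verify against current docs.
API_BASE = "https://api-inference.huggingface.co/models"


def build_request(model_id: str, text: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a text-input model."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/{model_id}",
        data=payload,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query(model_id: str, text: str, token: str):
    """Send the request and decode the JSON response (requires network access)."""
    with urllib.request.urlopen(build_request(model_id, text, token)) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

A call might look like `query("distilbert-base-uncased-finetuned-sst-2-english", "I love this!", token)`, where `token` is your own access token. The response shape depends on the model's task, so it is not shown here.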

Intel optimizes the most widely adopted and innovative AI software tools, frameworks, and libraries for Intel® architecture. Whether you are computing locally or deploying AI applications on a massive scale, your organization can achieve peak performance with AI software optimized for Intel Xeon Scalable platforms.

Intel’s engineering collaboration with Hugging Face offers state-of-the-art hardware and software acceleration to train, fine-tune and predict with Transformers.

Useful Resources: