mGPT-nsp

mGPT-nsp is fine-tuned for Next Sentence Prediction task on the wikipedia dataset using multilingual GPT model. It was introduced in this paper and first released on this page.

Model description

mGPT-nsp is a Transformer-based model which was fine-tuned for Next Sentence Prediction task on 11000 English and 11000 German Wikipedia articles. We use the same tokenization and vocabulary as the mT5 model.

Intended uses

- Apply Next Sentence Prediction tasks. (compare the results with BERT models since BERT natively supports this task)

- See how to fine-tune an mGPT2 model using our code

- Check our paper to see its results

How to use

You can use this model directly with a pipeline for next sentence prediction. Here is how to use this model in PyTorch:

Necessary Initialization

from transformers import MT5Tokenizer, GPT2Model

import torch

from huggingface_hub import hf_hub_download

class ModelNSP(torch.nn.Module):

def __init__(self, pretrained_model="THUMT/mGPT"):

super(ModelNSP, self).__init__()

self.core_model = GPT2Model.from_pretrained(pretrained_model)

self.nsp_head = torch.nn.Sequential(torch.nn.Linear(self.core_model.config.hidden_size, 300),

torch.nn.Linear(300, 300), torch.nn.Linear(300, 2))

def forward(self, input_ids, attention_mask=None):

return self.nsp_head(self.core_model(input_ids, attention_mask=attention_mask)[0].mean(dim=1)).softmax(dim=-1)

model = torch.nn.DataParallel(ModelNSP().eval())

model.load_state_dict(torch.load(hf_hub_download(repo_id="tolga-ozturk/mGPT-nsp", filename="model_weights.bin")))

tokenizer = MT5Tokenizer.from_pretrained("tolga-ozturk/mGPT-nsp")

Inference

batch_texts = [("In Italy, pizza is presented unsliced.", "The sky is blue."),

("In Italy, pizza is presented unsliced.", "However, it is served sliced in Turkey.")]

encoded_dict = tokenizer.batch_encode_plus(batch_text_or_text_pairs=batch_texts, truncation="longest_first",padding=True, return_tensors="pt", return_attention_mask=True, max_length=256)

print(torch.argmax(model(encoded_dict.input_ids, attention_mask=encoded_dict.attention_mask), dim=-1))

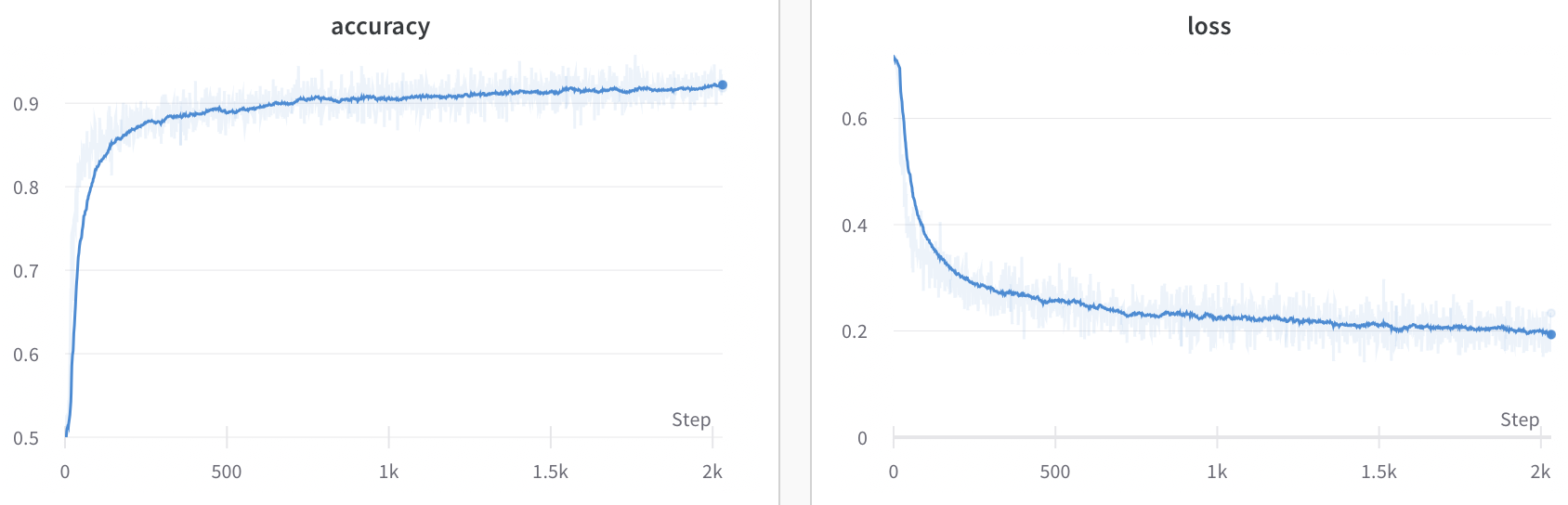

Training Metrics

BibTeX entry and citation info

@misc{title={How Different Is Stereotypical Bias Across Languages?},

author={Ibrahim Tolga Öztürk and Rostislav Nedelchev and Christian Heumann and Esteban Garces Arias and Marius Roger and Bernd Bischl and Matthias Aßenmacher},

year={2023},

eprint={2307.07331},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

The work is done with Ludwig-Maximilians-Universität Statistics group, don't forget to check out their huggingface page for other interesting works!

- Downloads last month

- 22