SmartBERT V2 CodeBERT

Overview

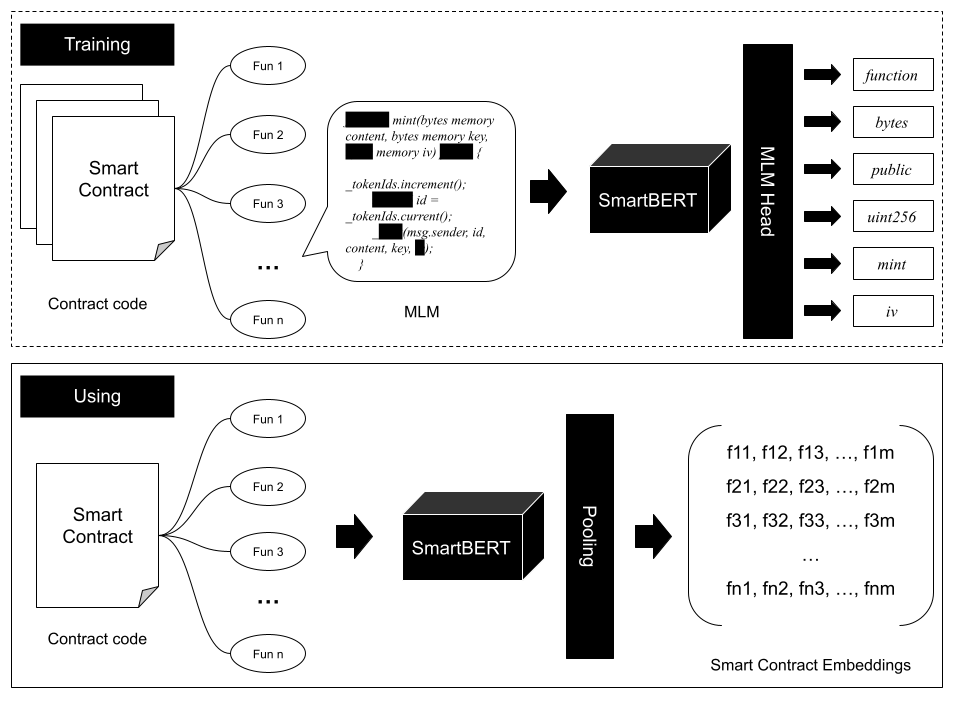

SmartBERT V2 CodeBERT is a pre-trained model, initialized from CodeBERT-base-mlm, that converts function-level smart contract code into embeddings.

- Training Data: Trained on 16,000 smart contracts.

- Hardware: Utilized 2 Nvidia A100 80G GPUs.

- Training Duration: More than 10 hours.

- Evaluation Data: Evaluated on 4,000 smart contracts.

Preprocessing

All newline (\n) and tab (\t) characters in the function code were replaced with a single space to ensure consistency in the input data format.
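A minimal sketch of this normalization step, assuming each newline or tab is replaced one-for-one (runs of whitespace are not collapsed). The function name `normalize_function_code` is hypothetical and is not taken from the SmartBERT repository; other whitespace such as carriage returns is left untouched because the description above does not mention it.

```python
# Hypothetical helper: mirrors the preprocessing described above.
_WHITESPACE_TABLE = str.maketrans({"\n": " ", "\t": " "})


def normalize_function_code(code: str) -> str:
    """Replace every newline and tab character with a single space."""
    return code.translate(_WHITESPACE_TABLE)


if __name__ == "__main__":
    source = "function totalSupply() external view\n\treturns (uint256);"
    print(normalize_function_code(source))
```

Applying the same normalization at inference time keeps inputs consistent with the format seen during training.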

Base Model

- Base Model: CodeBERT-base-mlm

Training Setup

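```python
from transformers import TrainingArguments

# OUTPUT_DIR and checkpoint are placeholders that must be defined by the caller.
training_args = TrainingArguments(
    output_dir=OUTPUT_DIR,
    overwrite_output_dir=True,
    num_train_epochs=20,
    per_device_train_batch_size=64,
    save_steps=10000,             # write a checkpoint every 10,000 steps
    save_total_limit=2,           # keep only the two most recent checkpoints
    evaluation_strategy="steps",
    eval_steps=10000,             # evaluate every 10,000 steps
    resume_from_checkpoint=checkpoint,
)
```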
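To make the scale of this configuration concrete, the sketch below works out the effective batch size and the number of optimizer steps. It assumes both A100 GPUs are used for data-parallel training and that gradient accumulation is left at its default of 1; neither is stated above. The helper names and the sample count of 100,000 functions are hypothetical, since the card reports the number of contracts rather than the number of functions.

```python
import math


def effective_batch_size(per_device_batch_size: int, num_devices: int,
                         gradient_accumulation_steps: int = 1) -> int:
    """Samples consumed per optimizer step across all devices."""
    return per_device_batch_size * num_devices * gradient_accumulation_steps


def total_optimizer_steps(num_samples: int, num_epochs: int, batch_size: int) -> int:
    """Optimizer steps for a full run, keeping the last partial batch of each epoch."""
    return math.ceil(num_samples / batch_size) * num_epochs


if __name__ == "__main__":
    batch = effective_batch_size(per_device_batch_size=64, num_devices=2)
    # 100_000 is an illustrative sample count, not a figure from the model card.
    steps = total_optimizer_steps(num_samples=100_000, num_epochs=20, batch_size=batch)
    print(f"effective batch size: {batch}, total steps: {steps}")
```

Comparing the total step count with `save_steps` and `eval_steps` shows how many checkpoints and evaluations a run of a given size would actually produce.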

How to Use

To train and deploy the SmartBERT V2 model for Web API services, please refer to our GitHub repository: web3se-lab/SmartBERT.

Alternatively, use the `pipeline` API:

```python
from transformers import RobertaTokenizer, RobertaForMaskedLM, pipeline

# Load the SmartBERT V2 weights and tokenizer from the Hugging Face Hub.
model = RobertaForMaskedLM.from_pretrained('web3se/SmartBERT-v2')
tokenizer = RobertaTokenizer.from_pretrained('web3se/SmartBERT-v2')

# The <mask> token marks the position the model should predict.
code_example = "function totalSupply() external view <mask> (uint256);"

fill_mask = pipeline('fill-mask', model=model, tokenizer=tokenizer)
outputs = fill_mask(code_example)
print(outputs)
```
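The fill-mask example exercises the masked-language-modeling head. Because the model is described as producing function-level embeddings, the following sketch shows one possible way to obtain a single vector per function. Mean pooling over the last hidden state is an assumption here, not a method confirmed by this card, and `mean_pool` and `embed_function` are hypothetical names. The model ID `web3se/SmartBERT-v2` is taken from the model tree on this page.

```python
import numpy as np


def mean_pool(hidden_states, attention_mask):
    """Average token vectors, ignoring positions where the mask is 0.

    hidden_states: array of shape (seq_len, hidden_dim)
    attention_mask: array of shape (seq_len,)
    """
    hidden = np.asarray(hidden_states, dtype=np.float32)
    mask = np.asarray(attention_mask, dtype=np.float32)[:, None]
    return (hidden * mask).sum(axis=0) / max(float(mask.sum()), 1.0)


def embed_function(code, model_name="web3se/SmartBERT-v2"):
    """Assumed usage: encode one function and mean-pool its token states."""
    # Imported lazily so that mean_pool can be used without these packages.
    import torch
    from transformers import RobertaModel, RobertaTokenizer

    tokenizer = RobertaTokenizer.from_pretrained(model_name)
    model = RobertaModel.from_pretrained(model_name)
    model.eval()

    inputs = tokenizer(code, return_tensors="pt", truncation=True, max_length=512)
    with torch.no_grad():
        outputs = model(**inputs)
    return mean_pool(outputs.last_hidden_state[0].numpy(),
                     inputs["attention_mask"][0].numpy())
```

Calling `embed_function("function totalSupply() external view returns (uint256);")` downloads the weights on first use and returns a one-dimensional NumPy vector. Other pooling choices, such as taking the first token's hidden state, may suit some downstream tasks better.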

Contributors

Sponsors

- Institute of Intelligent Computing Technology, Suzhou, CAS

- CAS Mino (中科劢诺)


Model tree for web3se/SmartBERT-v2

Base model

microsoft/codebert-base-mlm