Query expansion

Collection

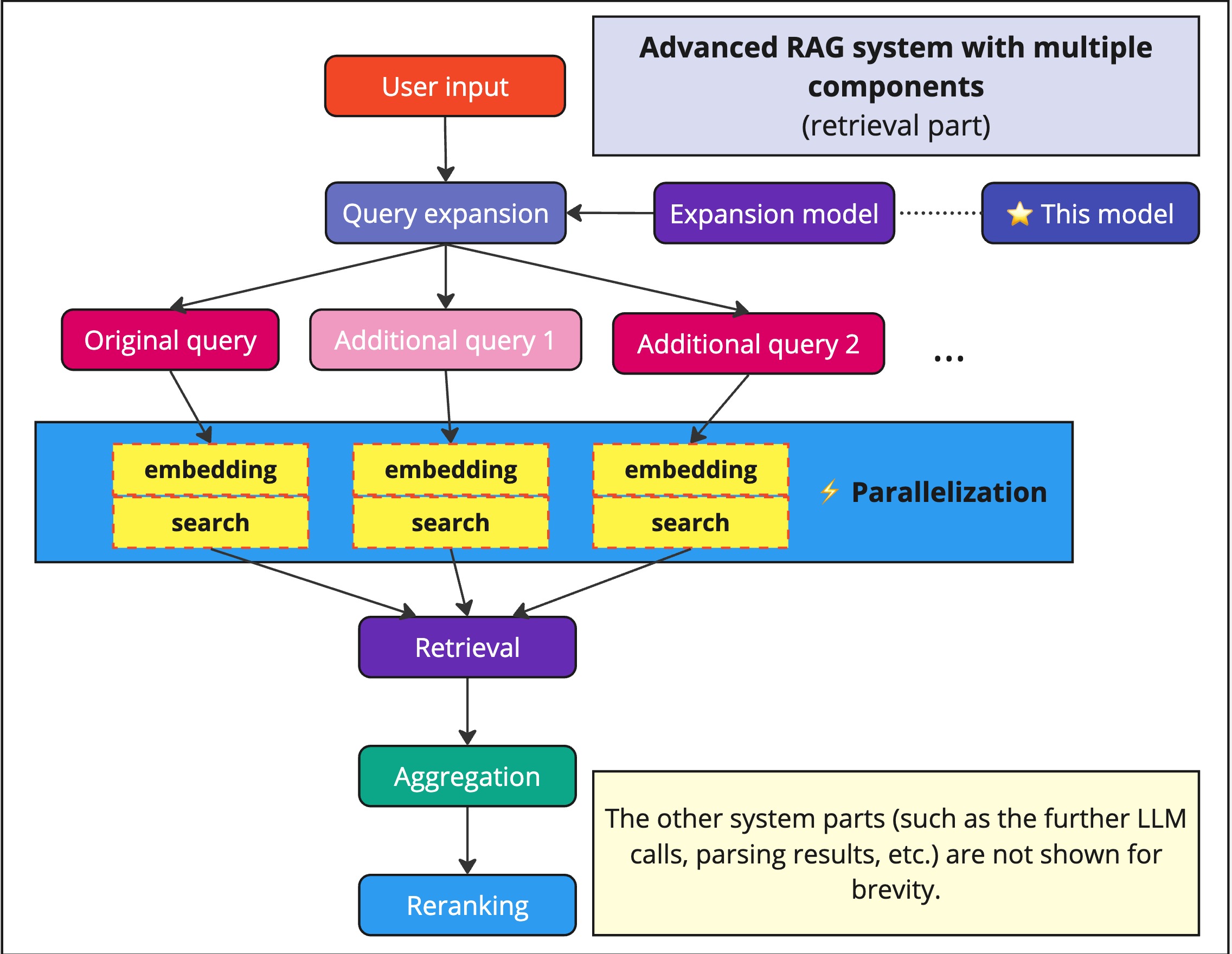

A collection of models along with the training dataset, designed to improve search queries and retrieval in RAG systems.

•

7 items

•

Updated

A GGUF-quantized version of Llama-3.2-3B, fine-tuned for the query expansion task. It is part of a collection of query expansion models available in different architectures and sizes.

Task: Search query expansion

Base model: Llama-3.2-3B-Instruct

Training data: Query Expansion Dataset

The model is available in multiple quantization formats.
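The individual formats are not listed here. As a rough back-of-the-envelope sketch of why lower-bit formats are smaller, the weights occupy about N * b / 8 bytes for N parameters at b bits per weight. The parameter count (roughly 3.2 billion) and the nominal bit widths below are assumptions for this estimate. Real GGUF files come out somewhat larger because quantized blocks carry scale factors, some tensors may be kept at higher precision, and the file also stores metadata.

```python
# Rough size estimate for quantized weights: params * bits_per_weight / 8 bytes.
# Assumptions: ~3.2e9 parameters and nominal bit widths; real GGUF files are
# somewhat larger (per-block scales, higher-precision tensors, metadata).


def estimated_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Return an approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


NUM_PARAMS = 3.2e9  # assumed approximate parameter count

for bits in (4, 8, 16):
    print(f"{bits:>2}-bit: ~{estimated_size_gb(NUM_PARAMS, bits):.1f} GB")
```

Halving the bit width roughly halves the memory footprint, which is the main reason to pick a lower-bit file on constrained hardware, usually at some cost in output quality.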

This model is designed to enhance search and retrieval systems by generating semantically relevant query expansions.
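The card does not say how the generated expansions should be combined with retrieval. A minimal sketch, assuming a toy term-overlap retriever (`overlap_search`, hypothetical) and reciprocal rank fusion (RRF) with the commonly used constant k = 60 as the merge step, is shown below. RRF is a swapped-in technique for illustration, not necessarily what the author uses, and the documents and expansions are hand-written, not outputs of this model.

```python
from collections import defaultdict


def overlap_search(query, docs, top_k=3):
    """Toy lexical retriever: rank doc ids by how many lowercase terms they share with the query."""
    q_terms = set(query.lower().split())
    scored = []
    for doc_id, text in docs.items():
        score = len(q_terms & set(text.lower().split()))
        if score > 0:  # skip documents with no term overlap
            scored.append((score, doc_id))
    # Highest overlap first; ties broken by doc id for deterministic output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:top_k]]


def expanded_search(query, expansions, docs, k=60, top_k=3):
    """Retrieve for the original query and every expansion, then merge with reciprocal rank fusion."""
    fused = defaultdict(float)
    for q in [query, *expansions]:
        for rank, doc_id in enumerate(overlap_search(q, docs, top_k), start=1):
            fused[doc_id] += 1.0 / (k + rank)  # RRF: each ranked list adds 1 / (k + rank)
    return sorted(fused, key=lambda d: (-fused[d], d))[:top_k]


docs = {
    "d1": "apple stock price today",
    "d2": "AAPL shares climb after earnings",
    "d3": "apple pie recipe",
}
# Hypothetical, hand-written expansions standing in for model output.
expansions = ["AAPL shares", "AAPL earnings"]

print(overlap_search("apple stock", docs, top_k=2))  # ['d1', 'd3']
print(expanded_search("apple stock", expansions, docs, top_k=2))  # ['d2', 'd1']
```

With the original query alone, the unrelated "apple pie recipe" ranks second; the expansions surface the AAPL earnings document instead. RRF is convenient here because it uses only ranks, so it can merge result lists from retrievers whose raw scores are not comparable.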

It could be useful for:

Input: "apple stock"
Expansions:
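The exact output format of the model is not shown here. Assuming, hypothetically, that it returns one expansion per line with optional numbering or bullets, a small post-processing step like the one below can normalize the text and drop duplicates and echoes of the original query before the expansions reach a retriever. `parse_expansions` is a hypothetical helper, and the sample text is hand-written, not real model output.

```python
import re


def parse_expansions(raw_output: str, original_query: str) -> list[str]:
    """Turn raw generated text into a clean, de-duplicated list of expansions.

    Hypothetical format assumption: one expansion per line, optionally
    prefixed by a bullet ("-", "*", "•") or a number ("1.", "2)").
    """
    seen = {original_query.strip().lower()}  # treat the original query as already seen
    expansions = []
    for line in raw_output.splitlines():
        # Strip a leading bullet or number, then surrounding whitespace and double quotes.
        cleaned = re.sub(r"^\s*(?:[-*•]|\d+[.)])\s*", "", line).strip().strip('"').strip()
        key = cleaned.lower()
        if cleaned and key not in seen:  # skip blanks and case-insensitive duplicates
            seen.add(key)
            expansions.append(cleaned)
    return expansions


raw = '1. "AAPL share price"\n2. apple stock\n- Apple Inc. stock news\n\n- aapl share price\n'
print(parse_expansions(raw, "apple stock"))  # ['AAPL share price', 'Apple Inc. stock news']
```

If the real output uses a different layout (for example a JSON list), only the line-splitting and prefix-stripping steps would need to change.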

If you find my work helpful, feel free to give me a citation.